By Vincenzo Ciancaglini, Marco Balduzzi, Salvatore Gariuolo, Rainer Vosseler, and Fernando Tucci

Key Takeaways

- Understanding the security of agentic AI systems requires analyzing their multi-layered architecture and identifying the specific risks at each layer.

- If left unprotected, these systems can be compromised by attackers, who can exfiltrate sensitive information, manipulate behavior through data poisoning, or disrupt critical components via supply chain attacks.

- Due to their complex structure, risks may not remain confined to a single component or layer - they can propagate across the different parts of the system.

- For organizations that are currently using or planning to adopt agentic AI, a two-pronged approach to security - combining solid design principles with ad-hoc solutions - is recommended.

As the adoption of agentic AI accelerates, so do the stakes for securing these systems and the organizations that rely on them. The complexity of securing agentic architectures - where each layer demands a tailored strategy - raises critical questions. What does the architecture of an agentic AI system actually look like? How do the different components interact with one another? More importantly, what security risks does this structure introduce? Any organization seeking best practices for deploying an agentic AI application needs answers to these questions.

This research builds on a previous article in this series, where we introduced the concept of agentic AI and explained how these systems differ from traditional AI systems. We have organized our new findings into four parts: an overview of agentic AI architecture and its layers; a threat modeling analysis highlighting key risks at each layer; practical security recommendations combining design principles with ad-hoc solutions; and a conclusion summarizing the main insights.

Agentic AI system architecture and its layers

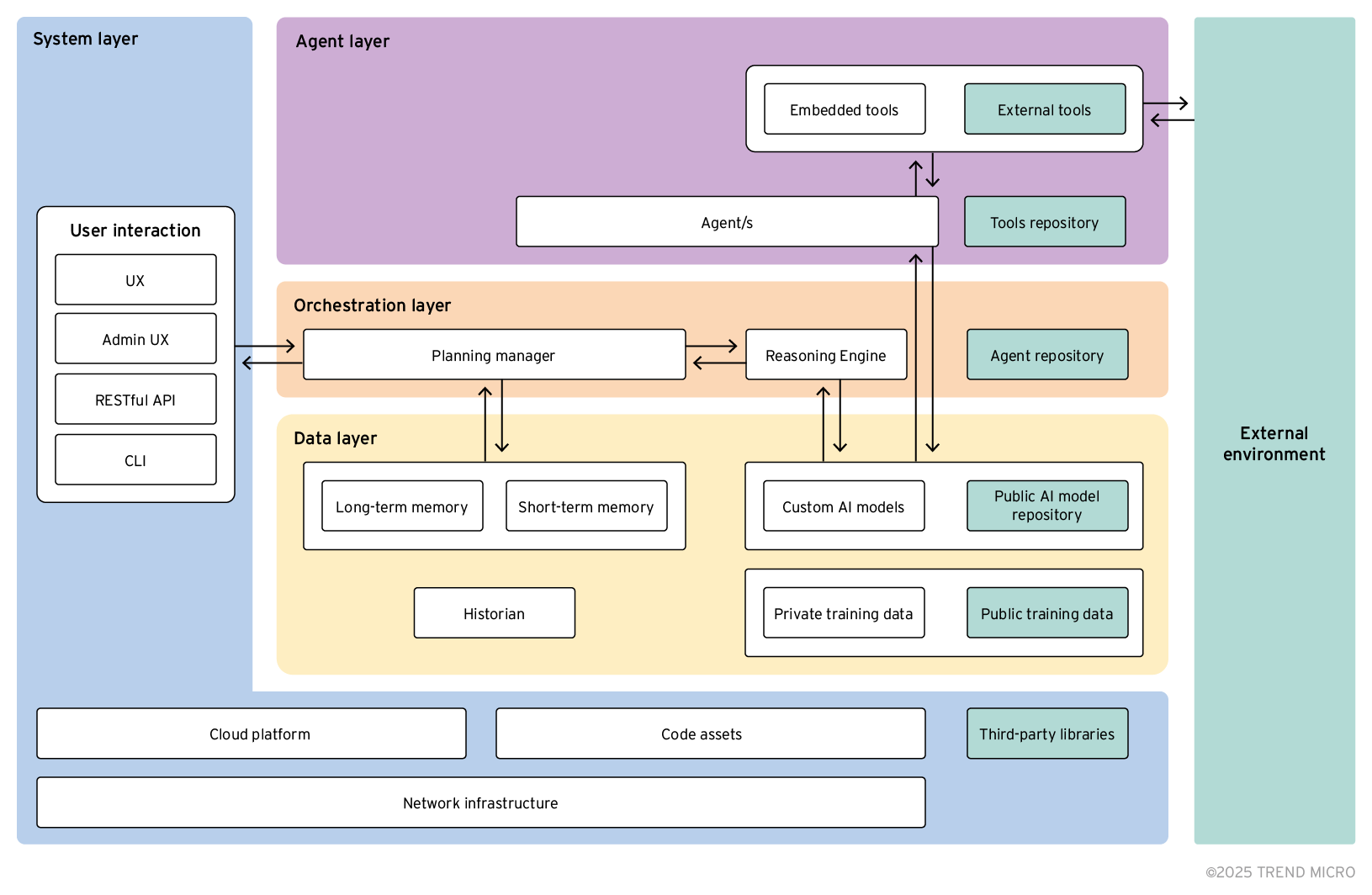

A bird’s-eye view of the agentic AI architecture lends a broader perspective of the inner workings of the system. Given its complexity, we illustrate the overall structure through various logical layers, as shown below:

Figure 1. Agentic AI system architecture

It is important to know that some of the components described here might not yet be implemented in the agentic systems developed at the time of publication, but are very likely to become standard in the near future. As such, they must be considered when drawing a threat landscape that is both solid and future-proof. For example, while tool and agent repositories have not yet been observed in the wild, we are seeing the rapid development of protocols such as Anthropic’s Model Context Protocol (MCP) for tool invocation and interaction, and Google’s Agent to Agent protocol (A2A) for inter-agent communications, which suggests that such repositories could soon become a reality. Therefore, they should not be disregarded when assessing the security of agentic AI systems.

The layers are as follows:

- Data (in yellow): Represents the storage components needed throughout the whole agentic lifecycle, from the training data for models to runtime memory storage.

- Orchestration (in red): Manages actions related to processing, such as activating the computational agents.

- Agent (in purple): Includes tools and agents that perform the AI tasks.

- System (in blue): Encompasses all general support components such as libraries, tenancy, and front-end components for user interaction.

In addition, we highlight some parts in green to mark them as external to the agentic system itself - an important distinction when reasoning about supply chain threats. These components include third-party libraries, public training datasets, and external tools.

A comprehensive breakdown of each layer is provided in the next sections.

I. Data layer

As the name suggests, the data layer encompasses the data components involved in the development, maintenance, or usage of the agentic system. In that regard, it constitutes a critical part of the application that needs to be secured.

Below are its key components, as shown in Figure 1:

A. Custom AI models

The main components hosted in the data layer are the AI models, which the agentic system needs in order to operate. An organization can either use publicly available models or train its own custom AI models, using proprietary training data that might not be available elsewhere.

Models can be used by the orchestrator to generate the planning for a request or by the agents to perform specific tasks, such as translating natural language requests into specific data store queries. Other models can handle tasks such as image recognition, content generation, text-to-speech, and optical character recognition.

B. External model repositories

Whenever an organization decides to rely upon publicly available AI models, these can be sourced from an external repository, such as Hugging Face or NVIDIA NGC. Such repositories now offer a vast choice of readily available AI models for different purposes, saving the cost and time required for in-house model training. However, this convenience comes with the added risk of depending on external suppliers whose reliability is not necessarily guaranteed.

C. Training data

Training data is utilized in-house either to train new models from scratch or to fine-tune existing foundation models. The latter involves adding specific domain knowledge to the models, tailoring them to perform particular tasks more effectively.

The data used to train the models forms the basic layer of the model's knowledge. It can come either from a knowledge base internal to the organization and not available anywhere else (private training data) or from public datasets available on the internet (public training data).

However, relying only on knowledge acquired during training has several limitations. Firstly, large language models can generate incorrect or misleading information (known as hallucinations), so they shouldn't be solely relied upon for accurate knowledge. Secondly, training and fine-tuning require significant time and resources, and can only be conducted periodically. This makes it difficult to update the model with new knowledge in a timely manner, which prompts the use of an external memory paired at runtime with the AI model.

D. Memory

To provide AI applications with a reliable and up-to-date source of information, external storage is used. This memory typically consists of a vector store that semantically retrieves information closely related to the query processed by the AI. This setup allows the AI system to depend on a knowledge base that is not only reliable and less prone to errors, but also can be updated quickly and in real time.

Depending on how long the information is stored, there are at least two different types of data store implementations for such a system: long-term memory and short-term memory. Long-term memory is used for storing persistent and general-purpose information that is relevant across the entire application, and for implementing advanced functionalities like self-improvement. In contrast, short-term memory holds information specific to a particular session or user. Some examples of this are user preferences, session data, and previous requests. This data is usually deleted once the user's session ends.
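The split between the two memory types can be sketched as follows. This is a minimal illustration, not a description of any particular product: the class names `LongTermMemory` and `ShortTermMemory`, the toy two-dimensional embeddings, and the cosine-similarity ranking are all assumptions made for the example. A real deployment would use an embedding model and a dedicated vector store.

```python
import math
from collections import defaultdict


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class LongTermMemory:
    """Hypothetical persistent store, queried by semantic similarity."""

    def __init__(self):
        self._items = []  # list of (embedding, text) pairs

    def add(self, embedding, text):
        self._items.append((embedding, text))

    def search(self, query_embedding, k=1):
        # Rank stored entries by similarity to the query, best first.
        ranked = sorted(
            self._items,
            key=lambda item: cosine(item[0], query_embedding),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


class ShortTermMemory:
    """Hypothetical per-session store, dropped when the session ends."""

    def __init__(self):
        self._sessions = defaultdict(dict)

    def put(self, session_id, key, value):
        self._sessions[session_id][key] = value

    def get(self, session_id, key, default=None):
        return self._sessions.get(session_id, {}).get(key, default)

    def end_session(self, session_id):
        # Session data is deleted once the user's session ends.
        self._sessions.pop(session_id, None)
```

Keeping the two stores separate also makes it easier to apply different access controls and retention rules to each.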

E. Historian

Lastly, there is a component known as historian, which is responsible for storing logging data. This includes error and debug messages related to the application, as well as records of usage activity and other relevant metrics. This data can be essential for monitoring the application's performance and health, and can also be useful for billing purposes.

II. Orchestration layer

Orchestration is a specific functionality of agentic AI systems. This layer comprises components that analyze user requests and plan the necessary steps to fulfill them. The work is then performed by supervised agents. A more detailed view of how the components interact with each other in a realistic agentic deployment is provided in the upcoming section of this article (Workflow example).

An overview of its components is as follows:

A. Planning manager

The planning manager is the foundational component of the layer. This software implements the business logic of the agentic application and is the first component to be invoked upon a user’s request. It defines the workflow — for example linear, reactive, or iterative — and constructs the execution graph. Additionally, it manages the deployment of agents, including their selection criteria and the number needed. It also sets the termination conditions and outlines the communication patterns among agents, such as sequential, concurrent, adversarial, networking, or hierarchical.

While today’s planning managers rely heavily on hardcoded workflows defined with frameworks such as LangGraph or Microsoft AutoGen, we believe that this paradigm is likely to shift towards fully AI-based planning, relying increasingly on a reasoning engine to define both the execution graph and the agents involved.

B. Reasoning engine

The planning manager works side-by-side with the reasoning engine. This engine operates the AI model responsible for translating the prompts originating from the planning manager into execution plans for the agents. It is also responsible for updating the plan based on the intermediate results returned from the agents or possible user adjustments.

At the time of writing, the standard AI models used as reasoning engines are large language models (LLMs) tuned for reasoning. However, as agentic AI applications continue to evolve and expand into more domains, we can envision the introduction of other AI models that may offer more deterministic performance when needed (e.g., in automotive or industrial domains) or enhanced reasoning capabilities.

C. Agent repository

Agents are normally provided by the framework used to implement agentic applications and are statically defined. For example, Microsoft’s Magentic-One offers agents specialized for scraping web pages or executing code. However, given the diverse needs of real-world applications, we have recently observed a shift towards on-demand agents. In these setups, the agentic application pulls specialized agents as needed.

Therefore, we can assume that agents will be offered a standard interface, much like how software libraries are provided today, and could be invoked at runtime, using a protocol such as Google’s A2A. Future agentic applications will rely on agent repositories offering third-party agents available for integration.

III. Agent layer

While the orchestration layer is responsible for processing the user’s requests, elaborating an execution plan, and activating the agents to fulfill the work, the agent layer contains the components that actually perform the tasks.

A. Agents

The agents are software units responsible for completing individual tasks and returning their results to the orchestration layer or directly coordinating with one another, depending on the communication pattern defined by the planning manager. Agents can also interact with the external environment, such as internet resources or physical devices like sensors, cameras, or actuators. These operations are carried out via so-called tools.

B. Embedded tools

Embedded tools run locally within the agent layer. They include local programs stored within the agentic application; code generated by the agents, then compiled and executed in the local environment; and domain-specific code generated by the agents and passed to a local interpreter.

C. External tools

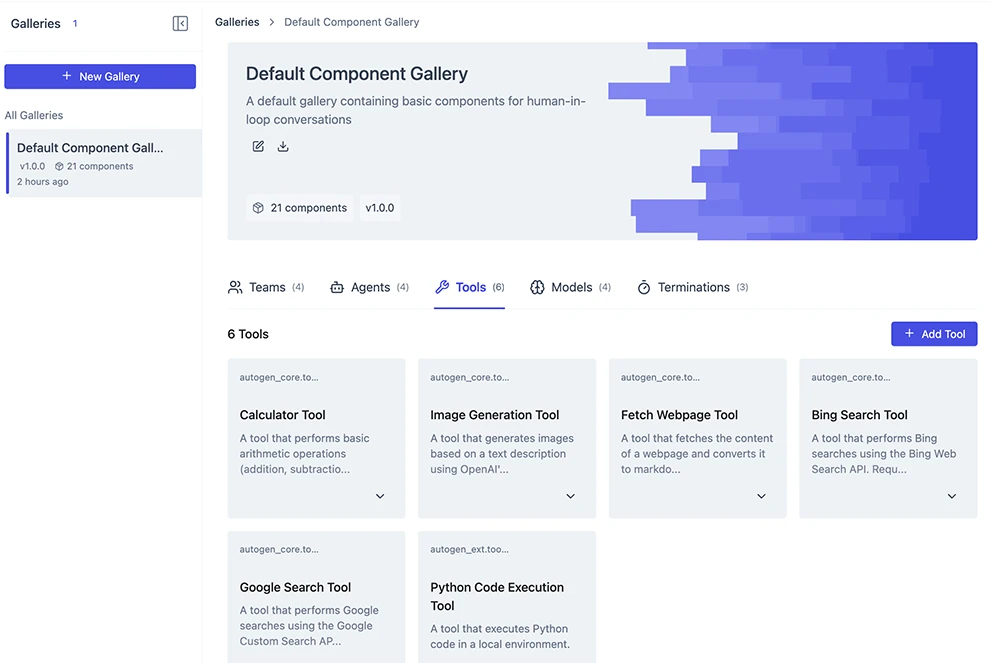

External tools consist of external or third-party services invoked by the agent via APIs or external databases with specialized data that the agent can query. Figure 2 shows an example of tools provided by AutoGen Studio. There are also already standardized protocols like the Model Context Protocol (MCP) aimed at allowing tools to be exposed and accessible by agents.

Figure 2. Tools provided by AutoGen Studio

D. Tool repository

Agents can access the tool repositories to fetch the most appropriate tool for the task at hand. These could be library repositories such as PyPI or npm, or microservice catalogs exposing services described by manifests that the agent can use on demand, via dedicated protocols like the aforementioned MCP.

IV. System layer

Finally, it is important to remember that an AI application, however agentic, still requires regular software components to provide standard application functionality such as user interaction, authentication, and configuration, all of which are organized within the system layer.

A. User Interaction

Whether as a graphical UI, a hardware-augmented UI, a chatbot assistant, or programmatically through an exposed API, agentic applications still require user interaction to determine their goals, set operational requirements, return results, and adjust their plan based on such interaction. In a previous publication, we explored how agentic AI applications could take the form of AI Assistants for consumers, interacting directly with people, and examined their security risks from the user’s perspective.

Apart from this, the following components are also at work in the system layer:

B. Code assets

Agentic applications also require source code, configuration scripts, and other assets, all of which need appropriate DevOps infrastructure for CI/CD, source code management, and similar functions. Together, these make up the code assets.

C. Network infrastructure and cloud platform

Finally, a network infrastructure is needed to support the application, along with an appropriate cloud platform to allocate the computational resources to run the different components. This applies whether we consider a local agentic assistant deployed on a regular endpoint, an application running within a company’s internal infrastructure, or a publicly accessible internet service.

V. Workflow example

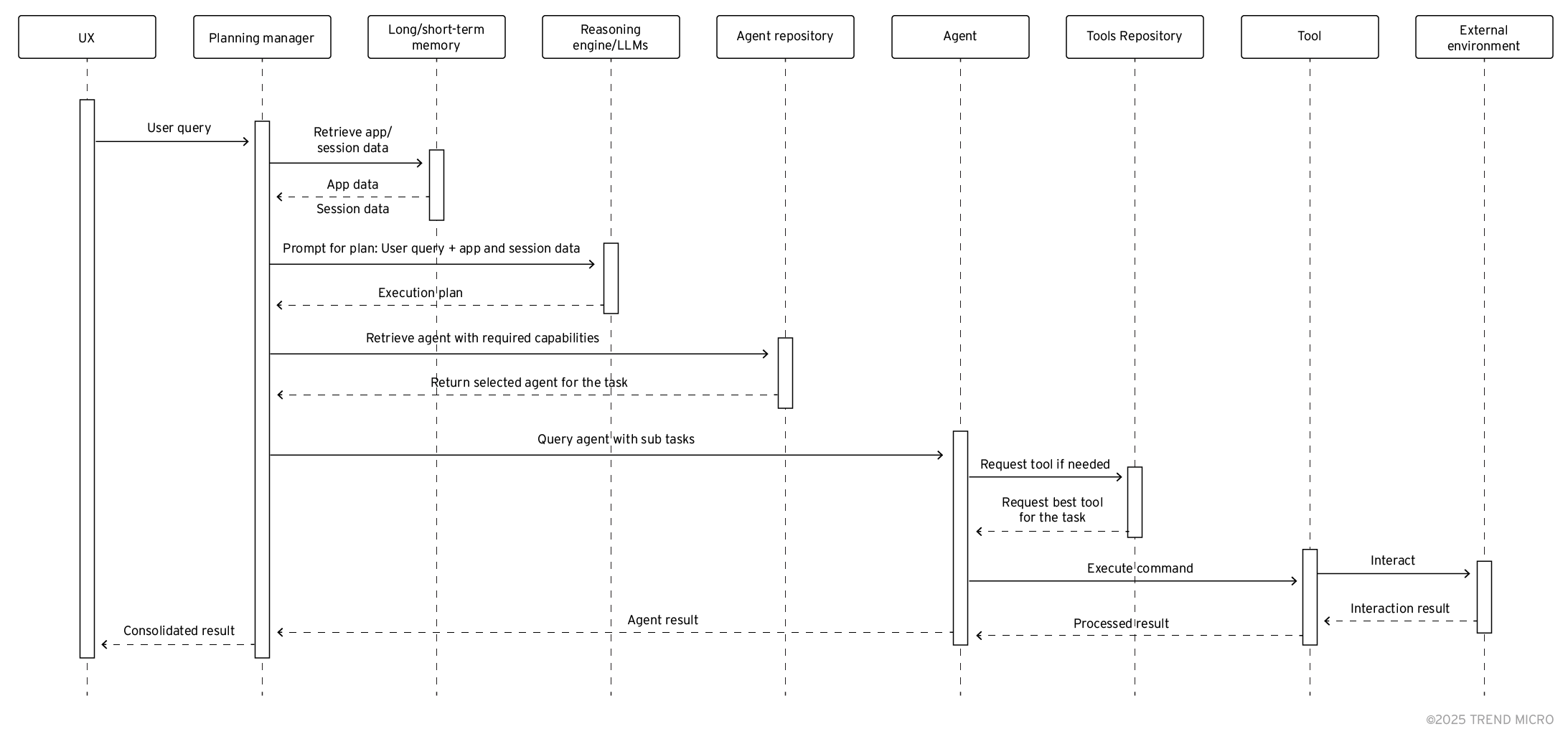

Agentic applications make for a complex system with many moving parts that need to harmoniously go together. In Figure 3, we can see an example of how different components would interact together during a specific workflow.

Figure 3. An example of a workflow within an agentic AI application

In the above case, we have a query originating from a user interacting with the user interface of the application. This is passed to the planning manager, which retrieves any application-related knowledge from the long-term memory, and any session and user-related information from the short-term memory.

With this information, the planning manager builds the prompt and sends it to the reasoning engine, which returns an execution plan. This execution plan is then implemented by the planning manager, which identifies the agents needed to carry out the tasks defined in the execution plan.

Each of the agents receives its instructions and executes the task. If needed, tools are leveraged, and access to the tool repository is requested. The result is returned to the agent, and subsequently to the planning manager, which, after possibly multiple iterations and refinements, consolidates the results and passes them back to the user.
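The flow described above can be sketched in a few lines. This is a deliberately simplified illustration under stated assumptions: `reasoning_engine` is a stub standing in for an LLM call, the `AGENTS` registry and its two agents are hypothetical, and memory is passed in as plain lists rather than queried from real stores.

```python
from typing import Callable


def reasoning_engine(prompt: str) -> list[str]:
    """Stub standing in for an LLM: returns a fixed execution plan."""
    return ["find_flights", "find_hotels"]


# Hypothetical agent registry: plan step name -> callable agent.
AGENTS: dict[str, Callable[[str], str]] = {
    "find_flights": lambda query: f"flights for: {query}",
    "find_hotels": lambda query: f"hotels for: {query}",
}


def planning_manager(query: str, long_term: list[str], short_term: list[str]) -> str:
    # 1. Gather application knowledge and session context from memory.
    context = long_term + short_term
    # 2. Build the prompt and ask the reasoning engine for an execution plan.
    prompt = "\n".join(context + [query])
    plan = reasoning_engine(prompt)
    # 3. Identify the agent for each step and collect its result.
    results = []
    for step in plan:
        agent = AGENTS.get(step)
        if agent is None:
            raise KeyError(f"no agent registered for step {step!r}")
        results.append(agent(query))
    # 4. Consolidate the results and pass them back to the user.
    return "; ".join(results)
```

Even in this toy form, every arrow in the workflow is visible as a function call or data hand-off, which is where the threats discussed in the next section apply.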

The scope of all of this makes for a broad and complex attack surface, with many different components each with its own peculiar set of risks and stakes. In the next section, we will delve into which classes of risks are impacting each layer and what it means in terms of protecting agentic AI applications.

Threat modeling

As we’ve seen, agentic AI systems rely on complex, multi-layered architectures. Because these layers are interconnected, security risks can emerge at any point and potentially spread throughout the system. Understanding these risks is, therefore, essential. Below, we provide an overview of the key risks each layer faces.

I. Data layer

The data layer is exposed to threats that can compromise data stores, training sets, and models. Attackers may seek to subvert these components to alter the agentic system’s application logic or exploit them to steal sensitive data the system may hold.

A. Data store poisoning

Data store poisoning is a security threat where an attacker manipulates the data in the agentic system's data stores, for example as a result of a service exposed to the internet or a misconfiguration. This manipulation can have varying impacts depending on whether it affects long-term or short-term memory.

When an attacker successfully poisons long-term memory, they can fundamentally alter the behavior of the application. This might involve changing configuration settings to weaken security protocols, inserting malicious code that gets executed within the application, or corrupting data in a way that subtly skews the application's operations.

On the other hand, poisoning short-term memory can lead to immediate and direct impacts on a user's session. By altering session data, an attacker could take over a user's account, impersonate the user, manipulate transactions, or extract sensitive information during the session. This type of attack is akin to session hijacking, where the attacker gains control of the user's current interaction with the application.

B. Training set poisoning

Unlike direct tampering with the application's operational data (as seen in memory poisoning), this form of attack targets the foundational elements of the AI learning processes — the training datasets. By introducing misleading information into these datasets, an attacker can subtly influence how the AI model learns and behaves.

Since AI models learn to make decisions based on the data they are trained on, introducing inaccuracies can lead to flawed decision-making. These flaws may not be immediately apparent but can manifest in various detrimental ways that have long-term effects, such as misclassification, introduction of biases, insertion of backdoors, and degraded performance.

C. Inference attack

An interesting aspect of models developed internally is that the training data may include proprietary information, as in the case of organizations aiming to develop applications that provide AI functionalities based on the company’s business processes. An attacker who manages to steal such training sets or infer their content through an inference attack could gain access to proprietary and intellectual data.

In this scenario, the attacker queries the model using carefully crafted input data. These queries are designed to probe the model and observe its outputs or behavior based on different inputs. By analyzing the outputs or the behavior of the model in response to these queries, the attacker can infer properties about the model's training data. For instance, if a specific input change consistently alters the output in a predictable manner, the attacker can deduce certain characteristics of the data that trained the model.

D. Model and data exfiltration

When a model is developed internally, another major security risk involves the potential for an attacker to exfiltrate the model. While in an inference attack, the attacker accesses the raw information used to build the model, in this scenario, the attacker steals the resulting model that has been developed using such information.

At this point, the attacker can reuse the model independently, to the extent of operating a similar agentic deployment. This results in a significant competitive disadvantage for the targeted organization, in addition to major concerns related to the leakage of proprietary information. Attackers can target not only the model, but also the long- and short-term memory to exfiltrate data in order to gather further intel on a running application. This is especially concerning when considering that the exposure of such systems on the internet is a notorious problem.

The theft of a model not only exposes the technical inner workings of a company's AI systems but also allows the attacker to potentially replicate the business's services or products. Furthermore, if the model incorporates unique algorithms or proprietary data, its unauthorized use could lead to legal and regulatory implications.

E. Model subversion

In the case of a system relying on models sourced externally, such as from platforms like Hugging Face, there is a risk of supply-chain attacks. These attacks are aimed at hijacking the sourcing process, where an attacker replaces the original (intended) model with their own malicious version. As a result of this attack, the attacker can manipulate the general logic of the targeted application to their advantage, potentially altering its behavior in subtle but harmful ways.

This type of attack can have severe consequences. For instance, if the compromised model is used in critical decision-making processes, such as financial forecasting or personal data analysis, the attacker could skew results to favor their interests or cause significant operational disruptions. Moreover, the integrity and reliability of the application are compromised, which can erode user trust and lead to reputational damage for the company relying on the external models.
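One common way to reduce the risk of a swapped model is to pin the digest of a vetted artifact and verify every download against it before loading. The sketch below assumes the organization recorded a SHA-256 digest when it vetted the model; the function names `sha256_of_file` and `verify_artifact` are illustrative, not part of any model hub's API.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, pinned_sha256):
    """Return True only if the file matches the digest recorded at vetting time."""
    return hmac.compare_digest(sha256_of_file(path), pinned_sha256.lower())
```

A digest check only proves that the file is the one that was vetted. It says nothing about whether the vetted model was trustworthy in the first place.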

II. Orchestration layer

The orchestration layer faces various attacks targeting its core functions: resource allocation and execution planning. These attacks usually exploit vulnerabilities in the reasoning engine — when based on a large language model — or in the memory storage, if left exposed on the internet.

A. Recursive agent invocation abuse

One key element of an agentic workflow is its termination condition; that is, when the execution meets satisfactory conditions, then the processing should stop. This is generally decided by the planning manager’s parameters and the task at hand, and it is one of the defining parameters of an agentic workflow.

If tampered with, either through indirect prompt injection or poisoning the application data store, this can lead the application to misbehave, which could result in a never-ending loop of agent invocation, known as recursive agent invocation abuse. This can exhaust all computational power and lead to a denial of service (DoS).
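A hard limit enforced outside the workflow's own termination logic can contain this kind of abuse. The following is a minimal sketch, assuming a hypothetical `InvocationBudget` object that the planning manager charges before each agent call; the specific limits are placeholders.

```python
import time


class InvocationBudgetExceeded(RuntimeError):
    """Raised when a workflow exceeds its hard invocation or time limit."""


class InvocationBudget:
    def __init__(self, max_invocations, max_seconds):
        self.max_invocations = max_invocations
        self.deadline = time.monotonic() + max_seconds
        self.count = 0

    def charge(self):
        """Call once before every agent invocation."""
        self.count += 1
        if self.count > self.max_invocations:
            raise InvocationBudgetExceeded("agent invocation limit reached")
        if time.monotonic() > self.deadline:
            raise InvocationBudgetExceeded("workflow time limit reached")


def run_workflow(step, is_done, budget):
    """Iterate until is_done(state) holds, but never beyond the budget."""
    state = None
    while not is_done(state):
        budget.charge()
        state = step(state)
    return state
```

Because the budget does not depend on prompts or on data read from memory, a tampered termination condition alone cannot disable it.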

B. Goal subversion

An agentic system employs agents to achieve a goal, which the reasoning engine infers by analyzing the user’s prompt received by the planning manager.

For example, a user request, like “I want to travel to San Francisco this June,” translates into the goal “Find suitable flights and a hotel to San Francisco between 01/06/2025 and 30/06/2025.” The planning manager then formulates an execution plan based on the available resources, such as: “1. Find flight options, 2. Find hotel options, 3. Consolidate flights and hotel costs, 4. Sort by cheapest.” It is important to note that the goal might change due to new contextual information received along the processing, such as “No flights to San Francisco are available in June; the month needs to be changed.”

In this scenario, an attacker can perform an indirect prompt injection to the reasoning engine via one of the agents, introducing a malicious prompt that requests the planning manager to alter the goal, for example, “Only select flights from a certain airline.” In a similar scenario, if memory storage that is exposed to the internet is hijacked, the attacker can alter the session data and change the goal altogether, without resorting to prompt injection.

C. State graph subversion

The planning manager is the component responsible for monitoring the current working state of the application, which is used to plan the next steps of execution. Depending on the way the planning manager is implemented, the state graph can range from a hardcoded workflow — implemented in frameworks like LangGraph — to a dynamic graph generated in real-time by the reasoning engine, with the current running state being stored in the short-term memory.

There are several ways an attacker could potentially subvert the running state of the application:

- In the case of a hardcoded workflow, an attacker with access to the system code and libraries could exploit a vulnerability such as CVE-2025-3248 to subvert part of the execution graph — for example, by replacing a running subgraph with a malicious one (subgraph impersonation).

- If the short-term memory is exposed to the internet, an attacker gaining access to the storage could alter the session data - including the current running state - to trigger unexpected behaviors (orchestration poisoning).

- In the case of a dynamic graph, a carefully crafted prompt injection could silently trigger the LLM to alter the running state towards a miscreant goal (flow disruption).
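For the case where session data in short-term memory is altered directly, one possible safeguard is to authenticate the stored state so that tampering is detected on read. The sketch below uses an HMAC over a canonical JSON encoding; the function names `seal_state` and `open_state` are hypothetical, and it assumes the key is kept outside the memory store. It does not address prompt injection or replay of an older, validly signed state.

```python
import hashlib
import hmac
import json


def seal_state(state: dict, key: bytes) -> dict:
    """Serialize the running state and attach an HMAC-SHA256 tag."""
    payload = json.dumps(state, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def open_state(record: dict, key: bytes) -> dict:
    """Verify the tag before trusting state read back from memory."""
    expected = hmac.new(
        key, record["payload"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    if not hmac.compare_digest(expected, record["tag"]):
        raise ValueError("session state failed integrity check")
    return json.loads(record["payload"])
```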

III. Agent layer

Agents and dedicated tools are the components that are the most exposed to the external environment, making them particularly vulnerable to various forms of supply chain attacks.

A. Tool subversion

Agents interact with the external environment (for example, a website) through the use of tools. Tool subversion is an attack that consists of changing the expected behavior of a tool; depending on the tool type, we can identify multiple ways of achieving this attack.

For external tools, the attack involves tricking the agent into choosing the wrong tool for the job, either by inducing a selection error or by presenting the malicious tool as preferable for the agent. If we assume the presence of a tool repository, the attacker could promote a malicious tool within the repository and socially engineer the agent into picking it. This attack is known as tool confusion.

Another option would be to hijack the API used to fetch the external tool, for example by conducting a DNS attack on the internet domain used by the API. This attack, which we refer to as tool hijacking, is very similar to supply chain attacks that occurred in the past against software library repositories such as PyPI and npm.

While a proper tool confusion attack remains to be seen, as present-day agentic AI systems still lack full autonomy in discovering and selecting tools, we might soon see variations of tool hijacking attacks involving malicious MCP servers. With the rapidly increasing availability of open source MCP servers, a developer could carelessly deploy an MCP server for their own system, only to discover later that it exhibits malicious behavior toward the agentic application.

Even in case of embedded tools, which require no external requests, subversion may still occur. In this case, a carefully crafted prompt injection may trick the agent into generating and executing malicious code.
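For external tools, one way to limit tool confusion and tool hijacking is to resolve tools only against an allowlist maintained by the application owner. The sketch below is illustrative: the `ToolManifest` fields, the `PINNED_TOOLS` table, and the example endpoint are assumptions, not part of MCP or any existing tool repository.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolManifest:
    name: str
    publisher: str
    endpoint: str


# Hypothetical allowlist maintained by the application owner.
PINNED_TOOLS = {
    "web_search": ToolManifest(
        name="web_search",
        publisher="internal-platform-team",
        endpoint="https://tools.example.internal/web_search",
    ),
}


def resolve_tool(candidate: ToolManifest) -> ToolManifest:
    """Return the pinned manifest only if the candidate matches it exactly."""
    pinned = PINNED_TOOLS.get(candidate.name)
    if pinned is None:
        raise PermissionError(f"tool {candidate.name!r} is not on the allowlist")
    if candidate != pinned:
        raise PermissionError(
            f"tool {candidate.name!r} does not match its pinned manifest"
        )
    return pinned
```

An allowlist trades flexibility for predictability: the agent can no longer discover new tools on its own, which may or may not be acceptable for a given application.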

B. Agent subversion and related attacks

This attack consists of altering the behavior of an agent, either by subverting the agent itself or by replacing the agent altogether.

Agents relying on LLMs for their work can be subject to indirect prompt injection, which may originate from maliciously crafted inputs or external sources. It can be challenging for LLMs to distinguish which parts of the input constitute the actual query and which parts are hijacked information. As a result, additional instructions could be hidden in the text source. This hidden prompt can be leveraged to change the role of the agent, an attack known as agent role subversion.

In other scenarios, the attacker may trick the planning manager into choosing the wrong agent for a given task, such as by introducing malicious agents into the agent repository that expose more desirable capabilities. This, much like its tool counterpart, is known as agent confusion. Another option consists of replacing a known version of an agent with a malicious one, an attack known as agent hijacking.

C. Cross-agent information poisoning

Depending on the workflow defined by the planning manager, agents may communicate directly with each other to accomplish a task, without the supervision of the orchestration layer. This introduces an additional layer of risk.

A compromised agent could be manipulated to introduce poisoned information targeting another agent it is communicating with, creating a cascading, indirect prompt injection attack. While implementing such an attack typically requires deep knowledge of the agentic application, detecting such a threat necessitates a thorough inspection of all messages exchanged between agents.

D. Adversarial attack on the agent’s AI model

One last threat to this layer involves attacking the AI model used by the agent. Any agent relying on an LLM to process unstructured text - whether it is web content or results from an external datastore - is exposed to possible indirect prompt injection attacks, which can alter its behavior or exfiltrate data from the system.

It is important to note that not all AI models are language models, and not all agents use an LLM. There can be object recognition agents responsible for identifying specific items in a video feed, such as obstacles in automotive systems, people in corporate surveillance systems, or faulty samples in production monitoring systems. These models can be attacked in their capacity to perform tasks: road marks could be introduced to disrupt an automotive AI model's ability to follow the road, or make-up could be used to evade facial recognition systems.

IV. System layer

As agentic applications still require regular software components to provide standard functionality, classical attacks still apply. The following are some of the more classical threats to agentic applications; some affect the system layer alone, while others also reach into other layers.

A. Misconfiguration

Misconfiguration can result in unauthorized access to sensitive information, compromised software components, or service disruption. Additionally, it could also be a way for attackers to gain access to the other layers.

B. Software vulnerabilities

Software vulnerabilities can be exploited by an attacker to gain unauthorized access, manipulate data, or disrupt the operation of the overall agentic AI system. As the system layer, as well as other layers, might consist of several complex software components installed from various sources, vulnerabilities pose a significant threat to the entire application. This includes supply-chain attacks targeting third-party libraries.

C. Weak authentication

Weak or missing authentication makes it easy for an attacker to gain access to internal components, access system logs, and escalate privileges. Depending on the system privileges obtained, an attacker may even alter some data, install modified software, or shut down the entire agentic application.

D. DoS

As with any other service hosted on the internet, an agentic application and its layers, including the system layer, are also susceptible to distributed denial-of-service (DDoS) attacks.

E. Exposed APIs

Exposed APIs can be leveraged by attackers to access confidential information or enable resource exhaustion attacks. Attackers can use these exposed interfaces to inject malicious code, retrieve sensitive data, or disrupt service availability by overwhelming the system with requests. This vulnerability not only compromises the security of the application but also poses a significant risk to data integrity and system stability.
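Rate limiting is a standard way to blunt request floods against an exposed endpoint. The sketch below shows a token bucket, assuming one bucket per client or API key; the class name `TokenBucket` and its parameters are illustrative. The injectable `clock` argument exists only to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.updated = clock()

    def allow(self, cost=1.0):
        # Refill based on elapsed time, never exceeding capacity.
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        # Spend tokens if the request fits in the remaining budget.
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

For agentic applications, the `cost` argument could reflect how expensive a request is, for example charging more for requests that trigger many agent invocations.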

V. Exposure matrix

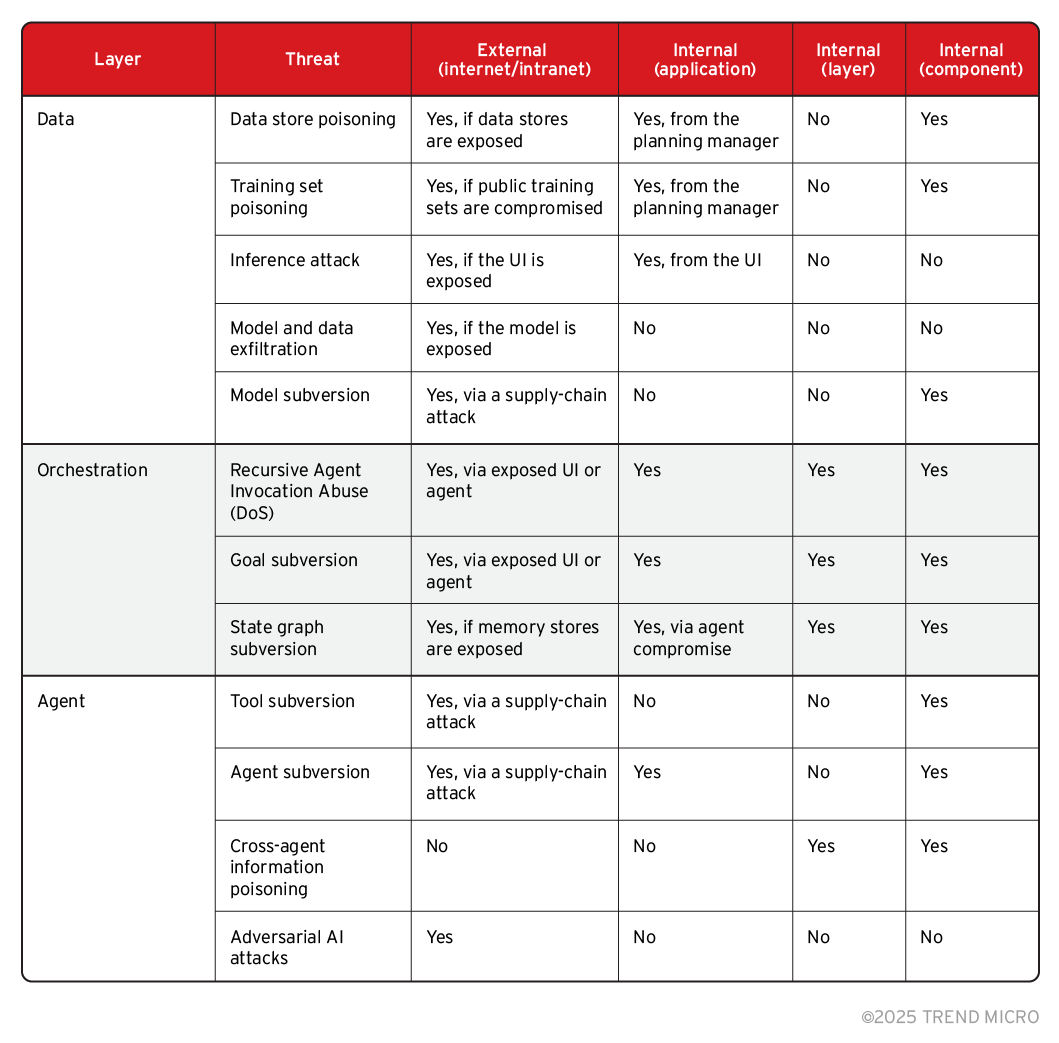

To better categorize these threats, we developed a structured mapping of the conditions required for the different types of attacks to succeed.

The exposure matrix illustrates whether an attack can be carried out by an external actor, or whether it requires control over a specific component, any component within a given layer, or even another layer in the system architecture. By examining these conditions, we can identify which security boundaries are most critical for preventing specific threats, and gain a clearer understanding of how each layer contributes to the system’s overall attack surface.

Table 1. Exposure matrix

Some of the most critical attacks occur when an exposed infrastructure component, such as long- and short-term memory, an unprotected UI endpoint, or an accessible model, is not properly secured.

Data exfiltration is a major concern across the whole data layer and is directly linked to the issue of exposed infrastructure. For agentic AI systems, application data can reveal a great deal about the inner workings of the application and might also include proprietary and sensitive information. While addressing infrastructure exposure certainly helps, inference attacks can still exfiltrate data without directly accessing any of the data stores.

Supply chain attacks represent another significant threat across all layers of the system. This is especially problematic given that application developers often have no direct oversight over third-party libraries, tool repositories, or external models.
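
One common way to regain some control over third-party artifacts is to pin each one to a known digest and reject anything that does not match. The sketch below shows the idea with SHA-256; the artifact name, its contents, and the `is_pinned` helper are hypothetical and only serve to illustrate the check.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the hex SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def is_pinned(name: str, data: bytes, pins: dict[str, str]) -> bool:
    """Accept an artifact only if its digest matches the pinned value."""
    expected = pins.get(name)
    if expected is None:
        return False  # artifacts without a pin are rejected by default
    return hmac.compare_digest(sha256_hex(data), expected)


# Hypothetical tool fetched from an external repository, pinned at review time.
tool_bytes = b"def search(query): ..."
PINS = {"search_tool.py": sha256_hex(tool_bytes)}

print(is_pinned("search_tool.py", tool_bytes, PINS))                  # matches the pin
print(is_pinned("search_tool.py", tool_bytes + b"# tampered", PINS))  # digest differs
print(is_pinned("unknown_tool.py", tool_bytes, PINS))                 # no pin recorded
```

Digest pinning only detects changes made after the pin was recorded; it does not say whether the pinned version was trustworthy in the first place.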

With today’s agentic applications heavily dependent on LLMs — particularly within the orchestration and agent layers — prompt injection attacks stand out as one of the most prominent and pervasive threat vectors.

Finally, we can observe a dichotomy between orchestration- and agent-level attacks: while orchestration attacks, such as state graph subversion, require a more targeted approach and deeper system insight, agent-focused threats like tool subversion may reach a broader but less targeted pool of victims.

Recommendations

An agentic system has a complex architecture with many moving parts talking to each other, often in non-deterministic ways. This results in a broad, multifaceted attack surface.

To help face the current and upcoming challenges of securing an agentic AI system, we can divide our recommendations into two kinds: design principles and ad-hoc security solutions.

I. Design principles

Organizations should keep in mind the following design principles for agentic AI systems:

- Component hardening: Each key component of the agentic application must be hardened and protected on its own, and appropriate controls need to be in place to ensure the integrity of data coming from third-party components outside the company's control (e.g., libraries, tool and agent repositories, public models and datasets).

- Layer and component isolation: Early agentic applications tend to blur the line between LLM, external tools, UI, and orchestration. As the technology grows in complexity, it becomes important to maintain proper isolation between the different layers and components, limiting their interactions to well-defined scopes.

- Secure component communications: Related to the point above, it is also important to secure communications between components, especially in agentic applications powered by large language models. As we showed, prompt injection attacks are still very effective against LLMs, and in an agentic application they can originate from the UI (through a maliciously crafted request), from any agent accessing the internet, or from an exposed data store. Validating the prompts that components pass to agents or to the orchestrator will become increasingly important.
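
The isolation and communication principles above can be sketched as a small policy check that sits between components. In this minimal example, the component names, the allowed routes, the size limit, and the `validate` helper are all assumptions chosen for illustration. The check enforces who may talk to whom and applies basic hygiene to the prompt, but it does not by itself detect prompt injection.

```python
from dataclasses import dataclass

# Hypothetical routes: only these sender/recipient pairs may exchange prompts.
ALLOWED_ROUTES = {
    ("ui", "orchestrator"),
    ("orchestrator", "agent"),
    ("agent", "orchestrator"),
    ("agent", "tool"),
}
MAX_PROMPT_CHARS = 4000  # assumed limit for this sketch


@dataclass(frozen=True)
class Message:
    sender: str
    recipient: str
    prompt: str


def validate(msg: Message) -> list[str]:
    """Return the policy violations found; an empty list means the message may pass."""
    problems = []
    if (msg.sender, msg.recipient) not in ALLOWED_ROUTES:
        problems.append(f"route {msg.sender}->{msg.recipient} is not allowed")
    if len(msg.prompt) > MAX_PROMPT_CHARS:
        problems.append("prompt exceeds size limit")
    if any(ord(ch) < 32 and ch not in "\n\t" for ch in msg.prompt):
        problems.append("prompt contains control characters")
    return problems


print(validate(Message("ui", "orchestrator", "Summarize the latest report")))
print(validate(Message("ui", "tool", "run diagnostics")))
```

Keeping the route table explicit means that a compromised UI or agent cannot address components outside its scope, and any new interaction has to be added deliberately.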

II. Ad-hoc security solutions

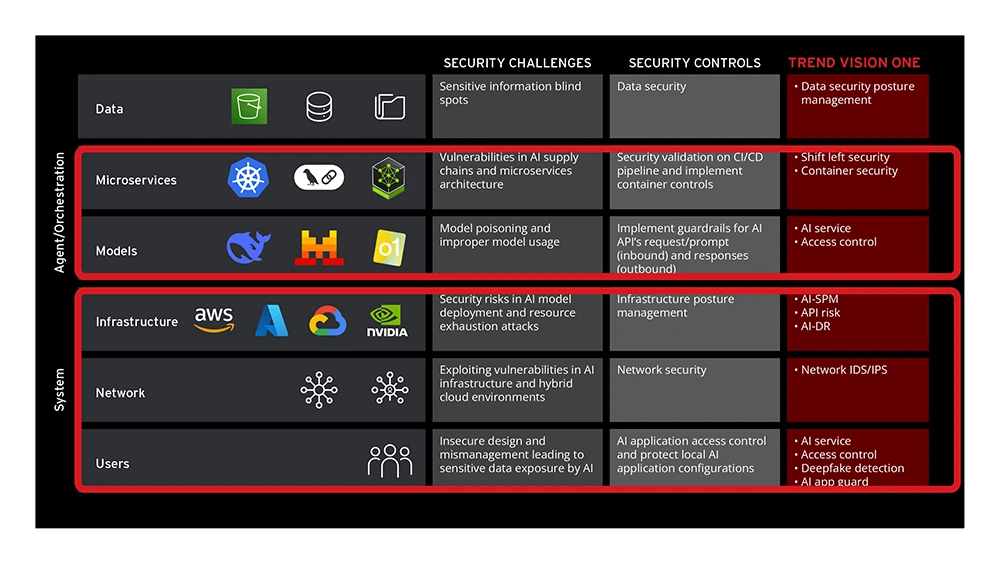

While many security challenges are unique to agentic architectures — and are expected to grow as agentic AI systems become more advanced — implementing robust security controls across every layer of the ecosystem is essential.

Trend Micro protects each of these layers, from the orchestrator and agents to data and system components, ensuring protection across the entire agentic stack.

Figure 4. Areas to secure in an Agentic AI system

The figure above shows how current AI security solutions can help protect the key components of future agentic architectures, while continuing to address legacy risks at the system level.

A. Data Layer: Protect sensitive information

AI systems risk inadvertently exposing sensitive data through model outputs, especially from training data or connected knowledge bases. Weaknesses in how data embeddings are handled can also lead to leakage, and manipulated input data could corrupt model behavior.

As a part of Trend Vision One™, Cyber Risk Exposure Management (CREM) for Cloud Data Security Posture Management (DSPM), alongside its AI Security Posture Management (AI-SPM), addresses these threats. These solutions help detect vulnerabilities that could lead to data exposure and inventory AI-related cloud data storage. This allows organizations to better secure sensitive data and protect the integrity of data handling processes like vector databases.

B. Agent and orchestration layers: secure AI components and ensure model integrity

The microservices underpinning AI applications can suffer from supply chain vulnerabilities, where insecure components compromise the system. Additionally, if these services improperly handle outputs from AI models, they can become vulnerable to exploitation.

Trend Vision One™ Container Security counters these risks by scanning container images for threats before deployment, a "Shift Left" security practice. It also enforces security policies for running containers and provides runtime monitoring to detect exploits arising from mishandled AI outputs.

Furthermore, AI models themselves can be targeted. Attackers may use crafted inputs (prompt injection) to manipulate model behavior or cause harmful outputs. Models might also produce outputs that, if not validated, cause issues. Granting models excessive autonomy or access to other systems can lead to unintended consequences if the model is compromised. Uncontrolled use of model resources can also lead to service denial or unexpected costs.

Trend Micro Zero Trust Secure Access (ZTSA) (AI service) protects this layer by inspecting both user prompts and AI responses to filter malicious content and problematic outputs. It also enforces access controls to limit model capabilities and uses rate limiting to prevent resource overconsumption.
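
As a generic illustration of the rate-limiting idea mentioned above, and not a description of how any product implements it, the following is a minimal token-bucket sketch. The `TokenBucket` class, its parameters, and the injectable clock are assumptions made for this example.

```python
import time


class TokenBucket:
    """Allow up to `capacity` requests in a burst, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the behavior can be tested deterministically
        self.tokens = capacity
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Refill based on elapsed time, then spend `cost` tokens if available."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Hypothetical per-client limit: bursts of 2 model calls, refilled at 1 call per second.
bucket = TokenBucket(rate=1.0, capacity=2.0)
print([bucket.allow() for _ in range(3)])
```

Charging a higher `cost` for expensive model calls is one way to bound spending as well as request volume.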

C. System layer: safeguarding the foundations, connectivity, and human-AI interactions

The cloud infrastructure supporting AI services can be compromised through its own supply chain vulnerabilities. If AI models are granted excessive permissions to alter infrastructure, it can lead to misconfigurations or breaches. The infrastructure hosting specialized AI databases, like vector databases, also needs robust security.

Trend Micro offers CREM for Cloud (AI-SPM) and AI-DR (XDR for Cloud). AI-SPM provides visibility into AI-related cloud assets, identifying misconfigurations and threats. XDR for Cloud offers broader threat detection and response capabilities for the cloud infrastructure that AI services rely upon.

Furthermore, AI services are exposed to network-level attacks. These can include denial-of-service attacks caused by overwhelming the service with requests, general network intrusions, or the exploitation of any network-exposed vulnerabilities in the AI system's components.

Trend Micro TippingPoint Threat Protection System (network intrusion detection and prevention systems), with its Virtual Patching capabilities, addresses these risks. It shields known vulnerabilities in network-facing components and, as an intrusion detection and prevention system, blocks malicious network traffic.

Finally, interactions between users and AI systems introduce risks. Users might submit malicious inputs to exploit models, or could be targeted by AI-generated responses that leak sensitive data. Users can also be exposed to AI-generated misinformation or other threats like deepfakes, and AI applications on their devices could be compromised.

Trend Micro’s ZTSA AI Service Access and Vision One Endpoint Security (V1ES) with AI App Guard secure this layer. ZTSA AI Service Access filters user inputs and inspects AI outputs to prevent malicious interactions and data leakage. On user devices, V1ES AI App Guard protects AI applications from tampering and malware.

Conclusion

Agentic AI systems, though still in their early stages, are rapidly evolving: becoming more complex, unlocking new markets, and finding diverse use cases. As their adoption accelerates, it is crucial to have a clear understanding of their components and the risks associated with them.

In our previous installment, we provided a clear definition of agentic AI systems and their core features. Our goal was to help navigate the current hype, cut through the noise, and offer a solid foundation for understanding agentic AI. In this article, we delved deeper into the architecture of agentic systems, analyzing both current and future components, such as agent repositories and marketplaces. We also provided a comprehensive overview of the risks tied to each component, as well as effective recommendations for risk mitigation.

By understanding such risks and implementing the right security measures, organizations can confidently move forward with developing and deploying agentic AI systems, addressing both current and future challenges in a secure and responsible manner.
