By Nitesh Surana

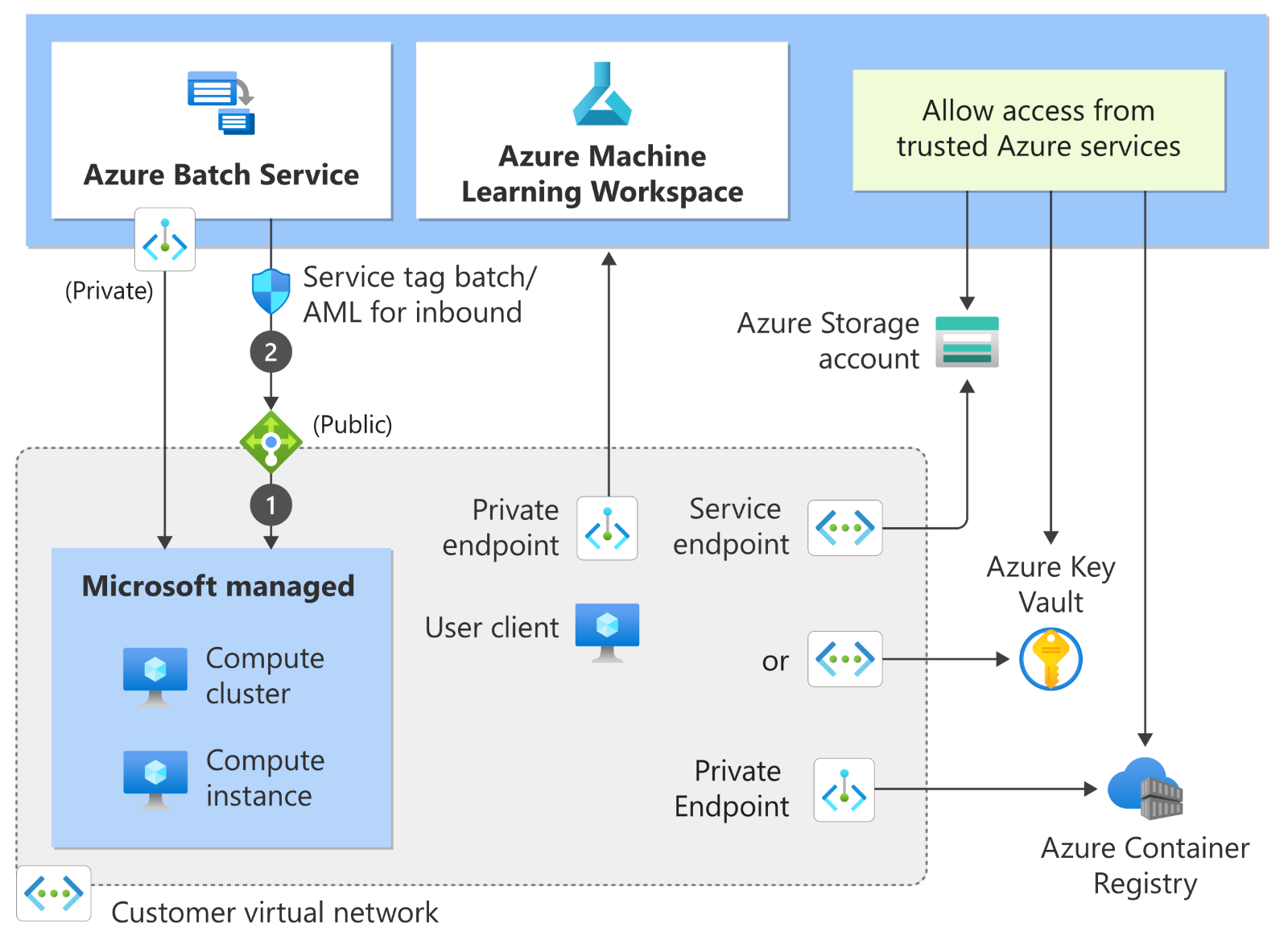

Azure Machine Learning (AML) allows compute instances (CIs) and compute clusters to be deployed in subnets within virtual networks (vNets). Users can attach a CI to an existing subnet of a vNet when creating the CI. The vNet must be in the same region as the AML workspace. This configuration lets users access CIs over the vNet without making the environment publicly accessible. To secure training environments, Microsoft recommends deploying AML in vNets.

Figure 1. Securing training environments using vNets in AML service

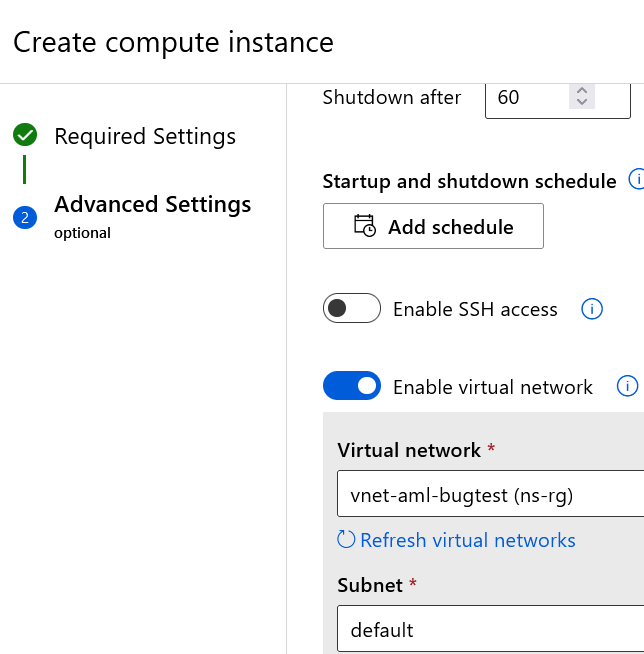

One can create a CI in a vNet, as shown in Figure 2.

Figure 2. Flag to enable vNet while creating a CI

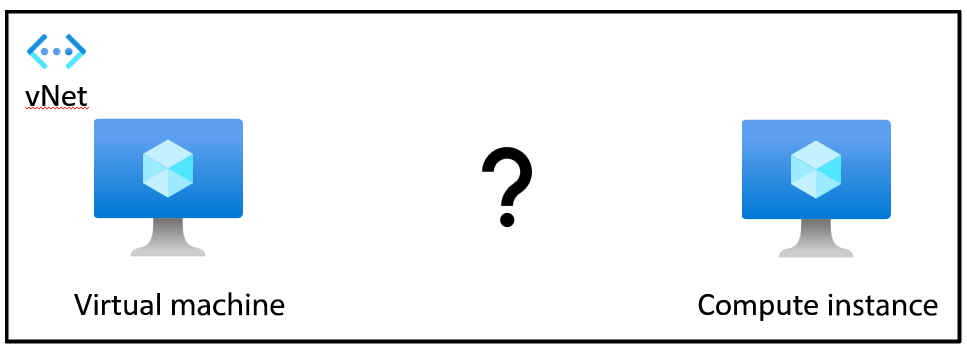

Exploring possible avenues of intrusion from an attacker’s perspective is a worthwhile exercise, especially when the goal is to keep cloud resources protected. Since the vNet belongs to the user’s subscription, we can model the following scenario: a vNet contains an Azure VM and a CI. If an attacker compromises the Azure VM, what could they do to the CI from there?

Figure 3. A vNet environment containing an Azure VM and CI

Our key finding concerns a scenario where a network-adjacent, attacker-controlled VM exists in the same subnet as the AML CI. In this case, we found that an attacker could remotely fetch sensitive information about the services running on the CI without any authentication. This information could help an attacker move laterally by snooping on the activities performed on the CI.
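The “same subnet” precondition can be expressed with Python’s standard `ipaddress` module. This is only an illustration: the helper name `same_subnet` and the addresses and 10.0.0.0/24 range are hypothetical placeholders, not values from our test environment.

```python
import ipaddress

def same_subnet(ip_a: str, ip_b: str, subnet_cidr: str) -> bool:
    """Return True if both addresses fall inside the given subnet."""
    # strict=True rejects a CIDR with host bits set, such as "10.0.0.5/24".
    net = ipaddress.ip_network(subnet_cidr, strict=True)
    return ipaddress.ip_address(ip_a) in net and ipaddress.ip_address(ip_b) in net

# Hypothetical addressing: an Azure VM and a CI on the same /24 subnet.
print(same_subnet("10.0.0.4", "10.0.0.5", "10.0.0.0/24"))  # True
print(same_subnet("10.0.0.4", "10.0.1.5", "10.0.0.0/24"))  # False
```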

Deep Dive

While checking which ports on a CI were accessible from an Azure VM, we came across port 46802. Since we had access to the CI, we identified the underlying process exposing the port: “dsimountagent.” AML uses several agents, running as services, to manage CIs, and this agent is installed on all CIs by default. In our previous blog, we detailed how storage account access keys were stored in the configuration files of two agents. The dsimountagent process belongs to a service named “Azure Batch AI DSI Mounting Agent.” The binary is written in Golang and contains debug symbols because it is not stripped.
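A reachability check like the one that surfaced port 46802 can be sketched as a plain TCP connect test. This is a minimal sketch, not the tooling we used; the helper name `port_is_open` is hypothetical.

```python
import socket

def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Attempt a full TCP handshake; True if something accepts the connection."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

From the VM, a call such as `port_is_open("10.0.0.5", 46802)` (with a hypothetical CI address) should return True only if the agent is listening and reachable from that subnet.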

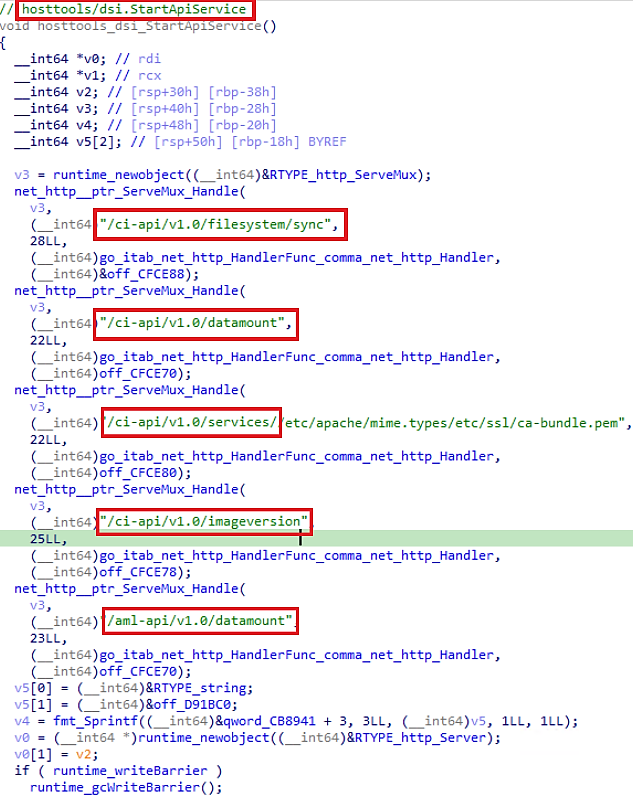

Figure 4. HTTP APIs exposed by “Azure Batch AI DSI Mounting Agent” on a CI

We looked for references to the service and the binary itself and concluded that, unlike previously examined agents such as WALinuxAgent and Open Management Infrastructure, this agent is not open source. While analyzing the binary in IDA, we came across a function named “dsi.StartApiService” in the “hosttools” package that exposes the APIs shown in Figure 4. No authentication was required to access these APIs. After inspecting each endpoint, we found that the following actions could be performed on the CI:

| Exposed HTTP API | Function |
|---|---|
| /ci-api/v1.0/filesystem/sync | Execute sync command on a file |
| /{ci,aml}-api/v1.0/datamount | Execute the mount operation to remount the Azure File Share |
| /ci-api/v1.0/imageversion | View the CI’s OS image version |
| /ci-api/v1.0/services/ | Check the status of any systemd services |
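The lack of authentication can be illustrated with a probe that sends one GET request per read-only endpoint and records the HTTP status code. This sketch rests on assumptions: that these endpoints answer plain HTTP GET requests, and that `probe` and `READ_ONLY_PATHS` are hypothetical names introduced here. It deliberately skips the sync and datamount endpoints, since those perform actions on the CI.

```python
import urllib.error
import urllib.request

# Hypothetical list: only the read-only endpoints from the table. The sync and
# datamount endpoints perform actions on the CI, so they are left out on purpose.
READ_ONLY_PATHS = ("/ci-api/v1.0/imageversion", "/ci-api/v1.0/services/")

def probe(host, port, paths=READ_ONLY_PATHS, timeout=5.0):
    """Send one unauthenticated GET per path and record the HTTP status code.

    200 suggests no authentication is enforced, 401/403 suggests it is,
    and None means no HTTP response was received at all.
    """
    # Empty ProxyHandler: ignore any proxy settings and connect directly.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    results = {}
    for path in paths:
        # No Authorization header or cookies are attached.
        req = urllib.request.Request(f"http://{host}:{port}{path}", method="GET")
        try:
            with opener.open(req, timeout=timeout) as resp:
                results[path] = resp.status
        except urllib.error.HTTPError as err:
            # Non-2xx responses (e.g. 401, 404) still carry a status code.
            results[path] = err.code
        except OSError:
            # URLError, refused connections, and timeouts all derive from OSError.
            results[path] = None
    return results
```

Against an unpatched CI at a hypothetical address, `probe("10.0.0.5", 46802)` returning 200 for both paths would indicate the behavior described here; once the agent stops listening on all interfaces, both entries should come back as None.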

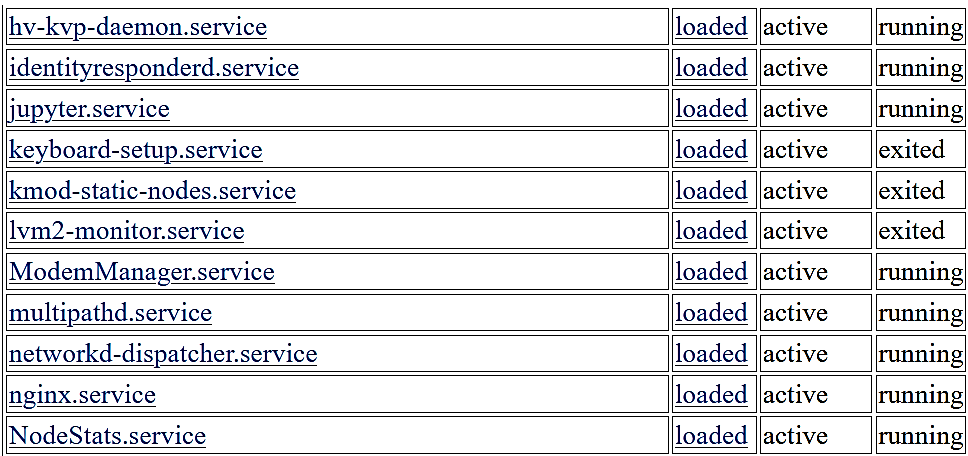

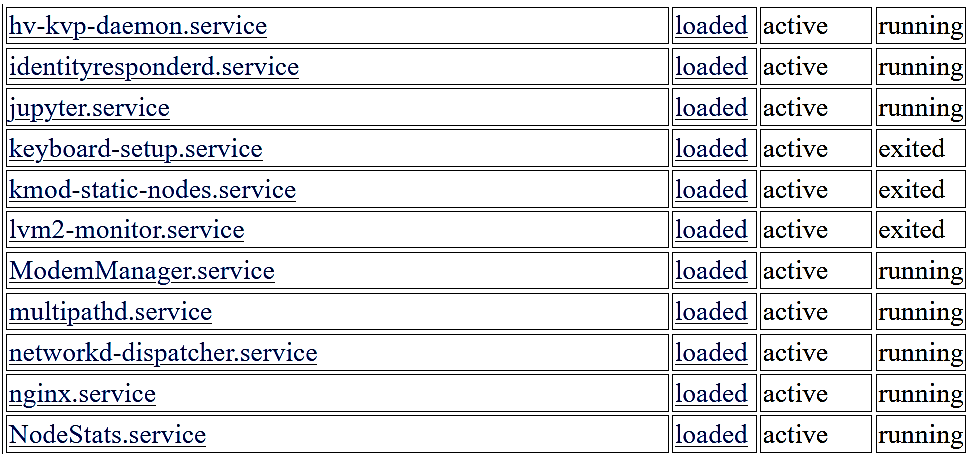

Of these endpoints, the “services” endpoint was particularly interesting. By querying the services API at /ci-api/v1.0/services/, an attacker could view the list and status of the services installed on a CI.

Figure 5. List and status of installed services on the CI

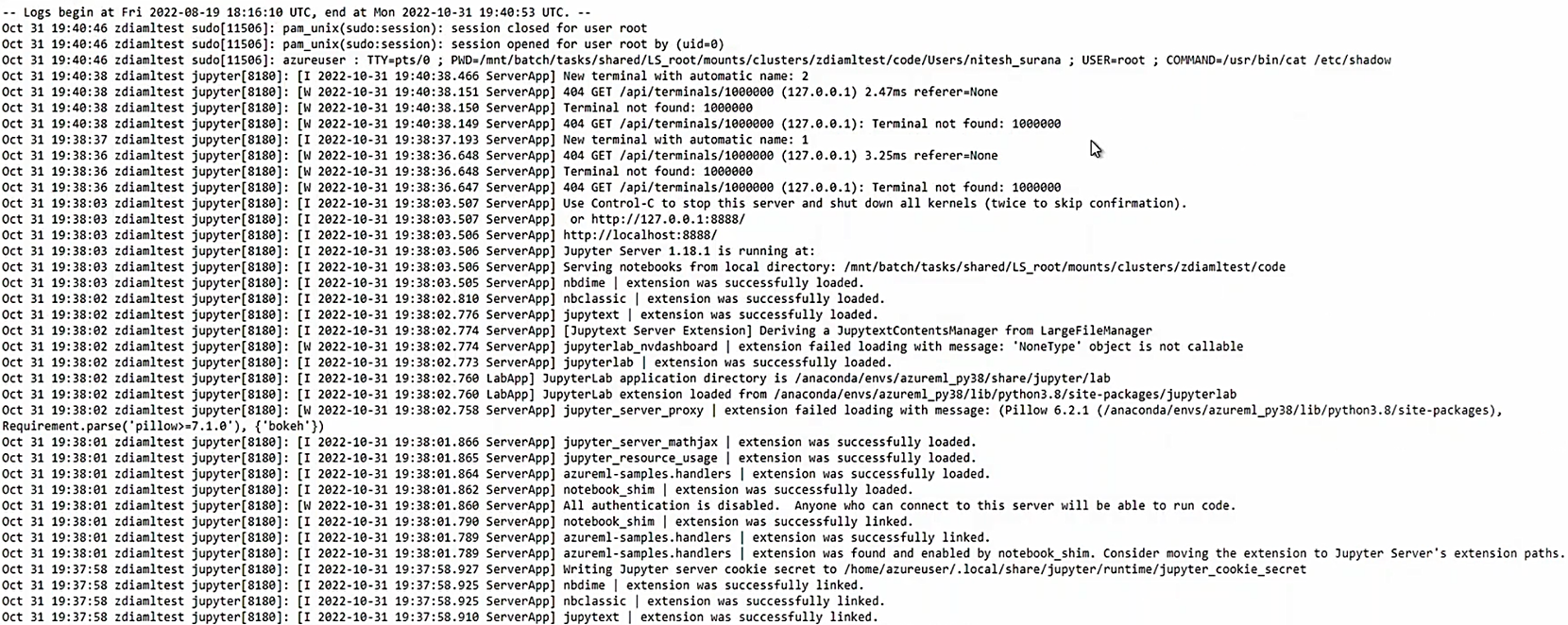

Additionally, we could view the service logs by querying /ci-api/v1.0/services/

Service logs aren’t particularly interesting unless sensitive information is logged in them. At this stage, we had only a simple information disclosure vulnerability at our disposal. Still, these service logs can help an adversary better understand the environment before planning the next phase of an attack. The next section describes one such avenue and shows the impact of this information disclosure bug in AML deployments.

Spying the Scientist

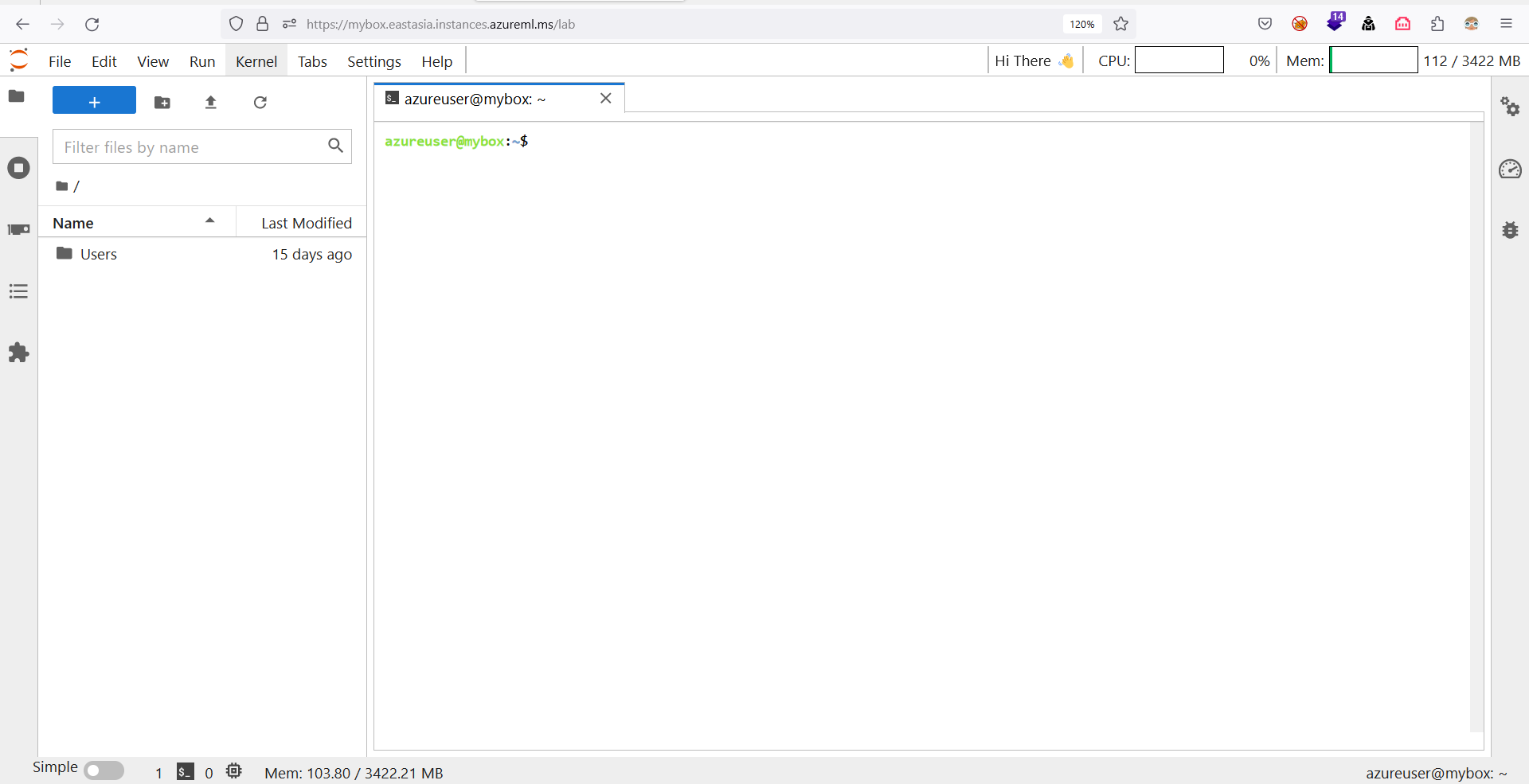

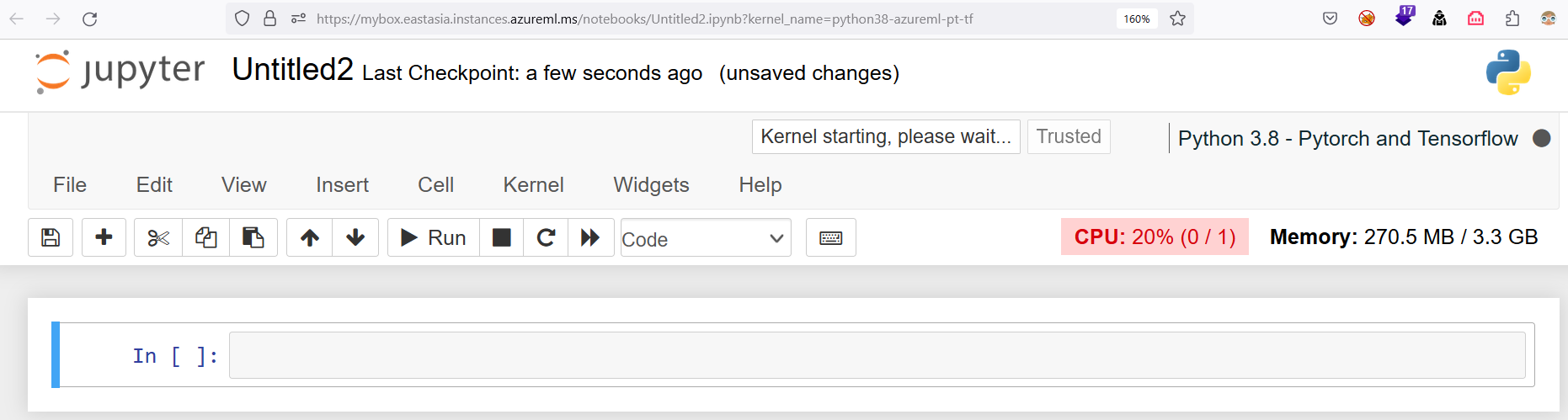

While exploring the list of installed services on a CI, we found that Jupyter is installed as a service. Using Jupyter, users can access the CI from within their browser without having to install or configure anything.

Figure 6. Using JupyterLab to access CI

Figure 7. Using Jupyter notebook to access CI

This holds true for all AML CIs (see Figure 8).

Figure 8. Jupyter installed as a systemd service on AML CIs

Figure 9. Viewing Jupyter service logs from Azure VM on CI

Jupyter logs are interesting to observe. We noticed that commands executed with sudo in Jupyter-based terminals (Jupyter Notebook, JupyterLab, or the browser-embedded terminal) are recorded in the service logs. If a data scientist or developer uses any Jupyter component to run commands that contain secrets, passwords, tokens, or credentials on the command line, an attacker controlling a VM in the same subnet of the vNet can easily read those commands without authorization. This could eventually lead to a more serious compromise and possible lateral movement within the AML environment. These logs also reveal when a terminal is created or deleted, the command-line parameters supplied to the Jupyter server, and the loaded extensions.
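To gauge their own exposure, defenders could scan exported Jupyter service log text for sudo command lines that carry secrets. The sketch below is a hypothetical heuristic: the exact log format on a CI is not reproduced in this post, so the regular expression and the helper name `find_exposed_commands` are illustrative assumptions rather than a complete secret detector.

```python
import re

# Hypothetical heuristics: secret-bearing flags ("--password x", "--token=x")
# or key=value pairs ("token=abc") appearing on a command line.
SECRET_PATTERN = re.compile(
    r"(--?(password|passwd|token|secret|key)\b[ =]\S+"
    r"|\b(password|passwd|token|secret|api_key|access_key)=\S+)",
    re.IGNORECASE,
)

def find_exposed_commands(log_text: str) -> list[str]:
    """Return log lines that look like sudo invocations carrying secrets."""
    hits = []
    for line in log_text.splitlines():
        # Only lines that mention sudo and match a secret pattern are kept.
        if "sudo" in line and SECRET_PATTERN.search(line):
            hits.append(line.strip())
    return hits
```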

Here’s a demonstration of this vulnerability being exploited:

Figure 10. The MLSee vulnerability designated as CVE-2023-28312

We named this vulnerability MLSee (because an attacker could see the actions a user performs on their CI) and reported it to Microsoft through Trend Micro’s Zero Day Initiative. Microsoft fixed the vulnerability, rated it as an Important-severity information disclosure, and tracks it as CVE-2023-28312. After the fix, we found that the service no longer listens on all interfaces and can only be reached through the nginx proxy that listens on port 44224.
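Whether a service listens on all interfaces can be checked on the host itself by inspecting its bind address in the output of `ss -ltn`. This is a sketch under assumptions: the helper names are hypothetical, and any concrete bind address used with it (for example, loopback for port 46802) is illustrative, since the post only states that the service no longer listens on all interfaces.

```python
# Bind addresses that mean "all interfaces" in ss output (IPv4, IPv6, any).
WILDCARDS = {"0.0.0.0", "[::]", "*"}

def listeners_for_port(ss_output: str, port: int) -> list[str]:
    """Return the local bind addresses listening on `port` in `ss -ltn` output."""
    addrs = []
    for line in ss_output.splitlines():
        fields = line.split()
        # Data rows look like: LISTEN Recv-Q Send-Q Local:Port Peer:Port
        if len(fields) < 4 or fields[0] != "LISTEN":
            continue
        # rpartition keeps IPv6 brackets intact, e.g. "[::1]:46802".
        host, _, p = fields[3].rpartition(":")
        if p == str(port):
            addrs.append(host)
    return addrs

def exposed_on_all_interfaces(ss_output: str, port: int) -> bool:
    """True if any listener on `port` is bound to a wildcard address."""
    return any(a in WILDCARDS for a in listeners_for_port(ss_output, port))
```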

Conclusion

Researchers have been reporting vulnerabilities in the agents used by cloud providers for quite some time. These secret agents are installed on resources in the customer’s environment without the customer being aware of them. However, the vulnerability we described appears to be of a different nature: the “dsimountagent” agent is installed on all CIs and is managed by Microsoft, and CIs are themselves Microsoft-managed resources that do not reside in the user’s subscription.

Now picture a compromise in which an attacker abuses this information disclosure vulnerability as part of an intrusion attempt or attack chain. Under the shared responsibility model for securing cloud environments, the lines here seem quite blurry. Exploiting MLSee would generate no cloud-native logs, so end users would have no chance to detect its exploitation in the first place.

As features are added to these secret agents, their attack surface grows. Microsoft advocates using vNets with AML in its best practices guide, and at times, modeling attack scenarios against such secure configurations can uncover vulnerabilities in the service itself. CSPs should account for the risks posed by features in their agents by threat modeling these agents more thoroughly and considering possible avenues of attack.

In today's complex and dynamic cloud environments, the principle of zero trust has emerged as a fundamental approach to cybersecurity. The essence of zero trust lies in the verification of every access request, regardless of the source's origin or location. This strategy fosters an environment where assumed trust is replaced by constant verification to minimize the attack surface and potential damage in case of a breach.

However, even within a zero-trust framework, vulnerabilities can still arise. The scenario described here, in which an attacker on a VM in a subnet gains unauthorized visibility into sensitive information such as commands executed on a compute instance, highlights this. It underscores why a stronger zero trust implementation should extend not only to external access attempts but also to lateral movement within an environment.
