In the past, traditional security models relied on perimeter defenses to protect critical assets. However, with the rise of cloud computing and internet of things (IoT) devices, perimeters have evolved, the attack surface has changed, and new security challenges have emerged. The zero trust security model, which brings together key cybersecurity principles that collectively improve an organization’s overall information security posture, is becoming increasingly popular, especially for cloud-native environments.

What is zero trust security?

Zero trust is a security approach that assumes that everything and everyone is a potential threat. Under this model, a network never trusts anything by default and instead grants access on a case-by-case basis. The zero trust model operates on the “assume breach” security principle, a mindset that expects a breach will happen or has already happened. This security model also relies on authorization and role-based access control (RBAC). When properly implemented alongside identity verification, RBAC can help determine the access level that users and devices should have (regardless of their location or whether they have been previously verified) to protect data from potential attackers.

Because there are many potential attack vectors in the cloud, it is essential for organizations that rely on cloud services to have a proactive and comprehensive approach to security.

Malicious actors can take advantage of small yet integral pieces of information that are leaked, or of important features that are misconfigured by either the developer or the cloud service provider (CSP) in a multi-service environment. In the past year alone, our research team noted several cloud security issues, including how malicious actors typosquatted and abused legitimate tools to steal Amazon Elastic Compute Cloud (EC2) workload credentials, took advantage of secrets stored in environment variables, and deployed binaries containing hardcoded shell scripts designed to steal AWS credentials. Although these issues are not labeled as vulnerabilities, their impact should not be underestimated, as cybercriminals can use them for lateral movement.

How does zero trust security work?

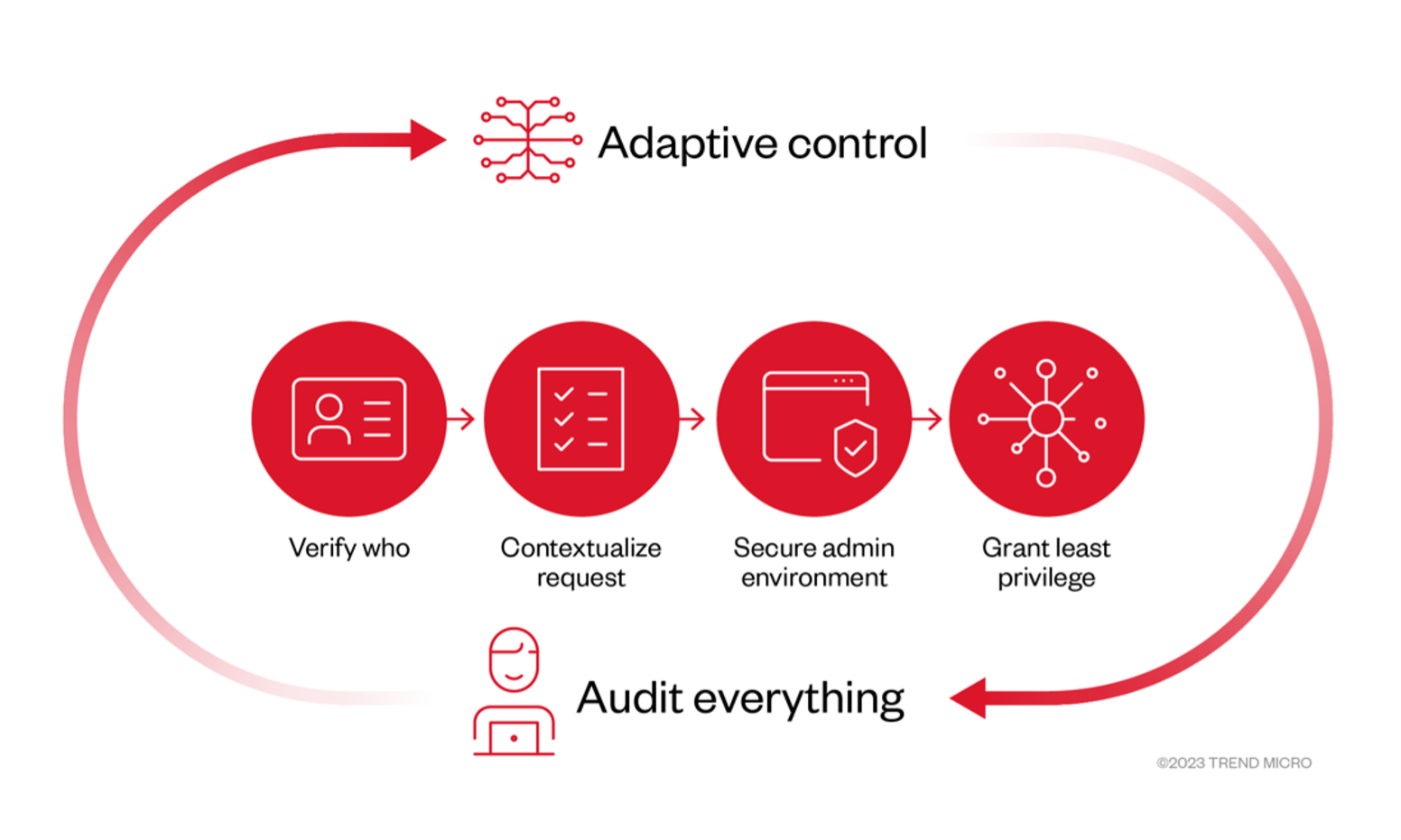

Zero trust security is based on four key principles: identity verification, access control, continuous monitoring, and the principle of least privilege.

Figure 1. The four key principles of zero trust

Identity verification

Organizations that use identity verification techniques in their operations do not assume that a user or a device is who or what they claim to be just because they have a username and a password. Instead, additional verification, such as a smart card or biometrics, is required. By verifying the identity of users and devices, organizations can prevent unauthorized access and reduce the risk of data breaches.

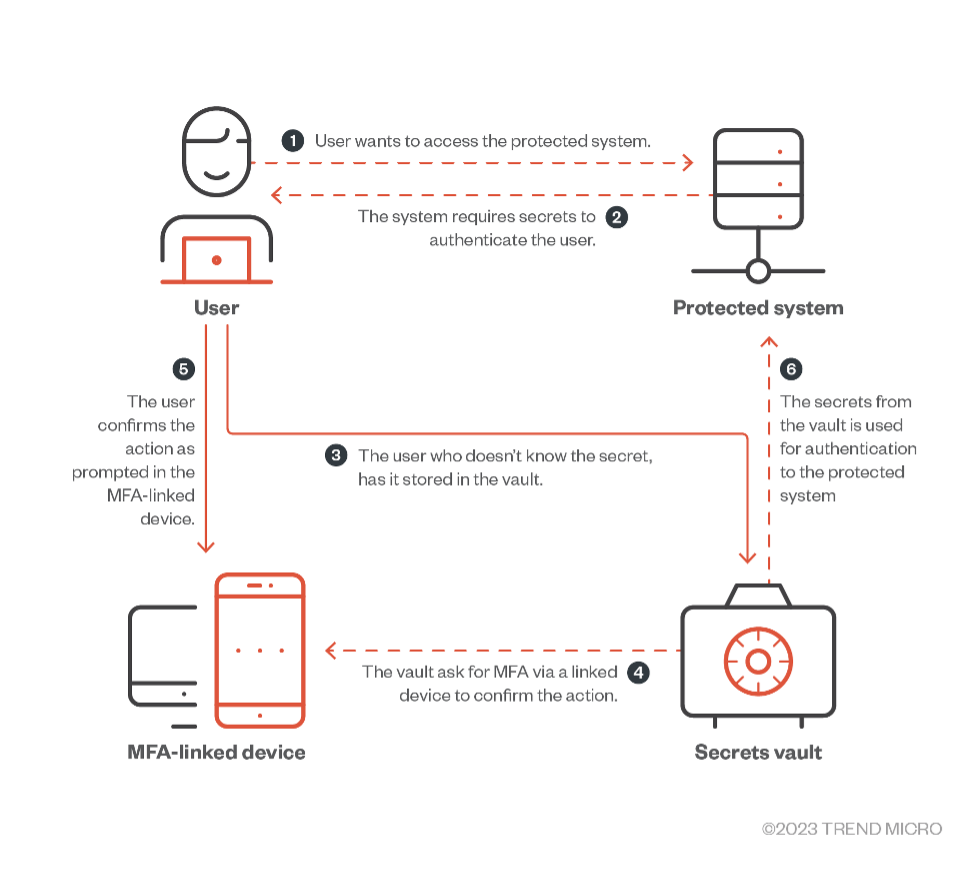

One key component of identity verification is multi-factor authentication (MFA), which requires users to provide two or more forms of authentication before access is granted. In the cloud, MFA can be used to verify the identity of users who are accessing cloud-native applications such as web-based or mobile apps. Because multiple forms of authentication are required, MFA helps prevent unauthorized access even when a user's static password is compromised.
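MFA can take several forms. As a minimal sketch of one common second factor, the Python code below implements time-based one-time passwords (TOTP, RFC 6238, which builds on HOTP from RFC 4226) using only the standard library. The function names, the 30-second step, and the one-step clock-drift window are illustrative assumptions, not a description of any particular product.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    msg = struct.pack(">Q", counter)  # 8-byte big-endian moving factor
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset from the last nibble
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over floor(t / step)."""
    t = time.time() if at is None else at
    return hotp(secret, int(t // step), digits)


def verify_second_factor(secret: bytes, submitted: str, at: Optional[float] = None,
                         step: int = 30, window: int = 1, digits: int = 6) -> bool:
    """Accept a code from the current time step or up to `window` steps either side."""
    t = time.time() if at is None else at
    current = int(t // step)
    for counter in range(current - window, current + window + 1):
        if counter < 0:
            continue
        # Constant-time comparison avoids leaking how much of the code matched.
        if hmac.compare_digest(hotp(secret, counter, digits), submitted):
            return True
    return False
```

At login, a server would call `verify_second_factor` only after the password check succeeds, so a stolen static password on its own is not enough to get in.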

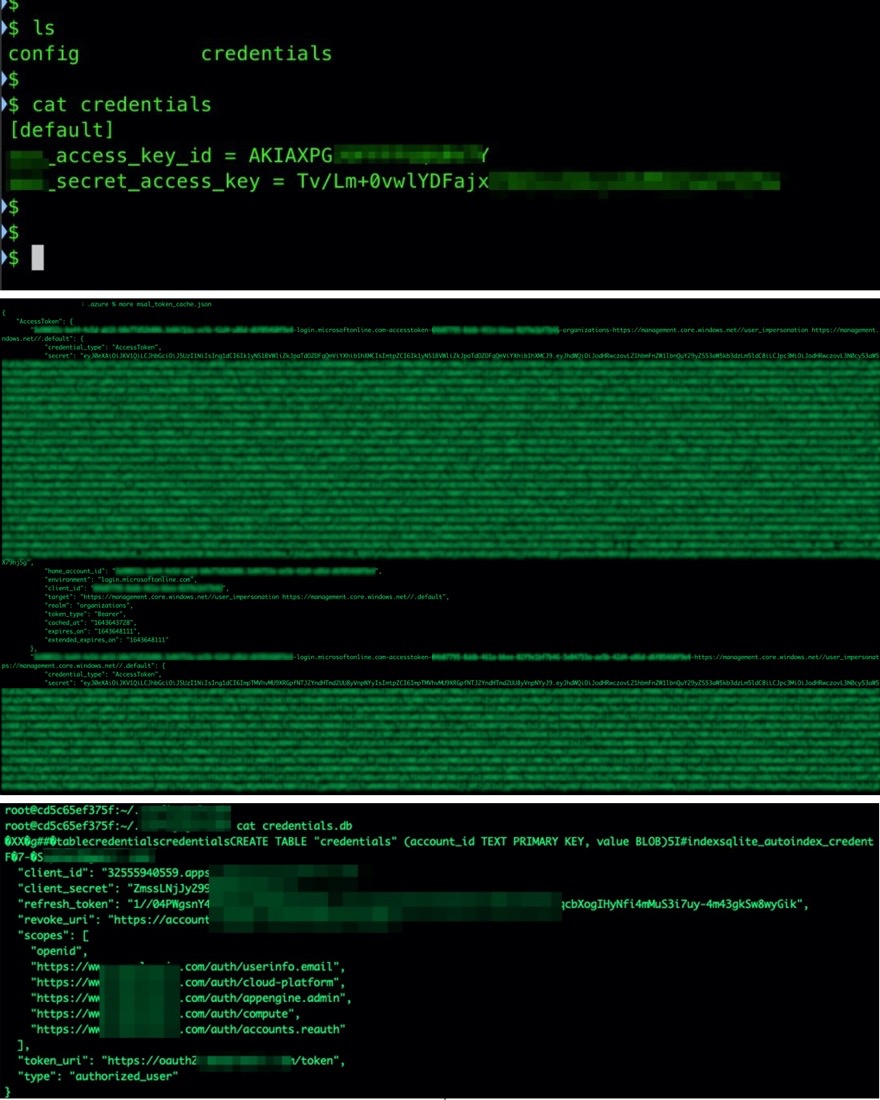

Based on our previous analyses, most real-life attacks on cloud-native environments involve stealing authentication secrets for persistence. Malicious actors usually do this by exploiting an application that stores secrets in plain text inside files or environment variables. The exploitation can start by querying the service API on the CSP side or through a developer’s machine that uses any sort of local integration with the CSP, such as an integrated development environment (IDE), a framework, or an orchestrator. If MFA is not enabled, malicious actors only need to compromise one link in the chain to move forward with an attack.

Figure 2. An MFA-protected system

Source: Secure Secrets: Managing Authentication Credentials
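Because plaintext secrets in environment variables are a common starting point for these attacks, one inexpensive check is to scan a workload's environment for values that look like cloud credentials before it is deployed. The Python sketch below assumes AWS-style access key IDs (20 characters beginning with `AKIA` for long-term keys or `ASIA` for temporary ones) and treats variable names containing fragments such as `SECRET`, `TOKEN`, or `PASSWORD` as suspicious; the patterns and the function name are illustrative, not exhaustive.

```python
import re
from typing import Dict, List, Tuple

# AWS access key IDs: "AKIA" (long-term) or "ASIA" (temporary) plus 16 uppercase letters/digits.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")
# Heuristic name fragments that usually indicate a secret (assumption; tune per project).
SECRET_NAME = re.compile(r"SECRET|TOKEN|PASSWORD|PASSWD|API_KEY|PRIVATE_KEY", re.IGNORECASE)


def find_plaintext_secrets(env: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (variable name, reason) pairs; secret values are never included."""
    findings = []
    for name, value in sorted(env.items()):
        if ACCESS_KEY_ID.search(value):
            findings.append((name, "value matches an AWS access key ID pattern"))
        elif value and SECRET_NAME.search(name):
            findings.append((name, "secret-like variable name with a non-empty value"))
    return findings
```

For example, `find_plaintext_secrets(dict(os.environ))` could run as a pre-deployment or container-startup check. Any finding suggests moving that value into the CSP's managed secret store and granting the workload permission to read only that one secret.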

Access control

Organizations that apply access control, the second principle of zero trust security, do not assume that a user or a device should automatically have access to a particular resource just because it is inside the network. Instead, organizations enforce strict controls on who can access what, based on factors such as role, responsibility, and permission. By controlling access to critical resources, organizations can reduce the risk of cyberattacks and data breaches or, at the very least, limit their scope.

RBAC, a method of access control that assigns permissions based on a user's role, responsibility, and job function, falls under this security principle. In the cloud, RBAC can be used to enforce least-privilege access to cloud-native applications, containers, and microservices. By assigning permissions based on roles, RBAC helps ensure that only authorized users can access sensitive data or critical resources. It’s important to note, though, that regardless of the environment, organizations are responsible for keeping the number of roles to a minimum.
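A minimal sketch of the RBAC idea in Python, assuming hypothetical role names and `resource:action` permission strings: permissions attach to roles, users receive roles, and any request that is not explicitly granted is denied. A small role table is also easier to review than a sprawling one.

```python
from typing import Dict, FrozenSet, Set

# Hypothetical roles mapped to "resource:action" permissions.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "viewer": frozenset({"reports:read"}),
    "developer": frozenset({"reports:read", "functions:deploy"}),
    "billing-admin": frozenset({"invoices:read", "invoices:write"}),
}

# Hypothetical user-to-role assignments.
USER_ROLES: Dict[str, Set[str]] = {
    "alice": {"developer"},
    "bob": {"viewer"},
}


def is_allowed(user: str, permission: str) -> bool:
    """Grant only permissions attached to one of the user's roles."""
    for role in USER_ROLES.get(user, set()):
        if permission in ROLE_PERMISSIONS.get(role, frozenset()):
            return True
    return False  # default deny: unknown users, roles, or permissions get nothing
```

With these tables, `is_allowed("alice", "functions:deploy")` returns `True`, while the same request from `bob`, or any request from an unknown user, is denied.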

Continuous monitoring

Continuous monitoring means that organizations do not readily assume that everything is okay just because all the right controls have been set up. Instead, organizations regularly monitor all network activity, including user behavior and network traffic, to detect anomalies and potential threats and respond to security incidents before they become major breaches.
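To make "detect anomalies" concrete, here is a minimal Python sketch that flags an entity (a user, service account, or workload) whose activity count in the latest interval is far above its own recent baseline. The z-score threshold of 3, the minimum history length, and the floor on the standard deviation are illustrative assumptions; commercial detection tools typically combine many more signals.

```python
from statistics import mean, pstdev
from typing import Sequence


def is_anomalous(history: Sequence[float], latest: float,
                 threshold: float = 3.0, min_history: int = 5) -> bool:
    """Flag `latest` if it is more than `threshold` standard deviations above the baseline."""
    if len(history) < min_history:
        return False  # too little data to establish a baseline yet
    baseline = mean(history)
    # Floor the spread at 1.0 so a perfectly flat baseline neither divides by zero
    # nor turns every tiny increase into an alert (an illustrative choice).
    spread = max(pstdev(history), 1.0)
    return (latest - baseline) / spread > threshold
```

For instance, `history` could hold hourly counts of emails sent by one customer account (a hypothetical metric); a sudden burst of malware-laden messages would stand out against that account's own baseline without requiring an agent on any server.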

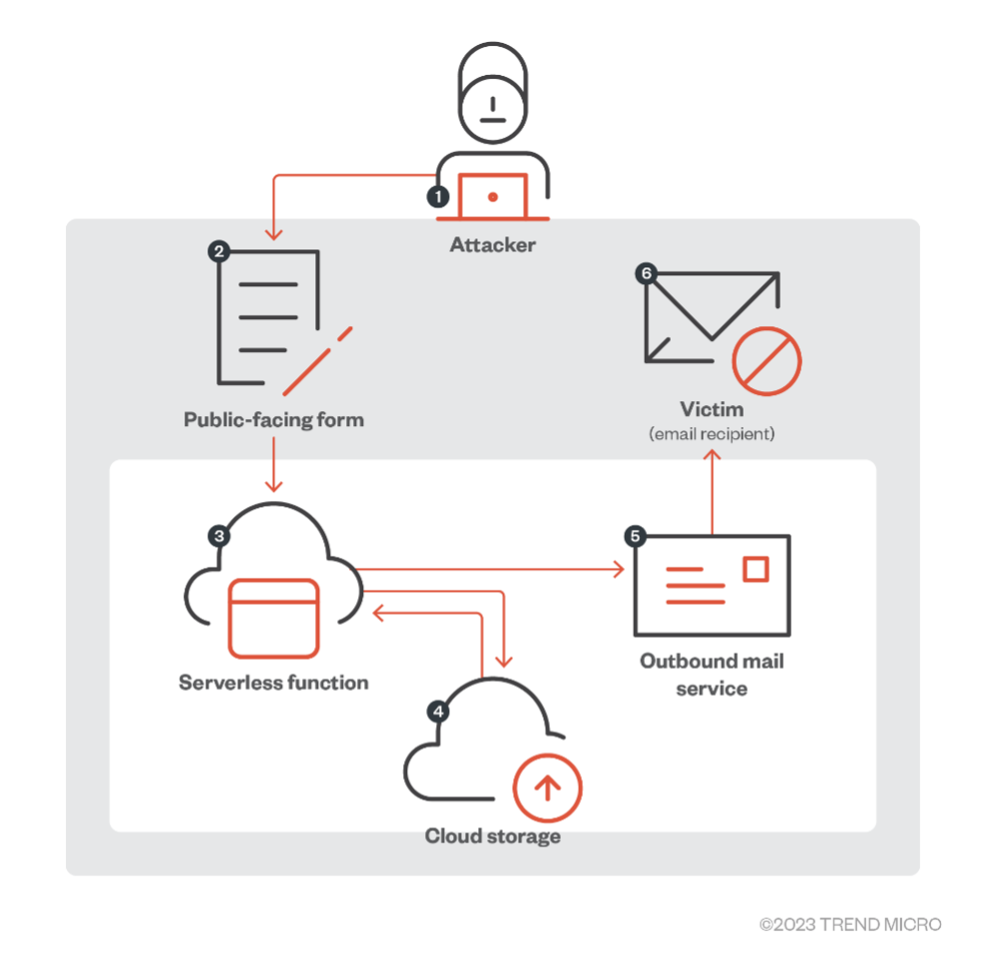

Continuous monitoring is critical in a cloud-native environment because it provides real-time visibility into all components, including containers, microservices, and APIs. In our investigation of real-world attacks on cloud-native environments, we saw that traditional defenses often cannot be applied. This is the case for serverless services, for example, where the operating system is not managed by the users themselves, so they can’t install security solutions or manage container logs.

If a company uses serverless infrastructure to offer email services to its customers, an attacker can abuse the company’s service by signing up, creating an email account, and sending malware-laden emails to victims. Because the environment is 100% serverless, the company does not maintain or secure the underlying endpoints or servers, so it cannot install traditional antivirus solutions on them. And because cloud-native environments are dynamic and highly distributed, attacks usually leave fewer or atypical traces, which makes tracking and securing each component challenging.

Figure 3. How an attacker can abuse a company that offers email services via a serverless infrastructure

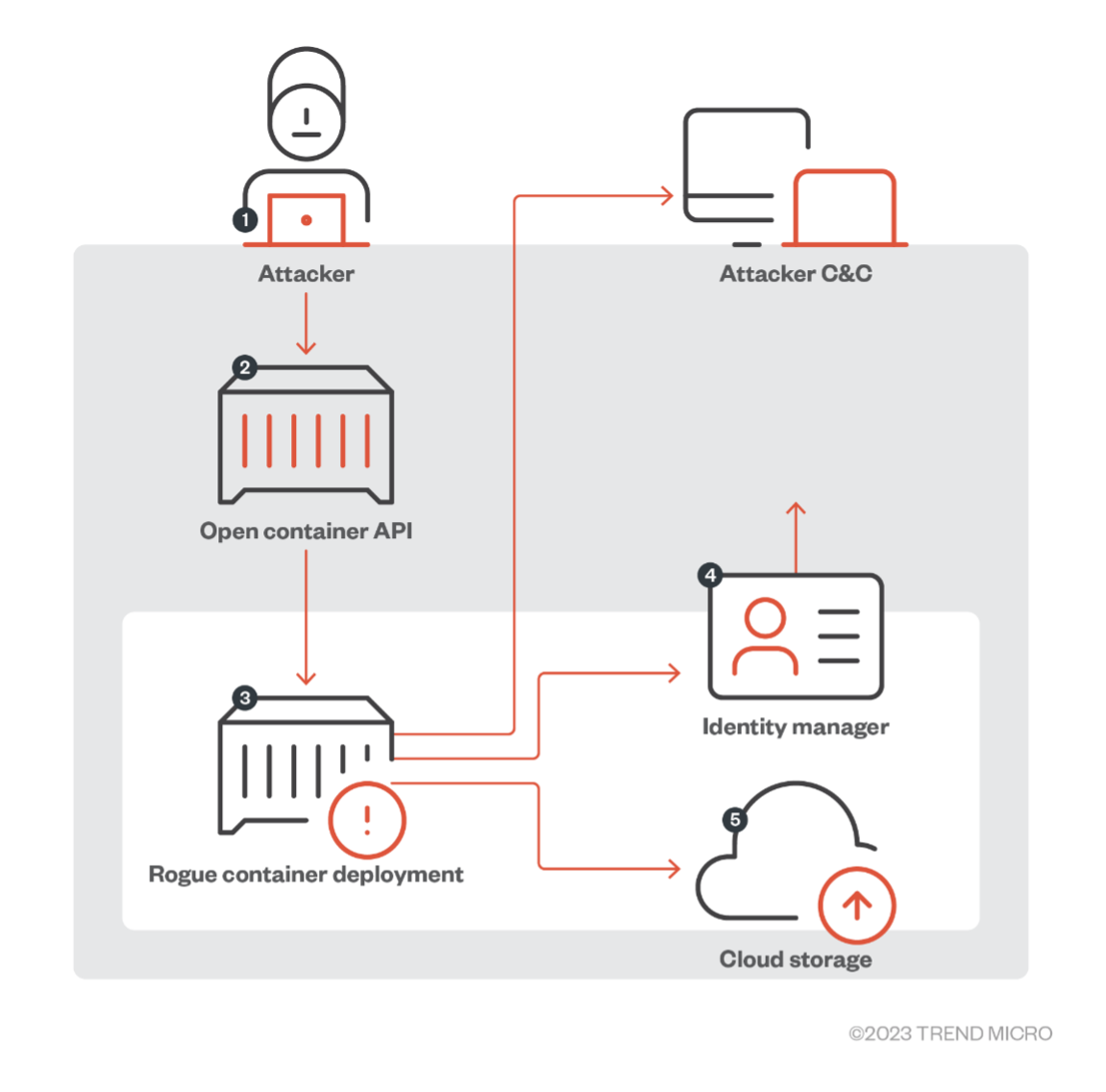

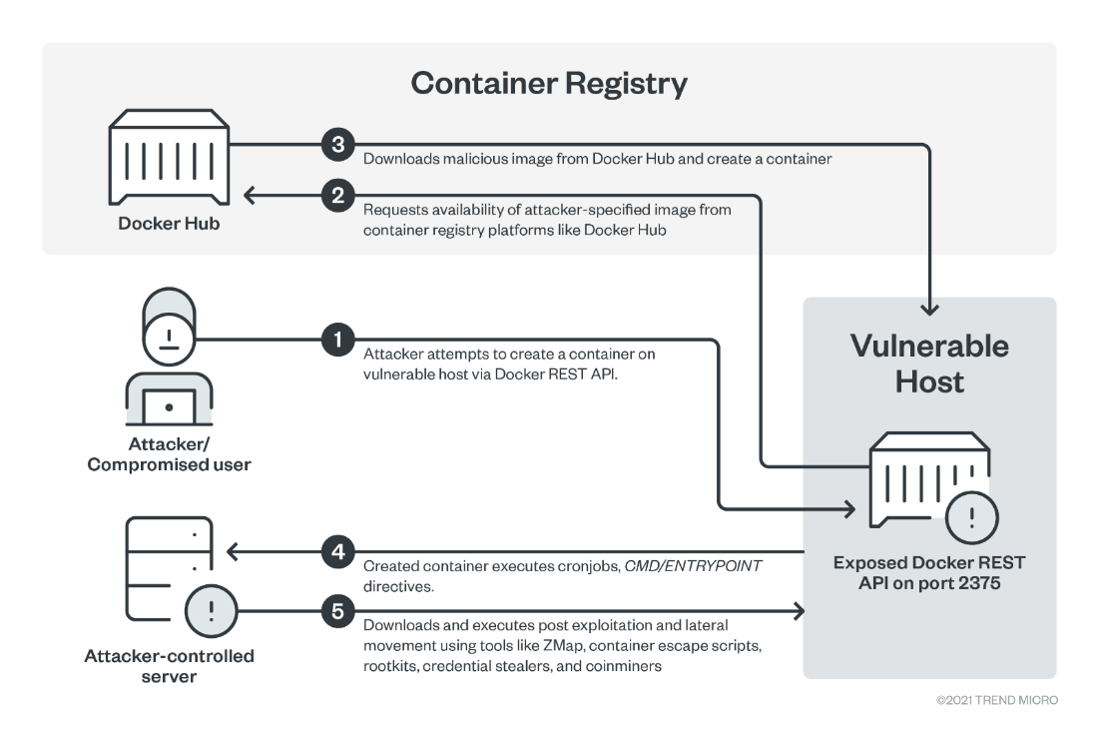

Another example of a challenging real-life attack on cloud-native environments is when an attacker uses an organization’s exposed container API to deploy a malicious container. From there, the malicious actor could gain access to the CSP’s internal API to gather more information about the organization’s account.

Figure 4. How an attacker can gather information on a victim organization via a rogue container deployment

In both cases, traditional security implementations are hard to put in place. Zero trust, which involves continuous monitoring of and visibility into all components within an environment, can help address these threats. Extended detection and response (XDR) solutions are well suited for continuous monitoring because they integrate data from multiple sources, including cloud logs, network traffic, and cloud workloads.
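Correlating events across sources is what turns individually weak signals into an alert. The Python sketch below, built on hypothetical normalized event records, links a container started from an image outside an approved list with a later request from that same container to the cloud instance metadata endpoint (169.254.169.254 on the major CSPs), similar in spirit to the rogue-container scenario in Figure 4. The field names, event kinds, and 10-minute window are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set

METADATA_IP = "169.254.169.254"  # instance metadata endpoint used by major CSPs
WINDOW_SECONDS = 600  # assumption: correlate events that occur within 10 minutes


@dataclass
class Event:
    ts: float           # Unix timestamp
    source: str         # e.g. "container-runtime" or "network" (hypothetical)
    kind: str           # e.g. "container_start" or "connection" (hypothetical)
    container_id: str
    image: str = ""
    dest_ip: str = ""


def correlate(events: Iterable[Event], approved_images: Set[str]) -> List[str]:
    """Return IDs of containers that ran an unapproved image, then contacted the metadata endpoint."""
    unapproved_start: Dict[str, float] = {}  # container_id -> start time
    alerts: List[str] = []
    for ev in sorted(events, key=lambda e: e.ts):
        if ev.kind == "container_start" and ev.image not in approved_images:
            unapproved_start[ev.container_id] = ev.ts
        elif (ev.kind == "connection" and ev.dest_ip == METADATA_IP
              and ev.container_id in unapproved_start
              and ev.ts - unapproved_start[ev.container_id] <= WINDOW_SECONDS
              and ev.container_id not in alerts):
            alerts.append(ev.container_id)
    return alerts
```

Legitimate workloads also query the metadata endpoint, which is why this sketch only alerts when the container's image is not on the approved list.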

The principle of least privilege

When it comes to cloud security, one of the most important security practices is least privilege access. This means that users and services should only have access to the resources and permissions they really need to perform their specific tasks. By limiting access to the minimum necessary, organizations can reduce the risk of unauthorized access that could lead to attacks and data breaches.
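One practical way to move toward least privilege is to compare what each identity is granted with what it has actually used over an observation period, then review the difference for removal. The Python sketch below assumes hypothetical inputs: a mapping of granted permissions per identity and a list of (identity, permission) pairs extracted from audit logs.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Iterable, Set, Tuple


def unused_permissions(granted: Dict[str, Set[str]],
                       used: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Return, per identity, the granted permissions never seen in the audit log."""
    seen: DefaultDict[str, Set[str]] = defaultdict(set)
    for identity, permission in used:
        seen[identity].add(permission)
    return {
        identity: perms - seen[identity]
        for identity, perms in granted.items()
        if perms - seen[identity]  # omit identities that used everything they hold
    }
```

Permissions that were never exercised are candidates for removal, though a longer observation window lowers the chance of revoking something needed only occasionally, such as a quarterly job.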

In the cloud, implementing the principle of least privilege can be especially challenging due to the complexity of cloud infrastructure. That's where Cloud Security Posture Management (CSPM) solutions come in. CSPM solutions enable organizations to identify vulnerabilities and misconfigurations that could lead to security issues. Think of it like a security guard for your cloud infrastructure.
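As an illustration of the kind of misconfiguration check a CSPM tool automates, this Python sketch flags `Allow` statements in an AWS IAM-style JSON policy document that grant every action (`*` or `service:*`) or apply to every resource (`*`). Real CSPM products evaluate many more rules (this one ignores `NotAction`, conditions, and so on); the function names and finding strings are assumptions.

```python
import json
from typing import Any, List


def _as_list(value: Any) -> List[Any]:
    """IAM accepts either a single value or a list for Statement, Action, and Resource."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def overly_permissive(policy_json: str) -> List[str]:
    """Return human-readable findings for Allow statements that use wildcards."""
    policy = json.loads(policy_json)
    findings = []
    for i, stmt in enumerate(_as_list(policy.get("Statement"))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements only narrow access
        for action in _as_list(stmt.get("Action")):
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {i}: wildcard action {action!r}")
        if "*" in _as_list(stmt.get("Resource")):
            findings.append(f"statement {i}: applies to all resources")
    return findings
```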

To implement a zero trust security approach, organizations need to start by adopting the principle of least privilege. Under zero trust, all users and systems are treated as potential threats until proven otherwise, and all access requests should be thoroughly vetted and authenticated before being granted. As part of a good defense strategy, all access requests should also be logged and audited, as these records can help identify suspicious activities.

Implementing least privilege can minimize the impact of the following two attack types:

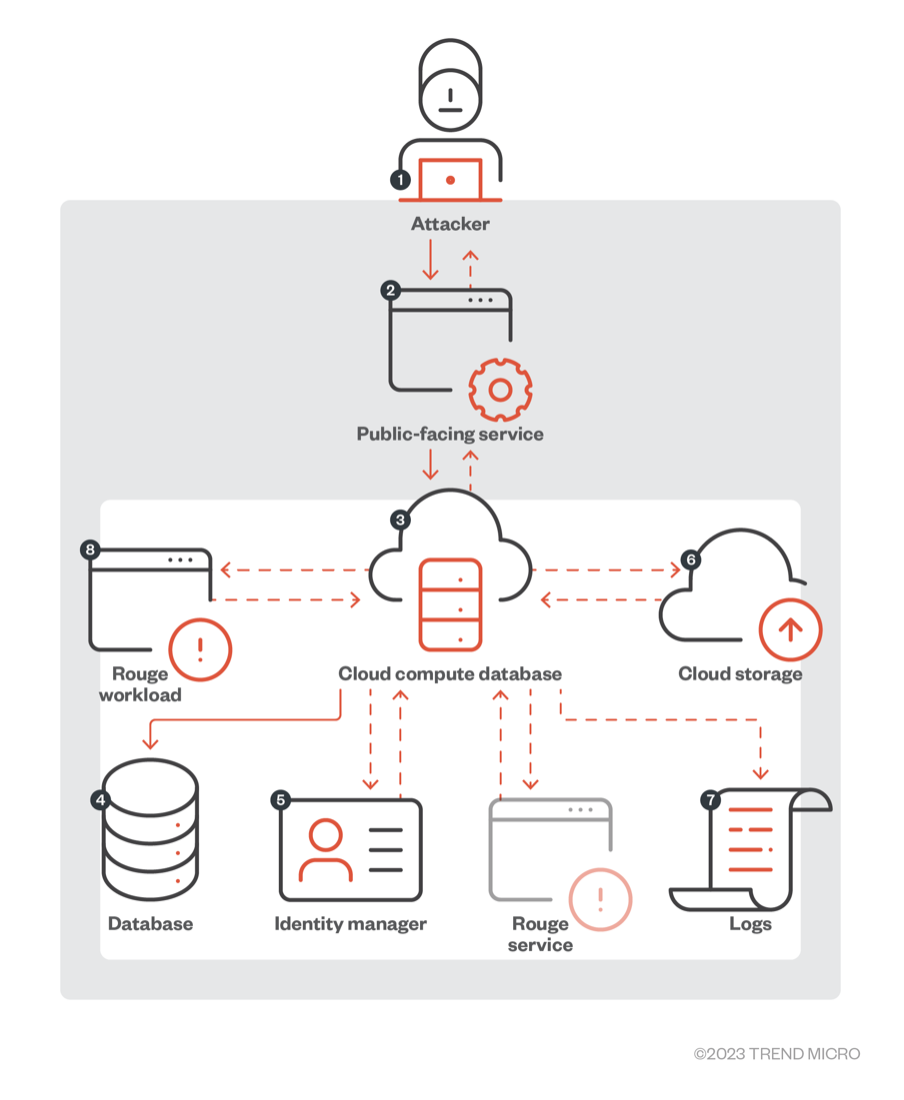

Privilege escalation within a cloud-native service

An attacker could exploit a public-facing service via an initial compromise, then use the service as a pivot for the next steps of the attack. In practical terms, an attacker could get inside a workload, find credentials in environment variables, and use those credentials to query the user management service for other credentials that could grant more privilege. When successful, the attacker can then change configurations, start new rogue services, and remove or disable the ability to monitor actions made inside the compromised service.

Figure 5. How an attacker can exploit a public-facing service

Lateral movement for a data breach

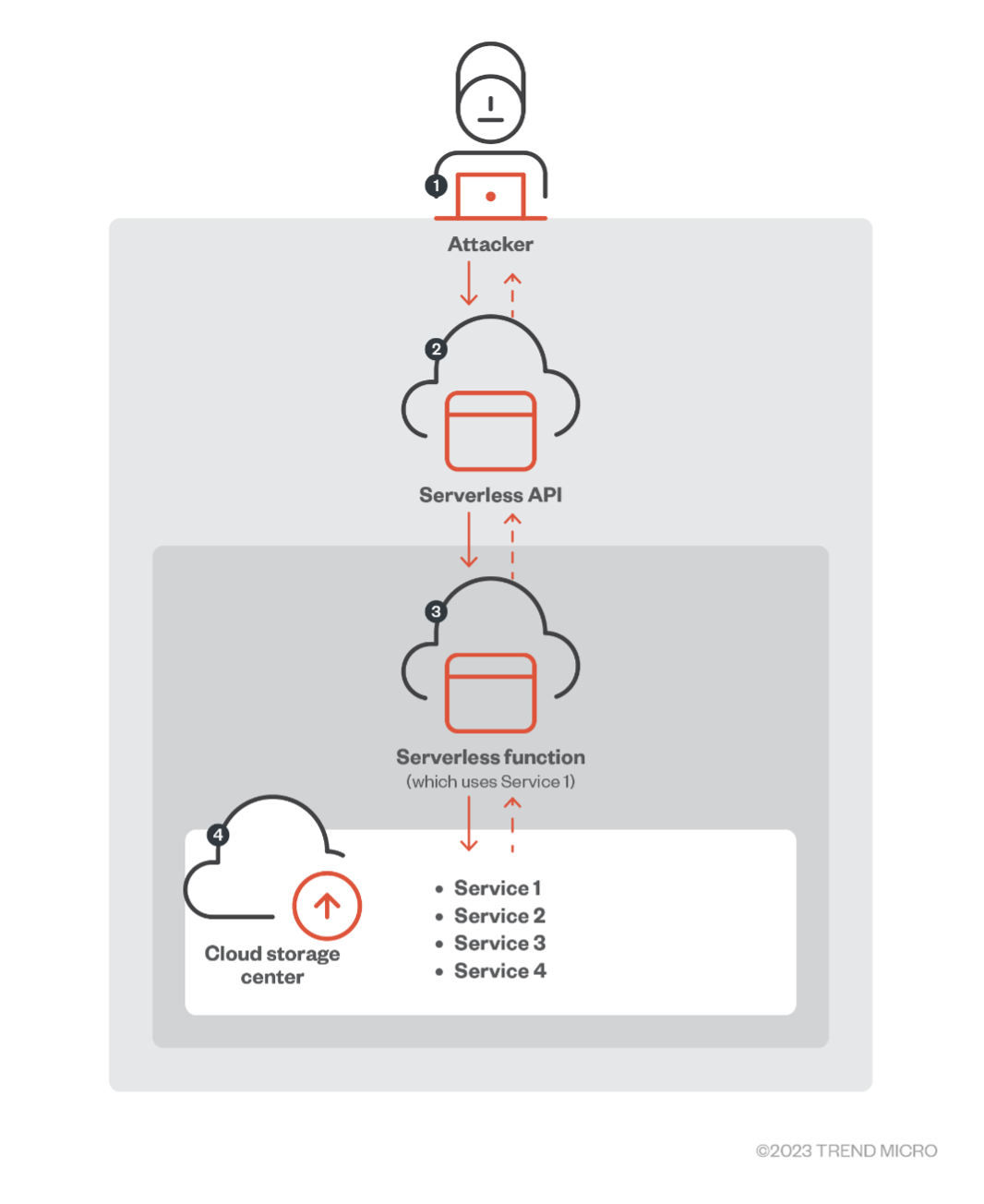

An attacker could exploit a serverless application and use the CSP’s internal API to check the application’s permissions. If the serverless application has read and write permissions on its cloud storage services, the attacker could upload a command-line interface (CLI) tool that not only queries the application’s permissions but also dumps or modifies the contents of those cloud storage services.

Figure 6. How malicious actors can abuse a serverless application for lateral movement

In both cases, the damage would be limited if the compromised service and the user that originated the tokens had reduced privileges across other services.

Implementing zero trust security in cloud-native environments

As previously mentioned, traditional security models aren’t always as effective when it comes to the cloud’s dynamic and distributed nature. However, organizations can use several strategies to implement zero trust security in the cloud:

Start with a strong identity foundation

A strong identity foundation is the first step to implementing zero trust security. Organizations need to ensure that they have a robust identity verification process, such as multi-factor authentication, in place. Where it makes sense, RBAC should also be used to assign permissions based on a user's role and responsibility. If a project offers different levels of access, whether through pages, forms, or APIs, the access should also be designed to be secure and restricted. Assigned permissions and access levels should be regularly reviewed and updated.

This level of granularity should be applied at every step of a project’s lifecycle, starting with granular access for all involved team members within the CSP. Earlier this year, we reported on how malicious actors are attacking supply chains by targeting developers.

We also previously discussed how cybercriminals can target authentication secrets that are improperly stored. If a secret is inadvertently leaked, the implementation of identity rules together with other security best practices can limit the extent of an attack. This is because each user or service is given access to only a portion of the resources, which hinders lateral movement.

Figure 7. Examples of credential storage methods for Amazon Web Services or AWS (top), Microsoft Azure (middle), and Google Cloud Platform or GCP (bottom)

Source: Trend Micro

Use encryption to protect sensitive data

It’s essential for organizations to make sure that sensitive data is encrypted both at rest and in transit. Encryption algorithms that are appropriate for the data’s level of sensitivity should be used, and encryption policies should be regularly reviewed and updated to ensure that they're still effective. All types of data should be assessed for their respective sensitivity levels, and all sensitive data should be encrypted.
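To make the sensitivity assessment concrete, the Python sketch below checks a hypothetical data-store inventory against an assumed policy (data classified as confidential or higher must be encrypted at rest, and no endpoint may accept anything older than TLS 1.2). It also builds a client-side `ssl.SSLContext` that keeps certificate and hostname verification on and enforces a TLS 1.2 minimum for data in transit. The sensitivity levels, field names, and thresholds are illustrative assumptions.

```python
import ssl
from dataclasses import dataclass
from typing import List

# Hypothetical sensitivity scale, from least to most sensitive.
LEVELS = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}
TLS_ORDER = {"TLSv1": 0, "TLSv1.1": 1, "TLSv1.2": 2, "TLSv1.3": 3}


@dataclass
class DataStore:
    name: str
    sensitivity: str         # one of LEVELS
    encrypted_at_rest: bool
    min_tls: str             # lowest TLS version the endpoint accepts


def violations(store: DataStore) -> List[str]:
    """Assumed policy: confidential and above need at-rest encryption; all need TLS 1.2+."""
    problems = []
    if LEVELS[store.sensitivity] >= LEVELS["confidential"] and not store.encrypted_at_rest:
        problems.append(f"{store.name}: {store.sensitivity} data is not encrypted at rest")
    if TLS_ORDER[store.min_tls] < TLS_ORDER["TLSv1.2"]:
        problems.append(f"{store.name}: accepts {store.min_tls}, below TLS 1.2")
    return problems


def client_tls_context() -> ssl.SSLContext:
    """Client-side context for data in transit."""
    ctx = ssl.create_default_context()  # verifies server certificates and hostnames
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

Running `violations` over the whole inventory on a schedule is one way to keep the regular policy reviews from depending on memory.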

Monitor network traffic and user activity

XDR tools can help organizations monitor network traffic and user activity. These tools help security teams look for anomalies and potential threats and allow them to quickly investigate suspicious activities. This is especially valuable for cloud-native projects or when using cloud services where logs might not be as accessible. Before development of any project begins, organizations should have all logging and monitoring tasks in place to help ensure that confidential details are not leaked.

When it comes to network traffic, it’s important to keep an eye out for attacks that might have been happening for an extended period and that have a direct impact on the company’s finances. Based on our observation of several hacking teams that specifically target cloud and cloud-native environments, we found that once these groups find a weakness, they won’t leave empty-handed, regardless of their attacks’ level of sophistication and end goal.

We have observed that most of these attacks follow the same steps:

- Identifying a public-facing service

- Identifying a weakness, such as a misconfiguration or a vulnerability

- Exploiting the identified weakness

- Depending on the system exploited, starting a malicious process or container that runs a cryptocurrency miner

- Further exploration or exploitation

By closely monitoring the network, organizations can identify these malicious activities and generate alerts, especially since the protocols and pools used by cryptocurrency miners are widely known. And given how many resources mining consumes today, a quick response is crucial to avoiding substantial financial loss.

Organizations should also adhere to industry-wide best practices, including using the MITRE Adversarial Tactics, Techniques, and Common Knowledge (ATT&CK) framework, a globally accessible knowledge base of adversary tactics and techniques based on real-world observations.

Figure 8. How malicious actors abuse cloud and cloud-native environments to mine cryptocurrency

Source: Compromised Docker Hub Accounts Abused for Cryptomining Linked to TeamTNT
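Because mining traffic tends to be distinctive, even simple network rules can surface it. The Python sketch below flags outbound flows whose first payload bytes contain Stratum (v1) mining-protocol method names (`mining.subscribe`, `mining.authorize`) or whose destination matches a blocklist of pool hosts or ports. The flow record format, the placeholder hostname, and the port list are assumptions; pool protocol dialects vary (Monero pools, for example, use a different login method), so a real deployment would rely on maintained threat-intelligence feeds rather than these fixed values.

```python
from dataclasses import dataclass
from typing import List

# Method names sent during a Stratum v1 handshake between a miner and its pool.
STRATUM_METHODS = (b"mining.subscribe", b"mining.authorize")
# Placeholder blocklists (assumptions); populate from a threat-intelligence feed in practice.
POOL_HOSTS = {"pool.example.net"}
POOL_PORTS = {3333, 4444, 5555, 14444}


@dataclass
class Flow:
    workload: str
    dest_host: str
    dest_port: int
    payload_head: bytes = b""  # first bytes of the client payload, if captured


def mining_indicators(flow: Flow) -> List[str]:
    """Return the reasons, if any, that a flow looks like cryptocurrency-mining traffic."""
    reasons = []
    if any(method in flow.payload_head for method in STRATUM_METHODS):
        reasons.append("stratum handshake in payload")
    if flow.dest_host in POOL_HOSTS:
        reasons.append("known pool host")
    if flow.dest_port in POOL_PORTS:
        reasons.append(f"port {flow.dest_port} commonly used by pools")
    return reasons
```

A port match alone is a weak signal and would be noisy by itself; it is most useful when combined with the payload or host indicators.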

Implement network segmentation

The following are effective ways to implement network segmentation in cloud-native environments:

Virtual private cloud (VPC) and subnets

Most CSPs provide a VPC service that allows the creation of a virtual network in the cloud. Within the VPC, organizations can create subnets and use network access control lists (ACLs) and security groups to control traffic between subnets. For example, an organization can have one subnet for their web application servers and another for their database servers. The organization can then restrict traffic between the two subnets to only what is necessary.
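The web-server and database-server example can be expressed as an allowlist evaluated with default deny. The Python sketch below uses the standard `ipaddress` module; the CIDR ranges, port 5432 (PostgreSQL's default), and rule format are assumptions for illustration, and the sketch does not reproduce the exact semantics of any CSP's security groups or network ACLs.

```python
import ipaddress
from dataclasses import dataclass
from typing import List

WEB_SUBNET = ipaddress.ip_network("10.0.1.0/24")  # hypothetical web tier
DB_SUBNET = ipaddress.ip_network("10.0.2.0/24")   # hypothetical database tier


@dataclass(frozen=True)
class Rule:
    src: ipaddress.IPv4Network
    dst: ipaddress.IPv4Network
    port: int
    protocol: str = "tcp"


# Only the web tier may reach the database tier, and only on the database port.
RULES: List[Rule] = [Rule(WEB_SUBNET, DB_SUBNET, 5432)]


def is_permitted(src_ip: str, dst_ip: str, port: int, protocol: str = "tcp") -> bool:
    """Allow a connection only if some rule explicitly matches it."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    return any(
        src in r.src and dst in r.dst and port == r.port and protocol == r.protocol
        for r in RULES
    )  # no matching rule means the traffic is denied
```

Note that the rule is directional: a database server starting a connection to the web tier is denied, because nothing allows it.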

Microsegmentation

Microsegmentation is a method that allows network segmentation down to the individual workload or application level. This security technique gives organizations finer-grained control over network traffic and access. For example, organizations can use microsegmentation to block traffic between two specific workloads that have different levels of sensitivity.

It's worth noting that implementing network segmentation in a cloud-native environment can be challenging. If an organization has an application that uses serverless functions, applying network segmentation without negatively affecting the application’s performance can be difficult. Unfortunately, attackers could use a serverless function as a point of entry to gain access to a cloud account, move laterally across the network, and reach sensitive data. To prevent this type of attack, organizations can place serverless functions in their own separate VPC and then allow only necessary traffic to and from that VPC. We recommend that organizations use a combination of techniques, such as VPCs, Kubernetes network policies, and microsegmentation, to implement network segmentation effectively.
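For containerized workloads, Kubernetes network policies are one way to apply microsegmentation at the pod level. The Python sketch below builds two `networking.k8s.io/v1` NetworkPolicy manifests and prints them as a JSON `List` (which `kubectl apply -f` accepts alongside YAML): a default-deny ingress policy for a namespace, and a rule that admits only pods labeled `app: api` to pods labeled `app: db` on TCP port 5432. The namespace, labels, and port are hypothetical, and the policies take effect only if the cluster's network plugin enforces NetworkPolicy.

```python
import json
from typing import Any, Dict

NAMESPACE = "payments"  # hypothetical namespace


def default_deny_ingress(namespace: str) -> Dict[str, Any]:
    """Select every pod in the namespace and list no ingress rules, so all ingress is denied."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_api_to_db(namespace: str, port: int = 5432) -> Dict[str, Any]:
    """Admit traffic to app=db pods only from app=api pods, on a single TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-api-to-db", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": "db"}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": "api"}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    # A v1 List lets kubectl apply both policies from a single file.
    manifest = {"apiVersion": "v1", "kind": "List",
                "items": [default_deny_ingress(NAMESPACE), allow_api_to_db(NAMESPACE)]}
    print(json.dumps(manifest, indent=2))
```

Starting from default deny and adding narrow allow rules mirrors the zero trust stance: pods in the namespace accept no traffic until a policy explicitly permits it.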

Conclusion and security recommendations

Cloud environments offer many benefits, including scalability, flexibility, and cost savings. However, they also present unique security challenges that must be addressed to remain protected against cyberthreats.

To mitigate the risks associated with cloud-native security threats, it's important to adopt a comprehensive strategy that incorporates all aspects of cloud security. This strategy should include implementing zero trust security, using cloud-native security tools, securing access, and implementing network segmentation.

In addition, organizations should recognize that vulnerabilities are not the only security concern in the cloud-native world. Cloud environments are also exposed to other types of attacks and risks, such as phishing, social engineering, misconfigurations, and data leaks. Thus, it's crucial for organizations to have security measures in place that address all types of threats and potential weaknesses.

Finally, it's essential to recognize that security in the cloud is a shared responsibility. CSPs are responsible for securing the underlying infrastructure, while customers are responsible for securing their own resources and data. It's important for organizations to have an in-depth understanding of the appropriate measures they should take to secure the operational tasks for which they are responsible.

By following these best practices and adopting a comprehensive approach to cloud security, organizations can strengthen the resilience of their cloud-native environments and their ability to withstand cyberthreats.

The Trend Micro Apex One™ solution offers threat detection, response, and investigation within a single agent. Automated threat detection and response provide protection against an ever-growing variety of threats, including fileless threats and ransomware. An advanced endpoint detection and response (EDR) toolset, strong security information and event management (SIEM) integration, and an open application programming interface (API) set provide actionable insights, expanded investigative capabilities, and centralized visibility across the network.

Trend Micro Cloud One™ – Endpoint Security and Workload Security protect endpoints, servers, and cloud workloads through unified visibility, management, and role-based access control. These services provide specialized security optimized for your diverse endpoint and cloud environments, which eliminate the cost and complexity of multiple point solutions. Meanwhile, the Trend Micro Cloud One™ – Network Security solution goes beyond traditional intrusion prevention system (IPS) capabilities, and includes virtual patching and post-compromise detection and disruption as part of a powerful hybrid cloud security platform.
