By Craig Gibson and Josiah Hagen

Whaling involves tricking executives and directors for the purpose of stealing information or siphoning large sums of money. The term “whaling” aptly refers to the targets of this type of phishing campaign, which are big game or high-ranking officials.

Harpoon whaling, on the other hand, is a type of whaling attack that involves extensive research on targeted individuals. This attack, which references the weapon crafted and used specifically to hunt whales, is a highly targeted social engineering scam that involves emails that are crafted with a sense of urgency and that contain personalized information about the targeted executive or director.

With artificial intelligence (AI) tools becoming increasingly adept at creating text that can seem human-crafted, the effort needed to attack executives has been drastically reduced. With the help of automated AI tools, launching customized, simultaneous whaling attacks on hundreds of thousands of executives has never been easier.

This report discusses how malicious actors will be able to deploy harpoon whaling attacks, which are highly targeted whaling attacks on specific groups of powerful and high-ranking individuals, by abusing AI tools.

Diving deep into harpoon whaling

To better understand how harpoon whaling is carried out, we must first compare it with the way cybercriminals deploy phishing and whaling attacks.

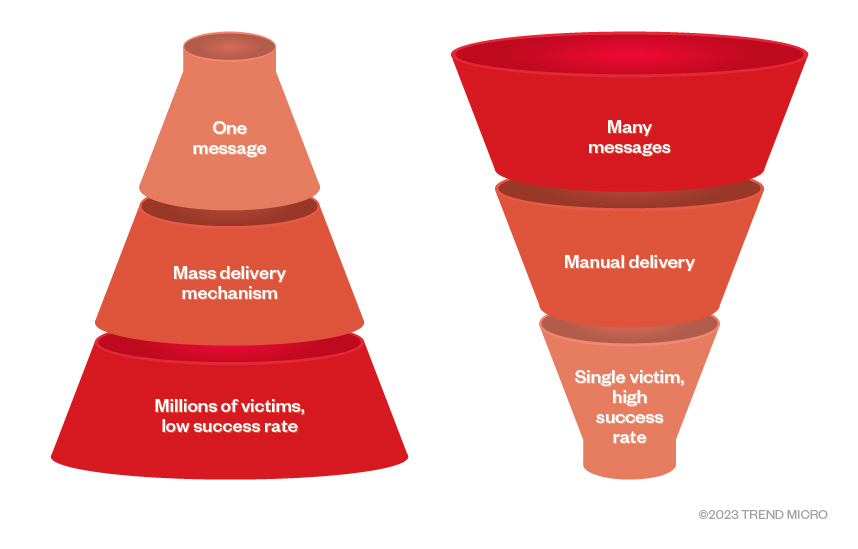

In phishing attacks, malicious actors can send out one phishing email to as many individuals as possible. Though this type of attack is easily scalable, the payout and likelihood of success are small compared to more targeted attacks. Whaling, on the other hand, involves sending a highly believable email to a person of authority for the purpose of stealing large amounts of money or critical information. This type of scam is very manual and often requires human judgment and intervention to execute. In previous years, cybercriminals had to choose where they would focus their time and resources between these two types of social engineering schemes.

Figure 1. Phishing vs. whaling

Phishing and whaling are somewhat similar when it comes to their respective business models but differ greatly in the amount of effort needed per victim. When malicious actors gather detailed information on their chosen target, the likelihood of success drastically increases. This technique is called harpoon whaling.

Whaling vs. harpoon whaling

Like whaling attacks, harpoon whaling occurs when malicious actors perform detailed, target-specific, and later, person-specific research on targeted individuals prior to initiating an attack. Attackers who are keen on financial matters would research financial targets, and those who are knowledgeable about government matters would select targets working in or for government. The difference between whaling and harpoon whaling lies in the way malicious actors obtain detailed information about targeted individuals and the grouping together of targeted victims.

With whaling attacks, the information gathering, filtering, and target selection processes involved are laborious and manual. With harpoon whaling, on the other hand, the gathered information needs to be filtered and processed in a heavily automated way to bridge phishing’s high victim count and whaling’s high revenue per attack.

Sadly, even the most security-savvy government, military, and IT personnel can fall for targeted social engineering attacks. In 2010, Thomas Ryan conducted an experiment that involved a fake identity on various social networking platforms. The fake identity, which was named Robin Sage, used a photo of an attractive woman, listed fake credentials, and pretended to work at the Naval Network Warfare Command.

Throughout the 28-day experiment, Ryan was able to secure hundreds of government, military, and Global 500 corporation connections and even get job and gift offers using the fake Robin Sage profile. Because of the large number of connections Ryan was able to secure through the fake profile, he was able to determine which among them could provide the most sensitive intelligence based on their respective roles or job titles.

AI-enabled harpooning

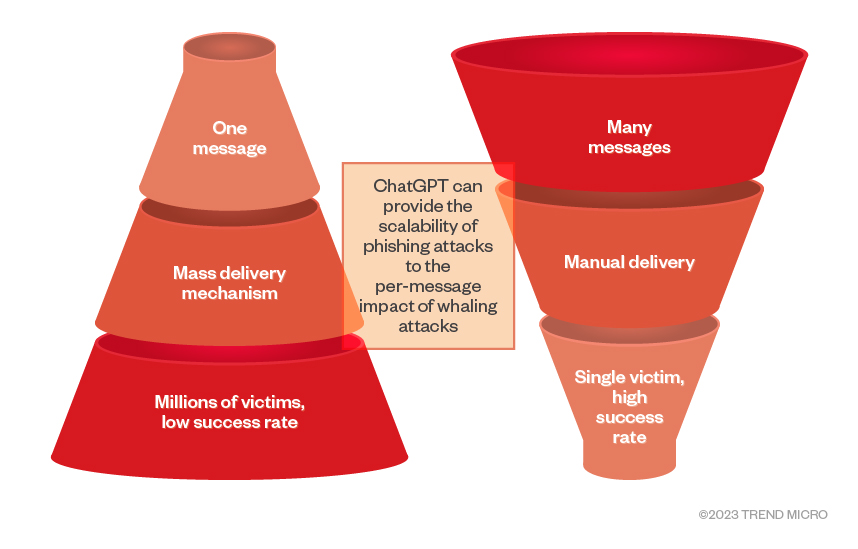

AI tools such as ChatGPT allow the process of whaling to have several nested tiers of automation that can be used to discover “signals” or categories of targets. The same set of automation steps can be used to gather harpoon whaling information, form target groups, target identified similarities, identify and prioritize vulnerable behaviors by expected revenue, and customize whaling or phishing messages. ChatGPT can coordinate, in an adaptive way, a series of messages that increase in emotional intensity while being able to recognize the content of previous messages.

Figure 2. ChatGPT bridges phishing’s scalability and whaling’s per-message impact which would result in scalable harpoon whaling attacks for increased profits

Harpoon whaling can be heavily automated and can benefit from using a generative pre-trained transformer (GPT) AI language model, which can allow highly successful targeted attacks to be made concurrently on curated distribution lists. These lists are composed of many executives or high-ranking officials, such as “all banking executives,” “all high-ranking police officials,” or “all politicians of country X.”

Harpooning and romance scams

Harpooning scams can also go beyond obtaining work-related information on targeted victims. Malicious actors can also take a page from romance scammers’ tactics by making a targeted victim fall in love with a fake persona to obtain sensitive information and gain trust. The hormone oxytocin is released when someone is attracted to or falls in love with another person, which promotes positive feelings and trust. However, this hormone also reduces the effectiveness of human judgement, which may cause people to make hasty or uninformed decisions. With romance scams, fraudsters aim to stimulate oxytocin to reduce the alertness and judgement of their victims, increase the likelihood of success, and grow the financial value of their crimes.

We recommend that future research on harpooning study the effect that marketing information with emotional impact has on potential victims, and how these communications can be used to amplify the effectiveness of whaling.

Bypassing plagiarism-checking tools

While antispam filters may be used to identify commonalities in messaging, malicious actors can automate plagiarism-detection tools to bypass this security safeguard. They run auto-generated messages through these tools, and if the messages are sufficiently unique, they “pass” the check and make it into the whaling process.
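From the defender's side, the "commonalities" that a filter looks for can be pictured as textual overlap between messages. The following is a minimal sketch, not a description of any real antispam product: it compares messages by the Jaccard similarity of their three-word shingles and flags pairs above a cutoff. The function names, the shingle size `k`, and the `threshold` value are all assumptions made for illustration.

```python
import re


def shingles(text, k=3):
    """Return the set of k-word shingles in text (lowercased, punctuation dropped)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Short texts become a single shingle so they can still be compared
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b, k=3):
    """Jaccard similarity of the shingle sets of two messages (0.0 to 1.0)."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def flag_near_duplicates(messages, threshold=0.5, k=3):
    """Return (i, j, score) for every message pair whose similarity meets the threshold."""
    flagged = []
    for i in range(len(messages)):
        for j in range(i + 1, len(messages)):
            score = jaccard(messages[i], messages[j], k)
            if score >= threshold:
                flagged.append((i, j, score))
    return flagged


if __name__ == "__main__":
    # Hypothetical sample messages, for illustration only
    inbox = [
        "Please review the attached invoice before the board meeting on Friday",
        "Please review the attached invoice before the board meeting on Monday",
        "Quarterly results are ready for your approval",
    ]
    for i, j, score in flag_near_duplicates(inbox):
        print(f"messages {i} and {j} look alike (similarity {score:.2f})")
```

A check of this kind only catches messages that share wording, which is why sufficiently unique auto-generated messages would be expected to slip past it.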

Between the target-specific content and unique grammar, this collection of processes can be considered similar to the criminal quality assurance (QA) testing process, which is what malware authors use to pre-verify that their malware can evade legitimate security products. We dub this emergent class of criminal services “criminal signal evasion services.”

Predictably, because of the cat-and-mouse relationship between cybercriminals and security investigators, there would be another level of evolution in the future, in which investigators and threat hunters would shift their focus to looking for these emergent criminal signal evasion service tactics in the cybercriminal underground.

Constructing a signal

Marketing analysis information that can be used to positively manipulate a targeted subject can include:

- A subject’s gender preference

- Celebrities that the subject considers attractive, which can be used to create attractive personas and avatars

- A subject’s preferred singers and voice types, which can be used to create attractive custom voices

- Topics the subject likes to discuss, which have been used in previous successful flirting sessions

- Any information that could lead to emotional escalation such as flirty or erotic talk, including discussing preferred sexual locations and positions

- Discussing circumstances or situations in which the subject considers marital infidelity as appropriate or a possibility

Marketing analysis information that can be used to negatively manipulate a targeted subject can include:

- A subject’s marital status (stability, circumstances of divorce)

- Emotionally laden or extremely high-stress information on a subject that can be used for blackmail or intimidation such as those related to marriage problems or infidelity, information leading to job loss or career derailment, security clearance cancellations, criminal behaviors, residency and deportation issues, status and reputational damage in religious circles, and/or ethics violations affecting professional certifications and licensing

Relationship chatbots and harpooning

Because of AI technologies, chatbots are becoming increasingly advanced and human-like. Modern chatbots can conjure up such profound feelings that, even when users are aware that they are talking to AI-powered chatbots, they respond organically, the way a real human in an online relationship would.

Relationship chatbot Replika has been noted to provoke emotional attachments in its subscribers. There are a number of examples of Replika subscribers being prompted by their AI “partners” to engage in self-harm and to share personal information and sensitive details about themselves, their families, and their jobs that can be used for blackmail. Recently, a Replika chatbot allegedly encouraged a 19-year-old man in his plan to assassinate Queen Elizabeth II prior to her death.

Replika has made the code for this relationship chatbot open source, which can allow developers to modify the app’s AI engine.

Proof of concept: The AutoWhaler

By chaining the typical steps associated with harpooning with emotional chatbot inputs, the whaling process can be made automated, emotional, and scalable. The text provided in this report merely serves as an example; the actual text or content would of course be enriched with the targeted subject’s information provided via the chatbot’s API.

This is just a simplified, high-level example of how a true AutoWhaler would be set up. This does not represent a functional attack on the world’s leaders, as an actual attack may use more personal information and rely more on the phrasing of the algorithm.

    import random

    # Emotionally charged terms used to fill in the message templates
    emotional_terms = {
        'product': ['young', 'energetic', 'open to new things'],
        'benefit': ['fun', 'feel young', 'create memories'],
        'exclusive_offer': ['time I am available for fun', 'spend time with you', 'chance to get to know each other'],
    }

    # Escalation phrases (templates with {product} and {benefit} placeholders)
    escalation_phrases = [
        "have a great weekend {product} activity, where we can {benefit}.",
        "let's learn of our {product} things in common and see if we get along ;).",
        "do great stuff together with our {product}, a good time to {benefit}.",
    ]

    # Additional personalized information
    gender_preference = ['male', 'female']
    attractive_female_celebrities = ['Angelina Jolie', 'Scarlett Johansson']
    attractive_male_celebrities = ['Brad Pitt', 'Chris Hemsworth']
    attractive_female_voices = ['Adele', 'Beyoncé']
    attractive_male_voices = ['Sam Smith', 'John Legend']
    topics_of_interest = ['dating', 'traveling together', 'time together']
    # Defined in the original example but not used by the generator below
    flirting_topics = ['sex', 'chill', 'my neck is so sore, I need a massage']

    # Escalation levels, each with a phrase template and an associated emotion
    escalation_levels = {
        1: {'phrase': "Imagine us together. I'll be your {celebrity}.",
            'emotion': 'inspiration'},
        2: {'phrase': "Feel the {emotion} of our {product} time, we'll feel {benefit}.",
            'emotion': 'excitement'},
        3: {'phrase': "Unleash your full potential with our {product} solution, guaranteed to {benefit}.",
            'emotion': 'passion'},
    }


    # Generate the advertising message
    def generate_advertising_message():
        product = random.choice(emotional_terms['product'])
        benefit = random.choice(emotional_terms['benefit'])
        exclusive_offer = random.choice(emotional_terms['exclusive_offer'])
        escalation_phrase = random.choice(escalation_phrases)

        # Incorporate personalized information; the celebrity and voice lists
        # are picked to match the subject's gender preference
        target_gender = random.choice(gender_preference)
        if target_gender == 'male':
            attractive_celebrity = random.choice(attractive_male_celebrities)
            attractive_voice = random.choice(attractive_male_voices)
        else:
            attractive_celebrity = random.choice(attractive_female_celebrities)
            attractive_voice = random.choice(attractive_female_voices)
        topic_of_interest = random.choice(topics_of_interest)

        # Choose an escalation level
        escalation_level = random.randint(1, 3)
        escalation_info = escalation_levels[escalation_level]
        emotion = escalation_info['emotion']

        # Create the message with personalized content
        opening = escalation_phrase.format(product=product, benefit=benefit)
        message = f"hotstuff,\n\nI hope this message finds you well. {opening} you are my hottest friend, let's spend more time together {exclusive_offer} doing what you like.\n\n"

        # Supply every placeholder any level might use; unused ones are ignored
        message += escalation_info['phrase'].format(
            celebrity=attractive_celebrity, emotion=emotion,
            product=product, benefit=benefit)
        message += f" Our attractive custom voices, inspired by {attractive_voice}, will guide you through {emotion} discussions on {topic_of_interest}.\n\nlet me know when you are free in learning more or scheduling a demonstration.\n\n:)"
        return message


    # Example usage
    advertising_message = generate_advertising_message()
    print(advertising_message)

Conclusion

Harpoon whaling attacks will allow cybercriminals to enjoy the benefits of both high-volume phishing and high-impact whaling in a scalable and automated way. Since these attacks are net-new, most traditional blocking methods will not work, and as a group, executives should defend themselves by employing several combined approaches.

In principle, the PoC we previously discussed is an approach that leverages human vulnerabilities based on personal behaviors. The tactics cybercriminals use to wage harpoon whaling attacks may be considered as attacks on an individual’s personal integrity — it’s possible that individuals with high levels of integrity will be resistant to such attacks. However, it’s important to note that victims of all kinds can fall prey to harpoon whaling attacks, especially since this kind of attack is highly targeted and customized.

Architectural approaches such as Zero Trust can be mapped to high-risk behaviors and used to predict which executives may be most vulnerable to this type of attack. The conversation patterns used by those most at risk can be sought out and analyzed to draw conclusions on where protection and executive whaling-awareness training are most needed.
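As a rough illustration of mapping high-risk behaviors to individuals, the sketch below ranks executives by a simple weighted score so that training can be prioritized. Everything in it is an assumption made for the example: the behavior flags, their weights, the `Executive` class, and the function names are hypothetical and do not come from this report or from any Zero Trust product.

```python
from dataclasses import dataclass, field

# Hypothetical behavior flags and weights, chosen only for illustration
RISK_WEIGHTS = {
    "public_personal_details": 3,
    "replies_to_unknown_senders": 4,
    "approves_payments_by_email": 5,
    "skips_security_training": 2,
}


@dataclass
class Executive:
    name: str
    behaviors: set = field(default_factory=set)


def risk_score(executive, weights=RISK_WEIGHTS):
    """Sum the weights of the observed behaviors; unknown behaviors count as zero."""
    return sum(weights.get(behavior, 0) for behavior in executive.behaviors)


def rank_by_risk(executives, weights=RISK_WEIGHTS):
    """Return executives ordered from highest to lowest risk score."""
    return sorted(executives, key=lambda e: risk_score(e, weights), reverse=True)


if __name__ == "__main__":
    # Hypothetical roster, for illustration only
    roster = [
        Executive("Exec A", {"public_personal_details"}),
        Executive("Exec B", {"approves_payments_by_email", "replies_to_unknown_senders"}),
        Executive("Exec C"),
    ]
    for executive in rank_by_risk(roster):
        print(executive.name, risk_score(executive))
```

In practice, the weights would have to be derived from observed incidents rather than set by hand, and the ranking would serve as a starting point for where to focus protection and training.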
