A1. Agency: The ability to perform actions on behalf of the user.

A1.2 – Action chaining. The DA is able to chain together actions from one command.

By Salvatore Gariuolo and Rainer Vosseler

Key Takeaways:

- The newly released ChatGPT agent offers upgraded capabilities, and, with this, security challenges that traditional assistants never had to face.

- The agent has a heightened potential for manipulation and is more susceptible to privacy risks, leaving organizations exposed to unintended actions and possible data leakage.

- Organizations and individuals planning to leverage the agent’s capabilities should maintain active user supervision to help manage these risks.

- Trend Micro’s Digital Assistant Framework offers a clear way to map these new capabilities to the risks they introduce - helping the reader better assess the impact of OpenAI’s latest assistant.

OpenAI’s introduction of ChatGPT agent marks a quiet yet significant shift in what artificial intelligence (AI) systems can do. Unlike earlier assistants, which focused on answering questions or generating media, this new agent goes further. It can take actions on behalf of users: managing calendars, sending emails, running code, and interacting with external applications.

Simply put, this new assistant doesn’t just assist— it acts.

This evolution brings clear benefits. It makes digital assistants (DAs) more versatile and capable of handling tasks across systems and apps. But it also introduces new risks, as AI begins to act autonomously, interacting directly with the services that power our digital lives.

This blog focuses on two topics. First, we examine what OpenAI has released and map out the capabilities of the new agent using Trend Micro’s Digital Assistant Framework, introduced in our earlier research. Then, by comparing these capabilities to those of the earlier ChatGPT assistant, we show how much has changed and what risks that leap forward introduces.

These risks are neither theoretical nor distant. OpenAI’s new agent is already live, and it changes the threat landscape in ways that demand our attention.

OpenAI’s ChatGPT Agent: An Assistant That Acts

The new ChatGPT agent isn’t just another version of a chatbot. It’s an assistant that can now act autonomously, carrying out complex, multi-step tasks from start to finish - which brings it closer to Agentic AI.

It can plan and book travel, check calendars to brief users on upcoming client meetings, and even create editable presentations. It does all these using a virtual computer that lets it navigate websites, generate and execute code, and synthesize information, seamlessly blending reasoning and action in ways that previous assistants could not.

Therefore, users are no longer passive recipients of answers; they actively collaborate with the agent, while approving key actions and taking back control when needed.

The agent understands both written and spoken input, responds with text-based outputs and visual content, and is available on portable devices such as smartphones, making it accessible whenever needed. It brings together the strengths of prior specialized tools (web interaction, deep research, and conversational fluency) into a unified system that acts on the user's behalf.

Under the hood, the agent demonstrates advanced planning and reasoning capabilities: it can adapt to unfamiliar tasks, learning along the way. It builds a working understanding of the user over time, learning preferences, and context.

And because it integrates directly with external services like email, digital calendars, and other web platforms, it operates as part of a wider digital ecosystem - not just answering questions but executing tasks across real-world applications.

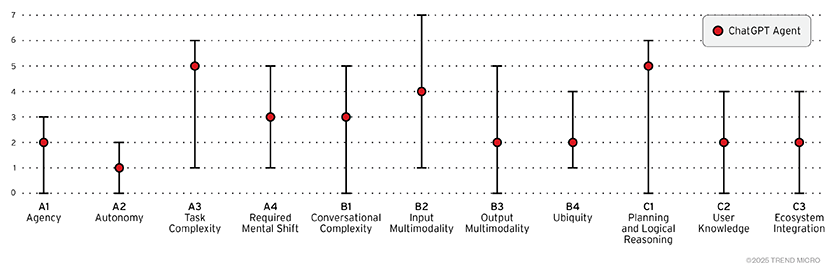

Mapping ChatGPT Agent’s Capabilities Using Trend Micro’s Digital Assistant Framework

To recap the capabilities of OpenAI’s new assistant and present them in a clear, easy-to-visualize way, we now turn to Trend Micro’s Digital Assistant Framework: a tool designed to evaluate the distinctive capabilities of DAs.

The framework was first introduced in our December 2024 article. Since then, we’ve updated the Agency (A1) capability by splitting it into two: agency and autonomy. The levels of autonomy are defined as follows:

A2. Autonomy

The autonomy capability is defined as the DA’s ability to execute decisions without human input.

- A2.0 – Reactive.The DA only acts on user requests.

- A2.1 – Supervised work.The DA operates under supervision, requiring explicit user confirmation.

- A2.2 – Full autonomy.The DA manages tasks independently, without user oversight.

The interactive media below shows how the new ChatGPT agent maps to the framework, highlighting its key features and levels of advancement. To see the description of each capability and the current levels for the ChatGPT agent, hover or click on the pins.

Figure 1. Mapping ChatGPT Agent’s Capabilities to Trend Micro’s Digital Assistant Framework

Capabilities and Risks: Understanding the Leap Forward

The leap in capability brought by OpenAI’s new agent doesn’t come without cost. As the assistant becomes more autonomous and more deeply integrated into our digital ecosystem, the risks grow alongside the benefits.

To understand these risks clearly, we first need to look at what’s changed: how the new agent builds on earlier versions of ChatGPT, and how these added capabilities open the door to new challenges, ones that traditional assistants never had to face.

Autonomy (A2) Capability: Associated Risks

Earlier versions of ChatGPT already showed some degree of agency as they chose how best to fulfil a user’s request. But those assistants remained fundamentally reactive; that is, they waited for explicit instructions before taking action.

The new agent builds on that foundation but takes a clear step forward in autonomy (A2). It doesn’t just act on the user’s behalf— it does so proactively, managing tasks with minimal supervision rather than requiring constant direction.

As we explained in our Agentic AI research, this is precisely the kind of shift that sets apart assistants from truly agentic systems. While traditional assistants like Gemini remain reactive, the new ChatGPT agent begins to cross that threshold, taking initiative and managing tasks with minimal input.

But that increase in autonomy introduces new risks, risks that earlier versions of ChatGPT were never exposed to. Because the system now operates with less user oversight, malicious actors could manipulate its actions in ways users might not immediately notice, making it easier for the agent to work against the user’s intentions.

Task Complexity (A3) Capability: Associated Risks

This danger is amplified by the complexity of tasks (A3) that the agent can perform. Unlike previous versions, which were limited to giving instructions, the new agent takes concrete actions on behalf of its user: some of which can lead to irreversible consequences, such as deleting files unintentionally, emailing the wrong person, or ordering unintended items.

To mitigate these risks, OpenAI has implemented a series of safeguards: the agent asks for explicit user confirmation before critical actions with real-world consequences, like making a purchase; requires active supervision for certain sensitive tasks, like sending out an email; and blocks high-risk operations, like bank transfers. While the agent could technically operate unsupervised, doing so would put users and organizations at serious risk.

That’s because the agent can now be exploited in ways even OpenAI cannot fully anticipate. While previous versions of ChatGPT followed simpler and more predictable chains of thought, the new agent can autonomously learn new domains and reason within them. As a result, it can exhibit complex behaviours even in entirely unfamiliar scenarios.

Planning and Logical Reasoning (C1) Capability: Associated Risks

However, that same ability to plan and reason (C1) across unfamiliar domains makes the agent more vulnerable to prompt injections and other subtle manipulations that can influence its decisions. For instance, an attacker could embed a malicious prompt within a webpage the agent visits - hidden in text or metadata - steering its actions toward unintended outcomes.

That’s why it can’t be stressed enough that keeping the agent under user supervision remains essential to ensuring safety as agentic AI continues to evolve. Still, having a human in the loop doesn’t eliminate all risks. Repeated prompts can result in consent fatigue, leading users to approve actions reflexively.

User Knowledge (C2) and Ecosystem Integration (C3) Capabilities: Associated Risks

Another advance in the agent’s capabilities lies in its deeper knowledge of the user (C2). Unlike ChatGPT, which holds only static information such as language preference and location, the new agent learns continuously through interaction. It detects patterns in user behaviour and preferences, adapting over time to better meet individual needs.

At the same time, it integrates far more seamlessly with the user’s digital ecosystem (C3). Earlier assistants could only interact with specific tools and often required users to manually bridge services. Now, the new ChatGPT agent can natively combine multiple sources of information in a single workflow. For example, it might scan the user’s calendar for availability, check recent emails for relevant updates, and suggest follow-up actions – all without relying on external tools to bridge the gap.

At first glance, this shift might seem incremental. But the implications are far-reaching, especially when it comes to privacy. If compromised, the agent could reveal what it has learned about its user. And because the agent connects directly to the broader digital ecosystem, a breach could expose highly sensitive information from connected accounts and logged-in websites.

To address this, OpenAI has introduced tighter privacy controls: users can clear browsing history and log out of active sessions. The agent also avoids storing sensitive inputs like passwords when operating in autonomous mode. Still, as the agent grows more capable and more deeply embedded, it becomes vital for users to limit its access to what’s truly necessary, striking a careful balance between convenience and control.

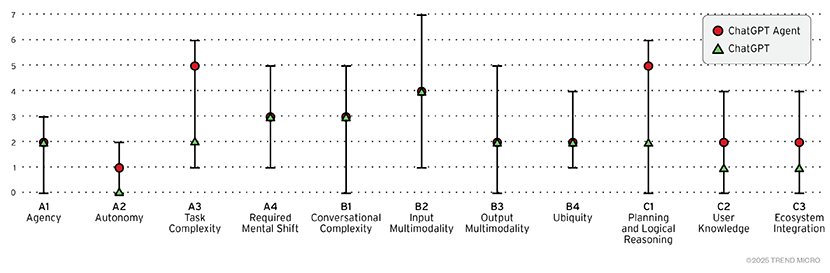

The image below illustrates the shift in capabilities, using our framework to compare OpenAI’s new agent to ChatGPT. What stands out is how even small advances in capabilities (such as autonomy, user knowledge, and ecosystem integration) have the potential to dramatically reshape the risk landscape.

Figure 2. Comparing ChatGPT Agent’s Capabilities to those of the earlier versions of ChatGPT

It’s important to note that both also share a range of challenges, as shared in our prior research.

Conclusion

With its expanded capabilities, the new ChatGPT agent is more than an upgraded assistant –it is poised to become a trusted companion and a constant presence in the user’s digital life. And while many of its advancements might appear incremental, even small gains in capability can introduce new risks, reshaping how we interact with DAs and the safeguards we must adopt to protect them.

This blog has focused on the new risks introduced by OpenAI’s latest agent, particularly the potential for manipulation, as the assistant becomes more autonomous and capable of handling increasingly complex tasks. Equally concerning are the emerging privacy risks, as the agent gains deeper knowledge of the user and broader access to their digital ecosystem.

Despite the agent’s increased autonomy, organizations are advised to maintain active user supervision over the tool, especially for sensitive operations. This can help in detecting irregularities that might result from manipulation and other threats.

For a stronger and more informed security approach, Trend Micro's Digital Assistant Framework proves to be a valuable tool - not only for consumer-facing tools but also for the growing class of agentic systems emerging in enterprise settings. As AI continues to evolve and become an integral part of our lives, this framework serves as an essential lens for understanding the balance between new capabilities and the risks that come with them.

Like it? Add this infographic to your site:

1. Click on the box below. 2. Press Ctrl+A to select all. 3. Press Ctrl+C to copy. 4. Paste the code into your page (Ctrl+V).

Image will appear the same size as you see above.

Artículos Recientes

- They Don’t Build the Gun, They Sell the Bullets: An Update on the State of Criminal AI

- How Unmanaged AI Adoption Puts Your Enterprise at Risk

- Estimating Future Risk Outbreaks at Scale in Real-World Deployments

- The Next Phase of Cybercrime: Agentic AI and the Shift to Autonomous Criminal Operations

- Reimagining Fraud Operations: The Rise of AI-Powered Scam Assembly Lines

Complexity and Visibility Gaps in Power Automate

Complexity and Visibility Gaps in Power Automate AI Security Starts Here: The Essentials for Every Organization

AI Security Starts Here: The Essentials for Every Organization Ransomware Spotlight: DragonForce

Ransomware Spotlight: DragonForce Stay Ahead of AI Threats: Secure LLM Applications With Trend Vision One

Stay Ahead of AI Threats: Secure LLM Applications With Trend Vision One