The use of brand names, product images, and any likenesses in the replication of the AI-enabled assembly line is solely for educational and illustrative purposes. There is no intention to harm, disparage, or misrepresent any brand or product. Any resemblance to actual products or brands is purely coincidental and not intentional. All referenced trademarks and images remain the property of their respective owners.

By Roel Reyes, Numaan Huq, and Salvatore Gariuolo

Generative AI (GenAI) offers a wide range of capabilities that ideally are used for good, but the reality is that fraudsters are also using them to make old scams faster, smarter, and more convincing than ever. GenAI removes what used to be a barrier to entry for criminals trying to bait victims into a scam: in the past, scams were sloppy and therefore easy to spot. They contained spelling and grammar mistakes, used clumsy websites that looked obviously fake, and were conducted through awkward phone calls. Now, GenAI can produce convincing branded product photos, flawless language, realistic voices, and lifelike videos in minutes. This means anyone with basic computer skills can launch scams at scale with messages, images, and videos that look real enough to make even the most vigilant internet users second-guess what they’re looking at.

These threats are no longer mere speculation: Trend™ Research has seen in its continuous monitoring of the threat landscape that cybercriminals are using AI to supercharge scam operations, making them easier to run and harder to detect. These scams erode digital trust: as scammers victimize more customers, brand confidence could potentially be affected, which in turn impacts customer loyalty. The generation of fake products and promotions based on authentic brands might also cause reputational damage.

By replicating and investigating an AI-powered scam assembly line, this article shows how criminals use GenAI to produce text, images, video, and voices that, when combined, can become a well-coordinated and automated scam operation. The next sections show examples of how AI supercharges cybercriminal activity.

Phishing made perfect: AI text generation

In phishing scams, the hardest part for scammers isn’t creating a fake website; it’s getting victims to visit it. AI now helps cybercriminals write the bait that makes people click the suspicious link. Modern AI tools create messages in seconds that read like they came from a trusted source. They can:

- Match the tone and style of a real company

- Avoid spelling or grammar mistakes

- Translate into any language with natural phrasing

- Personalize the content so it’s aimed directly at victims who have been previously profiled

Once the suspicious link is clicked, victims are directed to an AI-generated fake website that comes complete with cloned logos, layouts, and even interactive elements, so it feels exactly like the real thing. Cybercriminals have been observed using site builders on vibe coding platforms to clone log-in portals in as little as 30 seconds before hosting them on legitimate infrastructure. Just a few years ago, scams with fake websites required cybercriminals to have web design and development skills, but today, they can generate dozens of convincing sites in minutes using AI.

For victims, the result is the same as before: stolen details. But the speed and polish that AI allows make them far more likely to fall for the scam.

Faces and products that don’t exist: AI image generation

Generative AI can create pictures so convincing they could pass for official brand photos, complete with spotless packaging, perfect lighting, and carefully staged scenes. This technology can be used for legitimate purposes, but scammers have figured out how to use it to their advantage. They can make fake products or even imaginary people look completely real, setting up elaborate online schemes to fool unsuspecting online users. Cybercriminals have been observed to:

- Showcase counterfeit goods with flawless packaging, lighting, and stolen brand identities that look legitimate.

- Create fake romantic partners for online dating scams whose photos can’t be traced back to a real person through a reverse image search.

Scammers have been observed creating fake products, complete with fake customer reviews that are also written by AI, so victims are convinced to pay for non-existent goods. There is also evidence that cybercriminals use GenAI to create online romantic personas that talk to victims through flawless, emotionally engaging AI-generated messages that quickly build trust. Once the victim is emotionally invested, the scammer fabricates an emergency, such as sudden travel or medical expenses, and requests financial help, turning an AI-powered illusion into real financial loss.

Smoke and mirrors: AI video generation

Where images still leave room for doubt, video might be able to seal the deal. AI generation can now produce videos where lookalikes of influencers, salespeople, or even celebrities speak directly to you as if they were real. With lifelike blinking, natural speech, and realistic gestures on faces that are often familiar to the victim, these videos can run as ads, appear in private messages, or be part of a larger scam campaign involving fake websites and cloned voices. Fraudsters use AI-generated video to:

- Fake social media endorsements for products.

- Imitate well-known people for cryptocurrency investment scams.

- Create “virtual kidnapping” scenarios where a loved one appears to be speaking under duress.

News outlets have reported instances of scammers using AI-generated influencer videos to promote fake giveaways and products. Researchers have also found clusters of AI-created influencers on TikTok pushing deceptive wellness products, realistic enough to fool thousands of viewers before platforms intervened. According to the 2024 Trend Research report about the new AI criminal toolset, advances in AI-generated video and deepfakes make highly convincing footage cheaper, easier, and faster to produce for social engineering scams. These tools are rapidly improving and can be misused to deceive and defraud both individuals and organizations.

Unignorable impersonation: AI-enabled voice cloning

Voice cloning has evolved so much that someone can copy a voice from just a few seconds of audio. The reference could be a clip from a podcast, a short video on social media, or a voicemail, which is enough for advanced tools to recreate tone, accent, and speaking style almost perfectly. While this technology can be used for entertainment and accessibility, it is now also used by scammers as a new weapon. Voice cloning can be used to:

- Mimic voices familiar to a victim to convince them to do something, like share sensitive company information with someone posing as the victim’s boss.

- Combine mimicked voices with AI-generated faces and stolen personal details to create convincing video calls and video messages for more sophisticated scams.

- Merge AI-generated deepfake voices with large language models to carry out “virtual kidnapping” scams, in which victims receive calls that convincingly mimic the terrified voice of a loved one, demand a ransom, and prey on the victim’s fear and panic.

There has already been a reported case where a scammer impersonated a CEO using a publicly available photo and AI-cloned voice to instruct employees to transfer funds for an urgent confidential transaction. AI voice cloning is more than a data privacy concern because it attacks fundamental human instincts: a familiar voice can trigger strong emotions, which is exactly what makes this type of scam so believable. As voice cloning grows faster, cheaper, and increasingly lifelike, the danger can no longer be ignored. Combating this threat requires heightened public awareness, reliable verification steps, and stronger digital security measures that no individual or organization can afford to neglect.

How the AI scam factory comes together

Scams have been around for centuries, but until recently, pulling off a convincing large-scale scheme required a full team of skilled people with backgrounds in web design, copywriting, graphics creation, and audio or video editing. Fraudsters had to coordinate multiple steps and work with different experts just to make their scams believable.

Today, AI and automation have changed the game completely. With accessible AI tools and simple automation platforms, one person can create a polished, high-quality scam in just hours, and often at very little cost.

With only a few prompts, scammers can:

- Create realistic product photos that look professionally shot.

- Clone voices and produce videos that feel authentic.

- Build professional-looking fake websites in minutes.

- Write highly targeted messages for any audience.

- Generate fake paperwork, such as IDs or certifications.

These pieces can be combined seamlessly: an image can be inserted into a video, paired with a cloned voice, and pushed across social media and websites all at once. Automation connects the steps, so the whole process runs almost entirely on its own.

The danger lies not in the technology itself but in the speed and quality it enables. With AI lowering the effort required, businesses and individuals must be increasingly vigilant against all forms of scams, from classic phishing emails to more sophisticated romance impostor scams and merchandise scams. In Trend Micro telemetry from June to September 2025, romance impostor scams were detected the most, accounting for over three-quarters of reported incidents, while merchandise scams ranked second.

| Scam Category Name | Monthly Count | Percentage |
|---|---|---|
| Romance Impostor Scam | 346,206 | 77.27% |
| Merchandise Scam | 73,635 | 16.43% |
| Other Trusted Party Scam | 21,885 | 4.88% |
| Business Impostor Scam | 4,778 | 1.07% |
| Financial Institution Impostor Scam | 822 | 0.18% |

Table 1. The detections of different kinds of scams from June to September 2025 from Trend Micro telemetry
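As a rough cross-check of Table 1, the shares can be recomputed from the listed counts. This is a minimal sketch, not the method used for the table: the short dictionary keys are labels introduced here, and the denominator is assumed to be only the five listed rows. The published percentages may be based on a slightly larger total, so the recomputed values can differ from the table by a fraction of a point.

```python
# Counts copied from Table 1 (Trend Micro telemetry, June to September 2025).
# The short keys are labels made up for this sketch, in table order.
counts = {
    "romance": 346_206,
    "merchandise": 73_635,
    "other_trusted_party": 21_885,
    "business": 4_778,
    "financial_institution": 822,
}


def category_shares(counts):
    """Return each category's share, in percent, of the listed total."""
    total = sum(counts.values())  # assumption: only the listed rows are counted
    return {name: 100 * n / total for name, n in counts.items()}


shares = category_shares(counts)
for name, pct in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {pct:.2f}%")
```

Even under this simplified denominator, the romance category stays above three-quarters of the listed detections, which is consistent with the claim in the text.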

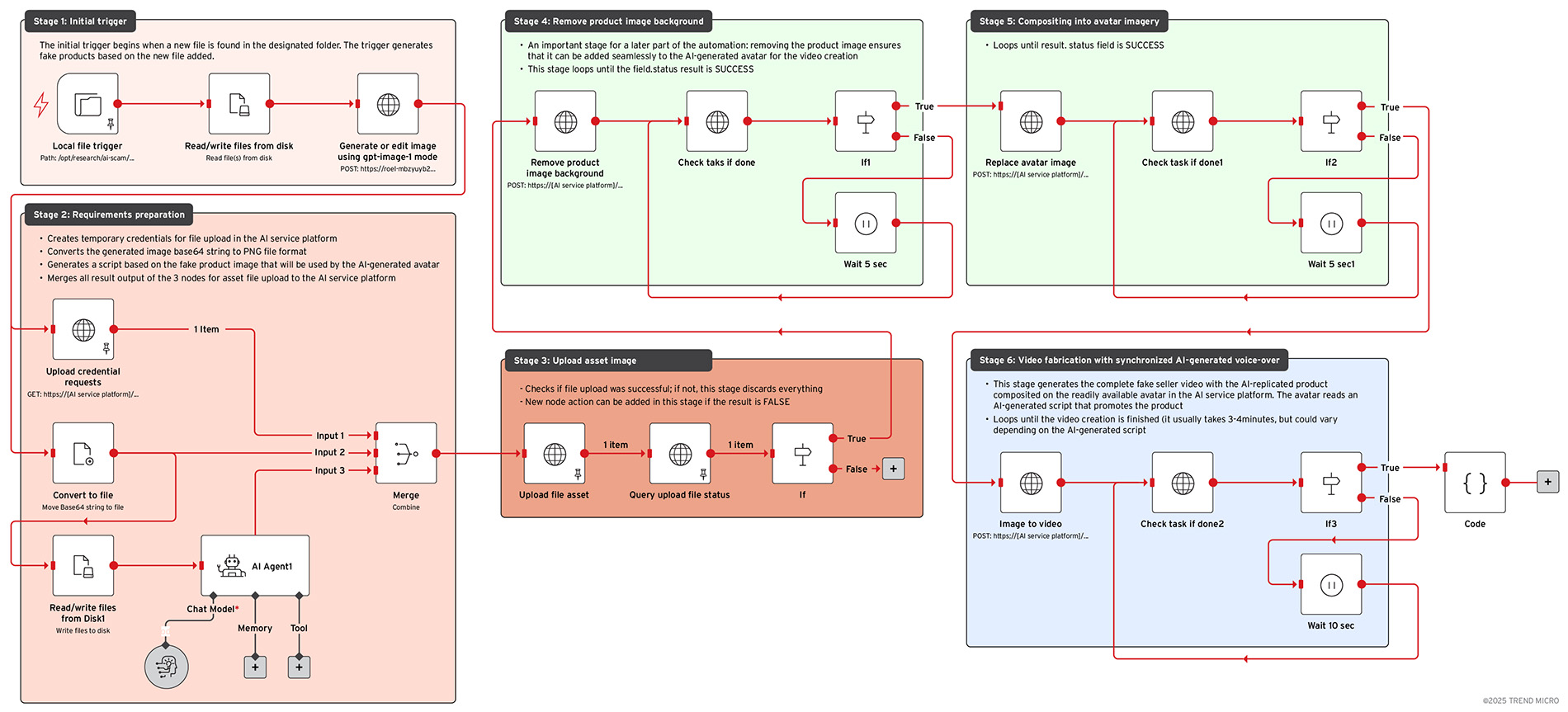

The AI-powered scam assembly line

Figure 1. The n8n workflow that Trend Research set up for its test

To see this in action, Trend Research tested n8n, an open-source automation platform that links different apps and services. It lets individuals without advanced coding skills build their own mini software system from ready-made blocks that listen for certain events, process data, and trigger actions automatically. The simulation that Trend Research created attempts to replicate the kind of setup a real-world threat actor might use, but it was designed purely for research purposes and did not use any illicit or harmful content.

In the test, we set up an agentic workflow: a chain of AI agents where each tool handles a task, then passes the result to the next one, like stages in an assembly line. This process brought together several commercial services including image generation and editing, text-to-speech, and AI service platforms that offer readily available avatars and videos. With only minimal human input, the process ran smoothly from start to finish, producing realistic, ready-to-use content.
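The assembly-line idea itself is a common software pattern and can be sketched in a few lines. This is an illustration of the chaining concept only, not the n8n workflow: `run_pipeline` and the two stage functions are hypothetical names, and the stages do nothing except record that they ran.

```python
from typing import Any, Callable, Dict, List

Stage = Callable[[Dict[str, Any]], Dict[str, Any]]


def run_pipeline(stages: List[Stage], payload: Dict[str, Any]) -> Dict[str, Any]:
    """Pass the payload through each stage in order, like stations on a line."""
    for stage in stages:
        payload = stage(payload)
    return payload


# Hypothetical placeholder stages: each one only records that it ran.
def stage_a(data: Dict[str, Any]) -> Dict[str, Any]:
    return {**data, "history": data.get("history", []) + ["stage_a"]}


def stage_b(data: Dict[str, Any]) -> Dict[str, Any]:
    return {**data, "history": data.get("history", []) + ["stage_b"]}


result = run_pipeline([stage_a, stage_b], {"input": "example"})
print(result["history"])
```

Because each stage only depends on the output of the previous one, stages can be swapped or reordered without touching the rest of the chain, which is what makes this kind of workflow modular.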

Stage 1: Initial trigger

The process begins with a Local File Trigger node set to watch a dedicated folder. It activates whenever a new image is added. In the test setup, these images typically show genuine merchandise, but here they are used as starting material for creating high-value “limited edition” fake product visuals.

When the trigger detects a new file, the image is sent directly to a Read/Write Files node, which converts it into binary form for further editing. This binary data is then processed through Microsoft Azure’s image editing model.
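The watch-then-read behavior described above can be approximated in plain Python. This is a minimal sketch under stated assumptions, not how the n8n nodes are implemented: it checks the folder on demand rather than reacting to file system events, and `find_new_images`, `read_binary`, and the list of suffixes are names and values chosen for illustration.

```python
import tempfile
from pathlib import Path
from typing import List, Set

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}  # assumed set of watched file types


def find_new_images(folder: Path, seen: Set[Path]) -> List[Path]:
    """Return image files in `folder` not reported before, and remember them."""
    fresh = sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES and p not in seen
    )
    seen.update(fresh)
    return fresh


def read_binary(path: Path) -> bytes:
    """Load the file contents as raw bytes, ready to hand to a later step."""
    return path.read_bytes()


# Demo in a temporary folder with a tiny placeholder file, not a real image.
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    seen: Set[Path] = set()
    (folder / "sample.png").write_bytes(b"\x89PNG")
    first_pass = find_new_images(folder, seen)   # reports the new file once
    second_pass = find_new_images(folder, seen)  # nothing new the second time
    payload = read_binary(first_pass[0])
```

Tracking already-seen paths in a set is what keeps the same file from triggering the rest of the workflow twice.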

The call to the model includes a carefully written prompt telling it to reproduce the original image but modify the design so it looks like a “limited edition” luxury item aimed at consumers aged 20 to 45 years old, with a spending range between US$300 and US$2,000. The prompt specifically avoids using the actual phrase “limited edition” so that the result appears convincing without obvious wording that might draw suspicion.

Stage 2: Requirements preparation

The second stage focuses on preparing everything needed before creating and processing the fake image. The workflow requests temporary upload credentials through an authenticated HTTP call.

Figure 2. An image of a real product (left), and the AI-generated fake tote bag (right) that was derived from the real product’s image

Once the image has been converted from a base64 string into a file, another language model uses it to generate a marketing script. This script will be used in the final stage of the workflow when producing the promotional video. All the prepared materials are then brought together using a Merge node.
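The base64-to-file conversion mentioned in this stage is a standard operation. The sketch below shows it with Python's standard library, using harmless placeholder bytes instead of an image; `base64_to_file` is a helper name introduced here, and the n8n nodes may handle the conversion differently.

```python
import base64
import tempfile
from pathlib import Path


def base64_to_file(encoded: str, destination: Path) -> Path:
    """Decode a base64 string and write the resulting bytes to `destination`."""
    raw = base64.b64decode(encoded, validate=True)  # reject non-base64 characters
    destination.write_bytes(raw)
    return destination


# Round-trip demo with placeholder bytes standing in for image data.
original = b"placeholder image bytes"
encoded = base64.b64encode(original).decode("ascii")

with tempfile.TemporaryDirectory() as tmp:
    out_path = base64_to_file(encoded, Path(tmp) / "decoded.bin")
    restored = out_path.read_bytes()
```

Services often return generated files as base64 text inside a JSON response, which is why a decoding step like this typically sits between a generation call and any step that needs an actual file.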

Stage 3: Upload asset image

The upload credential request node provides metadata containing a temporary location where the file can be sent. Once the base64 string is converted into binary form, it is uploaded to this temporary destination. A validation step then checks the upload status to confirm that the file was successfully received. If the check indicates a failure, the workflow ends immediately. This simple safeguard prevents unnecessary processing on assets that did not upload correctly.
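The validation step amounts to a guard that stops the run as soon as an upload is not confirmed. A minimal sketch follows, assuming a response dictionary with a `status` field; that field name, the `"success"` value, and the `UploadFailed` exception are hypothetical and not taken from any specific service.

```python
class UploadFailed(Exception):
    """Raised to stop the workflow when an upload is not confirmed."""


def ensure_uploaded(response: dict) -> dict:
    """Return the response if the upload succeeded, otherwise stop early."""
    status = response.get("status")  # hypothetical field name
    if status != "success":
        raise UploadFailed(f"upload not confirmed (status={status!r})")
    return response


# Demo with hand-written responses standing in for a real service reply.
ok = ensure_uploaded({"status": "success", "asset_id": "demo-123"})

try:
    ensure_uploaded({"status": "failed"})
    stopped = False
except UploadFailed:
    stopped = True  # later stages would never run for this asset
```

Failing fast here avoids spending time and service credits on later rendering steps for an asset that never arrived.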

Stage 4: Remove product image background

Once the uploaded file has been validated, it goes through an automated process where the background in the image is removed; this step is essential because it prepares the image for later compositing with an avatar to create a fake promotional image or video.

The workflow then uses a polling loop, controlled by paired If and Wait nodes, to check the job’s status until it reports “success.” This same polling approach is used in several later tasks, which shows the workflow is built to handle asynchronous cloud rendering jobs smoothly and efficiently.
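The paired If and Wait nodes correspond to a simple polling loop. The sketch below is an assumption-laden illustration rather than the workflow's actual logic: `poll_until_success` is a name introduced here, the status strings are placeholders, and the cap on attempts is an addition that the article does not mention.

```python
import time
from typing import Callable


def poll_until_success(
    get_status: Callable[[], str],
    wait_seconds: float = 2.0,
    max_attempts: int = 30,
) -> int:
    """Call `get_status` until it returns "success"; return attempts used."""
    for attempt in range(1, max_attempts + 1):
        if get_status() == "success":  # plays the role of the If node
            return attempt
        time.sleep(wait_seconds)       # plays the role of the Wait node
    raise TimeoutError(f"job did not succeed after {max_attempts} attempts")


# Demo: a simulated job that reports "processing" twice before succeeding.
replies = iter(["processing", "processing", "success"])
attempts = poll_until_success(lambda: next(replies), wait_seconds=0.0)
print(attempts)
```

Polling suits asynchronous rendering jobs because the caller does not need to keep a connection open while the remote service works; it only needs a job identifier and a status endpoint to check.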

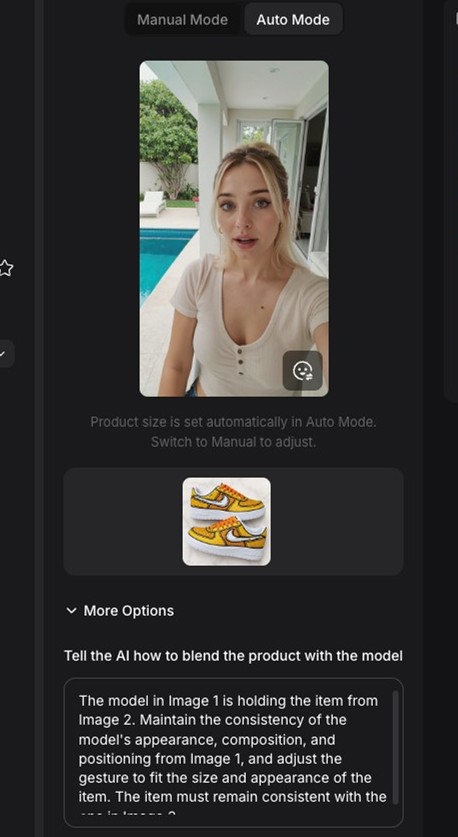

Stage 5: Compositing into avatar imagery

After the background in the image has been removed, the cleaned product image is placed into a stock avatar photograph. The instructions specify keeping the composition, pose, and gesture of the person exactly as in the original photo, while replacing the object they are holding with the fake product generated earlier. The guidance is detailed enough to preserve fine visual elements and avoid noticeable editing artifacts.

Figure 3. Once the background of the fake product is removed, the product can be superimposed on one of the AI service platform’s readily available influencer avatars so that the avatar appears to promote it

As with previous steps, the workflow uses success polling to monitor progress until the avatar replacement task is fully completed.

Stage 6: Video fabrication with synchronized AI-generated voice-over

Once the avatar composition is complete, the workflow moves on to create a fully animated promotional video. The key inputs at this stage are the ID of the composited avatar image and a text-to-speech script written to appeal to potential buyers.

The voice script comes from Stage 2 of the workflow, generated by an OpenAI GPT-5-chat node under clear guidelines. These rules require the description to be enticing, target audiences aged 40 or younger, stay within 500 characters, and contain only the voice-over text without any extra material.

This script is combined with a specified voice profile to produce AI-generated speech that is synchronized with the avatar footage. The video creation task is then monitored using success polling until it is fully completed.

Figure 4. A completely AI-generated video with an AI-generated fake product, an avatar of a model or influencer readily available for use in the AI service platform, and a digitally generated voice

The finished assets could be used in several harmful ways: they could be posted as fake advertisements on social media, listed on deceptive online stores, or sent in large scam email campaigns. Each channel helps make the fake content look real and reach victims faster.

This workflow shows how scammers can now use generative AI and low-code automation to quickly produce highly convincing fake products. Because the system is modular, they can easily swap prompts, images, or avatar templates to create hundreds of unique product variations within a few hours. And because commercial cloud services are used for the rendering, they get professional-grade results while hiding their tracks.

This means a single person with free or low-cost tools can now run a convincing and scalable scam campaign, something that once took months and a team of specialists.

The real-world impact of AI-powered scam assembly lines is already visible. Recent reports highlight that counterfeit operations are increasingly powered by automation and AI. Fraud networks now use AI-driven bots to manage counterfeit listings, automate customer interactions, and even mimic authentic seller behavior across multiple online marketplaces and social platforms. There have also been reports about how automated tools allow counterfeiters to publish hundreds of fake product listings simultaneously, track legitimate seller prices, and adjust their offers in real time to stay competitive. Today’s counterfeit networks have reached a new level of sophistication, powered by advanced digital tools, automation, and large-scale production capabilities.

Figure 5. A photo of a real pair of Nike Air Force 1 Custom shoes in Aqua Pool Blue (left), as featured in a legitimate eBay listing, and a fake copy of the same product with a different colorway (right) generated by AI using an LLM and the AI assembly line discussed in the previous section

As an example, Trend Research recreated the sneakers featured on the left of Figure 5, a legitimate product that can be found on a popular online shopping platform. The image on the right side is the AI-generated recreation. Law enforcement agencies have reported cases of such AI-generated recreations of legitimate products being offered online at steep “too good to be true” discounts of up to 70% to lure victims.

This digital trickery extends beyond images: AI-driven writing tools now also produce believable five-star reviews, complete with regional slang and natural phrasing, making them nearly indistinguishable from genuine customer feedback. These well-crafted reviews help counterfeit sellers quickly gain trust and push buyers toward impulsive purchases.

Together, these technologies create a self-sustaining fraud ecosystem, one that operates around the clock, scheduling posts, promoting deals during peak shopping hours, and responding instantly to buyers, all without human oversight.

As much as cybercriminals strive to create more convincing scams, they still rely on human error for their social engineering tactics to work. As early as 2023, a report revealed that while 84% of consumers expressed concern over AI-driven fraud during the holiday shopping season, 67% still admitted they would buy from unfamiliar websites offering seemingly irresistible deals. This disconnect between consumer awareness and behavior underscores how fraudsters exploit human trust, and how they can now do so more efficiently and more convincingly with AI.

Beyond convincing images of fake products and AI-generated fake reviews, threat actors can now take social engineering to the next level with AI-generated avatars of fake influencers endorsing a fake product in an AI-generated video.

Figure 6. A screenshot of the AI platform showing the source images that are combined as directed by the prompt to generate the video in Figure 7

Figure 7. A completely AI-generated video with an AI-generated fake product, an avatar of a model or influencer readily available for use in the AI service platform, and a digitally generated voice

The 2024 Trend Research report explores how deepfakes and other AI tools can be repurposed for criminal use. Cybercriminals can exploit AI-generated marketing avatars to create fully fabricated online shops and promotional campaigns. The fake online shops can churn out product videos featuring lifelike synthetic personalities that promote the fake product, interact with comments, and engage with followers, all without any real person behind the account.

Trend Research cautions that this approach erases the boundary between genuine advertising and automated deceit, enabling scammers to rapidly deploy convincing fake merchandise promotions across multiple platforms at once.

Generative AI has given scammers powerful new tools, making their schemes faster, smarter, and harder to detect. With the ability to create convincing images, lifelike voices, and complex fake listings in minutes, fraudsters can now craft scams that look and feel real. Staying safe and keeping risk at bay now extends beyond awareness and requires smart habits that can keep up with these evolving threats.

While some brands are embedding invisible watermarks or cryptographic signatures into their media so authenticity is easier to prove, educating people remains one of the strongest defenses: the sooner a scam is recognized, the sooner it can be shut down.
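The idea behind signing media can be illustrated with a keyed hash: any change to the bytes invalidates the tag. This sketch deliberately swaps in HMAC-SHA256 from Python's standard library as a simplified stand-in. Real provenance schemes generally rely on public-key signatures so that anyone can verify a file without holding the secret, and nothing here reflects how a particular brand implements it. The function names, key, and content are placeholders.

```python
import hashlib
import hmac


def make_tag(media: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the media bytes."""
    return hmac.new(key, media, hashlib.sha256).hexdigest()


def is_authentic(media: bytes, key: bytes, tag: str) -> bool:
    """Compare tags in constant time; any change to the bytes breaks the match."""
    return hmac.compare_digest(make_tag(media, key), tag)


key = b"demo-key-not-for-real-use"          # placeholder secret
official = b"official product photo bytes"  # placeholder content
tag = make_tag(official, key)

genuine_ok = is_authentic(official, key, tag)          # unchanged file
tampered_ok = is_authentic(official + b"!", key, tag)  # altered file
```

The useful property is that a regenerated or edited image cannot carry a valid tag, which shifts the question from "does this look real?" to "does this verify?".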

Security teams must step up and maximize available tools in the market to screen for deepfakes, synthetic videos, and unusual patterns online, and equip employees and users with the same tools to minimize the risk that stems from human error.

Trend Micro Deepfake Inspector is a free tool that scans for AI face-swapping scams and alerts users in real time about potential impersonations. It can also help verify whether a party in a live video conversation is using deepfake technology, alerting users that the person or persons with whom they are conversing may not be who they appear to be.

Trend Micro ScamCheck is an AI-powered defense against AI-powered threats: it offers multiple features that don’t just spot common red flags and scams but also perform a deeper analysis of the content to find the less obvious signs of online schemes. It blocks spam and scam texts and calls to cut the noise and reduce risk, and it predicts potential scams to keep users one step ahead of cybercriminals. It does the work for users by blocking unsafe websites and filtering out ads that could be scams. It also simplifies identification, requiring only a screenshot of a suspicious advertisement, website, or message to determine whether it is a scam.

As tools to counter AI‑generated scams continue to evolve and improve, it’s equally important to strengthen personal awareness and instill constant vigilance; these tools are only as strong as the users who put them to use. Developing practical habits that keep pace with the evolution of modern scams can help you stay protected. We recommend the following best practices and smart habits to stay safe:

- Scrutinize before you trust. Always double-check URLs, email addresses, caller IDs, and product details before acting. If something feels perfect or “too good to be true,” stop and take a moment to investigate.

- Analyze reviews and listings carefully. Fake reviews are often polished but impersonal. Use available tools to detect AI-generated text, and pay attention to realistic feedback from verified buyers rather than generic comments.

- Stay alert during peak seasons. Scammers know when people shop the most: holidays, sales events, and donation drives are prime times for fraud. Watch for unusual spikes in heavily discounted listings or overly positive reviews during these periods.

- Control what you share. Keep personal details off unfamiliar sites and avoid oversharing on social media. Even small facts, like where you work or travel, can be stitched together to build a scam targeting you.

- Raise the alarm and report. If you see suspicious sites, products, or signs of a coordinated scam, report them immediately. Flagging these early helps platforms and investigators take them down before they spread to more victims.
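The advice to double-check URLs can be partly automated. The sketch below compares a link's host against a list of domains the user already trusts; it is one simple heuristic under stated assumptions, not a complete phishing detector, and `host_matches` and the `example.com` entries are placeholders introduced for illustration.

```python
from urllib.parse import urlsplit


def host_matches(url: str, trusted_domains: set) -> bool:
    """True if the URL's host is a trusted domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in trusted_domains)


trusted = {"example.com"}  # placeholder domain for illustration

legit = host_matches("https://shop.example.com/sale", trusted)
lookalike = host_matches("https://example.com.deals-now.test/", trusted)
char_swap = host_matches("https://examp1e.com/login", trusted)
print(legit, lookalike, char_swap)
```

Matching on the end of the host name, with a leading dot, is what catches the common trick of placing a familiar brand name at the start of an unrelated domain.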

While AI might make scams more convincing, it also creates a certain level of predictability once an individual knows how to spot the signs. By pausing briefly before trusting something online, guarding personal information, and paying attention to small details, it is possible to block AI-powered threats before they can cause harm.
