Artificial intelligence (AI) is reshaping how organizations innovate and compete, but it’s also introducing new risks that can’t be ignored. From prompt injection attacks and data leaks to deepfake fraud and supply chain vulnerabilities, the threats are evolving as quickly as the technology itself. In fact, recent research found that nearly half of adversarial tests against large language models (LLMs) managed to bypass safety controls, making AI security a board-level concern.

Why AI security should be your priority

Embedding security into AI projects from the very beginning isn’t just about avoiding threats; it also unlocks real business value:

- Accelerate innovation, lower risk: Secure design prevents costly breaches and project delays, helping you maintain a competitive edge.

- Build trust and meet regulations: Transparent, well-governed AI supports compliance with new laws and standards, such as the EU AI Act and ISO/IEC 42001:2023, while also strengthening stakeholder confidence.

- Reduce costs: Preventive security measures are far less expensive than recovering from a major incident or regulatory fine.

Practical steps for AI security

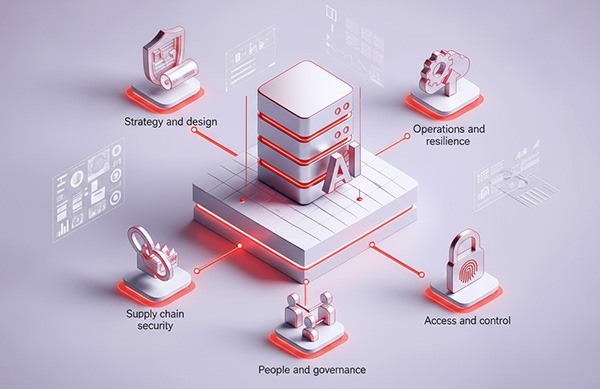

The document highlights a clear set of do’s and don’ts across five key domains:

Strategy and design

Integrate threat modeling, compliance mapping, and zero trust principles from the outset. Don’t build AI without oversight or “kill switches.”

Operations and resilience

Use red/blue teaming, validate all inputs, secure vector databases, and keep track of model/data lineage.
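As an illustration of the "validate all inputs" step, the following is a minimal sketch of a pre-processing check that runs before a prompt reaches a model. The function name `validate_prompt`, the length limit, and the pattern list are all assumptions made for this example, not part of the original guidance. Pattern matching of this kind catches only the most obvious attempts and would need to be layered with other guardrails.

```python
import re
import unicodedata
from typing import Tuple

MAX_PROMPT_CHARS = 4000  # assumed limit; tune per application

# Illustrative patterns only; real injection attempts vary widely.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all|any|the) (previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]


def validate_prompt(text: str) -> Tuple[bool, str]:
    """Return (accepted, reason) for a user-supplied prompt."""
    if not text.strip():
        return False, "empty input"
    if len(text) > MAX_PROMPT_CHARS:
        return False, "input too long"
    # Reject control characters other than common whitespace.
    for ch in text:
        if unicodedata.category(ch) == "Cc" and ch not in "\n\t\r":
            return False, "control character in input"
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(text):
            return False, "matches suspicious pattern"
    return True, "ok"
```

Returning a reason alongside the decision makes it easier to log rejected inputs and review them during red/blue team exercises.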

Supply chain security

Maintain a software bill of materials (SBOM) for all models, datasets, and libraries. Avoid using unverified components.
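One way to picture an SBOM entry is as a record that pins each model, dataset, or library to a known digest, so that unverified or altered components can be detected before use. The sketch below is hypothetical: the `Component` fields and the `verify` helper are invented for illustration, and production inventories would more likely use a standard format such as SPDX or CycloneDX.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    kind: str     # "model", "dataset", or "library"
    version: str
    sha256: str   # digest recorded when the component was approved


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the artifact bytes."""
    return hashlib.sha256(data).hexdigest()


def verify(component: Component, data: bytes) -> bool:
    """True only if the artifact matches the digest in the inventory."""
    return sha256_hex(data) == component.sha256


# Usage: record a digest at approval time, then check it before loading.
artifact = b"example model weights"
entry = Component("sentiment-classifier", "model", "1.2.0", sha256_hex(artifact))
approved = verify(entry, artifact)                 # unchanged artifact
tampered = verify(entry, artifact + b"tampered")   # modified artifact
```

Here `approved` is `True` and `tampered` is `False`, since any change to the bytes changes the digest.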

People and governance

Implement workforce training on AI risks, enforce clear usage policies, and practice human-in-the-loop oversight for critical decisions.

Access and control

Apply least privilege access, multifactor authentication, and monitor for excessive agency in AI agents.
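To make least privilege and "excessive agency" more concrete, the sketch below gates an AI agent's tool calls behind an allowlist and a call budget, and records every decision for monitoring. The `ToolGate` class and the tool names are assumptions for this example; they do not correspond to any specific agent framework.

```python
from dataclasses import dataclass, field


@dataclass
class ToolGate:
    allowed_tools: frozenset          # tools this agent may call
    max_calls: int                    # budget that caps autonomous activity
    calls_made: int = 0
    audit_log: list = field(default_factory=list)

    def authorize(self, tool: str) -> bool:
        """Allow a call only if the tool is allowlisted and budget remains."""
        if tool not in self.allowed_tools:
            decision = "denied: tool not allowed"
        elif self.calls_made >= self.max_calls:
            decision = "denied: call budget exhausted"
        else:
            self.calls_made += 1
            decision = "allowed"
        # Every decision is logged so reviewers can spot unusual behavior.
        self.audit_log.append((tool, decision))
        return decision == "allowed"
```

An agent wrapped this way could call `gate.authorize("search_docs")` before each tool invocation. Denied calls still appear in `audit_log`, which gives monitoring something to alert on.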

Stay ahead of emerging threats

New risks are emerging all the time, including indirect prompt injection, poisoned training data, deepfake voice and video fraud, model theft, and unsanctioned “shadow AI” adoption. The document recommends:

- Continuous threat intelligence and regular reviews of AI risks and policies.

- Guardrails and prompt injection defenses.

- Data protection by design and supply chain assurance.

- Deepfake resilience and shadow AI controls.

Five actions to get started

- Inventory all AI tools in use, including shadow AI.

- Implement multifactor authentication for all AI system access.

- Schedule AI security training for development teams.

- Review and document your AI model supply chain (SBOM).

- Contact Trend Micro to start an AI risk assessment.
