
AI-Powered App Exposes User Data, Creates Risk of Supply Chain Attacks

Trend™ Research’s analysis of Wondershare RepairIt reveals how the AI-driven app exposed sensitive user data due to unsecure cloud storage practices and hardcoded credentials, creating risks of model tampering and supply chain attacks.

Key takeaways

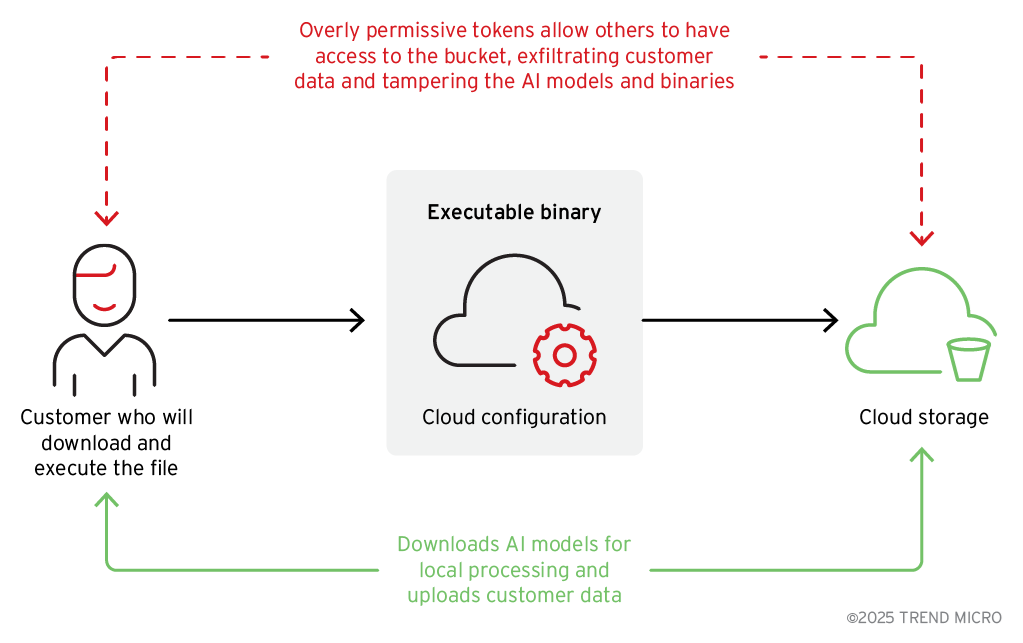

- An AI-powered application for enhancing images and videos named Wondershare RepairIt may have inadvertently contradicted its privacy policy by collecting and retaining sensitive user photos. Poor Development, Security, and Operations (DevSecOps) practices allowed overly permissive cloud access tokens to be embedded in the application’s code.

- The hardcoded cloud credentials in the application’s binary enabled both read and write access to sensitive cloud storage. The exposed cloud storage contained not only user data but also AI models, software binaries, container images, and company source code.

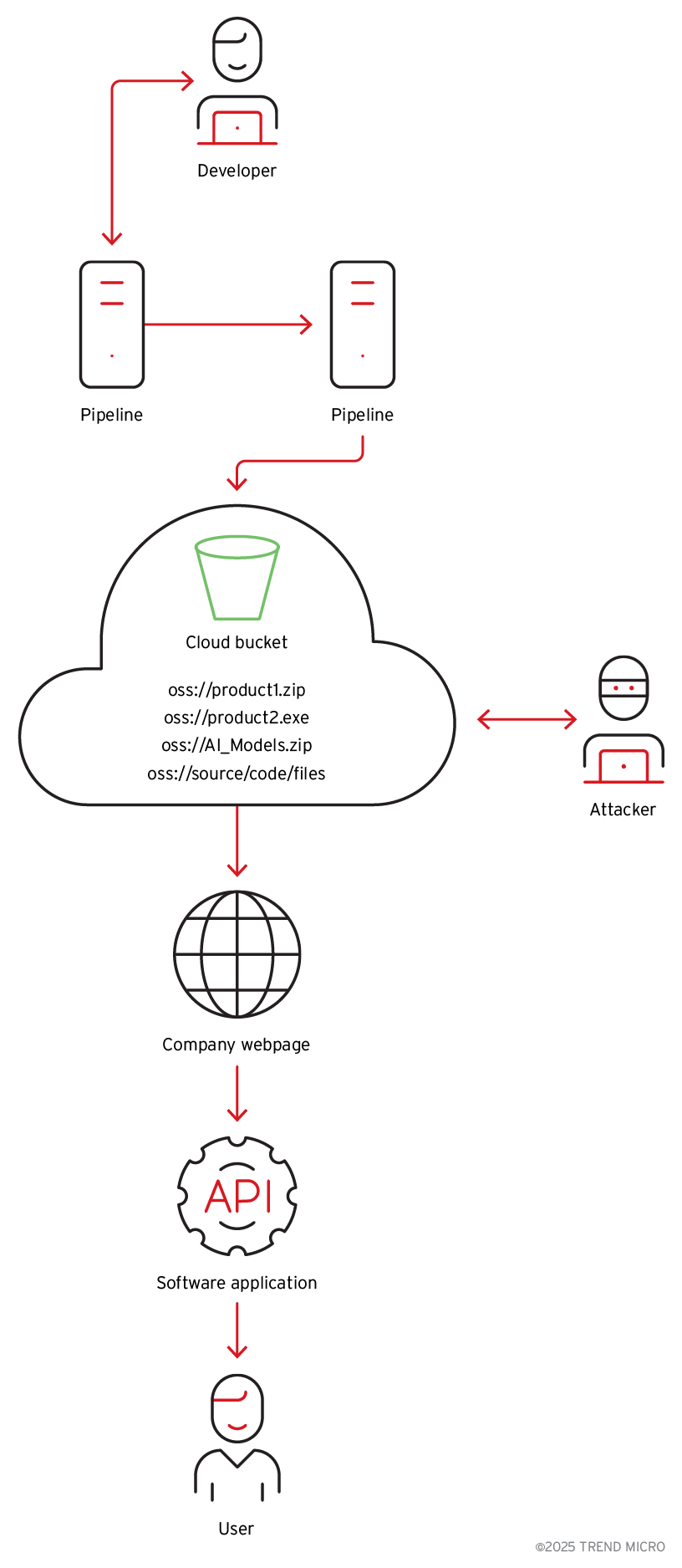

- Attackers can leverage the compromised access to manipulate AI models or executable files, conducting sophisticated supply chain attacks. Such an attack could distribute malicious payloads to legitimate users through vendor-signed software updates or AI model downloads.

Consistency between company privacy policies and actual data handling practices – particularly with AI-powered applications – and the integrity of AI model deployment are both critical security considerations for organizations in the age of AI. Trend™ Research has identified a case where Wondershare RepairIt, an AI photo editing application, contradicted its privacy policy by collecting, storing, and, due to weak Development, Security, and Operations (DevSecOps) practices, inadvertently leaking private user data.

The application explicitly states that user data will not be stored, as seen in Figure 1. Its website states this as well. However, we observed that sensitive user photos were retained and subsequently exposed because of security oversights.

Our analysis found that poor DevSecOps practices led to an overly permissive cloud access token being embedded within the application’s source code. This token exposed sensitive information stored in the cloud storage bucket. Furthermore, the data was stored without encryption; this made it accessible to anyone with basic technical knowledge, who could subsequently download and exploit it against the organization.

It is not unusual for developers to ignore security standards and embed overly permissive cloud credentials directly into the code, as we have observed in previous research.

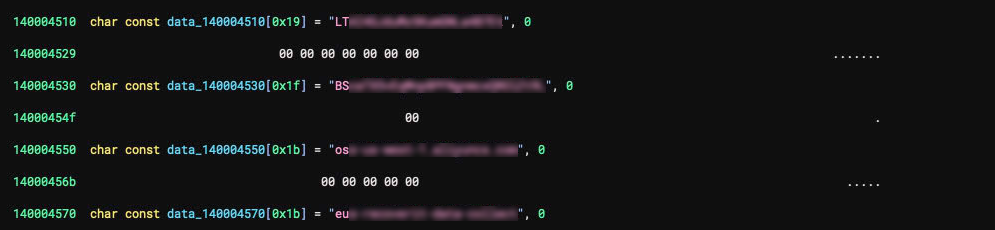

In our case, we found the credentials in the compiled binary executable (Figure 2). While this approach may seem convenient, simplifying user experience and backend processing, it exposes the organization to significant vulnerability if not implemented correctly.

Securing the overall architecture is crucial: Establishing clear purposes for storage, defining access controls, and ensuring only necessary permissions are granted to credentials help prevent disastrous scenarios where attackers download the compiled binaries, analyze them, and exploit them for purposes unrelated to their intended functionality.

Hardcoding a cloud storage access token with write permissions directly into binaries is a common practice, but such tokens are typically used only for application logs or metric collection. More importantly, most implementations strictly restrict the token’s permissions: for example, data can be written to cloud storage but cannot be retrieved.
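The write-only restriction described above can be checked mechanically before a token is shipped. The following is a minimal sketch, assuming the actions granted to a token are available as a list of strings; the action name `PutObject` and the helper names are hypothetical placeholders, since real providers use their own identifiers and policy formats.

```python
from typing import Iterable

# Hypothetical action name for "upload only"; substitute your provider's identifier.
WRITE_ONLY_ACTIONS = frozenset({"PutObject"})


def excessive_actions(granted: Iterable[str],
                      allowed: Iterable[str] = WRITE_ONLY_ACTIONS) -> set[str]:
    """Return every granted action that is not explicitly allowed.

    Wildcards such as "*" are treated as excessive because they are not
    an exact match for any allowed action.
    """
    allowed_set = set(allowed)
    return {action for action in granted if action not in allowed_set}


def is_write_only(granted: Iterable[str]) -> bool:
    """True only if the token grants at least one action and nothing beyond upload."""
    granted_list = list(granted)
    return bool(granted_list) and not excessive_actions(granted_list)


if __name__ == "__main__":
    print(is_write_only(["PutObject"]))               # upload-only token
    print(excessive_actions(["PutObject", "GetObject", "ListBucket"]))
```

A check like this could run in a build step so that a token with read or list permissions fails the build instead of reaching a released binary.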

It’s unclear whether the code was written by a developer or by an AI coding agent that provided a vulnerable code snippet. Regardless, organizations need to exercise extra caution when using cloud services. A single leaked access token often has disastrous consequences: for example, it can allow threat actors to insert malicious code into distributed software binaries, potentially initiating a supply chain attack.

We have made proactive efforts to contact the vendor through the Trend Zero Day Initiative™ (Trend ZDI), although we have yet to receive a response. These vulnerabilities were disclosed to the vendor in April. The final draft of this blog entry was also shared with the vendor prior to publication.

The initial disclosure of the vulnerabilities was made through Trend ZDI. The vulnerabilities have been assigned CVE-2025-10643 and CVE-2025-10644, and were publicly disclosed on September 17.

Binary analysis: Credential exposure and data leakage

The discovery began with the downloaded binary – a client application widely promoted on the company’s official website as a robust, user-friendly tool for repairing damaged images and videos using patented techniques and AI as a core engine (Figure 3).

The binary analysis showed the application uses a cloud storage account with hardcoded credentials. The storage account was not only used to download AI models and application data; we found that the account also contained multiple signed application executables developed by the company. It also had sensitive customer data (Figure 4), all accessible due to the cloud object storage identifiers (URLs and API endpoints), a secret access ID and key, and defined bucket names present in the binary.

Further analysis confirmed that the credentials hardcoded in the binary granted both read and write access to the bucket. The same cloud storage holds AI models, container images, binaries for other products from the same company, scripts and source code, and customer data (such as videos and pictures).
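To illustrate how little effort this kind of discovery requires, the sketch below extracts printable strings from a binary (similar to the Unix `strings` tool) and flags those that mention credential-related keywords. It is not the method used in this research. The keyword list and the function name are assumptions chosen for illustration, and the sample bytes are made up.

```python
import re

# Runs of at least 8 printable ASCII characters, as the `strings` tool would report.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{8,}")

# Hypothetical indicators; tune these to the key formats your providers use.
SUSPICIOUS = re.compile(
    rb"(?i)(access[_-]?key|secret[_-]?key|access[_-]?id|api[_-]?key|bucket)"
)


def find_credential_hints(data: bytes) -> list[str]:
    """Return printable strings in `data` that contain a credential-related keyword."""
    hits = []
    for match in PRINTABLE_RUN.finditer(data):
        text = match.group()
        if SUSPICIOUS.search(text):
            hits.append(text.decode("ascii"))
    return hits


if __name__ == "__main__":
    # Made-up sample: binary noise surrounding one credential-like string.
    sample = b"\x00\x01MZ\x90access_key_id=EXAMPLEONLY\x00\x00hello world!\xff"
    for hint in find_credential_hints(sample):
        print(hint)
```

Running the same kind of scan against release artifacts before publication gives defenders the view an attacker would have after downloading the installer.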

Private data exposure: The first critical issue

We found that the unsecure storage service held customer-uploaded data dating back two years prior to this research, raising significant privacy concerns and regulatory implications, particularly under the European Union’s (EU) General Data Protection Regulation (GDPR), the Health Insurance Portability and Accountability Act (HIPAA) in the US, or similar frameworks. The leaked data included thousands of unencrypted personal images, sensitive in nature, that customers had uploaded for AI-driven enhancement (Figure 6).

Exposure of such data not only poses an immediate risk of regulatory fines, reputational damage, and loss of competitive advantage due to intellectual property theft, but also allows threat actors to potentially launch targeted attacks against the company and its customers.

The supply chain issue: Manipulating AI models

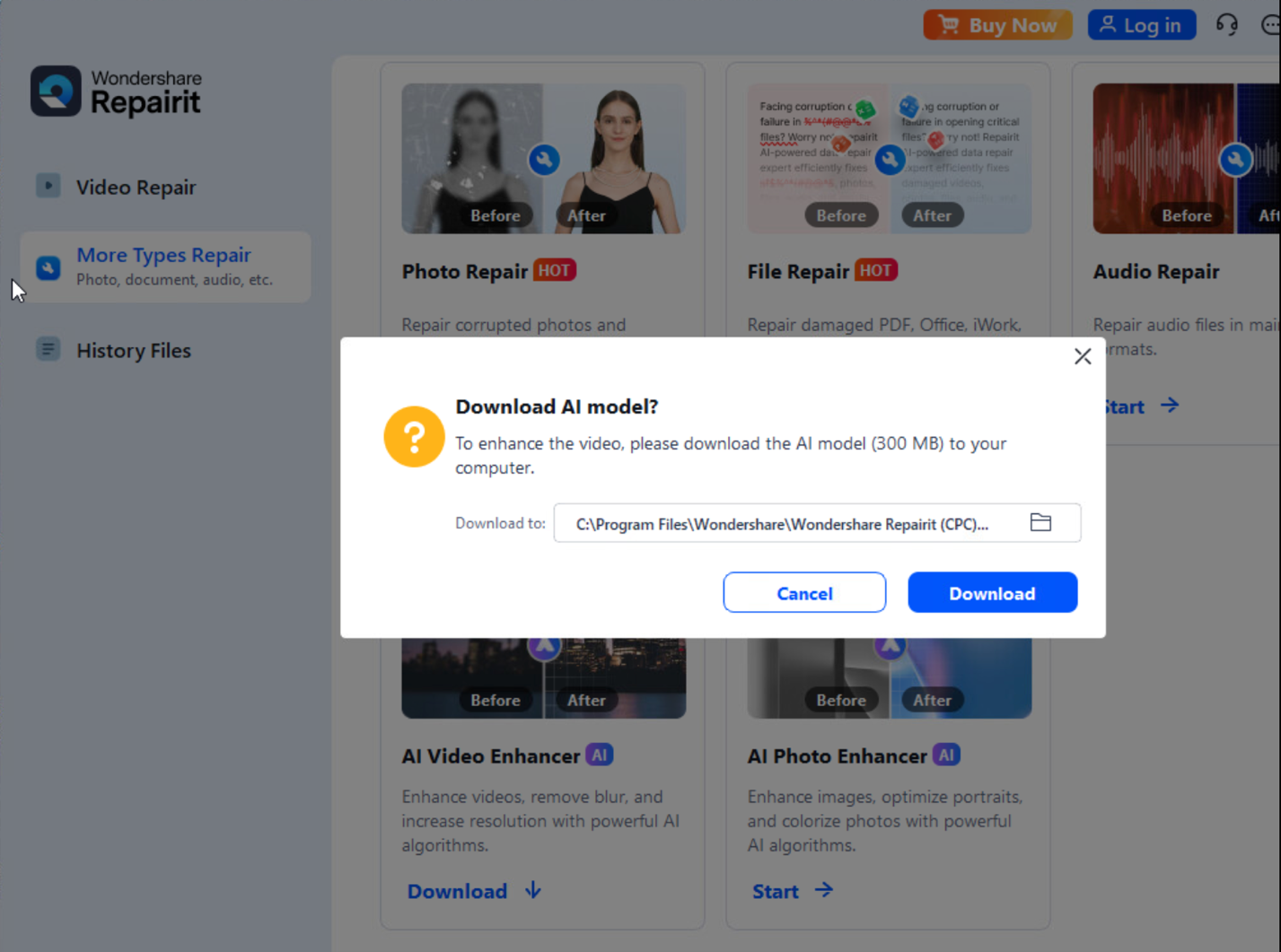

When users interact with Wondershare RepairIt, they are prompted via a pop-up to download AI models directly from the cloud storage bucket to enable local services (Figure 7). The binary is configured with the specific bucket address and the name of the AI model zip file to be downloaded (Figure 8).

Perhaps even more concerning than the exposure of customer data alone is the potential for a sophisticated AI supply chain attack. Because the binary automatically retrieves and executes AI models from the unsecure cloud storage, attackers could modify these models or their configurations and infect users without their knowledge (Figure 9), as in other cases we have covered before.

This opens the door for various attack execution scenarios, in which malicious actors could:

- Replace legitimate AI models or configuration files within the cloud storage.

- Modify software executables and launch supply chain attacks against its customers.

- Compromise models to execute arbitrary code, establish persistent backdoors, or exfiltrate more customer information silently.
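One common mitigation for the model replacement scenarios above is to verify a downloaded model against a digest pinned in the client before loading it. The sketch below assumes the expected SHA-256 digest is delivered through a channel that the writable bucket cannot influence (for example, inside the signed installer). The function names are hypothetical, and this is not a description of how the application works.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the lowercase hexadecimal SHA-256 digest of `data`."""
    return hashlib.sha256(data).hexdigest()


def verify_model(data: bytes, expected_sha256: str) -> bool:
    """Return True only if `data` hashes to the pinned digest.

    hmac.compare_digest performs a constant-time comparison of the two
    ASCII hex strings.
    """
    return hmac.compare_digest(sha256_hex(data), expected_sha256.lower())


if __name__ == "__main__":
    model_bytes = b"stand-in for the downloaded model archive"
    pinned = sha256_hex(model_bytes)  # in practice, shipped with the signed client

    print(verify_model(model_bytes, pinned))                # untouched archive
    print(verify_model(model_bytes + b"tampered", pinned))  # modified archive
```

A pinned digest only helps if it is stored somewhere the compromised credentials cannot overwrite. A digest file kept in the same bucket as the model would offer no protection.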

Real-world impact and severity

The severity of such a scenario cannot be overstated, as a supply chain attack of this nature could impact a large number of users worldwide, delivering malicious payloads through legitimate vendor-signed binaries.

Historical precedent and lessons

Incidents such as the SolarWinds Orion attack or the ASUS ShadowHammer attack illustrate the catastrophic potential of compromised binaries delivered through legitimate supply chain channels. This scenario with Wondershare RepairIt reflects the same risks, amplified by widespread reliance on AI models executed locally (Figures 10 and 11).

Broader implications: Beyond data breaches and AI attacks

Apart from direct customer data exposure and AI model manipulation, several additional critical implications emerge:

- Intellectual property theft. Competitors gaining access to proprietary models and source code could reverse-engineer advanced algorithms, significantly harming the company’s market leadership and economic advantage.

- Regulatory and legal fallout. Under GDPR and similar privacy frameworks, exposed customer data could lead to immense fines, lawsuits, and mandatory disclosures, severely damaging trust and financial stability. A recent high-profile case illustrating these risks involved TikTok, which faced a €530 million penalty from the EU in May due to violations of data privacy regulations.

- Erosion of consumer trust. Security breaches erode consumer confidence dramatically. Trust is hard-earned but easily lost, potentially leading to widespread customer abandonment and substantial long-term economic impact.

- Vendor liability and insurance implications. The financial and operational liability of such breaches extends beyond direct penalties. Insurance claims, loss of vendor agreements, and subsequent vendor blacklisting significantly compound financial damages.

Conclusion

The need for constant innovation fuels an organization’s rush to bring new features to market and stay competitive, but organizations might not foresee the new, unknown ways these features could be used or how their functionality might change in the future. This is how important security implications come to be overlooked. That is why it is crucial to implement a strong security process throughout the organization, including the CI/CD pipeline.

Transparency regarding data usage and processing practices is imperative not only to maintain user trust in AI-powered solutions, but to comply with an evolving regulatory landscape. Companies must bridge the gap between policy and practice by ensuring that their actual operations align with published privacy statements. Continuous review and improvement of security protocols are essential to keep pace with evolving AI development and deployment risks. Only through disciplined governance and security-by-design principles can organizations safeguard both proprietary technologies and customer trust.

Security recommendations

Organizations can proactively prevent security issues like those discussed in this blog entry by leveraging the Artifact Scanner within Trend Vision One™ Code Security. This scanning capability enables pre-runtime detection of vulnerabilities, malware, and secrets in artifacts, including container images, binary files, and source code, so that security teams can identify and remediate issues before they impact production environments.

Adhering to established security best practices is essential for safeguarding software development environments, playing a critical role in preventing the types of serious attack scenarios described in this blog entry. To mitigate potential risks, defenders should apply security protocols such as:

- Implement fine-grained access token permissions. This minimizes the risk of lateral movement and privilege escalation by ensuring each token is limited strictly to only necessary actions.

- Split storage services per use case. By assigning different storage services to specific functions or teams, organizations can better control access and limit the exposure of sensitive data if a particular service is compromised.

- Separate customer data from the software supply chain. Keeping customer data isolated from development tools and processes prevents unintended access or leaks, safeguarding personal and sensitive information from supply chain risks.

- Monitor the usage of storage services and access tokens. Regular monitoring helps quickly identify anomalies, potential breaches, and unauthorized access so that remediation can be done with minimal impact.

- Incorporate DevSecOps standards into the CI/CD pipeline when using cloud services within software products. Integrating security checks and controls early in the development process ensures vulnerabilities are caught and mitigated before reaching production.

- Provide secure code snippets and establish secure defaults to support developers and AI in building secure applications. Making secure choices the default and providing vetted examples helps prevent the introduction of vulnerabilities due to oversight or lack of expertise.

- Follow security best practices throughout the development and deployment lifecycle. This includes practices such as regular patching, vulnerability management, and foundational security hygiene to ensure ongoing protection.
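The monitoring recommendation above can be sketched as a simple allowlist check over storage access logs. The example assumes each log event is a dictionary with `token_id` and `operation` fields and that each token has a known set of expected operations. The field names, token names, and operation names are all hypothetical, and real cloud providers expose their own log schemas.

```python
from collections import Counter
from typing import Iterable, Mapping


def unexpected_operations(
    events: Iterable[Mapping[str, str]],
    expected: Mapping[str, frozenset],
) -> Counter:
    """Count (token_id, operation) pairs that fall outside each token's allowlist.

    Tokens missing from `expected` have an empty allowlist, so every
    operation they perform is reported.
    """
    counts: Counter = Counter()
    for event in events:
        token = event["token_id"]
        operation = event["operation"]
        if operation not in expected.get(token, frozenset()):
            counts[(token, operation)] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical allowlist: the client token should only ever upload.
    expected = {"client-upload-token": frozenset({"PutObject"})}
    events = [
        {"token_id": "client-upload-token", "operation": "PutObject"},
        {"token_id": "client-upload-token", "operation": "ListBucket"},
        {"token_id": "client-upload-token", "operation": "GetObject"},
        {"token_id": "client-upload-token", "operation": "GetObject"},
    ]
    for (token, operation), count in unexpected_operations(events, expected).items():
        print(f"{token}: {count} unexpected {operation} call(s)")
```

Alerting on any non-empty result makes listing or downloading by an upload-only token visible early, which supports the goal of remediation with minimal impact.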