Exploiting AI: How Cybercriminals Misuse and Abuse AI and ML

Download “Malicious Uses and Abuses of Artificial Intelligence”

Trend Micro, United Nations Interregional Crime and Justice Research Institute (UNICRI), and Europol

Artificial intelligence (AI) is swiftly fueling the development of a more dynamic world. AI, a subfield of computer science that is interconnected with other disciplines, promises greater efficiency and higher levels of automation and autonomy. Simply put, it is a dual-use technology at the heart of the fourth industrial revolution. Together with machine learning (ML), a subfield of AI that uses algorithms to find patterns in large volumes of data, AI enables enterprises, organizations, and governments to perform impressive feats that ultimately drive innovation and better business.

The use of both AI and ML in business is widespread. In fact, by 2020, 37% of businesses and organizations had already integrated AI in some form into their systems and processes. With tools powered by these technologies, enterprises are able to better predict customers’ buying behaviors, which contributes to increased revenues. Some enterprises, such as Amazon, which reached a trillion-dollar valuation in 2018, have built highly profitable businesses with the help of ML- and AI-powered tools.

While AI and ML can support businesses, critical infrastructures, and industries as well as help solve some of society’s biggest challenges (including the Covid-19 pandemic), these technologies can also enable a wide range of digital, physical, and political threats to surface. For enterprises and individual users alike to remain protected from malicious actors who are out to misuse and abuse AI, the risks and potential malicious exploitations of AI systems need to be identified and understood.

In our research paper “Malicious Uses and Abuses of Artificial Intelligence,” a joint effort among Trend Micro, the United Nations Interregional Crime and Justice Research Institute (UNICRI), and Europol, we discuss not only the present state of the malicious uses and abuses of AI and ML technologies, but also the plausible future scenarios in which cybercriminals might abuse these technologies for ill gain.

AI and ML Misuses and Abuses at Present

The features that make AI and ML systems integral to businesses — such as providing automated predictions by analyzing large volumes of data and discovering patterns that arise — are the very same features that cybercriminals misuse and abuse for ill gain.

Deepfakes

One of the more popular abuses of AI is the deepfake, which involves the use of AI techniques to craft or manipulate audio and visual content so that it appears authentic. The term is a combination of “deep learning” and “fake media.” Deepfakes are well suited for use in future disinformation campaigns because they are difficult to immediately differentiate from legitimate content, even with the use of technological solutions. Because of the wide use of the internet and social media, deepfakes can reach millions of individuals in different parts of the world at unprecedented speeds.

Deepfakes have great potential to distort reality for many individuals for nefarious purposes. An example of this is an alleged deepfake video that features a Malaysian political aide engaging in sexual relations with a cabinet minister. The video, which was released in 2019, also calls for the cabinet minister to be investigated for alleged corruption. Notably, as a result of this video’s release, the coalition government was destabilized, thus also proving the possible political ramifications of deepfakes. Meanwhile, another example involves a UK-based energy firm that was duped into transferring nearly 200,000 British pounds (approximately US$260,000 as of writing) to a Hungarian bank account after a malicious individual used deepfake audio technology to impersonate the voice of the firm’s CEO in order to authorize the payments.

Because of the potential malicious use of AI-powered deepfakes, it is imperative for people to understand how realistic these can seem and how they can be used maliciously. Ironically, deepfakes can be a useful tool for educating people on their possible misuses. In 2018, BuzzFeed worked together with actor and director Jordan Peele to create a deepfake video of former US president Barack Obama with the aim of raising awareness about the potential harm that deepfakes can wreak and how important it is to exercise caution before believing internet posts, including realistic-looking videos.

AI-Supported Password Guessing

Cybercriminals are employing ML to improve algorithms for guessing users’ passwords. More traditional tools, such as Hashcat and John the Ripper, already exist; they hash candidate password variations and compare the results against the target hash in order to identify the password that corresponds to it. With the use of neural networks and generative adversarial networks (GANs), however, cybercriminals would be able to analyze vast password datasets and generate password variations that fit the statistical distribution of those datasets. In the future, this will lead to more accurate and targeted password guesses and higher chances for profit.
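The hash-comparison principle described above can be illustrated from a defender's point of view: checking whether a stored hash is covered by a list of known-weak passwords. The following is a minimal sketch, not how any particular tool is implemented. The function names, the sample passwords, and the use of unsalted SHA-256 are all assumptions chosen for brevity; real systems should store passwords with a salted, deliberately slow key-derivation function, which is exactly what makes this kind of comparison expensive.

```python
import hashlib
from typing import Iterable, Optional


def sha256_hex(password: str) -> str:
    """Hash a password with unsalted SHA-256 (illustrative assumption only)."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


def find_weak_password(target_hash: str, candidates: Iterable[str]) -> Optional[str]:
    """Return the first candidate whose hash equals target_hash, else None."""
    for candidate in candidates:
        if sha256_hex(candidate) == target_hash:
            return candidate
    return None


# Hypothetical audit: is a stored hash covered by a list of known-weak passwords?
stored_hash = sha256_hex("hello123")
known_weak = ["password", "123456", "hello123"]
match = find_weak_password(stored_hash, known_weak)
```

The better the candidate list reflects how people actually choose passwords, the sooner a match is found, which is why generating candidates that fit the real distribution matters to attackers and why unique, randomly generated passwords matter to defenders.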

On an underground forum post from February 2020, we found a GitHub repository that features a password analysis tool with the capability to parse through 1.4 billion credentials and generate password variation rules.

Human Impersonation on Social Networking Platforms

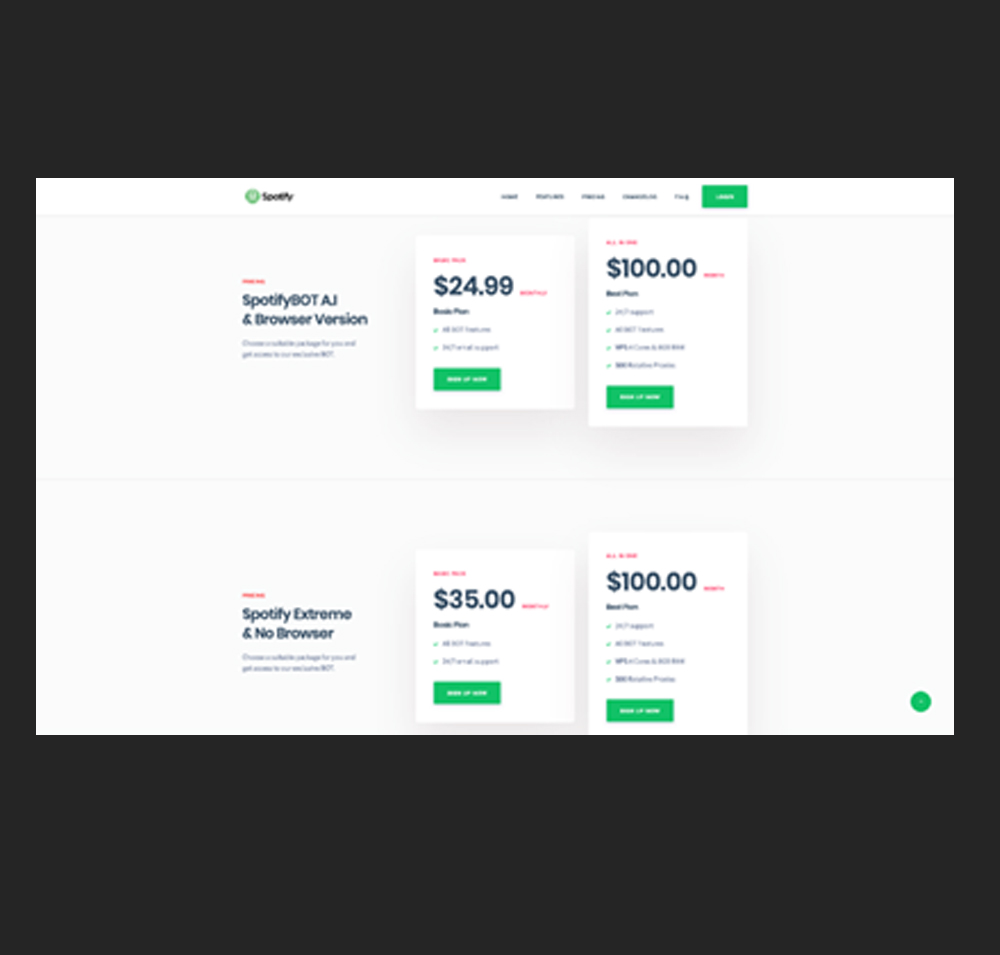

Cybercriminals are also abusing AI to imitate human behavior. For example, they are able to successfully dupe bot detection systems on online platforms such as Spotify by mimicking human-like usage patterns. Through this AI-supported impersonation, cybercriminals can then monetize the malicious system by generating fraudulent streams and traffic for a specific artist.

An AI-supported Spotify bot on a forum called nulled[.]to claims to have the capability to mimic several Spotify users simultaneously. To avoid detection, it makes use of multiple proxies. This bot increases streaming counts (and subsequently, monetization) for specific songs. To further evade detection, it also creates playlists with other songs that follow human-like musical tastes rather than playlists with random songs, as the latter might hint at bot-like behavior.
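To see why playlists of random songs might hint at bot-like behavior, consider one simple signal a detection system could compute: how evenly a playlist is spread across genres. The sketch below is purely illustrative and is not based on any known detection system. The function name, the genre labels, and the choice of Shannon entropy as the signal are all assumptions.

```python
import math
from collections import Counter
from typing import Sequence


def genre_entropy(genres: Sequence[str]) -> float:
    """Shannon entropy (in bits) of a playlist's genre distribution."""
    if not genres:
        return 0.0
    counts = Counter(genres)
    total = len(genres)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Hypothetical playlists: one with a consistent taste, one picked at random.
human_like = ["indie", "indie", "indie", "rock", "indie", "rock", "indie", "indie"]
random_like = ["indie", "metal", "jazz", "opera", "k-pop", "polka", "techno", "folk"]

human_score = genre_entropy(human_like)    # low: taste is concentrated
random_score = genre_entropy(random_like)  # high: every track is a different genre
```

A playlist that follows a coherent taste scores low on a measure like this, so a bot that imitates human listening habits would not stand out on it. Detection therefore has to rely on a combination of signals rather than any single one.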

A thread on a forum called blackhatworld[.]com discusses the possibility of creating an Instagram bot that would be able to create fake accounts, generate likes, and run follow-backs. It is possible that the AI technology applied in this bot can also imitate natural user movements such as selecting and dragging.

An underground forum discussion on Instagram bots

AI-Supported Hacking

Cybercriminals are also weaponizing AI frameworks for hacking vulnerable hosts. For instance, we saw a Torum user who expressed interest in the use of DeepExploit, an ML-enabled penetration testing tool. Additionally, the same user wanted to know how they could let DeepExploit interface with Metasploit, a penetration testing platform for information-gathering, crafting, and exploit-testing tasks.

A user on a darknet forum inquiring about the use of DeepExploit

We saw a discussion thread on rstforums[.]com pertaining to “PWnagotchi 1.0.0,” a tool that was originally developed for Wi-Fi hacking through de-authentication attacks. PWnagotchi 1.0.0 uses a neural network model to improve its hacking performance via a gamification strategy: When the system successfully carries out a de-authentication attack, it gets rewarded and learns to autonomously improve its operation.

A description post for PWnagotchi 1.0.0

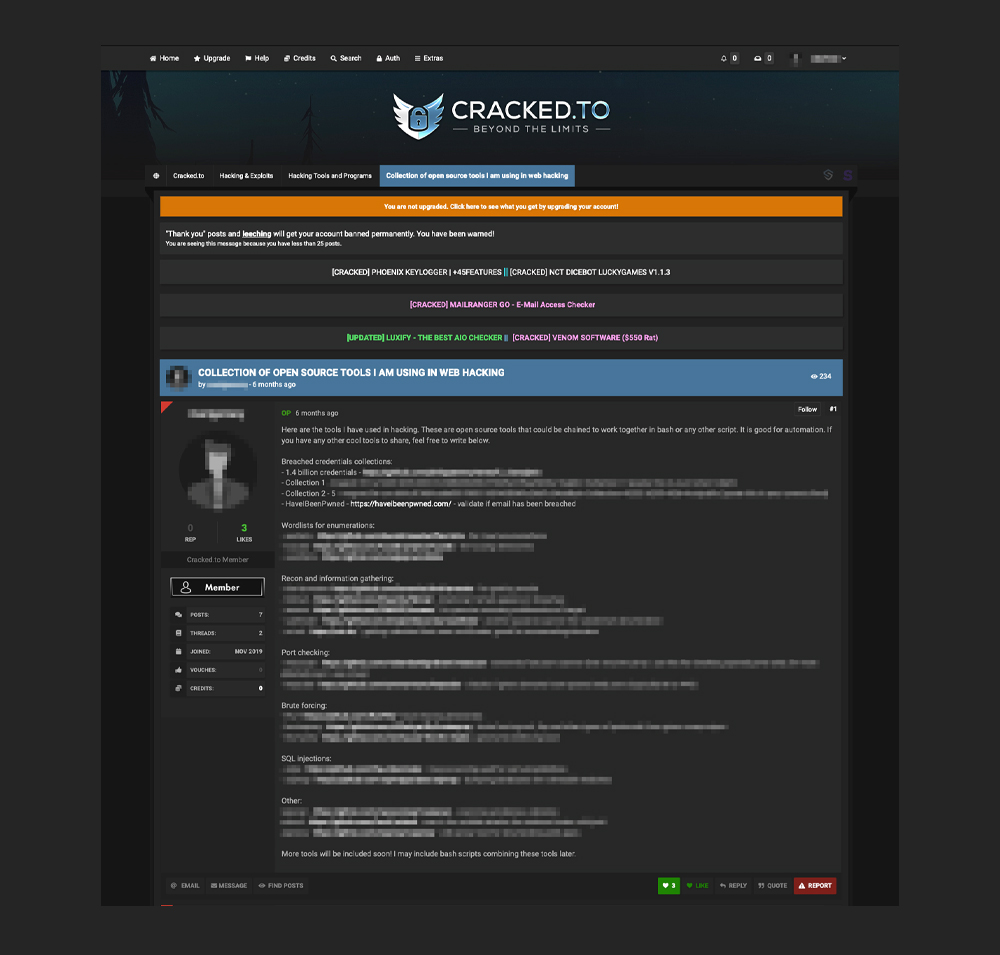

Aside from these, we also saw a post listing a collection of open-source hacking tools on cracked[.]to. Among these tools is AI-based software that can analyze a large dataset of passwords retrieved from data leaks. The software enhances its password-guessing capability by training a GAN to learn how people tend to alter and update passwords, such as changing “hello123” to “h@llo123,” and then to “h@llo!23.”
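The password-update pattern in this example also suggests a simple defensive check: at password-change time, when the user supplies both the old and the new password, a system could reject updates that differ by only a few characters. The sketch below is one possible approach under stated assumptions, not a description of any existing product. The function names and the threshold of two edits are hypothetical, and a plain Levenshtein edit distance is used as the similarity measure.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character edits to turn a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute (or keep if equal)
            ))
        previous = current
    return previous[-1]


def is_predictable_update(old: str, new: str, max_edits: int = 2) -> bool:
    """Flag a new password that is within max_edits of the old one (assumed threshold)."""
    return edit_distance(old, new) <= max_edits


# The updates from the example above are each only one substitution apart.
step_one = edit_distance("hello123", "h@llo123")
step_two = edit_distance("h@llo123", "h@llo!23")
flagged = is_predictable_update("hello123", "h@llo!23")
```

Each update in the example is a single substitution, which is the kind of small, habitual change a model trained on leaked passwords can learn to anticipate.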

AI and ML Misuses and Abuses in the Future

We expect to see criminals exploiting AI in various ways in the future. It is highly likely that cybercriminals will turn to AI with the goal of enhancing the scope and scale of their attacks, evading detection, and abusing AI both as an attack vector and an attack surface.

We foresee that criminals will use AI to victimize organizations via social engineering tactics. Through the use of AI, cybercriminals can automate the first steps of an attack through content generation, improve business intelligence gathering, and speed up the rate at which potential victims and business processes are detected and compromised. This can lead to faster and more accurate defrauding of businesses through various attacks, including phishing and business email compromise (BEC) scams.

AI can also be abused to manipulate cryptocurrency trading practices. For example, we saw a forum post on blackhatworld[.]com about AI-powered bots that can learn successful trading strategies from historic data in order to develop better predictions and trades.

Aside from these, AI could also be used to harm or inflict physical damage on individuals in the future. In fact, AI-powered facial recognition drones carrying a gram of explosive are currently being developed. These drones, which are designed to resemble small birds or insects to look inconspicuous, can be used for micro-targeted or single-person bombings and can be operated via cellular internet.

AI and ML technologies have many positive use cases, including visual perception, speech recognition, language translations, pattern-extraction, and decision-making functions in different fields and industries. However, these technologies are also being abused for criminal and malicious purposes. This is why it remains urgent to gain an understanding of the capabilities, scenarios, and attack vectors that demonstrate how these technologies are being exploited. By working toward such an understanding, we can be better prepared to protect systems, devices, and the general public from advanced attacks and abuses.

More information about the technology behind deepfakes, other misuses and abuses of ML- and AI-powered technologies, and our prediction of how these technologies could be abused in the future can be found in our research paper.
