Download Leaked Today, Exploited for Life: How Social Media Biometric Patterns Affect Your Future

Posting on social media has become ubiquitous not just for people but also for businesses and governments around the world. For ordinary users, it is a tool for sharing personal moments and thoughts, while for some professionals, it has become a way to make money. For companies and organizations, it has become an avenue for advertising and sharing updates. In recent years, the quality of public posts, especially those containing images and videos, has gone up considerably and continues to improve.

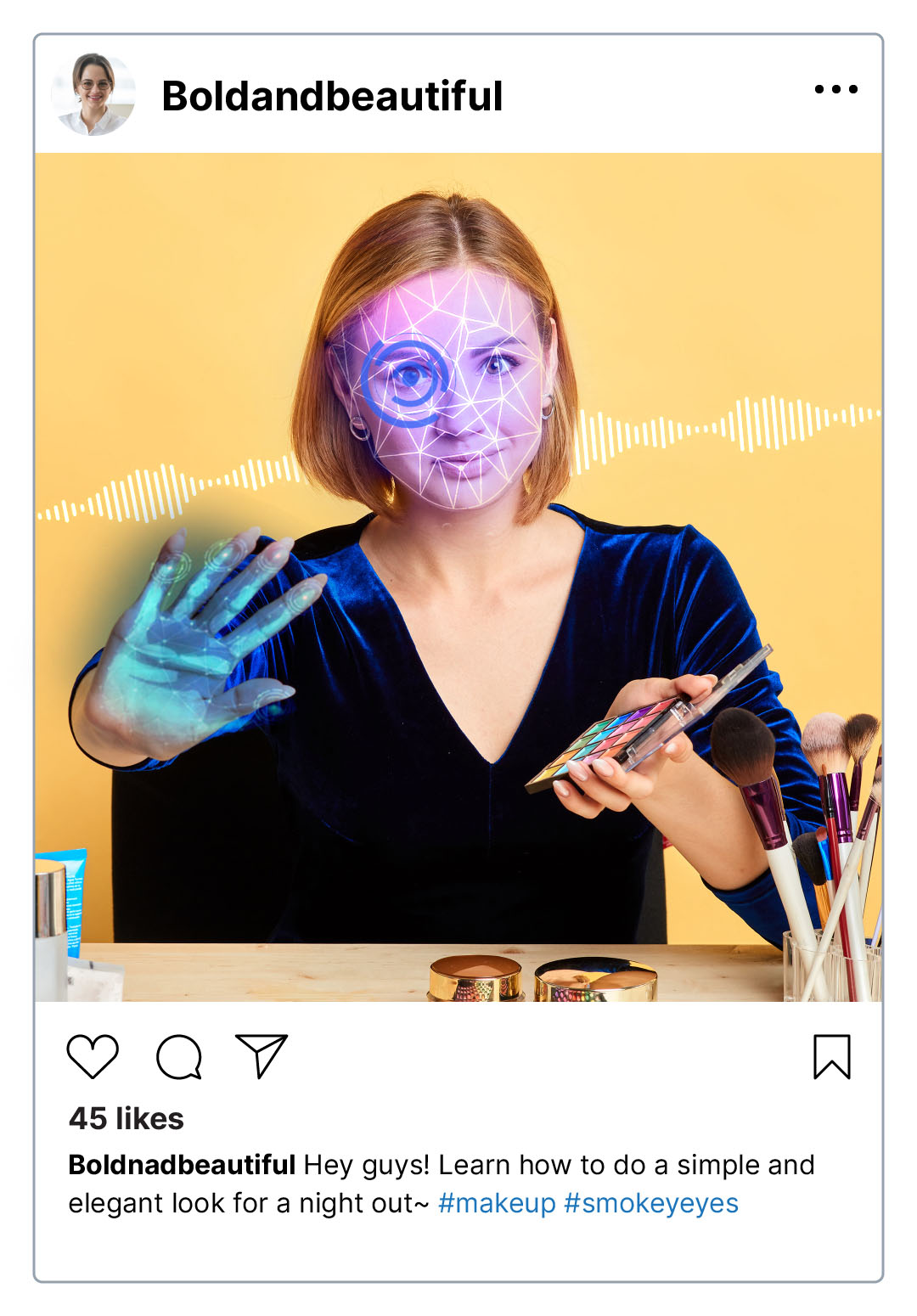

Unfortunately, by sharing personal media content in high resolution, we also unintentionally expose sensitive biometric patterns. This is particularly dangerous because people are not aware of what they are exposing. Through social media trends and popular challenges — such as makeup challenges, crafting videos, building videos, and singing challenges, among others — individuals could be revealing unalterable personal data that can be used against them. We recreated typical social media posts below to show the risks of such exposure.

A #makeup video reveals face, iris, ears, voice, and palm.

Many content creators use top-of-the-line cameras and lighting equipment, and lifestyle and beauty creators often rely on close-up shots. Videos like these can reveal many different biometric patterns.

What can malicious actors do with a high-resolution video of your face?

- Use your face and voice pattern to create a deepfake persona

- Take over an account that uses voice authentication (for example, one that asks the user to say a phrase from a list or a randomly generated phrase)

- Take over an account that uses facial recognition for authentication

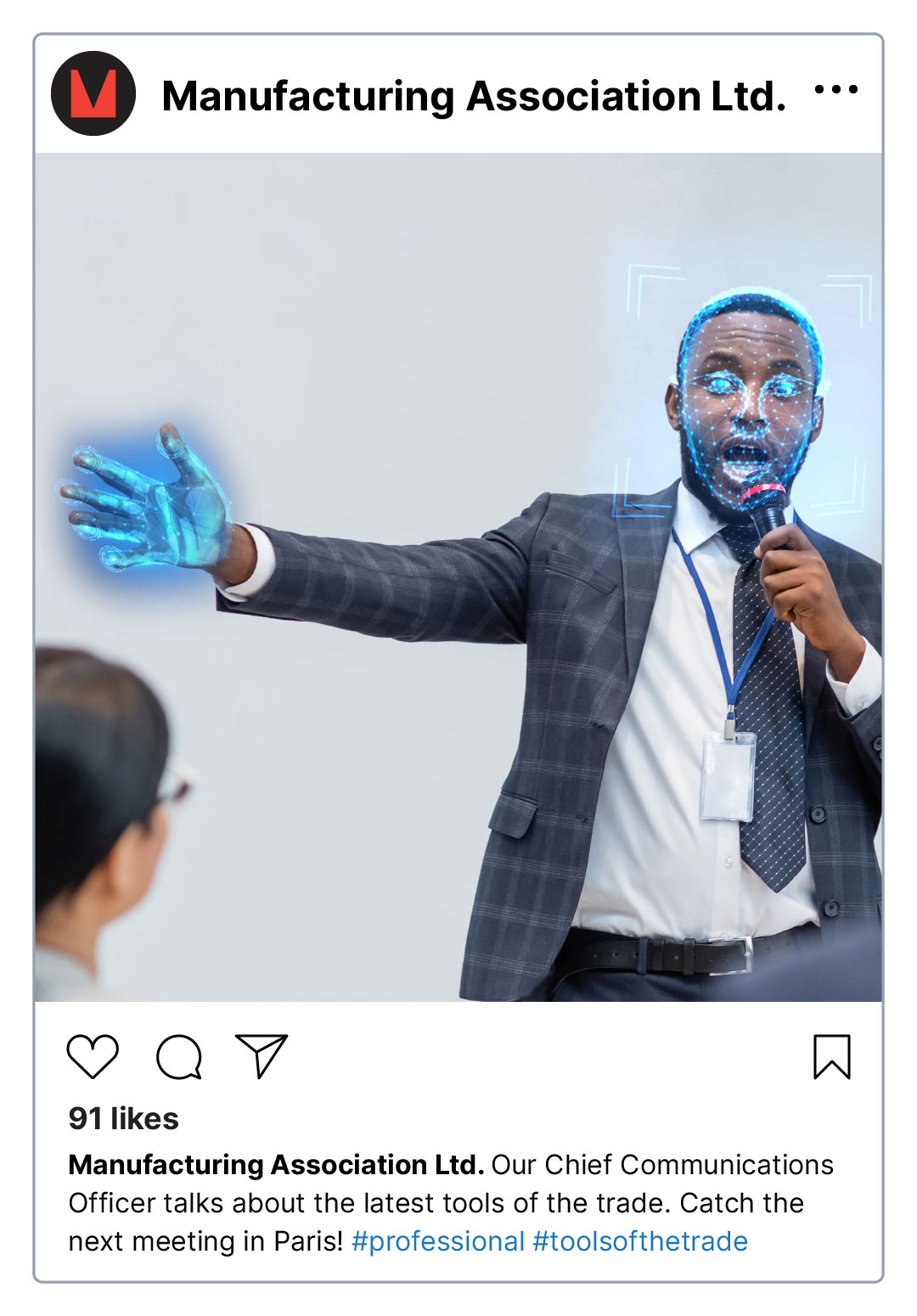

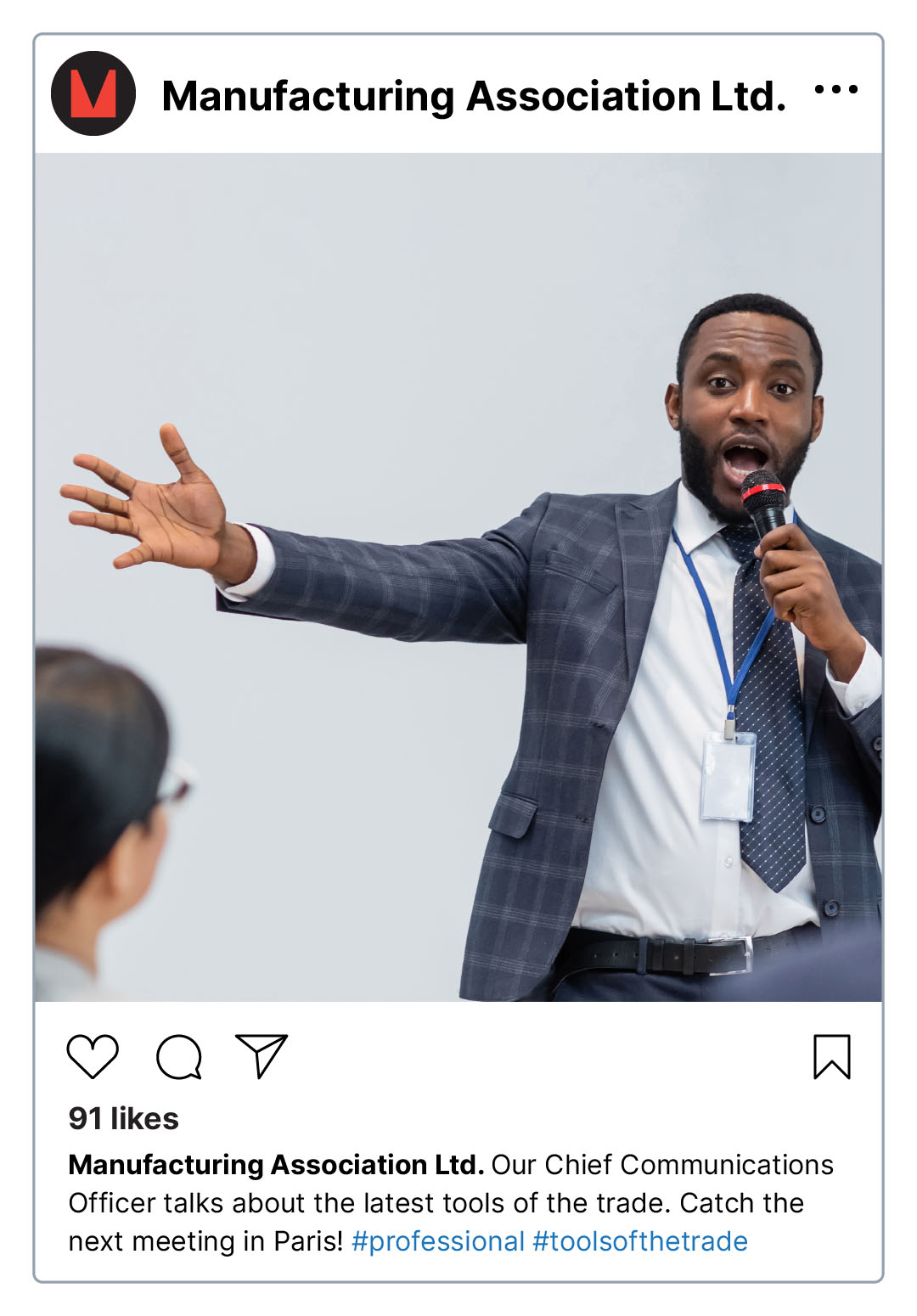

A #professional image reveals an individual’s face, ear shape, and fingerprints.

High-quality pictures make it easier for malicious actors to grab biometric features used for verification and identification.

Threat actors can zoom in on parts of high-resolution photos, such as those taken at professional events, to extract workable biometric data. For instance, fingerprints are clearly visible in the image on the left. Ear shape is another example: while it is rarely used for authentication, it can be used to match a person against CCTV footage.

Metadata on professional images can also give more information about a target, increasing the probability of a successful attack. Aside from social media, images are often released on corporate portals or news sites, and these images usually have specific details about the subjects in the photos.
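Embedded metadata is one part of this that publishers can remove before posting. JPEG files typically store EXIF data (camera model, capture time, sometimes GPS coordinates) and XMP data in APP1 segments. The following is a minimal Python sketch, assuming a well-formed JPEG with no padding bytes between header segments; the function name `strip_app1_segments` is hypothetical. It does not remove other metadata segments such as IPTC (APP13), and it cannot remove the names and captions that portals publish alongside an image.

```python
import struct

SOI = b"\xff\xd8"  # start-of-image marker that begins every JPEG
APP1 = 0xE1        # segment type that carries EXIF and XMP metadata
SOS = 0xDA         # start of scan: entropy-coded image data follows
EOI = 0xD9         # end of image


def strip_app1_segments(jpeg: bytes) -> bytes:
    """Return a copy of a JPEG with every APP1 (EXIF/XMP) segment removed.

    Assumes a well-formed file: each header segment is 0xFF, a marker byte,
    and a big-endian length that counts its own two bytes.
    """
    if not jpeg.startswith(SOI):
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(SOI)
    pos = len(SOI)
    while pos + 1 < len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = jpeg[pos + 1]
        if marker in (SOS, EOI):
            # No more header segments follow: copy image data and EOI verbatim.
            out += jpeg[pos:]
            break
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        end = pos + 2 + length
        if marker != APP1:
            out += jpeg[pos:end]  # keep non-metadata segments unchanged
        pos = end
    return bytes(out)
```

To use it on a real file, read the bytes in binary mode, pass them to `strip_app1_segments`, and write the result to a new file. For routine publishing, a maintained metadata tool is a safer choice than hand-rolled parsing; the sketch only shows where this data lives.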

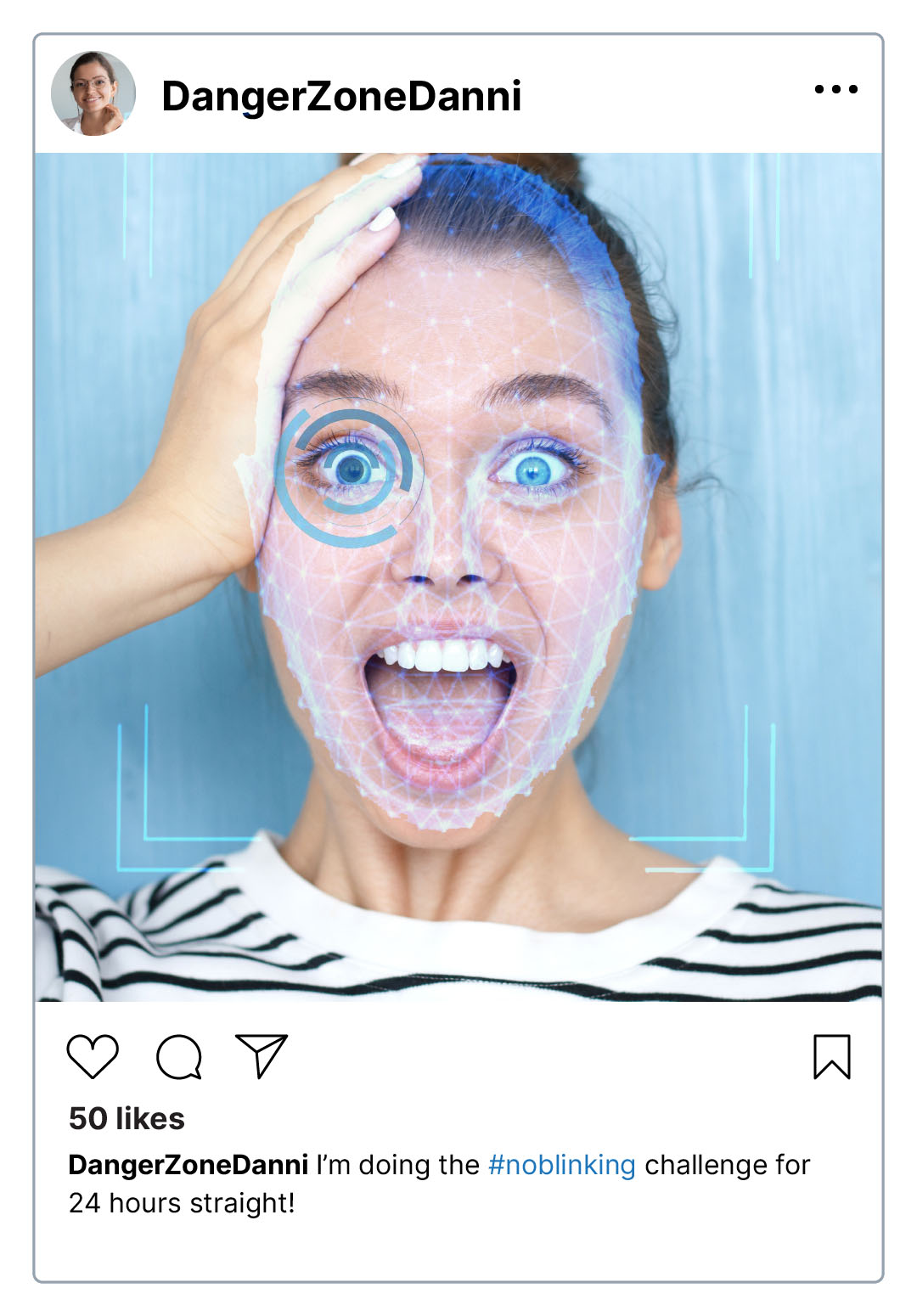

The #noblinking challenge exposes eye shape, iris, and facial features.

Biometric technologies play a much more important role in authentication than they did a decade ago. Many devices and online accounts are now verified with face and eye scanners.

Using images from social media, an attacker could do any of the following:

- Unlock a stolen device that relies on biometric authentication

- Register the victim on platforms that use biometric features to create or access accounts

- Impersonate a victim and use their personal or corporate accounts
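Why can a photo be enough? Many verification systems reduce a face or iris image to a numeric template and accept a new sample when it is similar enough to the enrolled one. The sketch below shows generic threshold matching with cosine similarity, not any vendor's implementation. The three-element vectors and the 0.8 threshold are invented for illustration (real templates have far more dimensions and product-specific thresholds), and the sketch omits the liveness checks that many systems add to reject photos and replays.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("templates must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("templates must be non-zero vectors")
    return dot / (norm_a * norm_b)


def verify(enrolled: list[float], probe: list[float], threshold: float = 0.8) -> bool:
    """Accept the probe if it is close enough to the enrolled template.

    The 0.8 threshold is an illustrative assumption.
    """
    return cosine_similarity(enrolled, probe) >= threshold


# Toy templates: the "photo" probe is a slightly noisy copy of the enrolled face.
enrolled = [0.9, 0.1, 0.4]
probe_from_photo = [0.85, 0.15, 0.42]
someone_else = [0.1, 0.9, -0.3]

print(verify(enrolled, probe_from_photo))  # True
print(verify(enrolled, someone_else))      # False
```

The matcher only asks "is this close enough?", not "where did this sample come from?", which is why a high-quality image harvested from social media is a concern when liveness detection is weak or absent.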

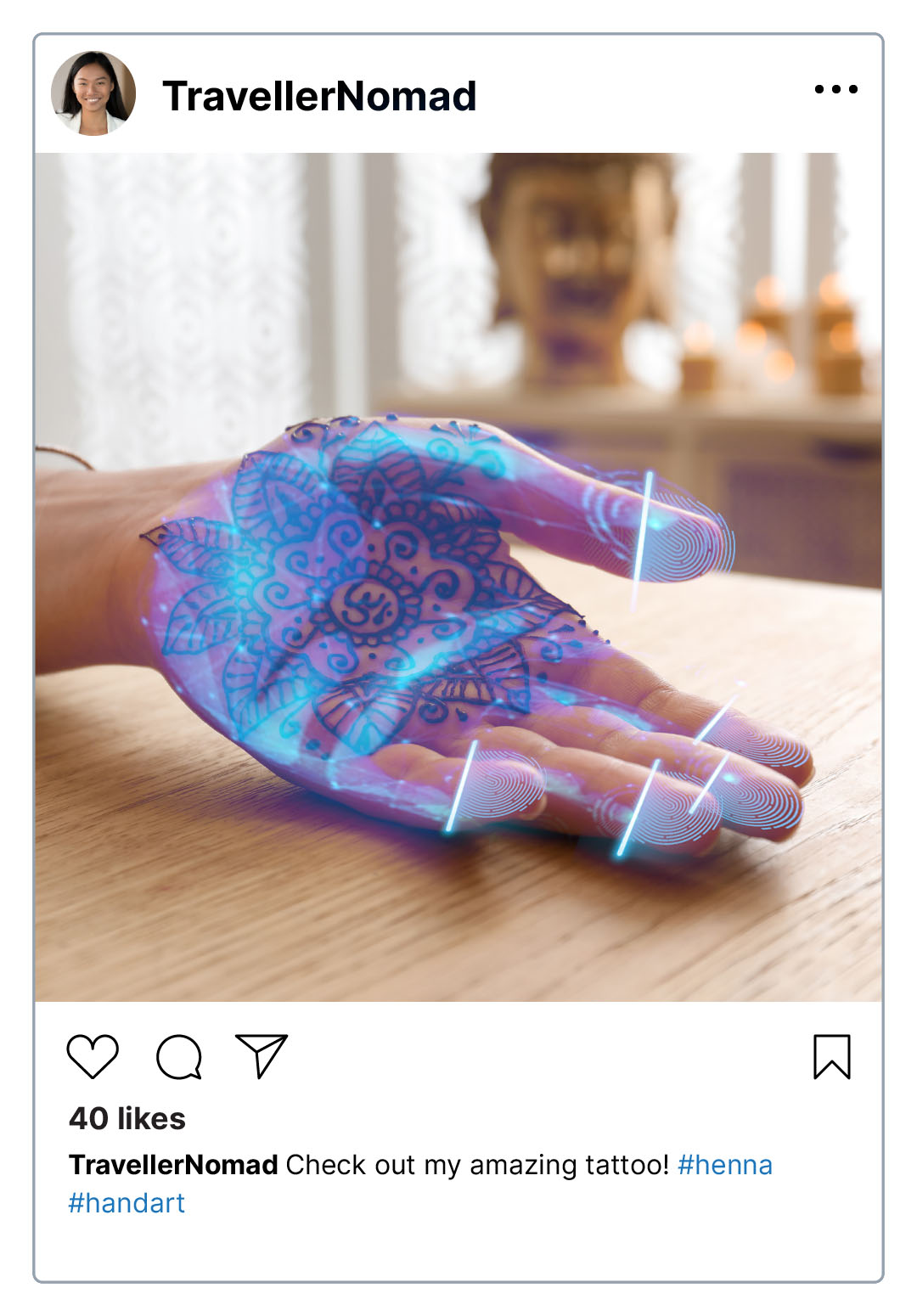

The #handart tag shows fingerprints and hand shape.

Phone cameras and handheld vlogging cameras are improving rapidly, and HD or 4K video has become the norm for social media creators. Unfortunately, some consumer devices are now good enough for criminals to capture usable fingerprints.
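A rough way to judge whether a frame could yield usable fingerprints is to estimate its effective resolution at the subject: pixels across the frame divided by the width of the scene in inches. The Python sketch below is a back-of-the-envelope estimate under simple assumptions (the stated scene width fills the frame, focus is sharp, and compression and motion blur are ignored), so its numbers are upper bounds. For comparison, 500 ppi is widely used as a baseline resolution for fingerprint scanners.

```python
MM_PER_INCH = 25.4


def effective_ppi(frame_width_px: int, scene_width_mm: float) -> float:
    """Pixels per inch at the subject when the frame spans scene_width_mm."""
    return frame_width_px / (scene_width_mm / MM_PER_INCH)


FRAME_4K = 3840                 # horizontal pixels in a 4K UHD frame
FINGERPRINT_BASELINE_PPI = 500  # common scanner baseline, for comparison

for label, width_mm in [("scene 1 m wide", 1000), ("hand close-up 20 cm wide", 200)]:
    ppi = effective_ppi(FRAME_4K, width_mm)
    print(f"4K frame, {label}: {ppi:.0f} ppi (baseline {FINGERPRINT_BASELINE_PPI})")
```

Under these assumptions, a 4K frame of a scene 1 m wide gives about 98 ppi, while a 4K close-up in which the hand spans about 20 cm gives about 488 ppi, close to the scanner baseline. This is why close-up hand content is far more exposed than wide shots.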

This is particularly dangerous because fingerprints have become the go-to verification for many devices (from personal smartphones to company laptops) and accounts. In some countries, you can even use fingerprints to pay for goods and services.

This personal data is publicly available, and in many cases, actively promoted to reach as big an audience as possible. In the case of celebrities and professionals, millions of people will see these videos and images. However, the owners of these biometric features have very limited control over distribution. They don’t know who has accessed the data, how the content was used, or how long the data will be retained.

Biometric data has long been used for security, from crime investigation and forensics to controlling access to protected buildings. Now its use has become mainstream, with hundreds of millions of people using facial recognition and fingerprint scanners daily. Those same hundreds of millions of people could therefore be affected by vulnerabilities and weaknesses in the technologies and processes that rely on their biometric data. Aside from the scenarios listed above, here are some of the risks of social media biometric exposure:

- Messaging and impersonation scams. Using biometric data and information from social media, someone could contact and scam a victim’s friends and family.

- Business email compromise. Attackers can use deepfakes in calls and impersonate coworkers or business partners for money transfers.

- Creating new accounts. Criminals can use exposed data to bypass identity verification services and create accounts in banks and financial institutions, possibly even government services. They could use these for their own malicious activities.

- Blackmail. Using the biometric data exposed on social media, attackers can create more effective material for extortion.

- Disinformation. Malicious actors can use fakes of famous personalities to manipulate public opinion. These schemes can certainly have financial, political, and even reputational repercussions.

- Taking over devices. Attackers can steal or take control of internet-of-things devices or other gadgets that use voice or face recognition.

Aside from biometric patterns, there are unique non-biometric features that could be used to identify or profile people. These include permanent (or nearly permanent) features like birthmarks or tattoos, as well as removable features like clothes and accessories. Such features could be used to profile social status, ethnicity, and age. Criminals could also use the presence of designer clothes, sunglasses, hats, and bags in exposed media to pick out and prioritize victims among the people who posted such content.

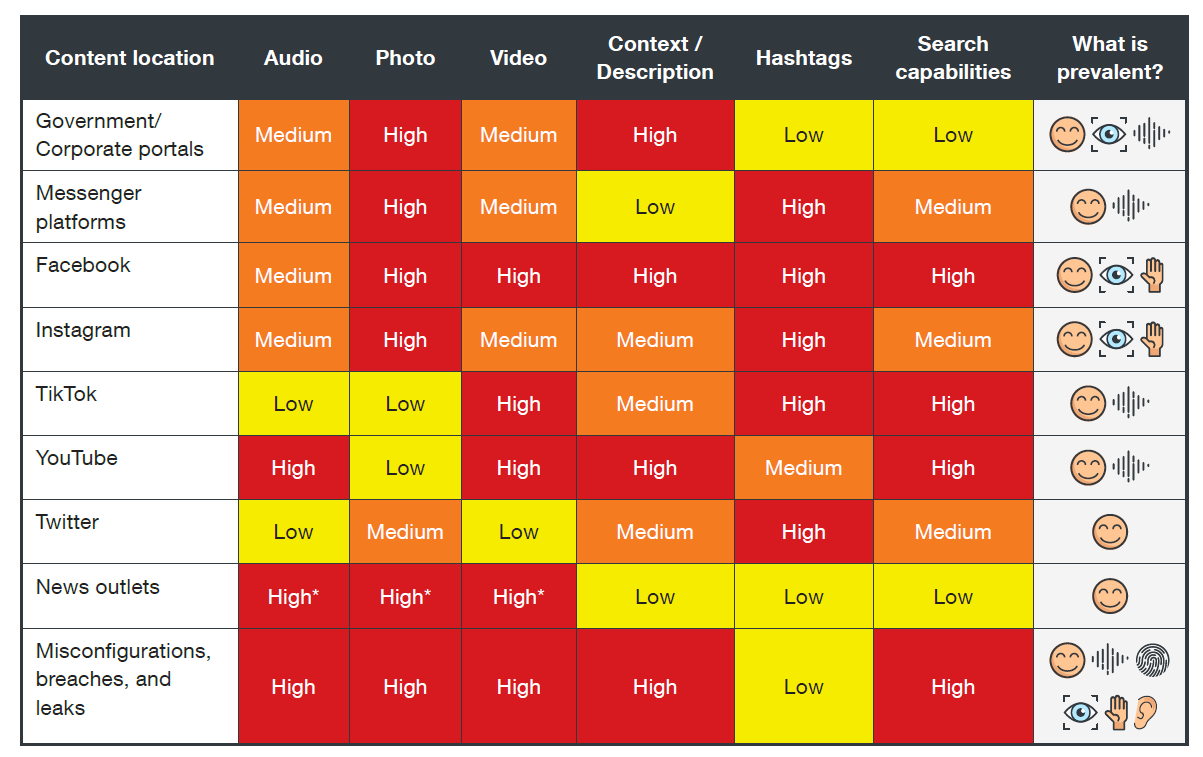

Where can these biometrics be seen?

Biometrics are exposed on many different platforms and through many different media types. We see these patterns publicly on messaging apps (such as group chats on Telegram), social media platforms, government and corporate portals, as well as news and media outlets.

What can we do?

One of the problems with biometric data is that, unlike a password, once it is exposed, it is nearly impossible to change. How can we get a new iris pattern or fingerprint? These are lifelong passwords, and once exposed to the public, an attacker can use them five or even 10 years from now. It is therefore important to know what personal biometric information has already been or could be exposed, and how the publication of such content could affect you.

For ordinary users, we suggest using less-exposed biometric patterns (such as fingerprints) to authenticate or verify sensitive accounts. It also helps to lower the resolution of media published online, or to blur sensitive features such as faces, hands, and fingertips.

For organizations that use biometric patterns, verification rests on three basic factors: something the user has, something the user knows, and something the user is. When these factors are combined, with very specific “somethings” defined per account, they can still be effective for authentication and verification. Lastly, multifactor authentication (MFA) should be standard for any organization handling sensitive data and information.
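The point about combining factors can be made concrete. The Python sketch below counts distinct factor categories rather than individual checks, so a face scan plus a voice check (both "something the user is," and both obtainable from a single makeup video) does not count as multifactor. The method names, the `METHOD_CATEGORY` mapping, and the two-category minimum are illustrative assumptions, not any specific product's policy.

```python
from enum import Enum


class Factor(Enum):
    KNOWLEDGE = "something the user knows"
    POSSESSION = "something the user has"
    INHERENCE = "something the user is"


# Hypothetical mapping of verification methods to factor categories.
METHOD_CATEGORY = {
    "password": Factor.KNOWLEDGE,
    "pin": Factor.KNOWLEDGE,
    "hardware_key": Factor.POSSESSION,
    "authenticator_app": Factor.POSSESSION,
    "face": Factor.INHERENCE,
    "voice": Factor.INHERENCE,
    "fingerprint": Factor.INHERENCE,
}


def satisfies_mfa(passed_methods: list[str], min_categories: int = 2) -> bool:
    """True if the passed methods span at least min_categories distinct factors.

    Unknown method names raise KeyError rather than being silently accepted.
    """
    categories = {METHOD_CATEGORY[m] for m in passed_methods}
    return len(categories) >= min_categories


print(satisfies_mfa(["face", "voice"]))         # False: both are biometrics
print(satisfies_mfa(["face", "hardware_key"]))  # True: biometric + possession
```

Counting categories instead of checks is the design choice that matters here: stacking several biometrics adds little if all of them can be harvested from the same public post.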

To learn more about this threat and how to prevent attacks, read our report “Leaked Today, Exploited for Life: How Social Media Biometric Patterns Affect Your Future”.
