
Introducing ÆSIR: Finding Zero-Day Vulnerabilities at the Speed of AI

TrendAI™’s ÆSIR platform combines AI automation with expert oversight to discover zero-day vulnerabilities in AI infrastructure – 21 CVEs across NVIDIA, Tencent, and MLflow since mid-2025.

Executive summary

TrendAI™ introduces ÆSIR, an AI-empowered security research platform that combines advanced automation with human expertise to proactively identify and remediate zero-day vulnerabilities in foundational AI infrastructure. Since mid-2025, ÆSIR has uncovered 21 CVEs, many of them rated critical, across industry-leading platforms including NVIDIA, Tencent, MLflow, and MCP tooling. This track record underscores ÆSIR’s ability to operate at the scale and speed required to protect the rapidly evolving AI ecosystem.

The platform leverages two core components: MIMIR for real-time threat intelligence and FENRIR for zero-day vulnerability discovery. Together, these enable TrendAI™ to scan massive codebases in hours, prioritize the highest-impact vulnerabilities, and ensure robust, continuous protection for customers. Powered by ÆSIR, TrendAI™’s responsible disclosure process sees to it that all vulnerabilities are not only reported but also fully remediated, including patch bypass verification, to further strengthen customers' defenses.

ÆSIR closes the gap between the speed of AI development and the pace of security research: By combining machine-speed automation with our researchers’ expert oversight, it helps TrendAI™ experts deliver rapid, high-quality vulnerability discovery and lifecycle management for critical AI infrastructure.

Every step involves human direction of ÆSIR's AI resources. While AI agents accelerate codebase analysis, human experts direct the research, validate ÆSIR's findings, and manage the disclosure of discovered vulnerabilities. Researchers investigate issues flagged by ÆSIR, assess their real-world impact, and drive responsible coordination with vendors. Patch effectiveness is then verified through both AI and human analysis to confirm full remediation and identify any bypasses.

TrendAI™ unveils ÆSIR

"AI factories will be the critical infrastructure of the 21st century." – Jensen Huang, CES 2025

To secure the future, TrendAI™ unveils ÆSIR (AI-Enhanced Security Intelligence & Research). It supercharges TrendAI™ analysts, vulnerability researchers, and threat researchers with AI agents that operate at machine speed. These agents scan codebases in hours instead of weeks, correlate threat intelligence across thousands of sources, and surface the highest-priority targets for human and agentic investigation, all while creating protections for TrendAI™ customers.

ÆSIR is our answer to questions every security professional should be asking:

- Who secures the AI that will power the next generation of compute?

- What is the future of AI and humans in vulnerability research?

The answer involves AI itself, but not the way you might think.

The scale of the challenge

In 2025, more than 48,000 CVEs were published – a 38% increase from 2023. The number of vulnerabilities continues to rise in our increasingly connected world. Huang himself acknowledged the acceleration at the GTC 2025 event: "The computation we need at this point is easily 100 times more than we thought we needed." At the same time, global AI spending is projected to reach US$1.5 trillion in 2025 and exceed US$2 trillion by 2026, according to the World Economic Forum. Enterprise spending on generative AI alone jumped from US$11.5 billion in 2024 to US$37 billion in 2025, a 3.2-fold increase in a single year. This explosion of AI investment, and of the computation needed to supply the AI ecosystem, creates new attack surfaces faster than traditional security research can address them. That is on top of the growing number of bugs in more traditional software.

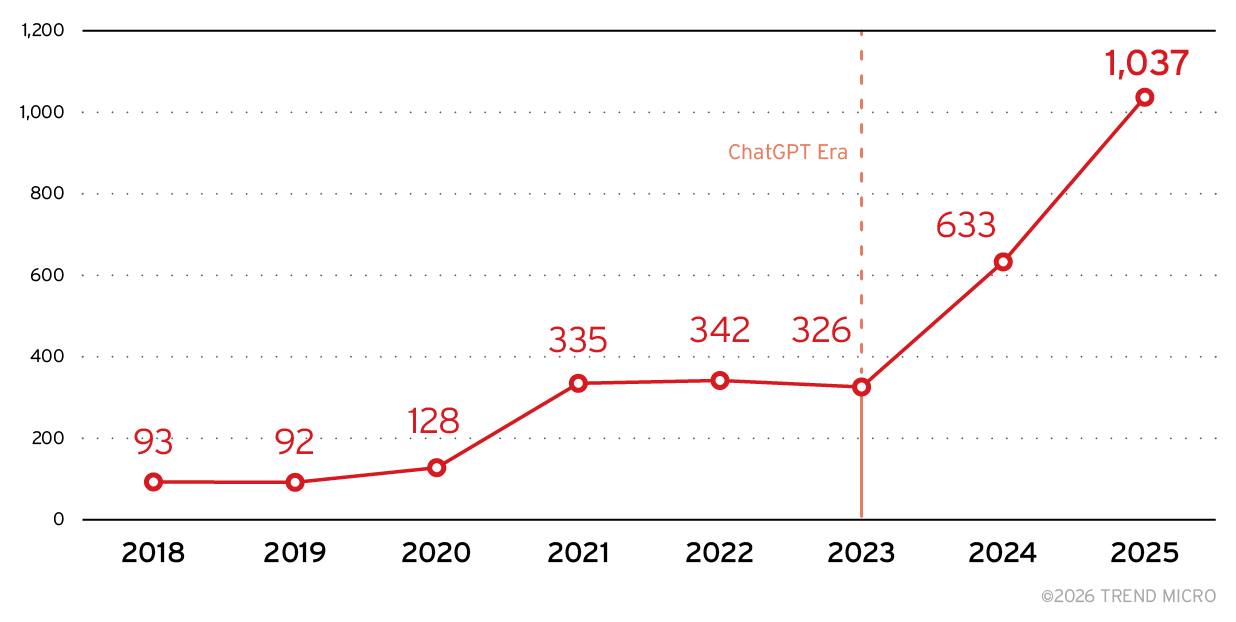

The surge in AI-specific vulnerabilities tells an even more dramatic story. Analysis of 2,986 AI-related CVEs verified by large language models (LLMs) reveals an unmistakable inflection point (Figure 1). From 2018 to 2022, AI vulnerabilities grew at a steady but manageable pace, from roughly 50 CVEs in 2018 to approximately 275 by 2022.

Then came the ChatGPT era, and the mainstreaming of LLMs triggered an exponential acceleration beginning in late 2022. AI CVEs rose from around 300 in 2023 to over 450 in 2024, and then to over 1,000 in 2025. That is roughly a doubling in a single year, and the trend shows no signs of slowing. The correlation is clear: as AI systems move from research labs into production environments, their vulnerabilities move from theoretical concerns to active threats.
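As a quick sanity check, the year-over-year growth implied by the approximate counts quoted above can be computed directly. This sketch simply reuses those rounded figures and treats "over 450" and "over 1,000" as 450 and 1,000. The percentages are therefore approximations, not results from the underlying dataset.

```python
# Approximate AI CVE counts per year, as quoted in the text.
# "Over 450" and "over 1,000" are treated as 450 and 1,000, so the
# percentages below are rough approximations only.
ai_cves = {2022: 275, 2023: 300, 2024: 450, 2025: 1000}


def yoy_growth(counts: dict[int, int]) -> dict[int, float]:
    """Percentage change versus the previous year, for every year after the first."""
    years = sorted(counts)
    return {
        year: (counts[year] / counts[prev] - 1) * 100
        for prev, year in zip(years, years[1:])
    }


for year, pct in yoy_growth(ai_cves).items():
    print(f"{year}: {pct:+.0f}%")
# 2023: +9%
# 2024: +50%
# 2025: +122%
```

With these rounded inputs, most of the acceleration falls in the last step: 2025 is a bit more than twice the 2024 figure.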

The cybersecurity implications are profound. The AI cybersecurity market reached US$26.29 billion in 2024 and is projected to hit US$109.33 billion by 2032. The question remains: who's finding the vulnerabilities in the AI systems themselves?

ÆSIR: Securing AI at the speed of AI

ÆSIR represents TrendAI™'s strategic investment in agentic security research.

The platform consists of two specialized components – MIMIR and FENRIR, named after figures from Norse mythology who embody the capabilities they provide – working in concert under human oversight.

MIMIR

MIMIR is named after Mímir, guardian of the Well of Wisdom (Mímisbrunnr) beneath Yggdrasil, from which Odin drank after sacrificing an eye to gain knowledge. As the intelligence backbone of ÆSIR, MIMIR continuously monitors the global vulnerability landscape. It tracks the thousands of CVEs published annually and uses AI agents to perform autonomous security research, correlate threat intelligence, and prioritize vulnerabilities. The system separates signal from noise and delivers actionable intelligence to teams of vulnerability researchers, threat researchers, and detection engineers within Trend.

FENRIR

FENRIR, named after the great wolf of Norse legend whose hunting prowess was unmatched, handles zero-day vulnerability discovery and agentic triage. It analyzes source code to identify patterns consistent with known vulnerability classes (such as deserialization flaws, authentication weaknesses, and injection points) and surfaces those candidates for further review. It then prioritizes the resulting vulnerability intelligence by potential severity, exploitability, and internal metrics.
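FENRIR's internals are not public, and it relies on AI agents rather than fixed rules. This sketch illustrates, at the simplest level, what "patterns consistent with known vulnerability classes" can mean. It uses Python's standard `ast` module to flag calls to a small, hypothetical list of risky sinks. The `SINKS` table and the `call_name`/`find_candidates` helpers are illustrative names, not part of any TrendAI™ tooling. Every hit is only a candidate. Whether attacker-controlled data actually reaches the call is exactly what human triage has to establish.

```python
import ast

# Hypothetical sink list: dotted call name -> vulnerability class.
SINKS = {
    "pickle.load": "deserialization",
    "pickle.loads": "deserialization",
    "torch.load": "deserialization",  # falls back to full pickle when weights_only=False
    "eval": "code injection",
    "exec": "code injection",
    "os.system": "command injection",
}


def call_name(node: ast.Call) -> str:
    """Return a dotted name such as 'pickle.loads' for simple call targets."""
    parts = []
    func = node.func
    while isinstance(func, ast.Attribute):
        parts.append(func.attr)
        func = func.value
    if isinstance(func, ast.Name):
        parts.append(func.id)
        return ".".join(reversed(parts))
    return ""  # e.g. calls on call results: foo()()


def find_candidates(source: str, filename: str = "<input>"):
    """Parse source (without running it) and list calls that match a known sink."""
    tree = ast.parse(source, filename)
    hits = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            name = call_name(node)
            if name in SINKS:
                hits.append((filename, node.lineno, name, SINKS[name]))
    return sorted(hits, key=lambda hit: hit[1])


sample = """
import pickle
import torch

def restore(path):
    return torch.load(path)

def handle(msg):
    return pickle.loads(msg)
"""

for hit in find_candidates(sample, "example.py"):
    print(hit)
```

A purely syntactic match like this over-reports and under-reports. It cannot tell whether `path` or `msg` is attacker-controlled, and it misses aliased imports. That gap is why candidates go to researchers rather than straight to vendors.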

However, these components don't operate in isolation. MIMIR and FENRIR form a bidirectional intelligence loop that amplifies both offensive and defensive capabilities:

- From intelligence to discovery. When MIMIR identifies vulnerability patterns being actively exploited in the wild, such as a surge in deserialization attacks or authentication bypasses, it feeds this intelligence to FENRIR. FENRIR then prioritizes hunting for similar vulnerability classes in new targets. The NVIDIA Isaac GR00T vulnerabilities emerged from exactly this kind of pattern recognition: MIMIR's analysis of exploitation trends informed FENRIR's focus on deserialization and authentication weaknesses.

- From discovery to protection. When FENRIR discovers new zero-day vulnerabilities, they become n-day vulnerabilities once disclosed. MIMIR tracks these through their lifecycle: monitoring exploitation attempts, patch availability, and bypass discoveries. This synthesis of proactive threat intelligence powers the TrendAI Vision One™ platform, enabling faster protection for customers. When we found the Isaac GR00T patch bypasses, MIMIR immediately updated its intelligence to flag organizations still running vulnerable versions and accelerated detection development across TrendAI Vision One™.

- Sustained protection and vigilance. MIMIR continuously monitors the threat landscape for new exploits and techniques that target n-day vulnerabilities, as well as for exploitation patterns in the wild. For example, MIMIR has tracked and triaged more than 140 proof-of-concept exploits and multiple in-the-wild campaigns exploiting CVE-2025-55182 (React2Shell).

This creates a virtuous cycle where offensive research informs defensive intelligence, defensive intelligence guides offensive research priorities, and both feed actionable threat intelligence directly into our customer protections as well as numerous TrendAI™ threat hunting initiatives.
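TrendAI™ has not published how FENRIR weighs severity, exploitability, and MIMIR's intelligence. This sketch shows one hypothetical way to express the "intelligence to discovery" feedback. A simple score multiplies estimated severity by exploitability, then boosts vulnerability classes currently being exploited in the wild. The `Candidate` fields, the activity map, and the boost factor are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    target: str            # hypothetical target name
    vuln_class: str        # e.g. "deserialization"
    cvss: float            # estimated severity, 0.0-10.0
    exploitability: float  # estimated likelihood of reliable exploitation, 0.0-1.0


def prioritize(candidates, itw_activity, boost=0.5):
    """Rank candidates by severity x exploitability, boosted for vulnerability
    classes that threat intelligence currently sees exploited in the wild.

    itw_activity maps a vulnerability class to a 0.0-1.0 activity level
    (a hypothetical stand-in for the signal MIMIR feeds to FENRIR).
    """

    def score(c: Candidate) -> float:
        in_the_wild = itw_activity.get(c.vuln_class, 0.0)
        return c.cvss * c.exploitability * (1 + boost * in_the_wild)

    return sorted(candidates, key=score, reverse=True)


queue = [
    Candidate("lib-a", "deserialization", 9.8, 0.6),
    Candidate("lib-b", "auth-bypass", 7.3, 0.9),
    Candidate("lib-c", "xss", 6.1, 0.9),
]

# Without intelligence input, lib-b ranks first. A surge in deserialization
# exploitation moves lib-a to the top of the hunting queue.
print([c.target for c in prioritize(queue, {})])
print([c.target for c in prioritize(queue, {"deserialization": 0.6, "auth-bypass": 0.3})])
```

A multiplicative boost keeps severity and exploitability dominant. In-the-wild activity only reorders candidates that are already close, rather than promoting low-impact findings.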

Every step involves human direction of the AI resources:

- Discovery - Researchers direct the analysis, choose targets, and validate findings. AI generates leads; humans make judgments about what to pursue.

- Triage - When FENRIR flags a potential vulnerability, human researchers investigate. They verify that the bug is real, assess its actual impact, and determine whether it affects production systems or exists only in theoretical scenarios. This helps weed out the low-quality, unverified findings ("slop") produced by other techniques.

- Disclosure - Every vulnerability we report goes through the established disclosure process of TrendAI™ Zero Day Initiative™ (ZDI). We prepare comprehensive technical documentation and develop proof-of-concept demonstrations. We engage constructively with vendors.

- Follow-through - When vendors release patches, our researchers – augmented with AI-enhanced security research – evaluate whether the fixes are complete. When they are not, we work with vendors on improved remediations.
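The four steps above behave like a small workflow in which AI can propose a move but only a person can approve it. This sketch is a hypothetical model of that workflow, not ZDI's actual tooling. It encodes the stages as a state machine and requires a named human approver for every transition. Follow-through can loop back into disclosure when a patch bypass turns up, as happened with Isaac GR00T. The `Stage` names and the `advance` helper are illustrative.

```python
from enum import Enum
from typing import Optional


class Stage(Enum):
    DISCOVERY = "discovery"
    TRIAGE = "triage"
    DISCLOSURE = "disclosure"
    FOLLOW_THROUGH = "follow-through"
    CLOSED = "closed"


# Allowed transitions. Triage can close a lead that turns out not to be real;
# a bypass found during follow-through sends the issue back to disclosure.
TRANSITIONS = {
    Stage.DISCOVERY: {Stage.TRIAGE},
    Stage.TRIAGE: {Stage.DISCLOSURE, Stage.CLOSED},
    Stage.DISCLOSURE: {Stage.FOLLOW_THROUGH},
    Stage.FOLLOW_THROUGH: {Stage.DISCLOSURE, Stage.CLOSED},
    Stage.CLOSED: set(),
}


def advance(current: Stage, target: Stage, approved_by: Optional[str]) -> Stage:
    """Move an issue to the next stage; every move needs a named human reviewer."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    if not approved_by:
        raise PermissionError("transition requires human approval")
    return target


# Example: an original report, then a patch-bypass cycle, then closure.
stage = Stage.DISCOVERY
for nxt in (Stage.TRIAGE, Stage.DISCLOSURE, Stage.FOLLOW_THROUGH,
            Stage.DISCLOSURE, Stage.FOLLOW_THROUGH, Stage.CLOSED):
    stage = advance(stage, nxt, approved_by="researcher")
print(stage)  # Stage.CLOSED
```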

Why library security matters

At GTC, Jensen Huang described the complexity of AI stacks: "The entire stack is incredibly complex." With over 900 CUDA-X libraries, AI models, and acceleration frameworks, there are attack surfaces at every layer. And with 6 million developers, hundreds of libraries, and continuous updates, traditional security research cannot keep pace.

The AI security conversation focuses heavily on model behavior, such as prompt injection, adversarial examples, and training data poisoning. These are real concerns, but not the most urgent. Model attacks require understanding specific architectures and often produce unreliable results. A jailbroken chatbot might generate inappropriate content – embarrassing, but recoverable.

Library attacks are different. They leverage traditional software vulnerabilities that security researchers have understood for decades. A deserialization RCE provides arbitrary code execution. An authentication bypass opens systems to unauthorized access. These attacks are not probabilistic; they are deterministic. They do not require an understanding of the AI, only of the software.

Deserialization flaws don't care what neural network you're training. It doesn't matter whether you're building a helpful robot or a harmful one. The vulnerability exists in the serialization layer, so any system using that code path is vulnerable.
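This sketch makes the "deterministic" point concrete using Python's standard `pickle` module, the serialization format underneath many model checkpoint loaders. It is a generic illustration of the vulnerability class, not code from any of the affected projects. Unpickling attacker-supplied bytes invokes whatever callable those bytes name, regardless of what model the data claims to contain. A harmless `eval("40 + 2")` stands in for a real payload. The allow-list unpickler follows the restricted `find_class` pattern described in the Python documentation; the allow-list itself is a hypothetical example.

```python
import io
import pickle
from collections import OrderedDict


class Payload:
    """Attacker-controlled object: its pickle stores a function call, not data."""

    def __reduce__(self):
        # On load, pickle will call builtins.eval("40 + 2").
        # A real attacker would pick something like os.system instead.
        return (eval, ("40 + 2",))


def naive_load(blob: bytes):
    # Vulnerable pattern: plain pickle on untrusted bytes.
    return pickle.loads(blob)


class RestrictedUnpickler(pickle.Unpickler):
    """Allow-list unpickler, following the pattern in the Python pickle docs."""

    # Hypothetical allow-list for a checkpoint format that only needs
    # OrderedDict (lists, floats and strings use dedicated opcodes and
    # never reach find_class).
    ALLOWED = {("collections", "OrderedDict")}

    def find_class(self, module, name):
        if (module, name) in self.ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def safe_load(blob: bytes):
    return RestrictedUnpickler(io.BytesIO(blob)).load()


malicious = pickle.dumps(Payload())
print(naive_load(malicious))  # 42: eval ran while "loading data"

try:
    safe_load(malicious)
except pickle.UnpicklingError as exc:
    print("blocked:", exc)

print(safe_load(pickle.dumps(OrderedDict(weights=[0.1, 0.2]))))
```

For PyTorch checkpoints specifically, `torch.load(..., weights_only=True)` applies a comparable restriction to the objects the unpickler may construct.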

"Physical AI is an AI that acts in the physical world," Huang explained at CES. "Robots will be the embodiment of AI." When the libraries powering those robots contain critical vulnerabilities, we are not just talking about data breaches. We are talking about vulnerabilities in systems designed to operate alongside humans.

The AI industry is building critical infrastructure. Power grids have security requirements. Financial systems have regulations. The software libraries powering AI – the foundations upon which trillion-dollar industries are being built – deserve the same attention.

A human researcher might spend weeks analyzing a complex AI framework or library. FENRIR can identify candidate vulnerabilities across entire codebases in hours, surfacing the most promising leads for human investigation. The 21 CVEs across NVIDIA, Tencent, MLflow, and MCP infrastructure show that these are exactly the kinds of systems that benefit from AI-assisted security analysis, and exactly the kinds of systems that attackers will increasingly target.

Four NVIDIA Isaac GR00T vulnerabilities

The results show the value of this approach. We will use the two recent patch cycles for NVIDIA Isaac GR00T as a case study.

In May 2025, FENRIR identified security concerns in NVIDIA's Isaac GR00T architecture. Our AI-assisted analysis flagged patterns consistent with deserialization vulnerabilities and authentication weaknesses – the kind of patterns that would take human researchers significantly longer to identify across large, complex codebases.

At that point, TrendAI™ researchers investigated and confirmed two distinct vulnerabilities.

ZDI-25-847 (CVE-2025-23296)

ZDI-25-847 (CVE-2025-23296) is a critical deserialization of untrusted data vulnerability in the “TorchSerializer” class that results in remote code execution. The bug was rated CVSS 9.8. As our advisory states: “The specific flaw exists within the ‘TorchSerializer’ class. The issue results from the lack of proper validation of user-supplied data, which can result in deserialization of untrusted data. An attacker can leverage this vulnerability to execute code in the context of root.” NVIDIA initially patched this bug in August 2025.

ZDI-25-848 (CVE-2025-23296)

ZDI-25-848 (CVE-2025-23296) is an authentication bypass vulnerability in the “secure_server” component. The bug was rated CVSS 7.3. As our advisory states: “The issue results from the lack of authentication prior to allowing access to functionality. An attacker can leverage this vulnerability to bypass authentication on the system.” This bug was also patched in August 2025.

We reported these findings through TrendAI™ ZDI's established coordinated disclosure process. NVIDIA responded promptly, acknowledged our researchers, and released patches in a timely manner. We thought the story ended there.
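The secure_server advisory describes the classic "missing authentication for critical function" pattern (CWE-306). This sketch is a generic, hypothetical Python dispatcher, not the Isaac GR00T code. The vulnerable handler dispatches requests without ever checking a credential. The fixed handler verifies a shared token first, using `hmac.compare_digest` for a constant-time comparison. The class, endpoint names, and token scheme are all illustrative assumptions.

```python
import hmac
import secrets
from typing import Callable, Dict, Optional


class RequestServer:
    """Hypothetical dispatcher illustrating CWE-306; not NVIDIA's secure_server."""

    def __init__(self, api_token: str):
        self._token = api_token.encode()
        self._handlers: Dict[str, Callable[[], str]] = {
            "status": lambda: "ok",
            "run_job": lambda: "action executed",  # privileged functionality
        }

    def handle_vulnerable(self, endpoint: str, token: Optional[str]) -> str:
        # Missing authentication: the token is accepted but never checked.
        return self._handlers[endpoint]()

    def handle(self, endpoint: str, token: Optional[str]) -> str:
        # Authenticate before any functionality is reachable; compare_digest
        # avoids leaking the token through timing differences.
        if token is None or not hmac.compare_digest(token.encode(), self._token):
            raise PermissionError("authentication required")
        return self._handlers[endpoint]()


token = secrets.token_hex(16)
server = RequestServer(token)
print(server.handle_vulnerable("run_job", None))  # anyone gets in
print(server.handle("run_job", token))            # only the authorized caller
```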

FENRIR hunts patch bypasses

FENRIR does not just find bugs; it helps researchers understand vulnerability patterns. When NVIDIA released their patches, our team analyzed the remediations and identified potential weaknesses. FENRIR re-analyzed the updated codebase under researcher guidance.

In November 2025, we disclosed two additional vulnerabilities – patch bypasses for the original flaws: ZDI-25-1041 (CVE-2025-33183) bypassed the original “TorchSerializer” vulnerability, and ZDI-25-1044 (CVE-2025-33184) bypassed the “secure_server” patch. Again, we reported these bugs to NVIDIA through our normal process, and they were patched in November 2025.

An autonomous system might have found the initial bugs. However, understanding the patch, recognizing its limitations, and systematically identifying bypasses required human expertise to direct AI capabilities.
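The advisories do not detail why the first fixes fell short. This sketch is a generic, hypothetical illustration of how a patch bypass can arise in this vulnerability class. It models a denylist-style fix for pickle deserialization that blocks the exact callable used in the original proof of concept. As a result, a variant payload naming a different callable loads straight through. The `Call`, `DenylistUnpickler`, and `patched_load` names are illustrative, and none of this reflects NVIDIA's actual patches.

```python
import io
import pickle


class Call:
    """Builds a pickle that, when loaded, calls func(arg)."""

    def __init__(self, func, arg):
        self.func, self.arg = func, arg

    def __reduce__(self):
        return (self.func, (self.arg,))


class DenylistUnpickler(pickle.Unpickler):
    # Hypothetical incomplete fix: block only the global used by the
    # original proof of concept (here, builtins.exec).
    BLOCKED = {("builtins", "exec")}

    def find_class(self, module, name):
        if (module, name) in self.BLOCKED:
            raise pickle.UnpicklingError(f"blocked {module}.{name}")
        return super().find_class(module, name)


def patched_load(blob: bytes):
    return DenylistUnpickler(io.BytesIO(blob)).load()


original_poc = pickle.dumps(Call(exec, "x = 6 * 7"))
bypass_poc = pickle.dumps(Call(eval, "6 * 7"))

try:
    patched_load(original_poc)
except pickle.UnpicklingError as exc:
    print("original PoC:", exc)  # the patch stops the known payload

print("bypass PoC:", patched_load(bypass_poc))  # 42: a different callable slips through
```

Finding the variant here requires reasoning about what the patch actually constrains, not just re-running the original exploit. An allow-list that permits only the types a format genuinely needs avoids this particular failure mode.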

Our commitment: Responsible AI security research

TrendAI™ ZDI has spent over two decades building trust with the security community. We have processed many thousands of vulnerability reports. We have maintained relationships with vendors across every major technology sector.

ÆSIR extends this institutional knowledge into the AI age, with explicit commitments that differentiate our approach:

- Human empowerment. AI surfaces candidates; humans verify them. This creates a natural synergy in which humans and AI work together to secure the software we use and love.

- Professional vendor engagement. We follow coordinated disclosure practices that give vendors time to fix issues properly. We provide comprehensive technical documentation. We work with product security teams rather than against them.

- Focus on high-impact targets. We're not using AI to flood understaffed open-source projects with bug reports. We're targeting platforms where vulnerabilities have significant consequences, and most importantly, bugs that could impact our customers.

- Continuous improvement over one-time findings. As the Isaac GR00T case demonstrates, we do not just find bugs – we monitor remediations and identify when patches are incomplete.

Additional ÆSIR finds through FENRIR

Since mid-2025, FENRIR has discovered and disclosed the following vulnerabilities across the AI tech stack:

- CVE-2025-33184 - NVIDIA Isaac-GR00T secure_server Authentication Bypass Vulnerability

- CVE-2025-33183 - NVIDIA Isaac-GR00T TorchSerializer Deserialization of Untrusted Data Remote Code Execution Vulnerability

- CVE-2025-23298 - NVIDIA Merlin Transformers4Rec Deserialization of Untrusted Data Remote Code Execution Vulnerability

- CVE-2025-23296 - NVIDIA Isaac-GR00T Code Injection Vulnerability

- CVE-2025-23296 - NVIDIA Isaac-GR00T Authentication Bypass Vulnerability

- CVE-2025-33185 - NVIDIA AIStore AuthN users Missing Authentication for Critical Function Information Disclosure Vulnerability

- CVE-2025-33185 - NVIDIA AIStore AuthN Information Disclosure Vulnerability

- CVE-2025-23357 - NVIDIA Megatron LM Remote Code Execution Vulnerability

- CVE-2025-11202 - win-cli-mcp-server resolveCommandPath Command Injection Remote Code Execution Vulnerability

- CVE-2025-12489 - evernote-mcp-server openBrowser Command Injection Privilege Escalation Vulnerability

- CVE-2025-11200 - MLflow Weak Password Requirements Authentication Bypass Vulnerability

- CVE-2025-13709 - Tencent TFace restore_checkpoint Deserialization of Untrusted Data Remote Code Execution Vulnerability

- CVE-2025-13711 - Tencent TFace eval Deserialization of Untrusted Data Remote Code Execution Vulnerability

- CVE-2025-13706 - Tencent PatrickStar merge_checkpoint Deserialization of Untrusted Data Remote Code Execution Vulnerability

- CVE-2025-13708 - Tencent NeuralNLP-NeuralClassifier _load_checkpoint Deserialization of Untrusted Data Remote Code Execution Vulnerability

- CVE-2025-13716 - Tencent MimicMotion create_pipeline Deserialization of Untrusted Data Remote Code Execution Vulnerability

- CVE-2025-13714 - Tencent MedicalNet generate_model Deserialization of Untrusted Data Remote Code Execution Vulnerability

- CVE-2025-13710 - Tencent HunyuanVideo load_vae Deserialization of Untrusted Data Remote Code Execution Vulnerability

- CVE-2025-13707 - Tencent HunyuanDiT model_resume Deserialization of Untrusted Data Remote Code Execution Vulnerability

- CVE-2025-13712 - Tencent HunyuanDiT merge Deserialization of Untrusted Data Remote Code Execution Vulnerability

- CVE-2025-13713 - Tencent Hunyuan3D-1 load_pretrained Deserialization of Untrusted Data Remote Code Execution Vulnerability

These discoveries share common characteristics:

- High-value targets - Foundation libraries used across industries

- Critical severity - Including CVSS 9.8 vulnerabilities enabling remote code execution as root

- Incomplete patches - Initial remediations that, in some cases, required bypass fixes

- Physical-world implications - Systems designed to power robots working alongside humans

Closing the gap between AI development and security research

The Isaac GR00T vulnerabilities are just some of the many ÆSIR discoveries across AI infrastructure. They won't be the last.

As AI libraries become more prevalent and more powerful, the attack surface expands. As AI systems become more capable and more autonomous, the stakes increase. As AI factories become critical infrastructure, to use Huang's framing, they require security commensurate with that status.

The asymmetry between AI development speed and security research speed is the defining challenge of AI security. Not prompt injection. Not adversarial examples. The fundamental inability to analyze code faster than it ships.

Through ÆSIR, TrendAI™ closes that gap. Our platform delivers comprehensive capabilities across the vulnerability lifecycle, from MIMIR's n-day intelligence gathering and prioritization, through FENRIR's zero-day discovery, to complete disclosure workflow automation.

21 CVEs across NVIDIA's AI infrastructure, machine learning (ML) platforms, and emerging MCP tooling. Multiple disclosure cycles. Patch bypasses caught before exploitation. Critical infrastructure security meaningfully improved.

The libraries powering AI have become critical infrastructure. They're shipping at machine speed. Securing them requires matching that speed. ÆSIR delivers – and we're just getting started. We'll be disclosing more about the ÆSIR framework and its components over the coming months as more bugs are disclosed and remediated by vendors.