By Alfredo Oliveira and David Fiser

Key Takeaways:

- MCP allows AI applications to securely access external data sources, but improper secret management creates significant risks.

- Many of our observed MCP configurations store sensitive credentials in plaintext files such as .env or JSON, making them vulnerable to theft and misuse.

- Around 48% of the MCP servers we have reviewed recommend insecure storage methods, which threat actors can exploit to gain access to cloud resources and databases, or to inject malicious code.

- Trend Micro recommends implementing centralized security controls, continuous auditing, and adopting secure configuration defaults, as well as leveraging solutions like Vision One™ - Cyber Risk Exposure Management – Cloud Risk Management for comprehensive protection.

Model Context Protocol (MCP) is an open-source standard that enables AI applications to connect with external data sources and tools. It allows these applications to interact with systems like databases, APIs, and services using natural language. This makes it easier to construct complex workflows on top of LLMs in a more scalable way.

However, MCP-based systems also introduce risks. In a previous entry, we covered the authentication issue of network-exposed MCP servers. Now we focus on another issue — one that involves secret management for linked data sources. Our research uncovered that the MCP configuration files pose a considerable risk for organizations' private data.

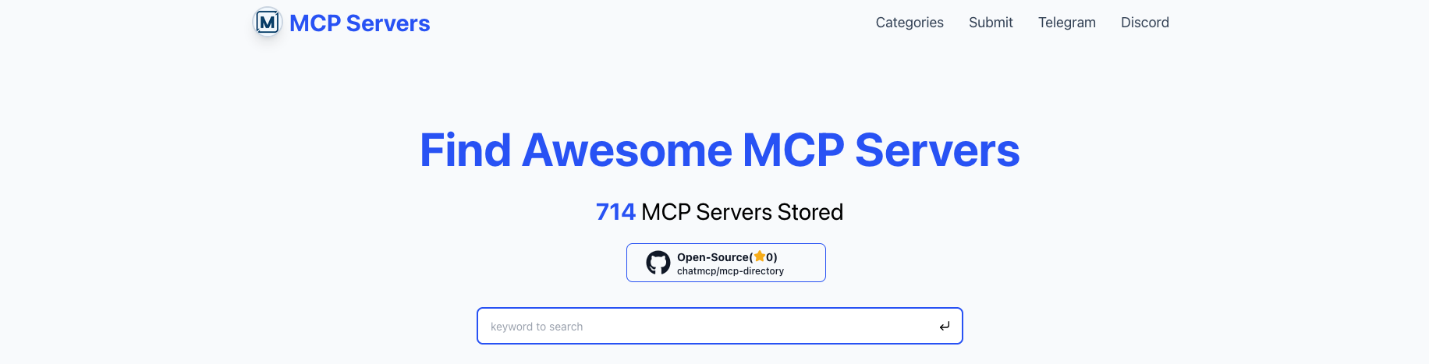

Figure 1. Increasing number of MCP servers from 714 in January of 2025 to 16,098 in July 2025

Source: MCP.so

The MCP server

An MCP server is a lightweight service that acts as an intermediary between AI applications and data sources. One of the core server functions is to handle authentication and authorization for underlying systems, providing resources (data) and tools (functions) to AI clients.

This often requires passing a secret — such as API keys or credentials — to the data source API.

As we have described in our earlier entry on MCP security, the credentials to the data source can be configured in various ways:

- Set on the server:

  - Hardcoded or injected at runtime

  - No Role-Based Access Control (RBAC) (presents potential overprivileged use cases)

- LLM client configuration:

  - OAuth token or a generic API key

Theoretically, passing credentials via the client is the safest method if implemented correctly. For instance, using a dynamically obtained OAuth token, securely stored in a vault and referenced by the client, reduces exposure and improves traceability.

Misconfiguration and exposed secrets

Our current research reveals that all the poor practices we identified in our earlier paper, “Unleashing Chaos: Real World Threats in the DevOps Minefield,” are also present within the MCP configuration files.

In this previous research, we uncovered the widespread misuse of environment variables for secret handling. Sensitive credentials were often:

- Stored in .env files

- Hardcoded into containers

- Exposed via logs, memory dumps, or image registries

These secrets were poorly protected and bundled together, making them prime targets for automated attacks and supply chain breaches.

We reference our findings from this research extensively throughout this article. To gain a deeper understanding of the active interest threat actors have in exposed configurations, we recommend revisiting the full report.

In practice, many MCP server configurations have become the new .env file, aggregating secrets across all data sources provided to the LLM.

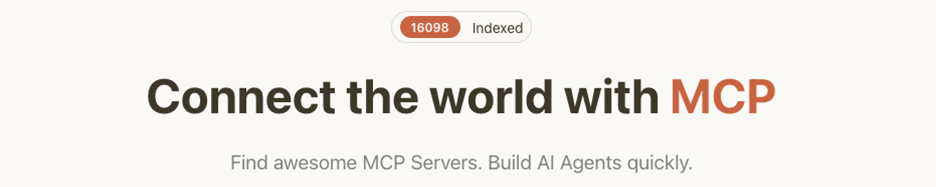

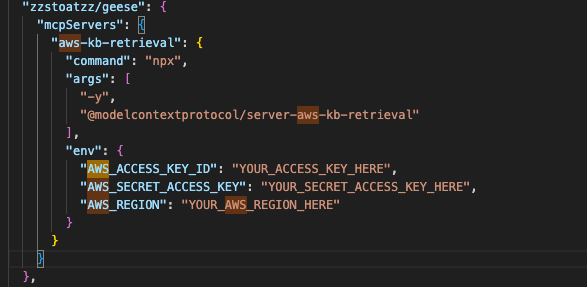

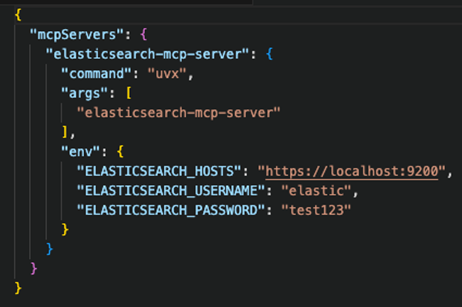

This is echoed by the following configuration examples:

Figure 2. Database MCP configuration

Figure 3. Cloud token configuration in MCP

Figure 4. MCP secrets configuration

Figure 5. MCP server and its API Key configuration

Figure 6. MCP configuration with loading .env file

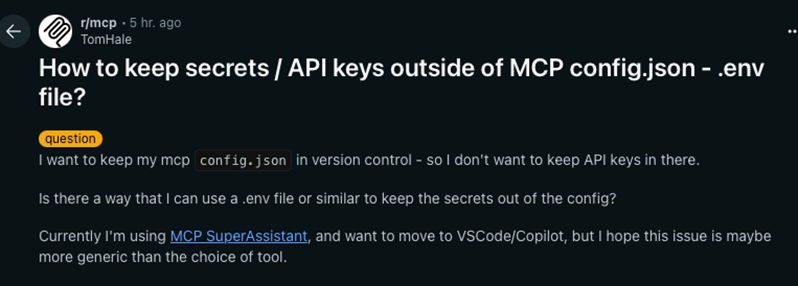

Figure 7. The problem of keeping credentials safe as discussed on Reddit

We collected the source code of 19,402 MCP servers. After analyzing the code, documentation, and usage patterns, we found that 9,294 (48%) of them recommend using a .env file to pass secrets to the MCP server and authorize at the data source. The alternative option is to hardcode the credentials inside a plaintext JSON configuration file. Neither of these approaches is secure, as both aggregate multiple secrets into a single file.

Threat actors are already hunting down publicly exposed .env files. It is only a matter of time before they shift their focus to the MCP deployment configurations and gain access to private data through natural language interfaces.
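As a rough illustration of how such configurations can be audited, the following sketch flags environment entries in an MCP client configuration that look like literal secrets. It assumes the common `mcpServers` layout with a per-server `env` map; the name heuristics, the `${keyring:...}` placeholder convention, and the function names are hypothetical and not taken from our research tooling.

```python
import json
import re

# Hypothetical heuristic: variable names that usually carry secrets.
SECRET_NAME = re.compile(
    r"(token|secret|password|passwd|api[_-]?key|credential)", re.IGNORECASE
)
# Assumed convention: values of this form are resolved from a vault at launch.
PLACEHOLDER = re.compile(r"^\$\{keyring:[^}]+\}$")


def find_plaintext_secrets(config):
    """Return (server, variable) pairs whose env value looks like a literal secret."""
    findings = []
    for server, spec in config.get("mcpServers", {}).items():
        for name, value in spec.get("env", {}).items():
            is_literal = isinstance(value, str) and value and not PLACEHOLDER.match(value)
            if SECRET_NAME.search(name) and is_literal:
                findings.append((server, name))
    return findings


def audit_file(path):
    """Load a JSON configuration file from disk and audit it."""
    with open(path, encoding="utf-8") as handle:
        return find_plaintext_secrets(json.load(handle))
```

Running `audit_file` against a client configuration file returns the entries that deserve a closer look. A name-based heuristic like this will miss secrets stored under unusual variable names, so it is a starting point rather than a complete scanner.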

What types of attacks can be expected?

Unsafe credential storage might not be seen as disastrous in the case of locally deployed data sources until a breach happens. However, the risk increases significantly in cloud production deployments. MCP configurations can grant access to critical resources such as cloud account management, databases, and more. With leaked access tokens, threat actors can inject malicious code into distributed software binaries, triggering a supply chain attack. We unpacked such scenarios in our previous research.

Every MCP user should be cautious. It is only a matter of time before threat actors adapt to the new situation and target the MCP server configuration to access sensitive corporate data. With the growing adoption of LLM and MCP, we can expect a new class of attacks.

The fundamental difference from the past is that threat actors no longer require extensive technical knowledge. A stolen MCP configuration and a natural language query, which does not need to sound malicious at all, are all they need.

Domain administrators should enforce rules for safe MCP usage and consider securing centralized MCP access over unmanaged individual configurations.

Although few MCP servers support safe secret loading, a simple wrapper script that retrieves the secrets from a vault will mitigate the risk. Users should be especially cautious and examine how secret credentials are stored within their MCP workflows. Our research has unveiled several insecure practices that we have already reported to the affected vendors through the Trend Zero Day Initiative™ (ZDI).

How to keep MCP secrets safe

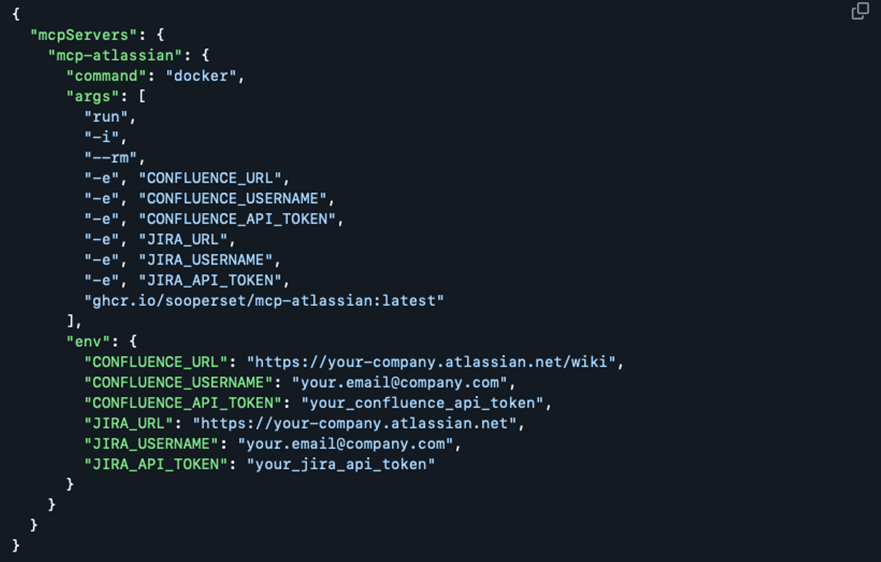

We reviewed a sample MCP server configuration for a JIRA server. As Figure 8 shows, sensitive information, including JIRA_API_TOKEN, CONFLUENCE_API_TOKEN, and the associated usernames, is hardcoded inside a plaintext JSON file.

Figure 8. Suggested MCP server settings

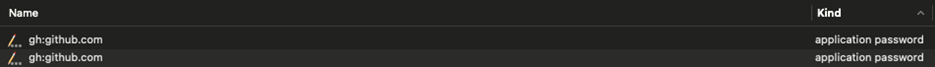

We continuously reiterate how poor DevOps practices, especially around secret management, create conditions for a ticking bomb of compounding issues and a major source of data breaches. Fortunately, not everyone is getting it wrong. A good example is the popular GitHub CLI tool, which stores the GitHub access token securely within the system vault.

Figure 9. GitHub CLI tool stores its secrets in the keychain by default!

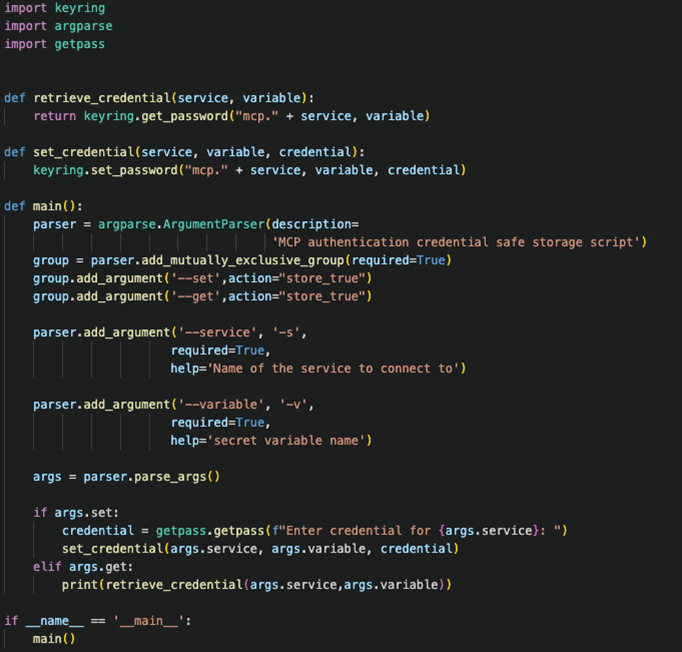

We apply the same in our wrapper script. To make it work, we must solve the following challenges:

1. Secure credential storage and retrieval.

2. Secrets expansion at the MCP server execution.

3. Configuration format replacement.

We have chosen Python as our scripting language and use the keyring library to access the system vault. The library supports all three major operating systems: Windows, Linux, and macOS. Our helper script enables secure retrieval and secret storage. For this example, we are running on macOS.

Figure 10. System keychain credential retriever/storage script
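A minimal sketch of such a helper, under stated assumptions, could look like the following. It relies on the third-party `keyring` package and its `set_password` and `get_password` calls; the function names, the argument order, and the command-line layout are hypothetical and may differ from the script in Figure 10.

```python
import argparse
import getpass


def store_secret(service, variable, backend, prompt=getpass.getpass):
    """Ask for the secret without echoing it, then save it in the vault."""
    secret = prompt(f"Secret for {service}/{variable}: ")
    backend.set_password(service, variable, secret)


def load_secret(service, variable, backend):
    """Fetch a stored secret; fail loudly if it is missing."""
    secret = backend.get_password(service, variable)
    if secret is None:
        raise KeyError(f"No secret stored for {service}/{variable}")
    return secret


def main(argv=None):
    # Imported lazily so the helpers above can be exercised with any backend.
    import keyring  # third-party package: pip install keyring

    parser = argparse.ArgumentParser(description="Store or retrieve a vault secret")
    parser.add_argument("action", choices=["set", "get"])
    parser.add_argument("service", help="identifier of the service, e.g. the MCP server name")
    parser.add_argument("variable", help="identifier of the secret, e.g. JIRA_API_TOKEN")
    args = parser.parse_args(argv)

    if args.action == "set":
        store_secret(args.service, args.variable, keyring)
    else:
        print(load_secret(args.service, args.variable, keyring))
    return 0
```

To use the sketch from a terminal, call `main()` under the usual `__main__` guard. Because the secret is read with `getpass`, it is not echoed to the terminal and does not end up in the shell history.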

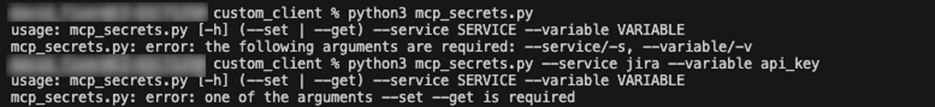

Once we have our script, we save our JIRA API key using the set argument. The service and variable parameters act as identifiers for our stored secret. Note that the secret is not exposed even during input. If needed, we can retrieve it later using the get parameter.

Figure 11. Credential helper script in action

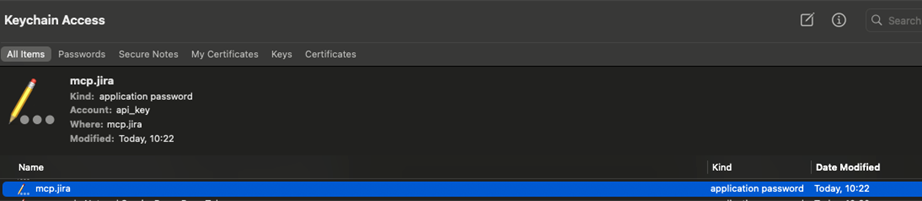

Compared to the widely suggested configuration, we stored the API key securely in the system vault. The next step is to determine how to pass it to the MCP server.

Figure 12. API key for the MCP server securely stored in the system vault

The plan is to connect the MCP server to Anthropic’s Claude desktop application. The obvious target for this integration is the MCP server’s configuration file, claude_desktop_config.json.

Our goal is to implement a configuration that references a secret securely. Unfortunately, we did not find any functionality in Claude Desktop that supports retrieving secrets from a vault or referencing them safely.

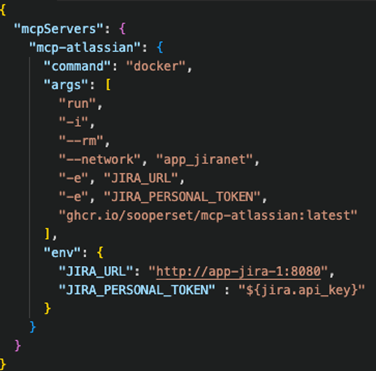

Figure 13. MCP server sensitive information reference

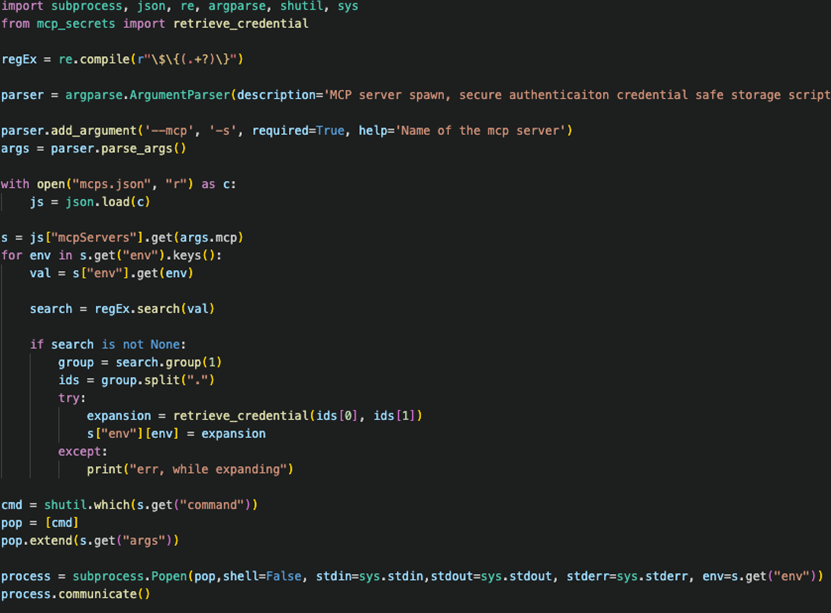

For this reason, we created another Python script that safely spawns the MCP server inside a container. Secrets are retrieved from a vault during the execution and passed to the MCP as environment variables. That also requires a copy of the original MCP server’s configuration file with secrets as placeholders. Our script will parse the file, expand referenced secrets, and pass them to the MCP server.

Figure 14. Spawn replacement script
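The core of such a wrapper can be sketched as follows. This is an illustration under assumptions rather than the script in Figure 14: the `${keyring:SERVICE:VARIABLE}` placeholder syntax, the function names, and the `get_secret` callback (for example `keyring.get_password`) are hypothetical.

```python
import json
import os
import re
import subprocess

# Assumed placeholder syntax: ${keyring:SERVICE:VARIABLE}
PLACEHOLDER = re.compile(r"\$\{keyring:([^:}]+):([^}]+)\}")


def expand_secrets(env, get_secret):
    """Replace every placeholder in an env mapping with the value from the vault."""

    def resolve(match):
        service, variable = match.group(1), match.group(2)
        secret = get_secret(service, variable)
        if secret is None:
            raise KeyError(f"No secret stored for {service}/{variable}")
        return secret

    return {name: PLACEHOLDER.sub(resolve, value) for name, value in env.items()}


def spawn_server(config_path, server_name, get_secret):
    """Run the original MCP server command with secrets injected as env variables."""
    with open(config_path, encoding="utf-8") as handle:
        spec = json.load(handle)["mcpServers"][server_name]

    # Secrets exist only in the child's environment, never on disk.
    env = {**os.environ, **expand_secrets(spec.get("env", {}), get_secret)}

    # stdin/stdout are inherited, so the MCP client talks to the server directly.
    return subprocess.call([spec["command"], *spec.get("args", [])], env=env)
```

Passing `get_secret` as a parameter keeps the expansion logic independent of a particular vault, so the same wrapper could be pointed at a system keychain or a remote secret manager.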

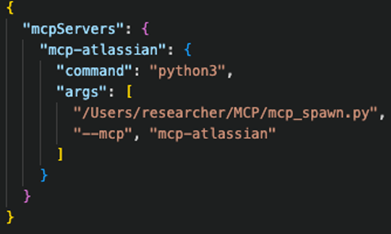

In the final step, we replace the original claude_desktop_config.json file, substituting the original command with our wrapper script and specifying that we want to execute the mcp-atlassian server. The wrapper script parses a copy of the modified MCP server configuration file and retrieves referenced secrets from the system vault. It then executes the original command and passes the secrets as environment variables to the MCP server.

It is important to note that we used the same configuration format. The only difference is that we implemented an additional security feature.

Figure 15. Replaced claude_desktop_config.json, spawning our wrapper script, and safely expanding secrets from the system vault

This functionality could have been implemented within Claude Desktop and similar products themselves. However, the rapid pace of innovation combined with flawed DevOps practices continues to undermine secure deployments. Staying safe requires taking that extra step away from defaults and suggested configurations.
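The replacement step can also be automated. The sketch below derives both files from an original configuration: a template copy whose secret values become placeholders, and a client configuration whose command points at a wrapper. The `mcpServers` layout is the one used by Claude Desktop; the wrapper path, the template path, the name heuristic, and the placeholder syntax are assumptions made for illustration.

```python
import copy
import re

# Hypothetical heuristic for deciding which env entries are secrets.
SECRET_NAME = re.compile(r"(token|secret|password|api[_-]?key)", re.IGNORECASE)


def split_config(original, wrapper_cmd, template_path):
    """Return (client_config, template) derived from an original MCP configuration."""
    template = copy.deepcopy(original)  # leave the caller's data untouched
    client = {"mcpServers": {}}

    for name, spec in template.get("mcpServers", {}).items():
        env = spec.get("env", {})
        for variable in env:
            if SECRET_NAME.search(variable):
                # Assumed placeholder syntax, resolved by the wrapper at launch.
                env[variable] = f"${{keyring:{name}:{variable}}}"

        # The client now starts the wrapper, which reads the template
        # and executes the original command for the named server.
        client["mcpServers"][name] = {
            "command": wrapper_cmd,
            "args": [template_path, name],
        }

    return client, template
```

The plaintext values still have to be moved into the vault separately before the original file is deleted; this sketch only rewrites the configuration structure.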

Conclusion and recommendations

Regardless of how you run your MCP server, it is important to adopt secure secret management and design workflows that keep secrets out of the environment whenever possible. Transforming secure practices from recommendations to the norm reduces the risk of accidental leaks, misconfiguration, and exposure. Plaintext and hardcoded credentials, no matter the filename, are never a secure option!

Trend Micro and its Vision One™ platform offer the tools and guidance to help prevent these risks from turning into full-scale data breaches.

Additionally, Vision One™ Cyber Risk Exposure Management and Cloud Risk Management provides continuous security, compliance, and governance by identifying unwanted exposures and insecure cloud policies. It supports teams in managing and resolving cloud resource misconfigurations — a critical capability when dealing with MCP deployments in cloud environments.

Figure 16. Trend Micro’s Vision One platform’s tools to help prevent data breaches.

Trend Micro’s Artifact Scanner helps detect and block exposed secrets like API keys, passwords, and tokens in MCP server repositories before they reach production, reducing the risk of data breaches.

Trend Vision One™ – Container Security detects exposed secrets within containers running in Kubernetes-based microservices, helping reduce the risk of data leaks and unauthorized access.
