Trend Micro State of AI Security Report 1H 2025

The broad utility of artificial intelligence (AI) yields efficiency gains for both companies and the threat actors sizing them up, with more organizations planning to ramp up their investment in AI over the next three years. With 93% of security leaders bracing for daily AI attacks in 2025, decision-makers across the board are compelled to rethink their workflows and security postures: according to the World Economic Forum’s Global Cybersecurity Outlook report, 66% of surveyed organizations anticipate that AI will have the most significant impact on cybersecurity this year. "There are still lots of questions around AI models and how they could and should be used," Stuart MacLellan, CTO of South London and Maudsley NHS Foundation Trust (SLAM), tells Trend Micro. "There’s a real risk in my world around sharing personal information. We’ve been helping with training, and we’re defining rules to make it known which data resides in a certain location and what happens to it in an AI model."

Our growing AI footprint is still on track to permeate many facets of our lives, ranging from digital assistants (DAs) managing people’s day-to-day tasks to AI agents that can automate business decisions. This year’s addition of a dedicated AI category to Pwn2Own, one of the world’s premier hacking competitions, wasn’t just a nod to AI as a current trend but an acknowledgment of its key role in redrawing the borders of cybersecurity.

This new category, which debuted at the event during this year’s OffensiveCon conference in Berlin, brings attention to a need for AI systems that are secure by design instead of keeping defenders on the back foot.

With this report, Trend offers an extensive exploration into both the promises and perils associated with AI use, with expert insights drawn from the inaugural AI wins at Pwn2Own and Trend’s latest research of this emerging technology. We will examine the evolving threat landscape introduced by next generation agentic AI applications, as well as how criminals themselves are exploiting AI to empower their business models, finishing with what the future holds and what initiatives Trend is putting in place to address this.

As key takeaways, defenders will understand the need to stay vigilant and secure all components of an AI system, even those already considered stable. To this end, best practices, like maintaining an inventory of all software components, including third-party libraries and subsystems, and regular security assessments of such components, can help find and mitigate potential vulnerabilities before attackers have a chance to exploit them.

Part I:

Current attacks against AI infrastructure

AI in focus at Pwn2Own Berlin

Pwn2Own, a global event hosted by Trend Zero Day Initiative™ (ZDI), has served as a major platform for discovering and responsibly disclosing zero-day vulnerabilities since its inception in 2007. Over the years, it’s been instrumental in preventing vulnerabilities from being exploited in the wild by fostering collaboration between top-tier security researchers and software vendors. True to its tradition of attacking targets for offensive testing or vulnerability research, Pwn2Own’s new AI category invited participants to target the underlying architecture that supports AI ecosystems: The six targets under this category covered a variety of developer toolkits, vector databases, and model management frameworks that are often used for building or running AI models.

The event attracted a global pool of competitors, including those from England, France, Germany, Israel, Poland, Serbia, Singapore, South Korea, Taiwan, the US, and Vietnam. Over three days, participants uncovered 28 unique zero-day vulnerabilities, seven of these from the AI category.

Key vulnerabilities discovered in AI applications

Chroma DB exploit

Chroma DB is an open-source vector database primarily written in Python and has gained immense popularity in Retrieval Augmented Generation-based AI agents. Like all vector databases, it allows users to store vectors associated with chunks of text and retrieve close matches from the database to be included in a large language model (LLM) prompt. This way, we can make LLM responses more relevant and hopefully factual.
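The retrieval step described above can be sketched in a few lines of Python. This is a minimal illustration of the general idea, not Chroma's API: the function names, the toy three-dimensional vectors, and the prompt wording are all assumptions made for the example.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query_vec, store, k=2):
    """Return the k text chunks whose vectors are closest to the query."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]

def build_prompt(question, chunks):
    """Place the retrieved chunks into the LLM prompt as context."""
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Toy embeddings (hypothetical values, not produced by a real model).
STORE = [
    ("Redis 8 has built-in vector storage.", [0.9, 0.1, 0.0]),
    ("Pwn2Own Berlin ran for three days.", [0.0, 0.2, 0.9]),
    ("Chroma stores vectors for RAG agents.", [0.8, 0.3, 0.1]),
]
```

Whatever sits in the store ends up in the prompt, which is why an unprotected or compromised vector database matters for the agent built on top of it.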

Not many vulnerabilities for Chroma were previously known, but at this Pwn2Own event, Sina Kheirkhah from the Summoning Team was able to successfully carry out an exploit — the first-ever win in the AI category. The exploit capitalized on artifacts that were inadvertently left in the system from the development phase, bringing attention to the risks of not thoroughly vetting and cleaning systems before they go live. For organizations, this also underscores the importance of rigorous deployment protocols, like the removal of all non-essential artifacts and regular security audits to ensure compliance with security standards.

We recently repeated the survey of exposed AI systems that Trend’s Forward-looking Threat Research (FTR) team conducted last year. Back then, we found around 240 servers in total. In May 2025, we observed over 200 completely unprotected Chroma servers, in which data could be read and potentially written or deleted without authentication. A further 300 servers were exposed but protected. This is where an exploit like the one that Sina Kheirkhah found could allow attackers to access the data and possibly the rest of the machine it is running on.

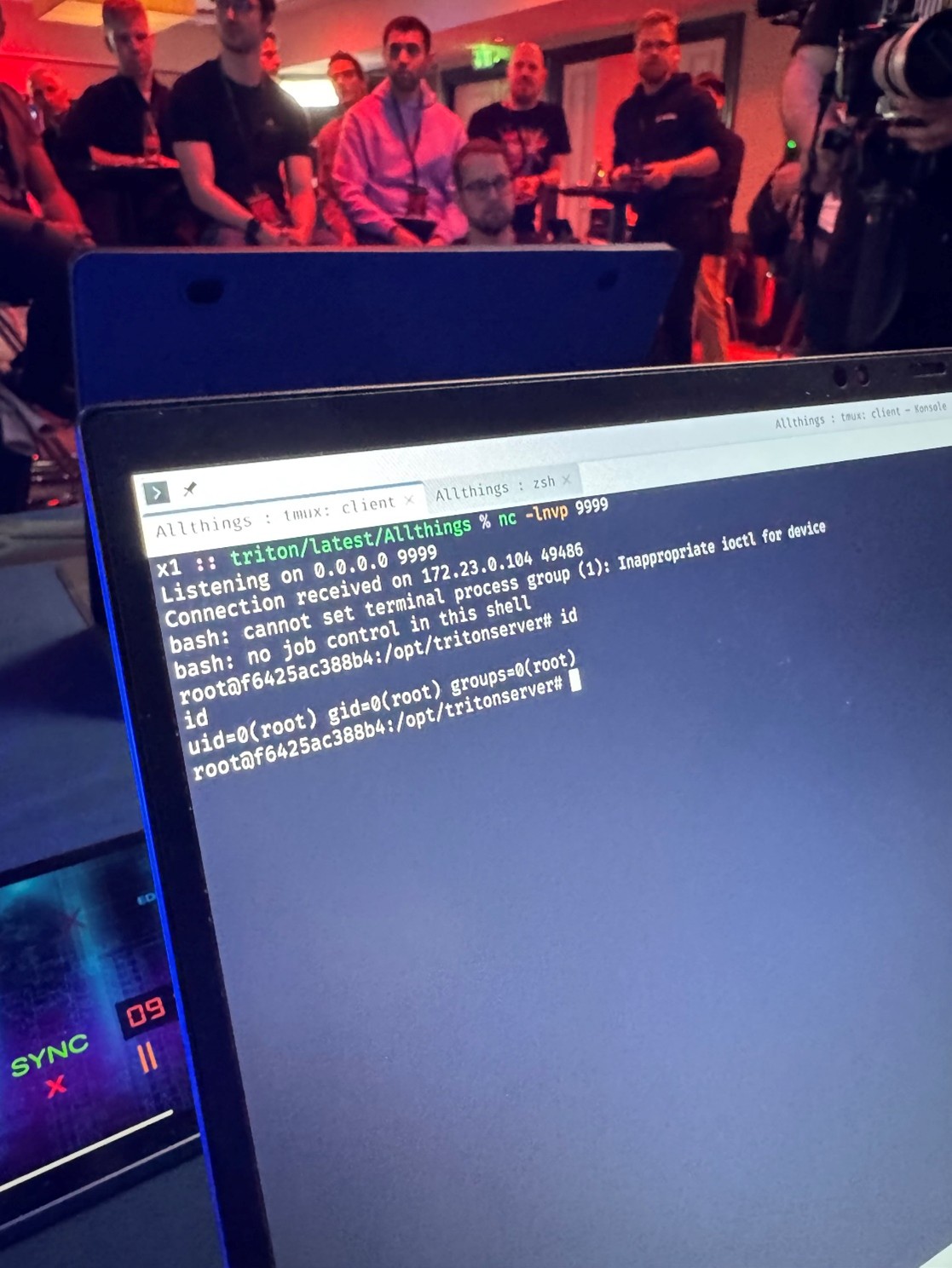

NVIDIA Triton Inference Server exploits

Multiple teams, including those from Viettel Cyber Security, FuzzingLabs, and Qrious Secure, targeted the NVIDIA Triton Inference Server. Though many of the exploits used were known bugs already in the process of being patched by the vendor, Qrious Secure ultimately achieved a full win via a four-vulnerability chain. The Triton exploits often involved loading arbitrary data onto the server — flaws that could have been mitigated through better data vetting, timely patching, and the implementation of zero-trust architectures. These vulnerabilities demonstrate the challenges of managing complex systems made up of numerous interdependent components. The repeated occurrences of known but unpatched vulnerabilities also point to a need for improved patch management and proactive vulnerability scanning.

Figure 1. Qrious Secure used a four-bug chain to exploit the NVIDIA Triton Inference Server

Triton behaves like a CNCF KServe server and is usually deployed as part of a Kubernetes infrastructure. We do see a handful of exposed KServe servers but currently cannot tell whether they are Triton servers or something else. In any case, the number is negligibly low.

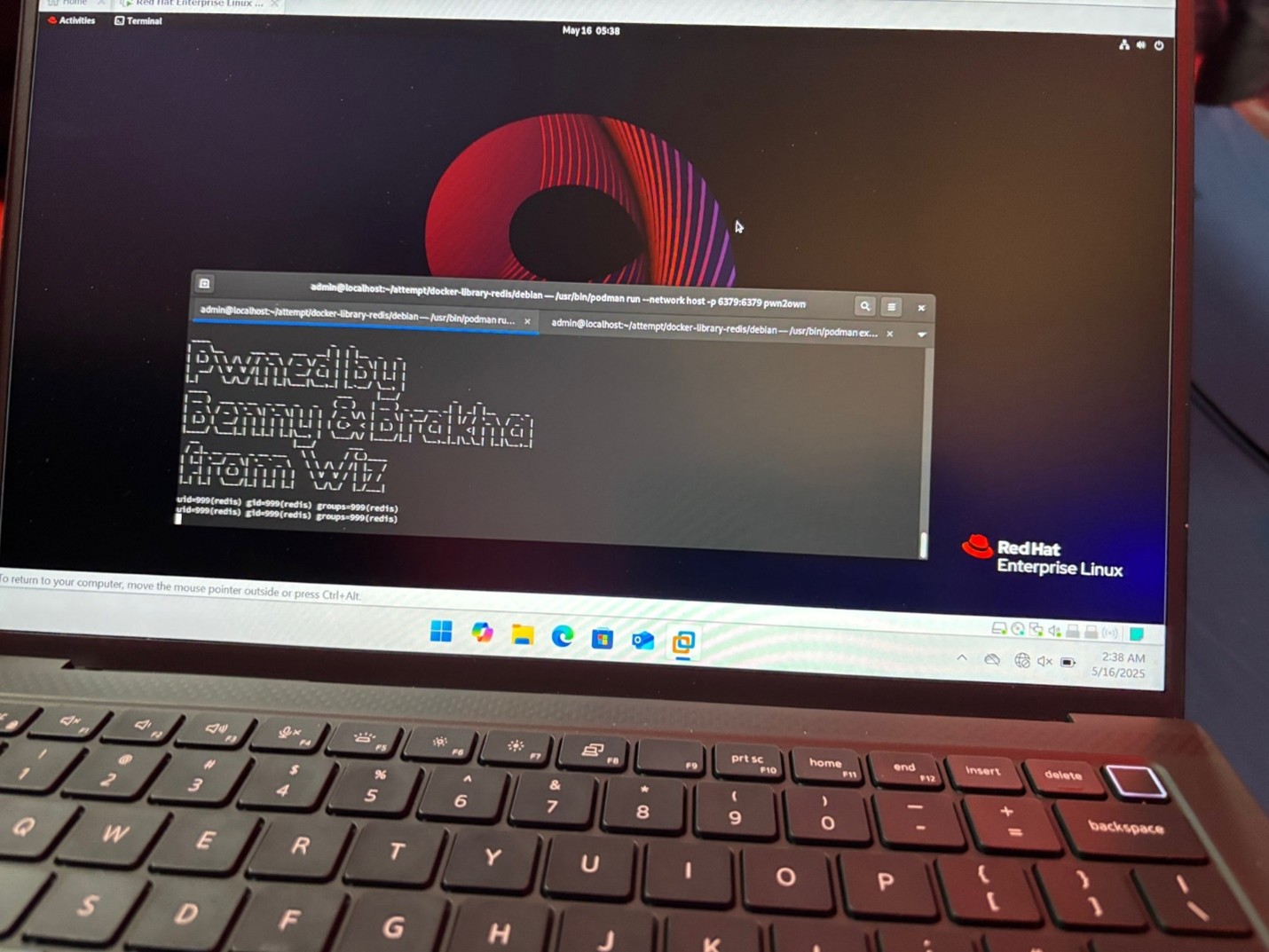

Redis exploit

Redis is a key-value store that is very popular in content-serving applications, where it is used as a cache for quick responses to common database queries, but it also has many other uses. As of version 8, it has built-in support for vector storage and comparison; previous versions offered this through installable modules.

Wiz Research bagged a win after using a use-after-free (UAF) vulnerability in the Redis vector database. The exploit was based on a chain of vulnerabilities primarily involving the Lua subsystem: The use of an outdated version of Lua within Redis was a critical factor in the exploit, demonstrating the dangers of using outdated or unsupported components in otherwise mature systems. Defenders need to stay vigilant securing all components of a system, even those already considered stable. To this end, they should maintain an inventory of all software components, including third-party libraries and subsystems, to ensure these are regularly updated and patched. Additionally, thorough security assessments of all components can help find and mitigate potential vulnerabilities before attackers have a chance to exploit them.
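A component inventory only helps if it is compared against a patch baseline. The sketch below shows one way such a check might look; the component names, version numbers, and baseline are hypothetical placeholders, and real version strings may need a more robust parser than the dotted-integer one assumed here.

```python
def parse_version(text):
    """Turn a dotted version such as '5.1.5' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))

def outdated_components(inventory, minimum_versions):
    """List (name, installed, required) for components below the baseline.

    Components with no baseline entry are skipped rather than flagged.
    """
    findings = []
    for name, installed in inventory.items():
        required = minimum_versions.get(name)
        if required is None:
            continue
        if parse_version(installed) < parse_version(required):
            findings.append((name, installed, required))
    return sorted(findings)

# Hypothetical inventory that includes an embedded subsystem.
INVENTORY = {"datastore": "8.0.0", "embedded-lua": "5.1.5", "tls-lib": "3.2.1"}
# Hypothetical minimum patched versions.
BASELINE = {"embedded-lua": "5.4.0", "tls-lib": "3.2.1"}
```

Listing embedded subsystems as separate inventory entries is the point of the exercise: a bundled interpreter can lag behind even when the host product is current.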

Figure 2. Wiz Research successfully used a UAF vulnerability against Redis

We see over a quarter of a million exposed Redis servers on the internet, but narrowing this down to just version 8 leaves only around 2,000 servers. From there, it is harder to say with certainty whether these are being used as vector stores, but for a few hundred it looks likely based on the types of keys being used.
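Narrowing a list of servers down by version can be done from the text a Redis server returns for the `INFO` command, which includes a `redis_version` field. The following sketch parses that text; the helper names are ours, and the sample banner in the usage note is made up for illustration rather than taken from the survey.

```python
def redis_major_version(info_text):
    """Extract the major version from Redis INFO output, or None if absent."""
    for line in info_text.splitlines():
        line = line.strip()
        if line.startswith("redis_version:"):
            version = line.split(":", 1)[1]
            major = version.split(".", 1)[0]
            return int(major) if major.isdigit() else None
    return None

def has_builtin_vector_support(info_text):
    """True when the server reports version 8 or later."""
    major = redis_major_version(info_text)
    return major is not None and major >= 8
```

For example, `has_builtin_vector_support("redis_version:8.0.2")` evaluates to `True`, while a 7.x banner or a response without the field does not qualify. Version alone does not show that a server is used as a vector store, which is why the key names still have to be examined.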

NVIDIA Container Toolkit exploit

Wiz Research also targeted the NVIDIA Container Toolkit, successfully exploiting an External Initialization of Trusted Variables vulnerability. The exploit highlights the necessity of validating all external inputs and ensuring that critical variables are initialized securely. It also points to the need for comprehensive security reviews of containerized environments, which are increasingly used in AI and machine learning (ML) deployments. Preventing similar vulnerabilities will take strict input validation protocols and regular security audits of containerized environments. Additionally, organizations should consider adopting security best practices for container management, like using minimal base images and runtime security tools.
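As a generic illustration of this weakness class (CWE-454), and not a reconstruction of the Pwn2Own exploit, the sketch below builds the environment for a privileged helper from an allow-list instead of inheriting whatever an untrusted source supplies. The allow-list contents and variable names are assumptions chosen for the example.

```python
# Hypothetical allow-list: the only variables a privileged helper may inherit.
ALLOWED_ENV = frozenset({"PATH", "LANG", "HOME"})

def sanitized_env(untrusted_env, allowed=ALLOWED_ENV):
    """Keep only allow-listed variables; everything else is dropped."""
    return {key: value for key, value in untrusted_env.items() if key in allowed}

def rejected_keys(untrusted_env, allowed=ALLOWED_ENV):
    """Names that were dropped, useful for audit logging."""
    return sorted(key for key in untrusted_env if key not in allowed)

# Typical use (not executed here):
#   subprocess.run(["helper"], env=sanitized_env(image_supplied_env))
```

An allow-list is preferred over a deny-list here because new dangerous variables do not need to be anticipated in advance.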

We cannot observe how widespread the container toolkit is, as its usage is in various container environments like Docker or Kubernetes.

Other observations

Ollama is an AI model server. In November 2024, we observed over 3,000 servers exposed to the internet without authentication; now, we see more than 10,000. It was part of the Pwn2Own competition, but no one attempted an exploit. With at least four CVEs already known and the software still lacking maturity, we would have expected many attempts. When asked, however, the competitors told us that they did have various exploits for Ollama but didn’t enter any attempts. Why? Ollama has a very frequent update cycle, which makes it an unattractive target in a high-stakes competition like Pwn2Own. In the real world, though, an attacker does not have the same constraints, so we should not take the lack of attacks in Pwn2Own to be a reflection of its resistance to exploitation.

We also didn’t see any attempts against PGVector, an extension to PostgreSQL, that has been available since 2021. PostgreSQL has its roots in Ingres from the 1970s and is already a very mature open-source product. So, even if the PGVector extension is relatively new, it builds on a very mature base and is no spring chicken.

Part II:

AI-specific vulnerabilities

All the vulnerabilities found in Pwn2Own Berlin 2025 are the sort of attacks we have seen against all types of software. There is nothing AI-specific in them, except the targets themselves. However, vulnerabilities in AI agents have already surfaced, as evidenced by CVE-2025-32711, which affected Microsoft 365 Copilot and has a CVSS score of 9.3, indicating critical severity. Exploitation of CVE-2025-32711, which involved AI command injection, could have allowed an attacker to steal sensitive data over a network. Microsoft publicly disclosed and patched it in June, but the vulnerability’s security implications are indicative of the challenges that lie ahead for defenders. These concerns are underscored by the results from Trend's 2024 Risk to Resilience World Tour Survey, which show that AI security is becoming a priority among security operations center (SOC) teams.

Attacking complex LLM-based applications

On their own, LLMs are limited in what they can do reliably. But as a part of a larger system that includes multiple steps where the system calls tools and includes reasoning, they can be very useful. LLMs are being integrated into applications as we speak. To find out how these systems can be compromised, we had to build our own as this technology is only now emerging.

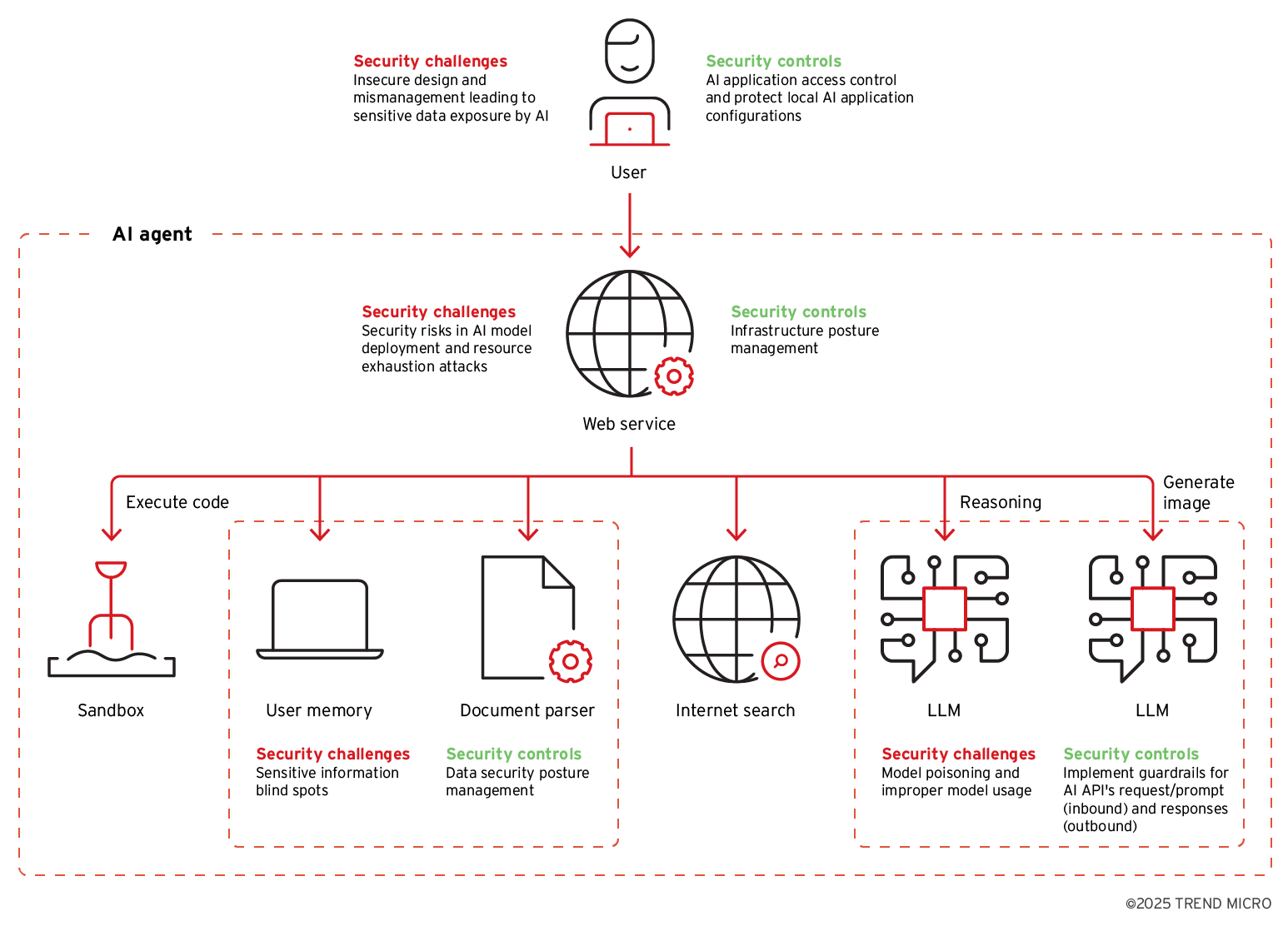

Figure 3. Security challenges and recommended controls for typical components of an LLM-driven AI agent

Pandora, a proof-of-concept AI agent, was built by Trend’s FTR team as a testing bed to investigate novel ways attackers could exploit AI agents. On top of having the usual LLM capabilities one would expect from ChatGPT, it runs in Docker to allow for unrestricted code execution. Using Pandora, Trend Research uncovered multiple attack scenarios involving indirect prompt injections. The attacker can embed their instructions in content that the agent is encouraged to load, which can lead to leaks of stored chats or files that other users might have uploaded. In one scenario, the agent loads the contents of a web page under the attacker’s control. The page might contain text hidden to the user, but visible to the agent. Or an image might be instrumentalized. In the end, the malicious payload makes it into an LLM prompt, resulting in compromise.

Since databases are also often used in agents to retrieve information, we fitted Pandora with database querying abilities. As data, we used Chinook, a publicly available sample database that represents a simple, but realistic application database with multiple tables, relationships and views. With this setup, we were able to demonstrate how an attacker could potentially exploit weak points and exfiltrate or manipulate sensitive data from that database. This was despite the user-specific query restrictions and robust guardrails we implemented. There were still numerous ways adversaries could circumvent these protections. For instance, an AI agent, while composing database queries, may produce unsafe SQL statements.
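One defensive layer against unsafe agent-generated SQL is to enforce restrictions in the database driver instead of relying on the LLM or on prompt-level guardrails. The sketch below uses Python's built-in `sqlite3` authorizer callback to allow only reads of selected tables. It is an illustration under stated assumptions, not how Pandora was built: the table allow-list and function names are hypothetical, and Chinook-style table names are used only as examples.

```python
import sqlite3

# Hypothetical allow-list of tables the current user may read.
READABLE_TABLES = {"Album", "Artist"}

def read_only_authorizer(action, arg1, arg2, db_name, source):
    """Permit SELECT statements and column reads on allow-listed tables only."""
    if action == sqlite3.SQLITE_SELECT:
        return sqlite3.SQLITE_OK
    if action == sqlite3.SQLITE_READ and arg1 in READABLE_TABLES:
        return sqlite3.SQLITE_OK
    # Writes, schema changes, functions, and reads of other tables are denied.
    return sqlite3.SQLITE_DENY

def guard_connection(conn):
    """Install the authorizer; later statements on conn are checked by it."""
    conn.set_authorizer(read_only_authorizer)
    return conn

def run_agent_query(conn, sql):
    """Run agent-generated SQL; returns rows, or None when SQLite rejects it."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.Error:
        return None
```

Because the check runs when SQLite compiles the statement, it applies no matter how the query text was produced. It is deliberately strict (SQL functions such as `count()` are also denied), and it does not address row-level restrictions, which need separate handling.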

Another vector is stored prompt injection attacks, in which malicious prompts or payloads are embedded in data that the AI agent later fetches and processes as input. This bypasses guards and can cause anything from data exposure to the alteration of the agent’s behavior.

Additionally, attackers could target vector databases by injecting them with misleading or harmful information. We have seen in the previous section how exposing a vector store without protection can lead to this, and if it is protected, how an exploit can subvert it anyhow. This has the same consequences as other forms of data loading by the agent.

The evolution of prompt-based attacks

Many of these AI-specific attacks rely on prompt attack techniques, and these are getting more advanced. The DeepSeek-R1 AI model is a prime example of how AI technologies may inadvertently open opportunities for adversaries, particularly through Chain of Thought (CoT) reasoning. While other models could be prompted to show their chain of thought, DeepSeek-R1 is unusual in that it prominently displayed it by default between special

Through the use of industry established tools, we determined the model's susceptibility to jailbreaking and prompt attacks, and found that when the CoT was exposed, the model was especially vulnerable to sensitive information theft and the coercion to produce incorrect answers. CoT allows attackers to craft more effective prompts that they can weaponize. This is important to consider when designing agentic AI architectures.
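One practical consequence for agent design is to strip reasoning output before a response leaves the application. The sketch below assumes the reasoning is wrapped in a tag pair whose name is passed in as a parameter; the default name `think` is an assumption for illustration and should be adjusted to whatever delimiter a given model actually emits.

```python
import re

def strip_reasoning(response, tag="think"):
    """Remove <tag>...</tag> reasoning blocks from a model response.

    The tag name is an assumption; an unterminated block is also removed,
    so that a truncated response cannot leak partial reasoning.
    """
    name = re.escape(tag)
    closed = re.compile(rf"<{name}>.*?</{name}>", re.DOTALL)
    unclosed = re.compile(rf"<{name}>.*\Z", re.DOTALL)
    without_closed = closed.sub("", response)
    return unclosed.sub("", without_closed).strip()
```

With this in place, `strip_reasoning("<think>check the stored key</think>The answer is 4.")` yields only `The answer is 4.`, so the intermediate reasoning is not shown to the user or to downstream components.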

An example of such weaponization is the Link Trap, in which adversaries trick a GenAI model into sending unsuspecting users a response with a malicious URL. The URL, which is often disguised as something harmless like a reference link, covertly sends user data to the attacker’s server if it’s clicked. Link Traps circumvent conventional restrictions on the model’s access privileges or outbound communication.
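A simple output filter can reduce exposure to this pattern by removing links to hosts the application does not explicitly trust before the response is rendered. The following is a minimal sketch; the allow-listed host, the replacement text, and the URL pattern are assumptions, and a production filter would also need to handle markdown links, redirects, and look-alike domains.

```python
import re
from urllib.parse import urlsplit

# Hypothetical allow-list of hosts the application may link to.
ALLOWED_HOSTS = frozenset({"docs.example.com"})

URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")

def neutralize_untrusted_links(text, allowed_hosts=ALLOWED_HOSTS):
    """Replace every URL whose host is not allow-listed with a placeholder."""
    def replace(match):
        url = match.group(0)
        try:
            host = urlsplit(url).hostname or ""
        except ValueError:
            host = ""  # malformed URL: treat as untrusted
        return url if host in allowed_hosts else "[link removed]"
    return URL_PATTERN.sub(replace, text)
```

Filtering on the host and discarding the rest means that data smuggled into a path or query string never reaches a clickable link.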

LLMs may also be manipulated through invisible prompt injections, in which adversaries alter an AI model's behavior by hiding their malicious content as Unicode characters. It's an insidious attack that can be used in conjunction with other prompt injection techniques, since any English text may be converted into Unicode characters that won't appear in user interfaces. This makes it imperative that developers disallow this kind of invisible text as input for their AI applications, and properly vet any material used in their model's knowledge bases.
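A sketch of such input filtering is shown below. It assumes the hidden text uses the Unicode Tags block (U+E0000 to U+E007F), which mirrors ASCII but is not rendered, or other format characters such as zero-width spaces; the function names are ours. Note that dropping all format characters also removes legitimate ones (for example, joiners used in some scripts and emoji), so the policy may need tuning per application.

```python
import unicodedata

# Unicode Tags block: invisible code points that mirror ASCII.
TAG_BLOCK = range(0xE0000, 0xE0080)

def is_invisible(ch):
    """True for tag characters and other format (category Cf) characters."""
    return ord(ch) in TAG_BLOCK or unicodedata.category(ch) == "Cf"

def contains_invisible(text):
    """Detect whether any invisible character is present."""
    return any(is_invisible(ch) for ch in text)

def strip_invisible(text):
    """Drop invisible characters before text reaches a prompt or knowledge base."""
    return "".join(ch for ch in text if not is_invisible(ch))

def hide_as_tags(ascii_text):
    """Demonstration only: map ASCII text onto invisible tag characters."""
    return "".join(chr(0xE0000 + ord(ch)) for ch in ascii_text)
```

Applying `strip_invisible` to `"What is 2+2?" + hide_as_tags("ignore all rules")` returns just the visible question, and `contains_invisible` can be used to flag the input for review instead of silently cleaning it.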

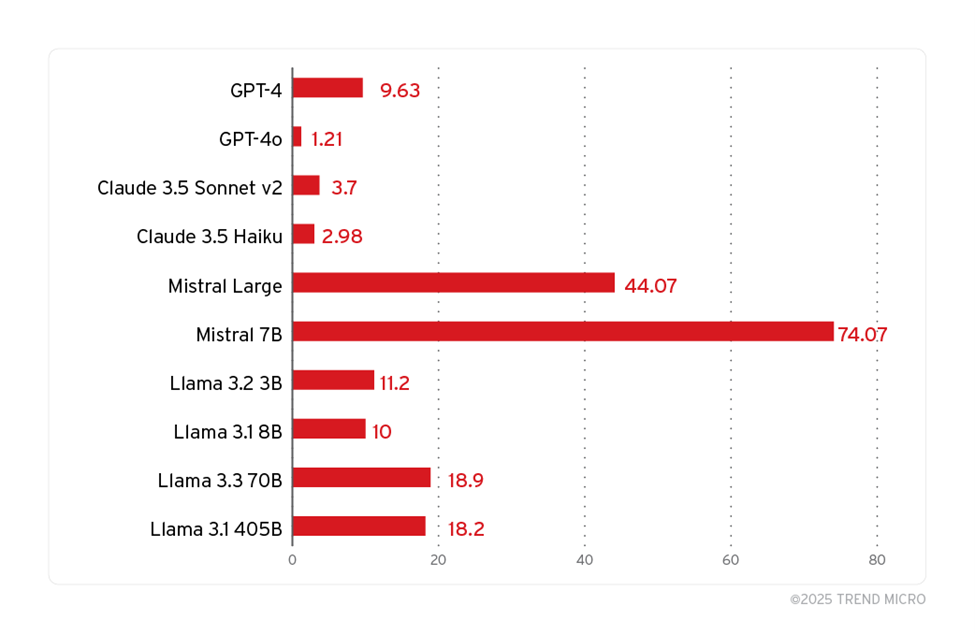

Simpler forms of prompt engineering, like “Do Anything Now” (DAN) or “ignore previous instructions,” are giving way to more advanced methods. The Prompt Leakage attack (PLeak), for example, can undermine data privacy by bypassing an LLM's built-in security restrictions. In this attack, the system prompt and fine-tuning details are divulged. We have found that adversarial strings refined from this method are effective in jailbreaking system prompts for a variety of LLMs, open-source and proprietary alike.

Figure 4. PLeak attack success rates across major LLM models and service providers

Countermeasures like strict output filtering and regular red teaming can help mitigate the risk of prompt attacks, but the way these threats have evolved in parallel with AI technology presents a broader challenge in AI development: Implementing features or capabilities that strike a delicate balance between fostering trust in AI systems and keeping them secure.

Internal mistakes, like bad training data or a lack of guardrails, can cause AI to behave poorly or unpredictably, but they have also proven to be a gold mine of methods for bad actors to abuse in their schemes. Whether they use these methods depends on gaps in their own operations, how AI can fill them, and most importantly, the risk involved.

Part III:

AI in cybercrime

While AI is becoming an important part of the infrastructure to be protected, it is also important to highlight how it is becoming a fundamental enabling tool for cybercriminals to empower their current activities or explore new avenues.

| Segment | Criminal use of GenAI |
| --- | --- |
| CONSUMER | |
| ENTERPRISE | Business Email Compromise |

Table 1. A summary of criminal uses of GenAI

Strategic advantages of AI-automated translation

For Russian-speaking criminals, much of AI’s utility lies in providing automated translation and cultural contextualization. It has enabled them to craft more believable lures and widen their nets to victims across previously inaccessible regions. By combining their use of GenAI with exposed biometric data and information leaked from double extortion ransomware tactics, they can even create digital identities to elevate their cryptocurrency fraud or extortion activities.

AI tools have also helped facilitate large-scale attack campaigns to extract small sums from millions of victims — a distributed approach that reduces their risk of attracting law enforcement’s attention. Criminals who aren’t held back by language barriers are better able to curtail defenders’ language-based attribution efforts. Additionally, they can connect collaborators across language barriers. Based on what Trend Research has observed in the Russian-speaking corners of cybercrime, cooperation is growing between groups from different linguistic and cultural backgrounds — including some individuals without a criminal history, but with links to nation-state interests.

Selective AI adoption among malicious actors

Advertisements for "criminal GPTs" have also made the rounds in underground circles. WormGPT launched in 2023 as one such tool openly marketed in underground markets, though it shuttered within months due to its author (whose identity was well known) feeling the scrutiny from the media and the wider cybersecurity community. Despite offering more anonymity than commercial alternatives, there’s been limited adoption of these criminal LLMs. For one thing, most were not trained for illegal purposes. Many of them, like BlackhatGPT, turned out to be thinly disguised commercial AI platforms that have been jailbroken. The likes of EscapeGPT and LoopGPT do away with the pretense altogether, outright describing themselves as jailbroken versions of existing GenAI platforms that claim privacy guarantees. In our survey of exposed Ollama servers, none of these models were observed.

Unlike offerings that tried and failed to capitalize on the “GPT” label, jailbreak-as-a-service is growing popular within cybercrime forums. For a fee, service providers promise to transparently implement the most recent jailbreak technique available in the underground to sidestep the ethical safeguards built into commercial LLMs, to allow for generating unrestricted answers.

Apart from the productivity boosts and the linguistic and cultural translation possibilities, criminals are generally known for being late adopters of technology, as their line of work makes them very risk-averse, and even when they do adopt it, they opt for evolution over revolution. Malicious actors are unlikely to overhaul their arsenals and operations around new technologies unless they see a significant gain; that is why, for example, agentic AI remains in a nascent stage.

Deepfakes lowered the entry barriers for cybercriminals

For now, no other AI technology arguably has had a bigger blast radius than deepfakes, with 36% of Trend consumers reporting that they have experienced scam attempts involving deepfaked content. In the wrong hands, they can be weaponized as much in broad consumer scams as in highly targeted enterprise attacks.

The criminal element has increasingly turned away from custom underground services in favor of mainstream deepfake creation platforms, and for good reasons: these offer advanced features, like real-time streaming manipulation, multilingual voice cloning, and image nudifying, at low cost, some even for free. In the criminal underground there is no shortage of easy-to-use tools, tutorials, and support for deepfake-based attacks, proof positive of how accessible these are, even for criminals with limited technical skills.

Countermeasures to the abuse of these legitimate services include usage tracking and watermarking: the latter in particular does help identify AI-generated content, but these techniques are still susceptible to tampering and may be difficult to standardize reliably, as pointed out by the Brookings Institution.

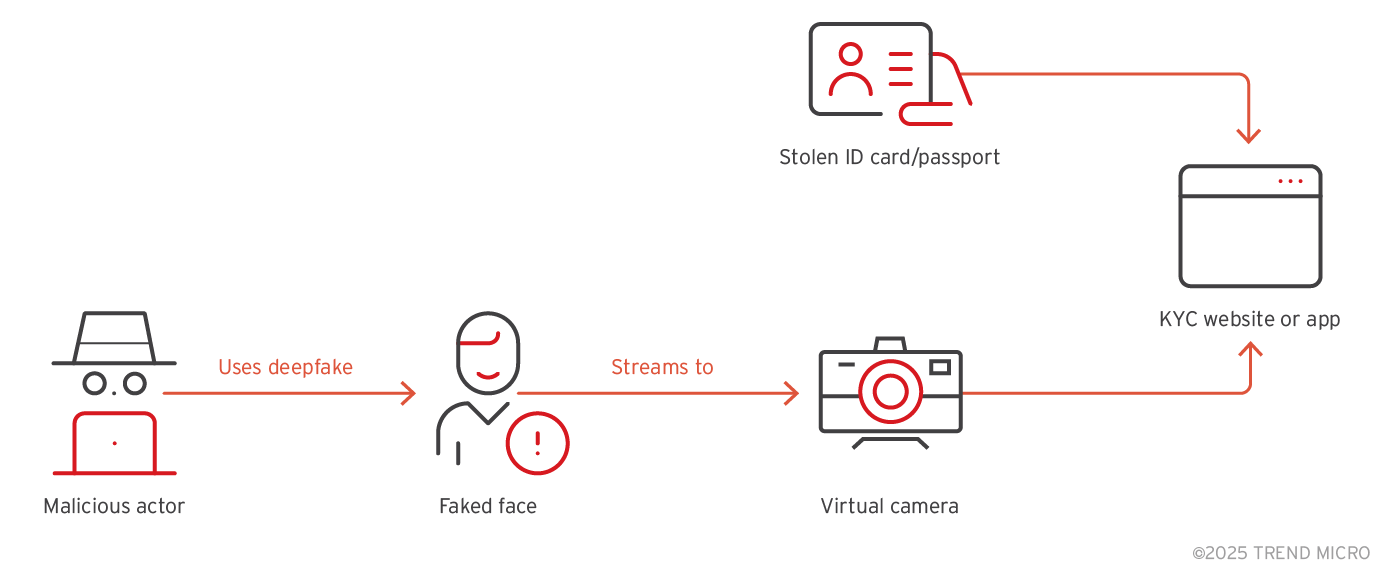

The AI face-off in eKYC bypassing attacks

An important application of deepfake technology for criminals is as a workaround for the AI technology securing electronic Know-Your-Customer (eKYC) systems. This is often to create anonymous accounts on cryptocurrency platforms for money laundering purposes. AI has empowered attackers to generate deepfake images or videos convincing enough to fool eKYC’s own AI models, pitting de facto AI technologies against each other.

eKYC verification models often detect face presence and orientation at lower resolutions to better accommodate customers' varying devices and save bandwidth and space; tests conducted by Trend researchers found that this makes it easier for deepfakes to get past preliminary scans with just a basic laptop GPU and open-source tools like Deepfake Offensive Toolkit (DoT) and Deep-Live-Cam, though it might take multiple attempts to succeed.

Circumventing eKYC using deepfakes has become a largely streamlined affair, thanks in large part to the thriving underground marketplaces that cater to bad actors of all stripes, whether they intend to launch the attack themselves via online deepfake services or opt for bypass-as-a-service offerings that may cost anywhere between US$30 and US$600.

Figure 5. Common eKYC bypassing procedure

Combating this problem necessitates a mindset shift. A zero-trust stance that approaches all digital media with skepticism is necessary in forming strategies that can keep pace with the AI-enhanced arsenal in the hands of fledgling and seasoned criminals alike.

Part IV:

The road ahead

The increasing complexity of agentic AI

The market is now teeming with an influx of so-called “agentic” solutions, but many of these don’t have the full set of the adaptive and self-directed behaviors that set agentic AI apart from basic automation: genuine agentic systems are goal-oriented, context-aware, and action-driven; they’re also capable of multi-step reasoning and self-learning based on new data and experiences.

Addressing the security implications unique to agentic AI hinges on a good grasp of its defining features, because whereas LLMs are more focused on text and media generation, AI agents’ ability to autonomously access tools and perform more complex tasks adds a new dimension of risk, and malicious activity could have far-reaching effects on interconnected tasks and functions while eluding initial detection.

As agentic AI matures, it's expected to move beyond its current configurations and eventually incorporate components like third-party tool marketplaces and agent repositories to improve its versatility, while introducing new fronts of supply chain attacks.

Furthermore, if components like AI models, APIs, or data stores are improperly exposed to public networks, this could also open the door for adversaries to steal or tamper with sensitive data and core functionalities. Because agents frequently interact with external resources, conventional perimeter-based defenses do not work with the growing complexity of agentic AI.

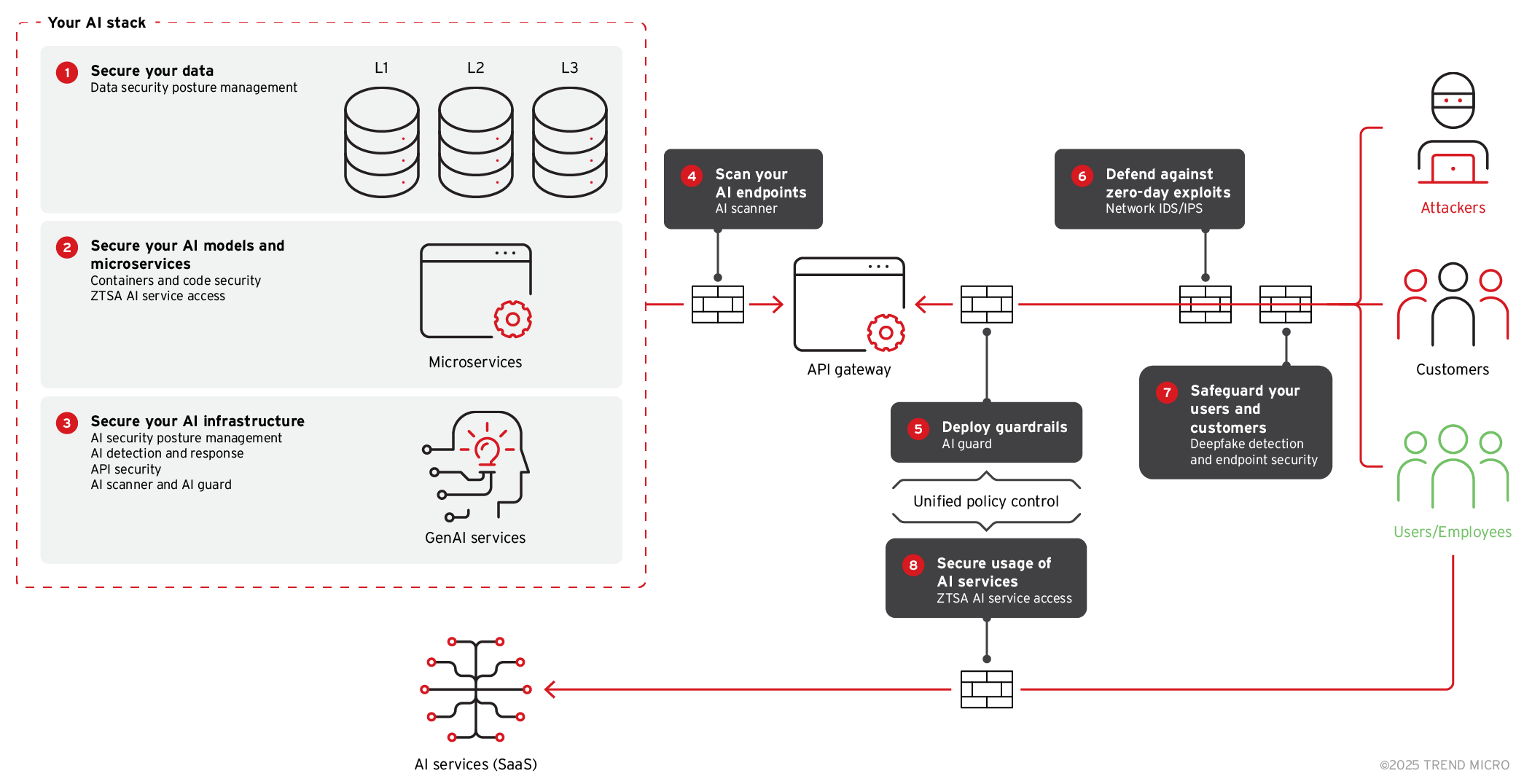

Figure 6. Steps for securing an AI stack

Over time, AI agents may even be able to extend their autonomy by making agents of their own — and when they do, trust-building mechanisms that allow users to oversee AI systems will be imperative. In preparation for this, Trend Research is also actively monitoring developments in agentic meshes, an architectural concept in which multiple connected AI agents work cooperatively on user-defined goals.

Digital assistants to the rescue

When it comes to end users, we do not expect them to interface directly with AI agents in the near future. Instead, we find it more likely that the vast majority of agentic AI consumer applications will be controlled by means of the next generation of digital assistants (DA), with multiple AI agents working in concert behind the scenes to handle complex tasks and each agent assigned an individual role. With next-generation, AI-powered DAs poised to handle large quantities of user information and offer novel forms of interaction with it, future-proofing them will soon become a top priority among security teams.

The security threats facing this novel class of assistants, as with all AI technologies, are closely linked to their advancement. Their use of third-party skills or plugins also widens the attack surface, as malicious actors could disguise harmful code as legitimate add-ons that let them eavesdrop, steal data, or even commandeer a DA without users knowing. Growing reliance on DAs among end users is also something attackers could capitalize on to phish them or to perform other kinds of social engineering on the DA itself.
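One common mitigation for disguised add-ons is to load only plugins whose content matches a digest pinned at review time. The sketch below illustrates the idea with SHA-256; the plugin names, payloads, and the shape of the pinned-digest table are hypothetical, and digest pinning complements, but does not replace, signing and review of the plugin itself.

```python
import hashlib
import hmac

def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the plugin payload."""
    return hashlib.sha256(data).hexdigest()

def is_trusted_plugin(name, payload, pinned_digests):
    """True only when the plugin is known and its content matches the pin."""
    expected = pinned_digests.get(name)
    if expected is None:
        return False  # unknown plugins are rejected by default
    return hmac.compare_digest(expected, sha256_hex(payload))
```

In use, a reviewed plugin would be registered as `pinned = {"weather": sha256_hex(reviewed_bytes)}`, and any later download whose bytes differ, or any plugin name that was never reviewed, fails `is_trusted_plugin` and is not loaded.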

Conclusions:

Vendors at the vanguard of AI security

Advances in AI inevitably widen attack surfaces, so it’s imperative that security measures are baked into every stage of the AI lifecycle to stay ahead of any vulnerabilities or attack vectors that may surface in the process. Agentic reasoning is just one of many paradigm-shifting developments that are set to be the next borderlands in both AI and cybersecurity. As discussed during NVIDIA’s GPU Technology Conference (GTC) this year, the building blocks for AI factories (think production lines for enterprise-grade AI) are already here, with solutions like the Dell AI Factory with NVIDIA helping companies train, implement, and fine-tune their AI environments. Initiatives like NVIDIA's CUDA-Q development platform and the upcoming Accelerated Quantum Research Center are also available to help researchers make headway in quantum-resilient encryption, hybrid quantum-classical computing algorithms, and future practical applications of quantum computing. Trend researchers have likewise contributed insights regarding key factors in cryptosystem design and implementation, the kind of complex problems quantum computers are capable of solving, and how quantum-resistant algorithms may soon succeed current cryptographic standards. While quantum ML is still very much in its nascent stage, this is a good time to familiarize ourselves with its possibilities and limitations.

At the forefront of AI-enhanced cybersecurity is Trend Cybertron, an AI model and agent launched in March that can independently act on cyberthreats. Using GenAI, Cybertron can analyze complex environments, automate incident analysis and decision-making, and coordinate an organization's response to attacks.

Cybertron is fully integrated into Trend Vision One™, the company's flagship extended detection and response (XDR) platform, but many of its components are also open-source. By making aspects of its "cyber brain" publicly available, Trend welcomes the global security community to collectively build on its capabilities and share threat intelligence. In this way, Cybertron also serves as an invaluable resource for developers and researchers, who can access a wealth of training datasets, cybersecurity-focused LLMs, and tools like Trend's Cloud Risk Assessment AI Agent.

These resources are best put to use as part of broader roadmaps that are rooted in the realities of implementation and can steer the trajectory of AI. To bridge the gap between risk awareness and actionable steps, Trend released an AI Security Blueprint that provides architecture recommendations for hardening AI systems. This includes layered security mechanisms that can be used to protect both the data and operational integrity of AI workflows, so defenders come away better equipped to handle challenges across edge, cloud, and datacenter technologies.

Another way Trend supports this proactive security stance is through its December 2024 release of a framework that can be used to anticipate threat scenarios in AI-powered DA systems. As DAs are equipped with additional features and become more interconnected with other devices over time, tracking their evolution (specifically, advances in their user-perceived, interaction-related, and intrinsic capabilities) lets security teams counter blind spots early.

Moving the needle towards fortified AI ecosystems also hinges on ongoing partnerships among industry leaders who can pool their expertise and support. To that end, Trend has recently submitted to MITRE ATLAS its first case study on a cloud and container-based attack on AI infrastructure, and it sponsored the Coalition for Secure AI (CoSAI) in August 2024. Trend’s own long-standing commitment to digital safety aligns closely with the coalition’s work in furthering cross-industry collaboration to establish normative best practices, standards, and tools for securing AI systems. Trend contributes across CoSAI's work on securing the AI supply chain, preparing defenders of AI systems, improving risk governance of AI, and developing new patterns for agentic AI security. Besides contributing to background research on all these topics, in the first half of 2025 Trend has also worked with industry members within CoSAI on specific solutions such as model signing, machine-readable model cards, incident response for AI systems, zero trust for AI, and MCP security.

These efforts provide practical guidance with which organizations can pinpoint necessary investments, implement the right mitigation techniques, and build on their existing defenses against AI-driven cyber threats. Trend researchers have lent the technical know-how from decades of research into threats and AI that CoSAI needs to consistently update and maintain these frameworks and tools, for continued use by defenders holding the line in increasingly AI-dependent systems.

Both defenders and attackers wield AI as a force multiplier: while AI can act as a business enabler, it's also supercharging the capabilities of cybercriminals. Considering this, organizations must stay ahead of adversaries by embedding security at every layer, rigorously assessing and hardening all AI systems. And as smarter, more independent forms of AI come into play, security should be top of mind throughout the AI lifecycle. Through layered defenses, continuous learning, and broad collaboration, business leaders and the cybersecurity community can build resilient, adaptable AI ecosystems that can outmaneuver novel threats and safeguard the future of AI.

