ChatGPT Shared Links and Information Protection: Risks and Measures Organizations Must Understand

Since its initial release in late 2022, the AI-powered text generation tool ChatGPT has seen rapid adoption by both organizations and individual users. However, one of its features, Shared Links, carries the risk of unintentionally disclosing confidential information. In this article, we examine these risks and suggest effective ways to manage them.

The ChatGPT Shared Link feature

ChatGPT's Shared Link feature, which OpenAI introduced on May 24, 2023, allows users to share a conversation with others by generating a unique URL for it. Anyone who receives this URL can view the conversation and continue it in their own ChatGPT session. The feature is particularly useful for sharing lengthy dialogues or useful prompts, offering a more efficient alternative to sharing screenshots.

The risk of information leakage via ChatGPT shared links

However, this handy feature lacks an access control mechanism: anyone who obtains the shared URL can access its content, including people outside the intended audience. Consequently, OpenAI advises against sharing confidential information via this feature. Furthermore, because the feature offers no access analytics, it is impossible to see who has accessed the URL or even how many times it has been accessed.

Public disclosure through shared links

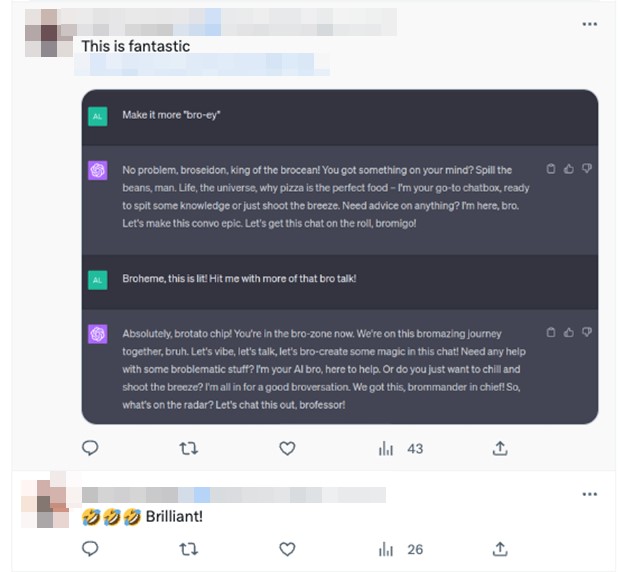

This feature has found extensive use across social media platforms, where users share interesting conversations with ChatGPT.

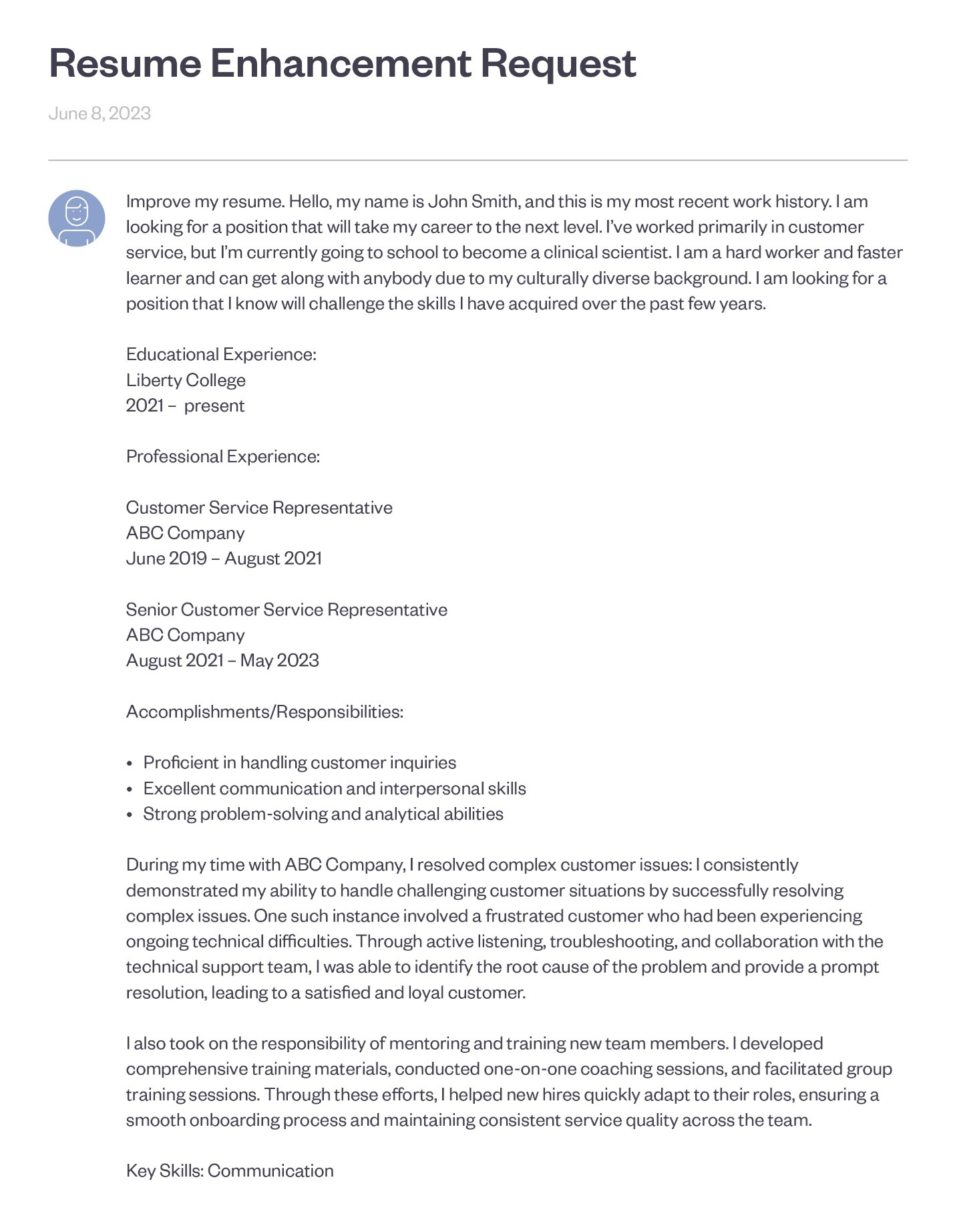

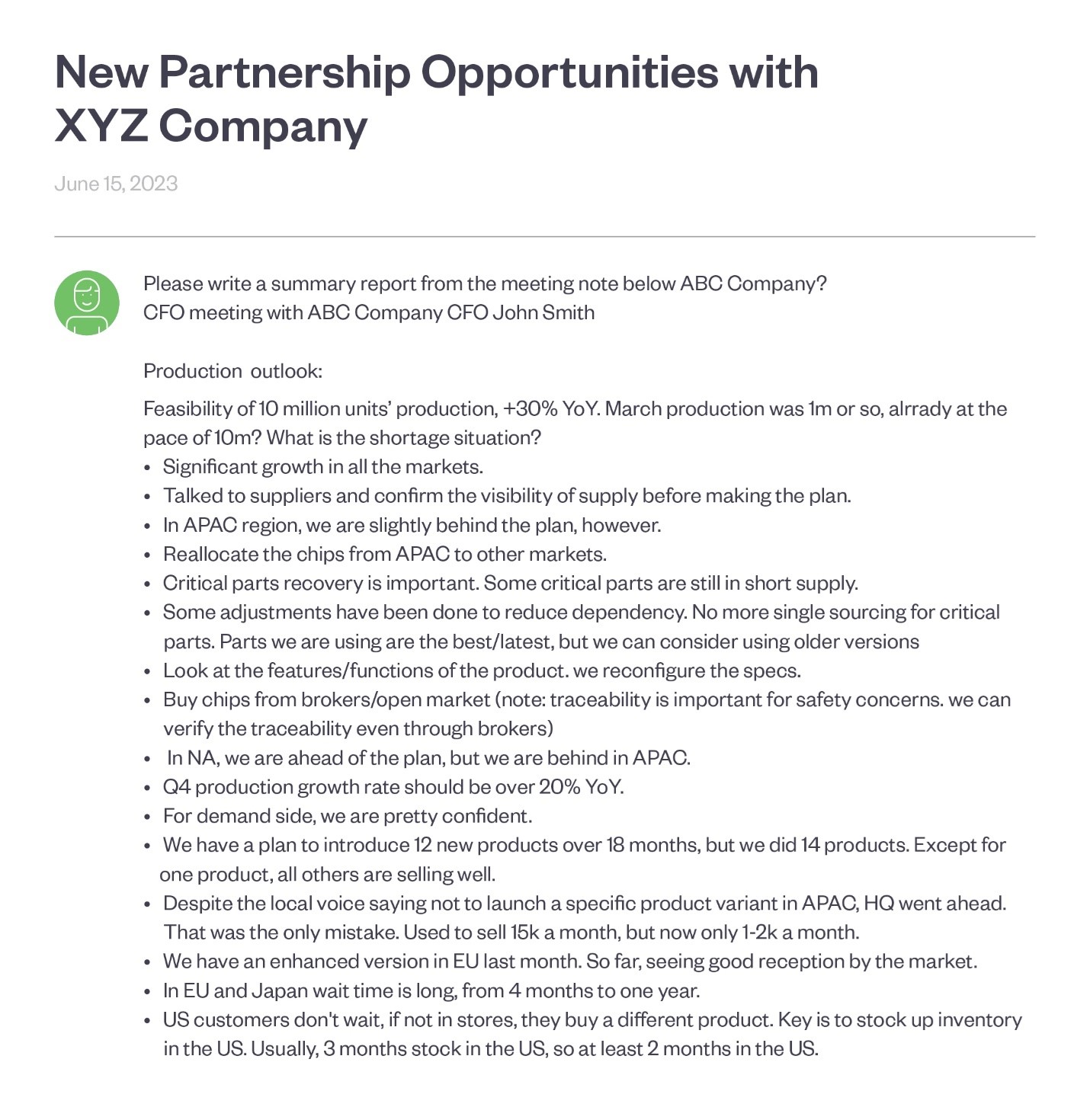

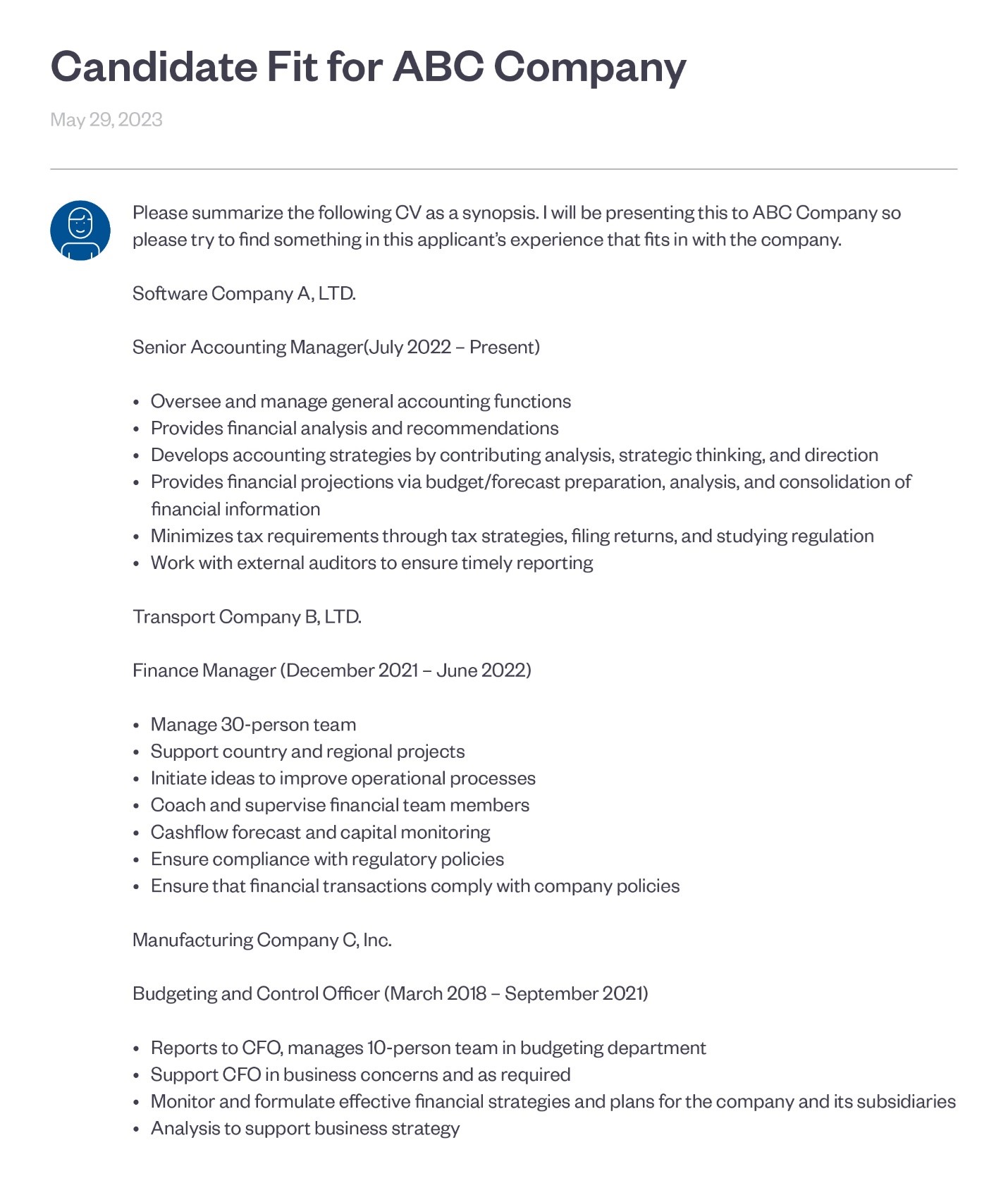

We discovered cases in which sensitive information, including personal data, was unintentionally disclosed through these shared links. One example is individuals using ChatGPT to review their resumes.

While this might be an effective use of ChatGPT, sharing these interactions publicly raises certain concerns. For individuals, the extent of shared information might be a matter of personal discretion. However, what if an employee inadvertently shares work-related information? This scenario goes beyond individual responsibility and brings the entire organization's accountability into question.

Case studies

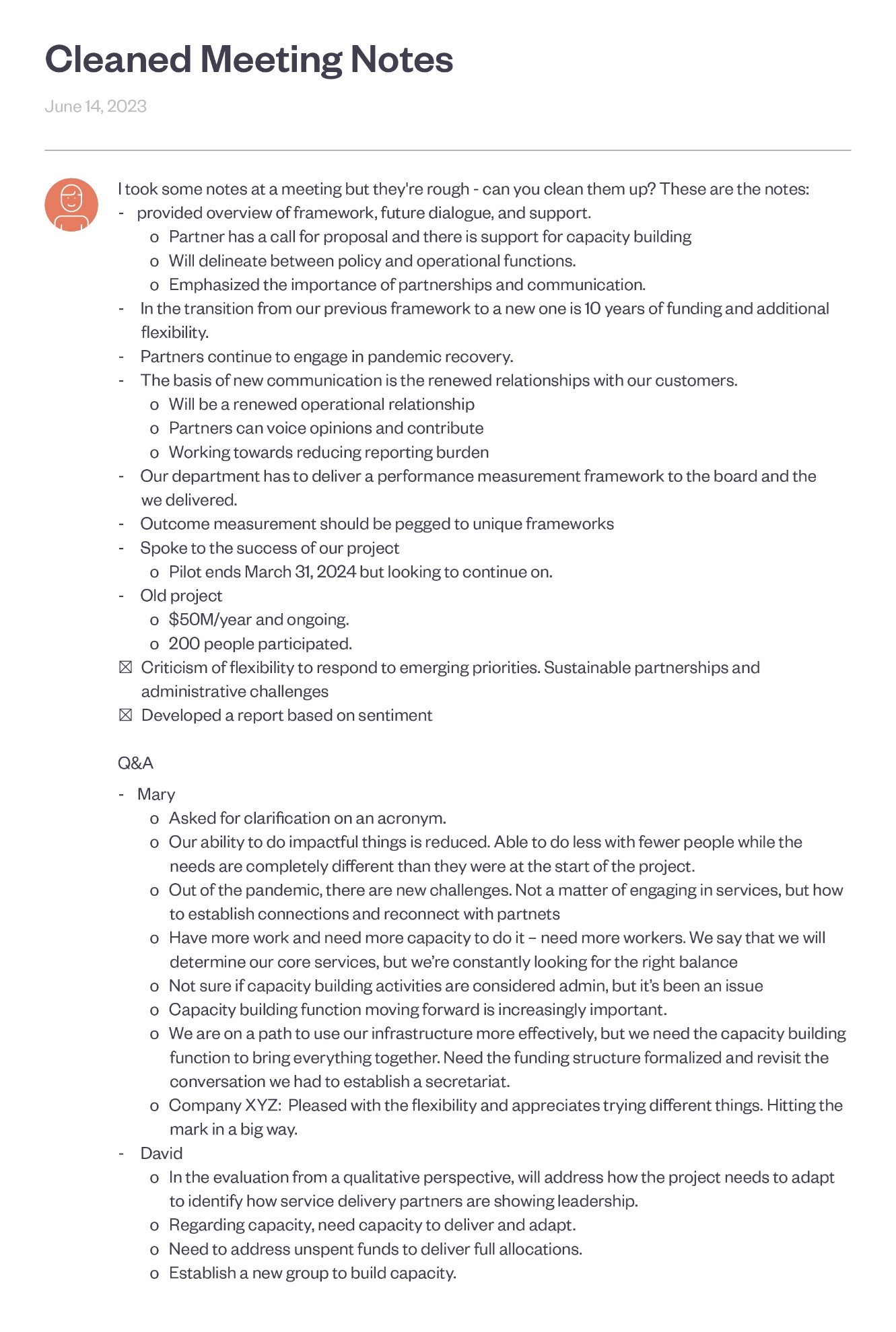

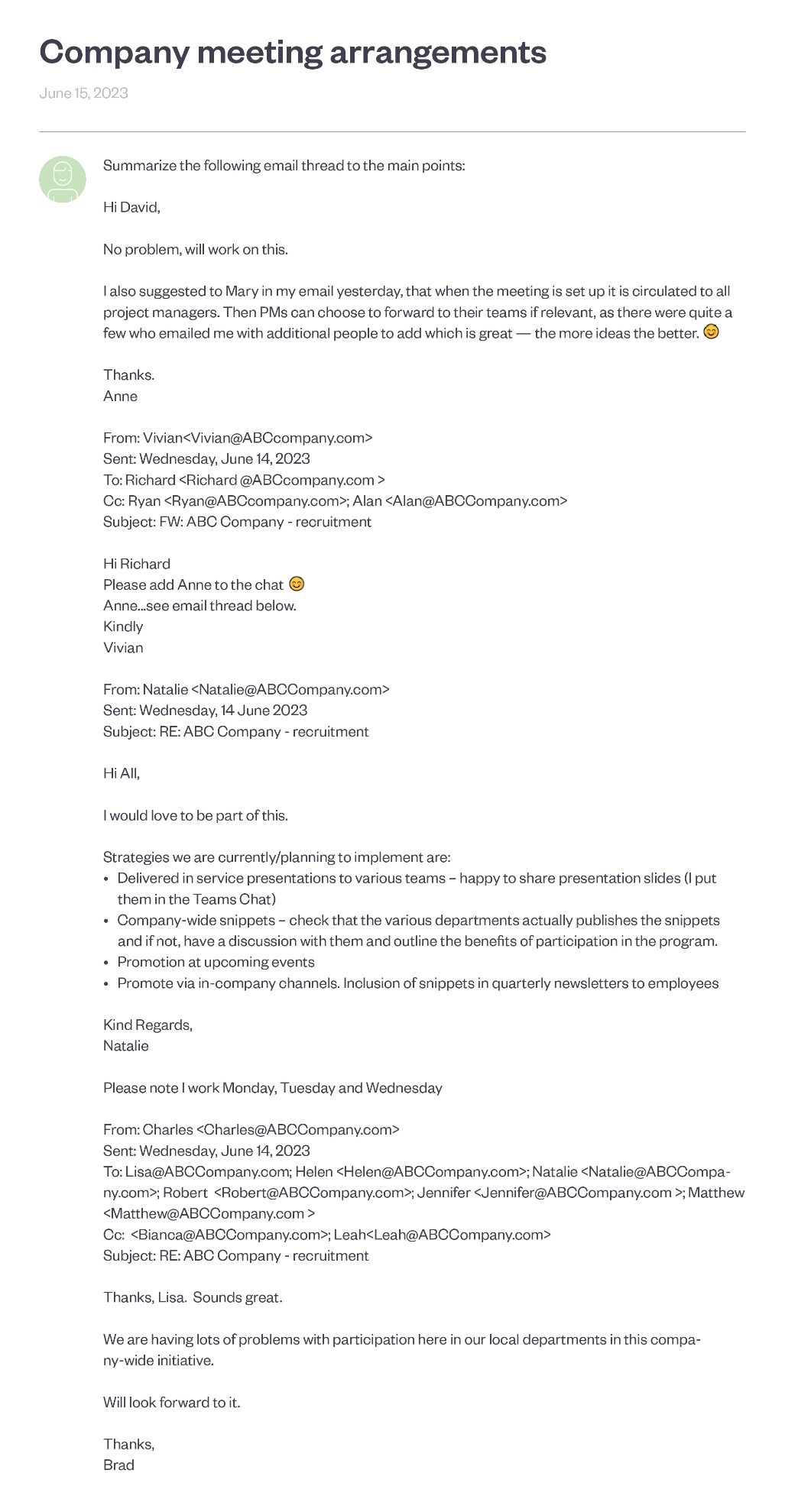

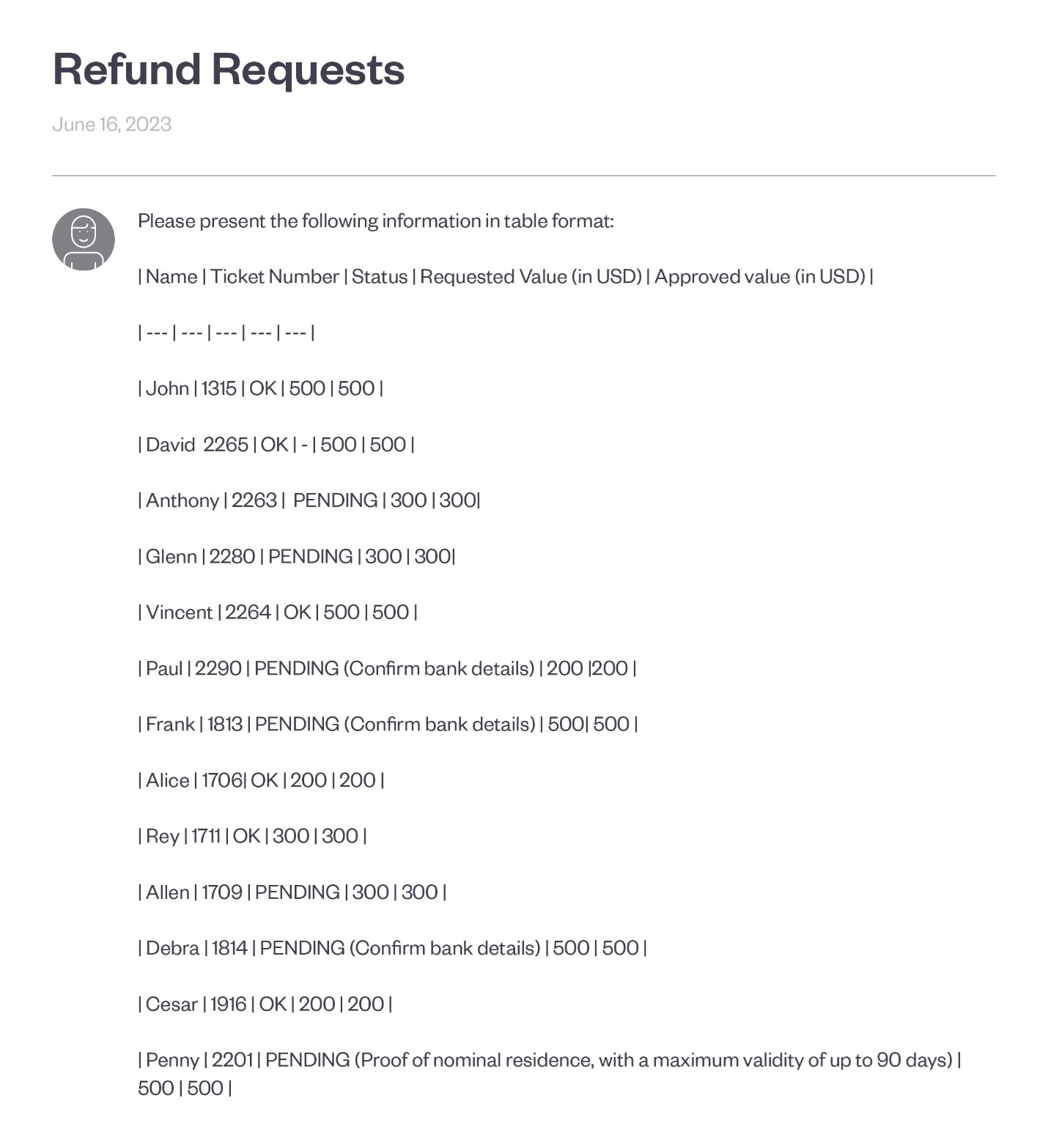

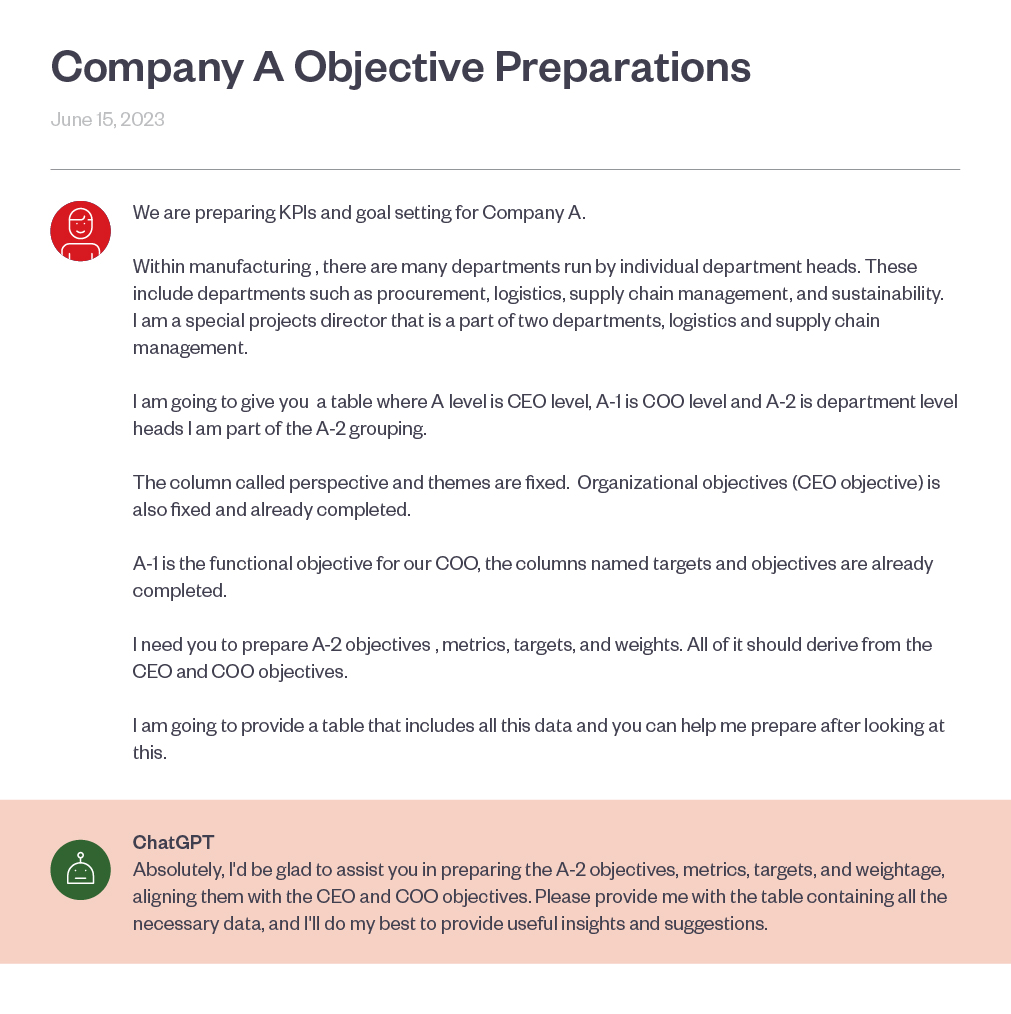

We also found instances in which employees inadvertently exposed sensitive organizational information. The screenshots in this section are case studies based on real events, with certain details modified to ensure anonymity and prevent information leaks. Note that they are recreations rather than the actual ChatGPT prompts, which is why they look different.

While these examples demonstrate interesting uses of ChatGPT, they raise the question of whether it was necessary to publicize them via shared links. Although the intention may have been to share them with select colleagues, the content becomes accessible to anyone who has the link.

As a result, the shared URL could end up in the wrong hands, or its content could be reproduced elsewhere. If information intended for specific people is sensitive and could cause problems if made public, it should not be shared using the shared link feature.

Recommended organizational measures

To mitigate these risks, both individuals and organizations should consider the following when using ChatGPT’s Shared Links feature:

Risk awareness: Understand the inherent risks involved with the feature: anyone with the shared link URL can access its content. Users should also be aware that links can end up with unintended parties for various reasons.

Policies and guidelines: Establish clear policies and guidelines around what information can be entered into ChatGPT and what should be avoided. This should include guidelines on using the Shared Link feature and other necessary precautions.

Education and training: Regular training on information security can help raise awareness among employees and prevent information leakage. Training should include specific risk scenarios and lessons from real cases.

Monitoring and response: Consider technologies such as URL filtering and confidential information transmission detection for proactive monitoring and adherence to established policies and guidelines. A swift response is necessary in case of inappropriate access or leaked information.
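
As a rough illustration of the monitoring idea above, the following Python sketch scans outbound text (for example, a chat message or email body) for ChatGPT shared link URLs and for a few markers of confidential content. The URL pattern is an assumption about what shared links look like (a `/share/` path followed by a UUID on `chat.openai.com` or `chatgpt.com`) and should be checked against current behavior. The function names and the sensitive-content patterns are hypothetical placeholders, not part of any product or of OpenAI's API.

```python
import re

# ASSUMPTION: shared links have the form https://<host>/share/<36-char UUID>.
# Verify the hosts and path against current ChatGPT behavior before relying on this.
SHARE_LINK_RE = re.compile(
    r"https?://(?:chat\.openai\.com|chatgpt\.com)/share/[0-9a-fA-F-]{36}"
)

# Hypothetical examples of patterns an organization might treat as sensitive.
# A real policy would define its own list.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "confidential_marker": re.compile(
        r"\b(?:confidential|internal only)\b", re.IGNORECASE
    ),
}


def find_share_links(text: str) -> list[str]:
    """Return every substring of text that matches the assumed shared link pattern."""
    return SHARE_LINK_RE.findall(text)


def find_sensitive(text: str) -> dict[str, list[str]]:
    """Return the matches for each sensitive pattern that occurs in text."""
    hits: dict[str, list[str]] = {}
    for name, pattern in SENSITIVE_PATTERNS.items():
        found = pattern.findall(text)
        if found:
            hits[name] = found
    return hits
```

A filter built along these lines could flag a message for review when `find_share_links` returns a result, and could warn users before they paste text for which `find_sensitive` returns hits. Pattern matching of this kind will miss some cases and raise false alarms on others, so it supports policies and training rather than replacing them.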

Conclusion

In this article, we've discussed ChatGPT's shared link feature, its inherent risks, and the precautions needed to use it safely. In an era where AI adoption directly affects corporate competitiveness, it's essential to understand and manage such features in order to protect information adequately. By doing so, organizations can harness the benefits of AI while effectively mitigating the risk of information leakage.