Artificial intelligence (AI) is a technology that empowers computers and machines to learn, understand, create, solve problems, predict outcomes, and make decisions.

What is AI?

On the most basic level, AI refers to computer or machine systems that use technologies like machine learning (ML), neural networks, and cognitive architectures to perform the kinds of complicated tasks that previously only human beings were able to do.

These include everything from creating content to planning, reasoning, communicating, learning from experience, and making complex decisions. That said, because AI systems and tools are so broad and varied, no single definition applies perfectly to all.

Since AI was first introduced in the 1950s, it has transformed almost every aspect of modern life, society, and technology. With its ability to analyze vast amounts of data, understand patterns, and acquire new knowledge, AI has become an indispensable tool in virtually every field of human activity, from business and transportation to healthcare and cybersecurity.

Among other applications, organizations use AI to:

- Reduce costs

- Drive innovation

- Empower teams

- Streamline operations

- Accelerate decision-making

- Consolidate and analyze research findings

- Provide prompt customer support and service

- Automate repetitive tasks

- Assist with idea generation

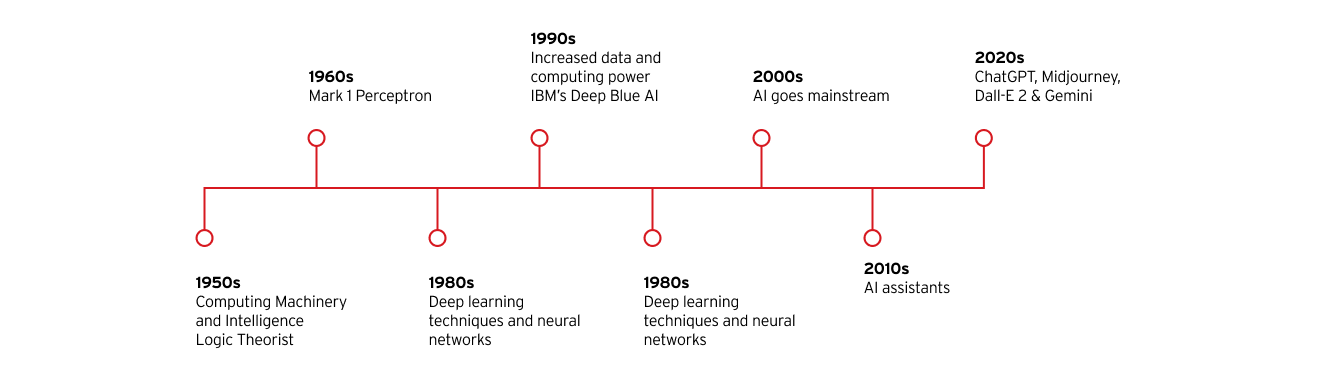

A brief history of AI

The idea of a machine that can think for itself goes back thousands of years. In its modern context, artificial intelligence as a defined concept can be traced back to the year 1950, when mathematician and computer scientist Alan Turing—creator of the famous “Turing Test” to determine if a computer can think like a human being—published his influential paper on the notion of artificial intelligence, Computing Machinery and Intelligence.

In the decades since Turing’s paper first appeared, AI has undergone a dramatic evolution in its scope and capabilities, propelled by exponential advancements in computing power, algorithmic sophistication, data availability, and the introduction of technologies like machine learning, data mining, and neural networks.

Key milestones in the evolution of artificial intelligence

| Year | Milestones |
| --- | --- |
| 1950s | Publication of Alan Turing’s “Computing Machinery and Intelligence”; John McCarthy coins the term “artificial intelligence”; creation of Logic Theorist, the first AI computer program |
| 1960s | Creation of the Mark I Perceptron, the first computer to learn by trial and error |
| 1980s | Growth of deep learning techniques and neural networks |
| 1990s | Increased data and computing power accelerate growth of, and investment in, AI; IBM’s Deep Blue AI defeats reigning world chess champion Garry Kasparov |
| 2000s | AI goes mainstream with the launches of Google’s AI-powered search engine, Amazon’s product recommendation engine, Facebook’s facial recognition system, and the first self-driving cars |
| 2010s | AI assistants like Apple’s Siri and Amazon’s Alexa are introduced; Google launches its open-source machine learning framework, TensorFlow; the AlexNet neural network popularizes the use of graphics processing units (GPUs) for training AI models |
| 2020s | OpenAI releases GPT-3, the third iteration of its GPT large language model (LLM), and later launches its highly popular ChatGPT generative AI (GenAI) chatbot; the GenAI wave continues with the launch of image generators like Midjourney and DALL-E 2 and LLM chatbots like Google’s Gemini |

How does AI work?

AI systems work by ingesting vast amounts of data and analyzing it with algorithms that loosely imitate human cognitive processes. In doing so, they identify and categorize patterns and use them to carry out tasks or make predictions about future outcomes without direct human oversight or step-by-step instructions.

For example, an image-generating AI program like Midjourney that’s fed huge numbers of photographs can learn how to create “original” images based on prompts entered by a user. Similarly, a customer service AI chatbot trained on large volumes of text can learn how to interact with customers in ways that mimic human customer service agents.

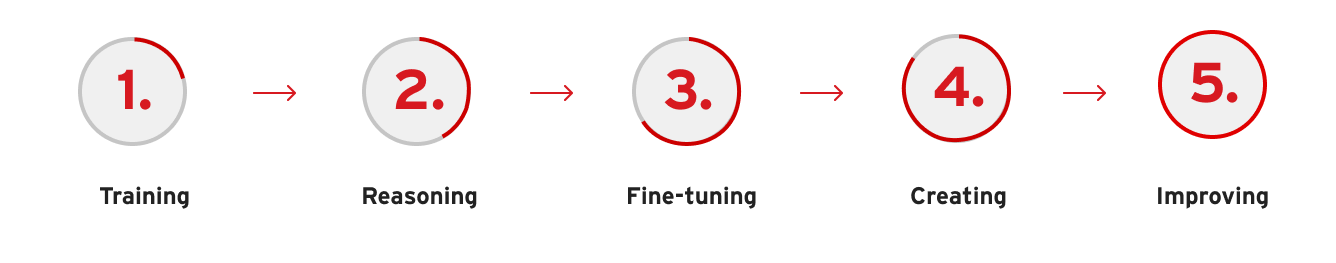

While each system is different, AI models are usually programmed following the same five-step process:

- Training—the AI model is fed huge amounts of data and uses a series of algorithms to analyze and assess the data.

- Reasoning—the AI model categorizes the data it has received and identifies any patterns in it.

- Fine-tuning—as the AI model tries different algorithms, it learns which ones tend to be most successful and fine-tunes its actions accordingly.

- Creating—the AI model uses what it’s learned to carry out assigned tasks, make decisions, or create music, text, or images.

- Improving—finally, the AI model makes continuous ongoing adjustments to improve its accuracy, effectively “learning” from its experience.
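As a rough illustration of the training, improving, and creating steps above, the following minimal Python sketch fits a toy model of the form y = w * x + b using gradient descent. The data points, learning rate, step count, and function names are all assumptions chosen for this example; real AI models have vastly more parameters and far more data, but the loop of "measure the error, then adjust" is similar in spirit.

```python
def train(points, learning_rate=0.05, steps=2000):
    """Fit w and b so that w * x + b approximates y for each (x, y) pair."""
    w, b = 0.0, 0.0  # the model starts out knowing nothing
    n = len(points)
    for _ in range(steps):
        # Measure how the mean squared error changes with each parameter.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in points) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in points) / n
        # Nudge the parameters in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def predict(model, x):
    """Use the learned parameters on an input the model has not seen."""
    w, b = model
    return w * x + b


# Illustrative training data that follows the hidden rule y = 2x + 1.
training_data = [(0, 1), (1, 3), (2, 5), (3, 7), (4, 9)]
model = train(training_data)
prediction = predict(model, 10)
print(round(prediction, 3))
```

The model is never told the rule behind the data. It recovers an approximation of it by repeatedly reducing its own error, which is what lets it make a reasonable prediction for an input outside the training set.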

Machine learning vs. deep learning

Most modern AI systems use a variety of techniques and technologies to simulate the processes of human intelligence. The most important of these are machine learning (ML) and deep learning. While the two terms are sometimes used interchangeably, they differ in how they train AI models.

Machine learning uses algorithms to analyze, categorize, sort, and learn from massive quantities of data, producing models that can predict outcomes without having to be told exactly how to do it.

Deep learning is a sub-category of machine learning that accomplishes the same objectives by using neural networks to mimic the structure and function of the human brain. We’ll explore both of these concepts in greater detail below.

Basic principles of machine learning

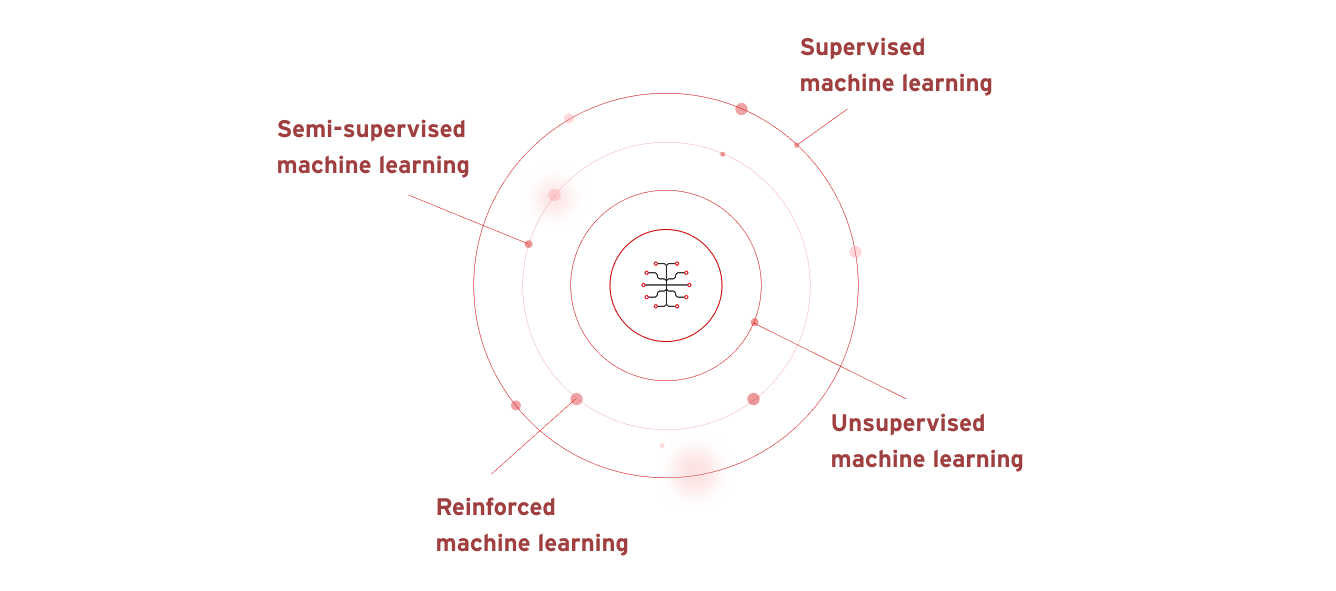

There are four main types of machine learning:

- Supervised machine learning: the AI is trained on datasets that are known, labeled, and classified, and learns the patterns that connect each input to its known label.

- Semi-supervised machine learning: the AI is trained on a small, labeled dataset and then applies what it has learned to larger, unlabeled datasets.

- Unsupervised machine learning: the AI learns from datasets that are unknown, unlabeled, and unclassified.

- Reinforcement learning: the AI model isn’t trained on a prepared dataset. Instead, it learns by trial and error, adjusting its behavior based on feedback until it is successful.
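To make the difference between labeled and unlabeled training more concrete, here is a minimal Python sketch that contrasts a supervised approach (a nearest-average classifier) with an unsupervised one (a two-group clustering loop). The transaction amounts, labels, and function names are invented for illustration, and the sketch assumes the unlabeled data contains at least two distinct values. Semi-supervised learning and trial-and-error learning are not shown.

```python
def fit_supervised(labeled):
    """Supervised: labels are given, so learn one average value per label."""
    groups = {}
    for value, label in labeled:
        groups.setdefault(label, []).append(value)
    return {label: sum(vals) / len(vals) for label, vals in groups.items()}


def classify(averages, value):
    """Predict the label whose learned average is closest to the value."""
    return min(averages, key=lambda label: abs(averages[label] - value))


def cluster_unsupervised(values, rounds=10):
    """Unsupervised: no labels, so split the values into two groups."""
    low, high = min(values), max(values)  # deterministic starting centers
    for _ in range(rounds):
        near_low = [v for v in values if abs(v - low) <= abs(v - high)]
        near_high = [v for v in values if abs(v - low) > abs(v - high)]
        low = sum(near_low) / len(near_low)
        high = sum(near_high) / len(near_high)
    return sorted(near_low), sorted(near_high)


# Hypothetical transaction amounts, with and without labels.
labeled = [(12, "normal"), (15, "normal"), (9, "normal"),
           (950, "suspicious"), (1100, "suspicious")]
averages = fit_supervised(labeled)
label_small = classify(averages, 20)
label_large = classify(averages, 800)

small_group, large_group = cluster_unsupervised([12, 15, 9, 950, 1100, 14, 1020])
print(label_small, label_large, small_group, large_group)
```

The supervised model can name its answer ("normal" or "suspicious") because it was shown named examples. The unsupervised loop finds the same two groups in the data but has no names for them, which is the practical trade-off between the two approaches.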

Machine learning is what enables self-driving cars to respond to changes in the environment well enough to be able to deliver their passengers safely to their chosen destination. Other applications of machine learning run the gamut from image and speech recognition programs, language translation apps, persona-driven AI agents, and data mining to credit card fraud detection, health care diagnoses, and social media, product, or brand recommendations.

Deep learning and neural networks

Deep learning is a form of machine learning that’s based on the use of advanced neural networks—machine learning algorithms that emulate how the neurons in a human brain function in order to identify complex patterns in large sets of data.

For example, even very young children can instantly tell their parents apart from other adults because their brains can analyze and compare hundreds of unique or distinguishing details in the blink of an eye, from the color of their eyes and hair to distinctive expressions or facial features.

Neural networks replicate the way human brains work by similarly analyzing thousands or millions of tiny details in the data they’re fed to detect and recognize larger patterns between them. Generative AI (or “GenAI”) systems like OpenAI’s ChatGPT or the Midjourney image generator, for example, use deep learning to ingest and analyze large numbers of images or text, and then use that data to create new text or images that are similar to, but different from, the original data.
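The idea of layered neurons combining small details into larger patterns can be sketched in a few lines of Python. The tiny network below recognizes the "one or the other, but not both" (XOR) pattern, which a single neuron cannot represent on its own. The weights and biases are set by hand purely for illustration; in a real neural network they would be learned during training, and there would be many more neurons and layers.

```python
def step(x):
    """Activation: the neuron "fires" (1) only when its input is positive."""
    return 1 if x > 0 else 0


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)


def xor_network(a, b):
    # Hidden layer: each neuron detects one simple feature of the input.
    either = neuron([a, b], [1, 1], -0.5)  # fires if a or b is on
    both = neuron([a, b], [1, 1], -1.5)    # fires only if a and b are on
    # Output layer: combine the simple features into a more complex pattern.
    return neuron([either, both], [1, -2], -0.5)  # either, but not both


outputs = [xor_network(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(outputs)
```

Each hidden neuron responds to a simple feature, and the output neuron combines those features into a pattern that neither could detect alone. Deep learning stacks many such layers so that later layers can respond to increasingly complex patterns.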

What are the latest developments in AI?

In recent years, ground-breaking innovations in natural language processing, computer vision, reinforcement learning, and cutting-edge technologies like generative adversarial networks (GANs), transformer models, and true AI-enabled machines (AIEMs) have greatly expanded the capabilities of AI systems to mimic human intelligence processes more closely, generate more realistic content, and perform increasingly complex tasks.

Breakthroughs in machine learning and deep learning

Revolutionary breakthroughs in machine learning and deep learning algorithms have enabled researchers and developers to create incredibly sophisticated AI systems for a wide variety of real-world applications.

AI chatbots, for instance, are used by millions of businesses every day to answer questions, sell products, and interact with their clients. Businesses also use AI algorithms to discover trends based on a customer’s past purchases and make personalized recommendations for new products, brands, or services.
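As a simplified illustration of recommending products from past purchases, the Python sketch below scores items by how often they were bought by customers with overlapping purchase histories. The customer names, products, and the `recommend` function are hypothetical, and production recommendation engines use far richer signals and learned models than this simple overlap count.

```python
from collections import Counter


def recommend(purchase_history, customer, top_n=2):
    """Suggest items bought by customers whose purchases overlap with this one."""
    owned = purchase_history[customer]
    scores = Counter()
    for other, items in purchase_history.items():
        if other == customer:
            continue
        overlap = len(owned & items)  # how similar the two customers are
        for item in items - owned:    # only suggest items not yet owned
            scores[item] += overlap
    # Highest score first; ties are broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    return [item for item, score in ranked if score > 0][:top_n]


# Hypothetical purchase histories.
history = {
    "ana": {"laptop", "mouse"},
    "ben": {"laptop", "mouse", "keyboard"},
    "cara": {"laptop", "monitor"},
    "dev": {"novel", "bookmark"},
}
suggestions = recommend(history, "ana")
print(suggestions)
```

Items bought by customers with no purchases in common score zero and are filtered out, so the suggestions stay tied to the customer's own buying pattern.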

In the field of automatic speech recognition (ASR), AI services like Siri and Alexa use natural language processing (NLP) to translate human speech into written content. Similarly, advances in AI-driven “computer vision” neural networks have made it easier to tag photos on social media, and made self-driving cars safer.

Other examples of AI using machine or deep learning include automated stock trading algorithms, intelligent robots that can perform repetitive tasks in factories or on assembly lines, and the use of machine learning to help banks spot suspicious transactions and stop financial fraud.

The evolving role of AI in cybersecurity

When it comes to the role of AI in cybersecurity, there are two distinct but interrelated areas to consider: AI security and AI cybersecurity.

AI security (also called security for AI) refers to the use of cybersecurity measures to protect an organization’s AI stack, reduce or eliminate AI security risks, and secure every AI system, component, and application throughout a network, from the endpoints to the AI models. This includes:

- Safeguarding the AI stack, infrastructure, models, and training data from attack

- Maintaining the data integrity of machine learning and deep learning pipelines

- Addressing the issues of AI bias, transparency, explainability, and other ethical concerns

- Ensuring the use or development of AI is fully compliant with all relevant laws, policies, and regulations

AI cybersecurity covers all the different ways AI-enabled tools can proactively enhance an organization’s cybersecurity defenses faster, more accurately, and more effectively than any human cybersecurity team or security operations center (SOC) ever could. This includes using AI to:

- Identify cyber threats and defend against cyberattacks in real time

- Eliminate cybersecurity gaps and vulnerabilities faster and with greater accuracy

- Automate threat detection and response and other cybersecurity tools for faster incident response

- Enhance threat intelligence to empower or support more efficient threat management

- Automate routine tasks like vulnerability scanning and data log analysis to free the human cybersecurity team to address more complex threats

Examples of AI applications in cybersecurity

Organizations are already using AI in a variety of ways to enhance their cybersecurity posture, detect and respond to cyberattacks, and defend their networks from cyber threats such as data breaches, distributed denial-of-service (DDoS) attacks, ransomware, malware, phishing attacks, and identity threats.

In the area of threat detection and response, AI can identify and predict cyber threats, analyze patterns in activity logs and network traffic, authenticate and protect passwords and user logins, employ facial recognition and CAPTCHA logins, simulate cyberattacks, scan for network vulnerabilities, and create automated cybersecurity defenses against new or emerging threats. This includes tools like:

- AI-powered next-generation firewalls (NGFWs)

- AI security information and event management (SIEM)

- AI cloud and endpoint security systems

- AI network detection and response (NDR)

- AI extended detection and response (XDR)

When an attack does happen, AI can also offer effective remediation strategies or automatically respond to security incidents based on an organization’s pre-set policies and playbooks. This can help reduce the costs and minimize the damage from an attack, and allow organizations to recover more quickly.
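To give a sense of what analyzing patterns in activity logs can mean in practice, the following Python sketch flags hours in which failed logins are far above a historical baseline. It uses a simple statistical threshold (a z-score) rather than a trained model, and the counts, threshold, and function name are assumptions made for this example. Commercial detection tools combine many more signals and more sophisticated models.

```python
from statistics import mean, pstdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return (hour, count) pairs whose count is far above the baseline."""
    center = mean(baseline)
    spread = pstdev(baseline) or 1.0  # avoid dividing by zero on a flat baseline
    return [
        (hour, count)
        for hour, count in observed
        if (count - center) / spread > threshold
    ]


# Hypothetical failed-login counts per hour.
baseline_failed_logins = [4, 6, 5, 7, 5, 6, 4, 5]
todays_failed_logins = [(9, 6), (10, 5), (11, 48), (12, 7)]

alerts = find_anomalies(baseline_failed_logins, todays_failed_logins)
print(alerts)
```

An alert like this could then feed an automated response, such as temporarily locking the affected accounts, in line with the pre-set policies and playbooks described above.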

What ethical considerations are involved in the development and use of AI?

AI clearly offers a number of powerful advantages over other types of computing systems. But as with any new technology, there are risks, challenges, and ethical questions that need to be considered in the development, adoption, and use of AI.

Bias and fairness

AI models are trained by human beings using data drawn from existing content. This creates a risk that the model could reflect or reinforce any implicit biases contained in that content. Those biases could potentially lead to inequity, discrimination, or unfairness in the algorithms, predictions, and decisions that are made using those models.

In addition, because the content they create can be so realistic, GenAI tools have the potential to be misused to create or spread misinformation, disinformation, harmful content, and deepfake video, audio, and images.

Privacy concerns

There are also several privacy concerns surrounding the development and use of AI, especially in industries like healthcare, banking, and legal services that deal with highly personal, sensitive, or confidential information.

To safeguard that information, AI-driven applications must follow a clear set of best practices around data security, privacy, and data protection. This includes using data anonymization techniques, implementing robust data encryption, and employing advanced cybersecurity defenses to protect against data theft, data breaches, and hackers.
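As one small example of protecting personal data before it is used by an AI application, the Python sketch below replaces direct identifiers with keyed hashes. Strictly speaking this is pseudonymization, which is weaker than full anonymization because anyone holding the key can re-create the mapping. The field names, key, and `pseudonymize` function are hypothetical, and a real deployment would also need proper key management and a review of any remaining fields that could identify someone indirectly.

```python
import hashlib
import hmac


def pseudonymize(record, secret_key, sensitive_fields=("name", "email")):
    """Return a copy of the record with sensitive fields replaced by keyed hashes."""
    cleaned = dict(record)  # leave the original record untouched
    for field in sensitive_fields:
        if field in cleaned:
            value = str(cleaned[field]).encode("utf-8")
            digest = hmac.new(secret_key, value, hashlib.sha256)
            cleaned[field] = digest.hexdigest()[:16]  # shortened token
    return cleaned


# Hypothetical record and key, for illustration only.
key = b"example-key-store-real-keys-securely"
patient = {"name": "Jane Doe", "email": "jane@example.com", "age": 42}

safe_record = pseudonymize(patient, key)
print(safe_record)
```

Because the same input always produces the same token under a given key, records belonging to one person can still be linked for analysis without exposing the underlying name or email address.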

Regulatory compliance

Many regulatory agencies and frameworks like the General Data Protection Regulation (GDPR) require companies to follow clear sets of rules when it comes to protecting personal information, ensuring transparency and accountability, and safeguarding privacy.

To comply with those regulations, organizations should make sure they have corporate AI policies in place to monitor and control the data that’s used to build new AI models, and to protect any AI models that might contain sensitive or personal information from bad actors.

What is the future of AI technology?

What will happen in the future of AI is, of course, impossible to predict. But it’s possible to make a few educated assumptions about what comes next based on current trends in AI use and technology.

Emerging trends in AI research

In terms of AI research, innovations in autonomous AI systems, meta-AI and meta-learning, open-source large language models (LLMs), digital twins and red teaming for risk validation, and human-AI joint decision-making could revolutionize the way AI is developed.

Complex new systems like neuro-symbolic AI, true AI-enabled machines (AIEMs), and quantum machine learning will also likely further enhance the reach and capabilities of AI models, tools, and applications.

Another technology that has the potential to revolutionize the way AI works is the move towards next-generation agentic AI—AI that has the ability to make decisions and take actions on its own, without human direction, oversight, or intervention.

According to tech analyst Gartner, by the year 2028 agentic AI could be used to make up to 15% of all daily work decisions. Agentic AI user interfaces (UI) could also become more proactive and persona-driven as they learn how to act more like human agents with set personalities, carry out more complex business tasks, make more important business decisions, and provide more personalized client recommendations.

Potential impacts of AI on the workforce

As AI increases operational efficiencies and takes over routine tasks, and as GenAI engines like ChatGPT and Midjourney become more powerful and widespread, there are valid concerns about the possible impact on jobs in numerous industries.

But as with the introduction of the internet, personal computers, cell phones, and other paradigm-shifting technologies in the past, AI will also likely create new opportunities and perhaps even whole new industries that will need skilled and talented workers.

As a result, rather than navigating job losses, the bigger challenge may be determining how best to train workers for those new opportunities, and to facilitate their transitions from shrinking to growing occupations.

The role of AI in addressing global challenges

In addition to increasing operational efficiencies and enhancing cybersecurity, AI has the potential to help solve some of the biggest challenges facing humankind today.

In the field of healthcare, AI can help doctors make faster and more accurate diagnoses, track the spread of future pandemics, and accelerate the discovery of new pharmaceutical drugs, treatments, and vaccines.

AI technologies could improve the speed and efficiency of emergency responses to natural and human-caused disasters and severe weather events.

AI could also help address climate change by optimizing the use of renewable energy, reducing the carbon footprints of businesses, tracking global deforestation and ocean pollution levels, and improving the efficiency of recycling, water treatment, and waste management systems.

Other likely trends and developments

Some of the other likely future trends, capabilities, and applications for AI include:

- Large language model (LLM) security to protect LLMs from malicious attacks, general misuse, unauthorized access, and other cyber threats. This includes measures to protect LLM data, models, and their associated systems and components.

- Personalized, user-centric AI to offer more customized, intelligent, and personalized client service, including for email endpoint marketing.

- Use of AI models to facilitate red teaming and digital twin exercises by simulating attacks on an organization’s IT systems to test for vulnerabilities and mitigate any flaws or weaknesses.

Where can I get help with AI and cybersecurity?

Trend Vision One™ delivers unmatched end-to-end protection for the entire AI stack in a single, unified AI-powered platform.

Leveraging the feature-rich capabilities of Trend Cybertron—the world’s first proactive cybersecurity AI—Trend Vision One includes a suite of agentic AI features that continuously evolve based on real-world intelligence and security operations.

This allows it to adapt rapidly to emerging threats in order to enhance an organization’s security posture, improve operational efficiencies, transform security operations from reactive to proactive, and secure every layer of AI infrastructure.

Fernando Cardoso is the Vice President of Product Management at Trend Micro, focusing on the ever-evolving world of AI and cloud. His career began as a Network and Sales Engineer, where he honed his skills in datacenters, cloud, DevOps, and cybersecurity—areas that continue to fuel his passion.

Frequently Asked Questions (FAQs)

What is an AI?

Artificial intelligence (or “AI”) is a technology that enables computers and machines to mimic the way human beings think, function, and make decisions.

What does AI really do?

AI models use complex algorithms and draw on vast amounts of "learned" information to compute answers to user questions or generate content based on user prompts.

Is Siri an AI?

Siri is a simple form of AI that uses technologies like machine learning and speech recognition to understand and respond to human speech.

Is AI good or bad?

Like all technologies, AI isn’t inherently good or bad. It’s a tool that can be used for both positive and negative purposes.

What is the main goal of AI?

The main goal of AI is to enable computers to make smarter decisions, accomplish more difficult tasks, and learn from experience without constant human input.

Why was AI created?

AI was developed to help automate repetitive tasks, solve complex problems, and enable more advanced research and innovation across multiple different industries.

What are the risks of AI?

Some risks posed by AI include concerns about bias and fairness, privacy risks, cybersecurity risks, and the potential for job displacement.

Who made the AI?

Computer scientists Alan Turing (1912 – 1954) and John McCarthy (1927 – 2011) are usually considered to be the unofficial “fathers of AI.”

What is AI in real life?

There are many examples of AI in our daily lives, from software on the smartphone in your pocket to chatbots that respond to common customer service questions.

What is a simple AI?

Some examples of simple AIs include voice assistants like Siri or Alexa, smart search engines like Google, customer service chatbots, and even robot vacuum cleaners.

What are the disadvantages of AI?

Some disadvantages of AI include concerns about privacy and bias, cybersecurity risks, and the potential impact on jobs in certain fields.

What are 5 disadvantages of AI?

Depending on how it’s used, AI can cause job losses, generate misinformation, compromise private information, reduce creativity, and lead to over-dependence on technology.

How do I turn on AI?

Most AI tools can be turned on or off in the settings features of cellphones, computers, software applications, or websites.

Can I use AI for free?

Many AI tools like search engines or Alexa are completely free. Others, like ChatGPT, offer both free and paid options. Commercial-grade AI is usually paid-only.

Which AI is fully free?

Most AI companies offer free introductory versions of their tools, including Microsoft Copilot, Grammarly, Google Gemini, and ChatGPT.

What is the most popular AI app right now?

Currently, the most popular AI apps include ChatGPT, Google Maps, Google Assistant, Microsoft Copilot, and Google Gemini.

What is the AI writing app everyone is using?

Some of the most popular AI-assisted writing apps are Grammarly, ChatGPT, Writesonic, Jasper, and Claude.

What is the AI app everyone is using for free?

Google Gemini, Microsoft’s Copilot, and ChatGPT are all among the most widely used free AI apps right now.

What is the best AI chatbot right now?

The “best” chatbot depends on what you need it for, but some of the most popular AI chatbots include Perplexity, Google Gemini, Jasper, and ChatGPT.

What is an example of AI in daily life?

Common examples of AI in daily life include smartphones, AI search engines, client service chatbots, and digital voice assistants like Siri and Alexa.