By Nitesh Surana, David Fiser

In our previous article, we examined an information disclosure vulnerability found in one of the cloud agents used on compute instances (CIs) that were created in the Azure Machine Learning (AML) service. This vulnerability could let network-adjacent attackers disclose sensitive information from the CI remotely. In the third installment of this series about the security issues found in AML, we detail how an attacker could achieve stealthy persistence in an AML workspace.

Using Azure CLI from Compute Instances

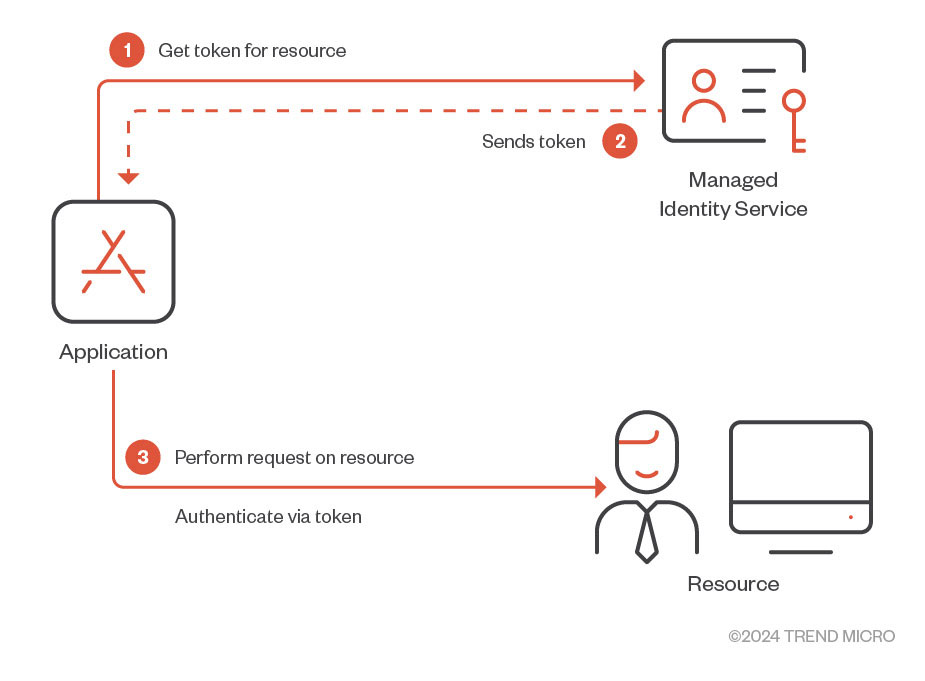

One of the issues that developers face while working with cloud services is credential management of access keys, secrets, passwords, and the like. Credentials being stored in code or in files on cloud resources increases risk of exposure in the event of a compromise. To circumvent this particular issue, Azure’s managed identities feature enables users to avoid storing credentials in code (Figure 1). Users and applications requiring access to other resources essentially fetch Entra ID tokens to gain access to a desired service.

Figure 1. Brief overview of how managed identities work

Managed identities support various services in Azure, including AML. In AML, users can give compute targets, such as CIs and compute clusters, system-assigned or user-assigned managed identities. To access other Azure services, role-based access control (RBAC) permissions can be manually assigned to a created managed identity using roles.

On CIs, one can use the Azure Command-Line Interface (CLI) to create and manage other Azure resources. One can work on Azure services (based on their RBAC permissions) by signing in using the Azure CLI as the CI owner or the assigned managed identity. To sign in as the assigned managed identity, one can run “az login –identity,” as shown in Figure 2.

Figure 2. Using Azure CLI to sign in as a managed identity

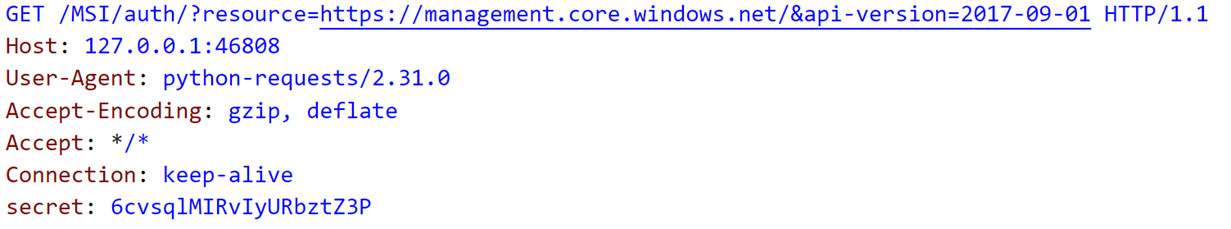

While inspecting the traffic generated upon running “az login --identity,” we came across the following request (Figure 3):

Figure 3. Traffic generated on running “az login --identity”

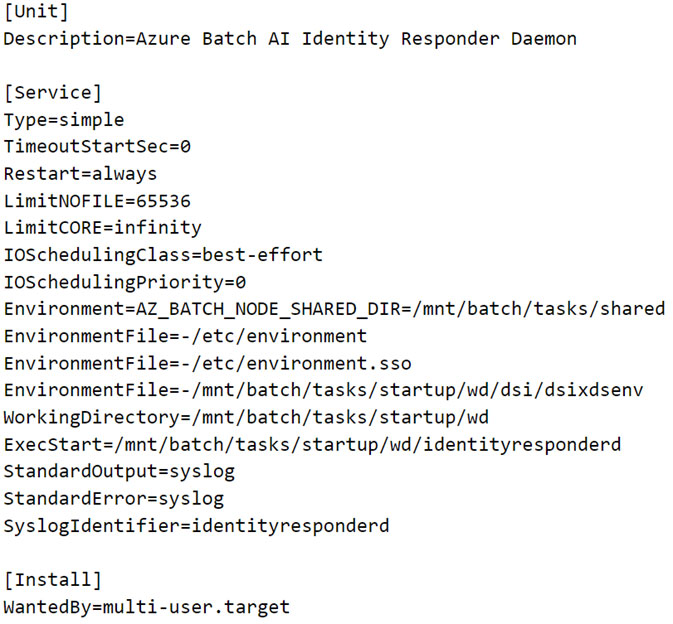

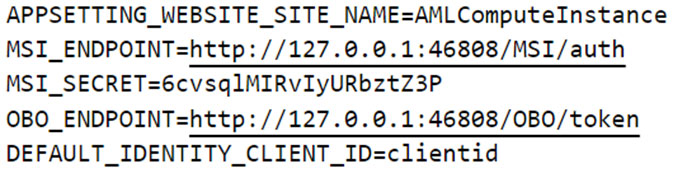

As Figure 3 shows, the request is sent to port 46808 on the local interface 127.0.0.1. Since we have access to the CI, we found that a process named “identityresponderd” listens on port 46808. This process runs as a daemon and is installed as a systemd service across all CIs created under AML. The service is called “AZ Batch AI Identity Responder Daemon.” While examining the service, we noticed that the service fetches its environment variables from certain files as defined in the “EnvironmentFile” parameter (Figure 4).

Figure 4. Service configuration of Azure Batch AI Identity Responder Daemon

The file /etc/environment.sso contains two environment variables named “MSI_ENDPOINT” and “MSI_SECRET” that are used in the GET request shown in Figure 5.

Figure 5. “MSI_ENDPOINT” and “MSI_SECRET” defined in “/etc/environment.sso”

However, we are still querying a local endpoint. While examining outbound communication of the binary, we found that the binary uses a combination of an x509 certificate and a private key to communicate with a public endpoint defined in “/mnt/azmnt/.nbvm” as an environment variable named “certurl” (Figure 6).

Figure 6. Contents of “/mnt/azmnt/.nbvm” with public endpoint defined in “certurl” key

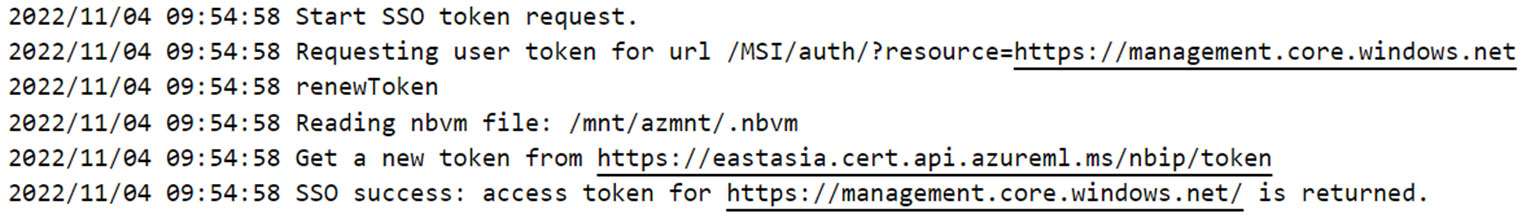

From the service configuration, we also saw that there are syslog entries observed for standard output and standard error file descriptors from the binary. To get an initial idea of what a service possibly does, one can use the generated logs. The endpoint defined in “certurl” is also observed in the syslog entries that are generated when “az login –identity” is run, as shown in Figure 7.

Figure 7. Syslog entries with the public endpoint defined in “certurl"

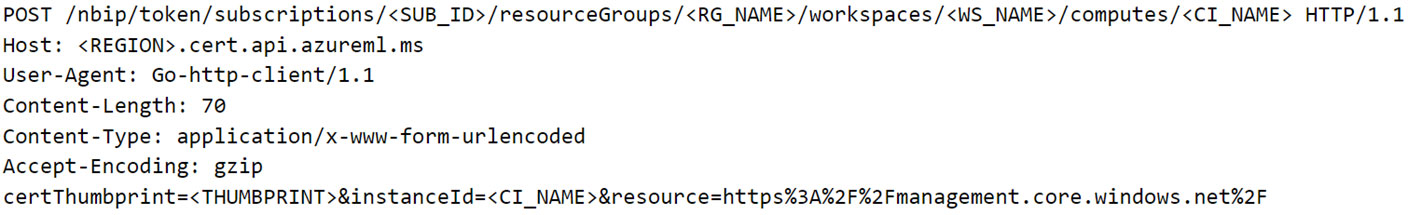

With this information, we crafted the final request that is sent to the public endpoint (Figure 8):

Figure 8. POST request to fetch Entra ID JWT using “identityresponderd”

In the response, we got the Entra ID JWT of the identity (Figure 9). This leads us to wonder if crafting an attack scenario wherein an attacker has exfiltrated the certificate and private key from a CI allows the attacker to fetch the Entra ID JWT of the CI’s assigned identity. Perhaps the assigned identity could even be fetched by an attacker running in a non-Azure environment. It was worth testing this scenario since the endpoint defined in the “certurl” environment variable could be successfully resolved from the internet.

Figure 9. Response containing the Entra ID JWT of the identity

However, upon trying to recreate the scenario of using the certificate and private key from the CI to fetch the Entra ID JWT, we got a 401 unauthorized response. Hence, our thought process and assumption were that the certificate and key are restricted for usage within the boundary of the CI. Additionally, the x509 certificate for every CI had a unique thumbprint.

We now explore why our finding was just an assumption as we probed the “dsimountagent” agent.

A closer look at “dsimountagent”

While examining the functionality of “dsimountagent,” we observed that the agent makes sure that the file share of the AML workspace’s Storage Account is mounted on the CI. As shown in Figure 10, it checks for the mount status every 120 seconds (about two minutes).

Figure 10. Main purpose of “dsimountagent” on a compute instance

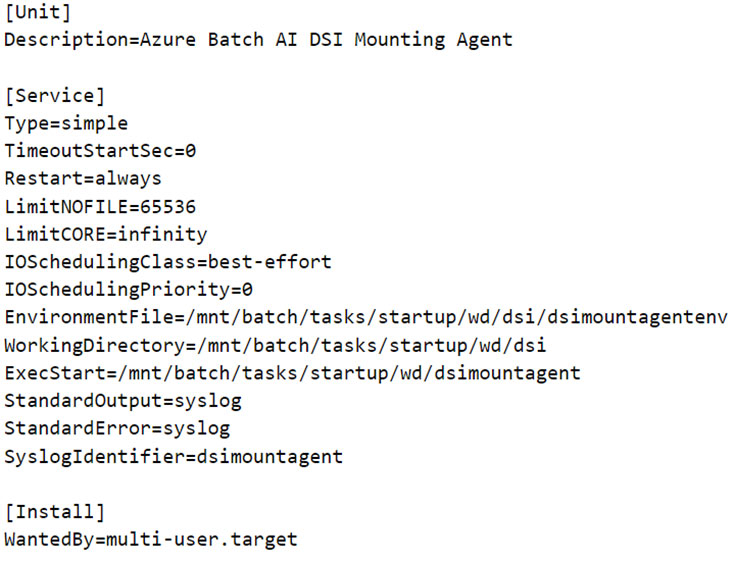

As we can observe in the systemd service config of the daemon (Figure 11), the agent fetches its configuration as environment variables from a file and, like “identityresponderd,” there are syslog entries for this service too. To mount the file share on the CI, the service fetches the AML workspace’s Storage Account’s access key from a public endpoint defined in an environment variable named “AZ_BATCHAI_XDS_ENDPOINT.” This key value pair is stored in a file named “dsimountagentenv” under the “/mnt/batch/tasks/startup/wd/dsi/” directory. Notably, in the first entry of this series, this file was previously observed to contain the Storage Account’s access key in clear text.

Figure 11. Service configuration of Azure Batch AI DSI Mounting Agent

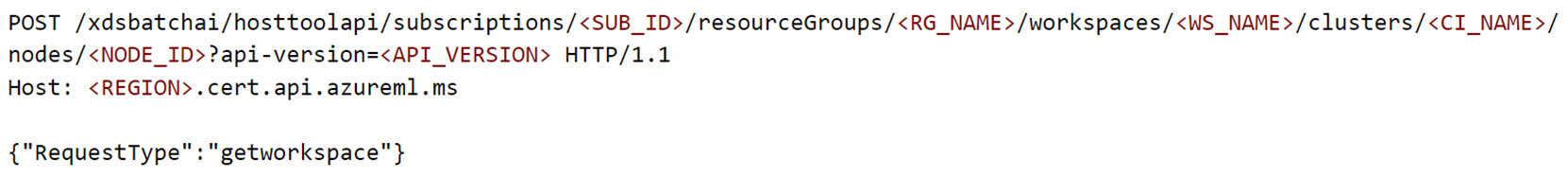

To communicate with the public endpoint, “dsimountagent” uses the same pair of x509 certificate and private key used by “identityresponderd.” While examining outbound requests to the public endpoint, we crafted the following POST request shown in Figure 12.

Figure 12. POST request to fetch workspace information using “dsimountagent”

For readability, a few headers are removed from the POST request in Figure 12. The response for this POST request contained the resource IDs for the Storage Account, key vault, container registry, application insights, and metadata about the workspace such as tenant ID, subscription ID, and whether the CI VNet is publicly reachable, among others. It is possible to think of this as the “whoami” of the AML workspace.

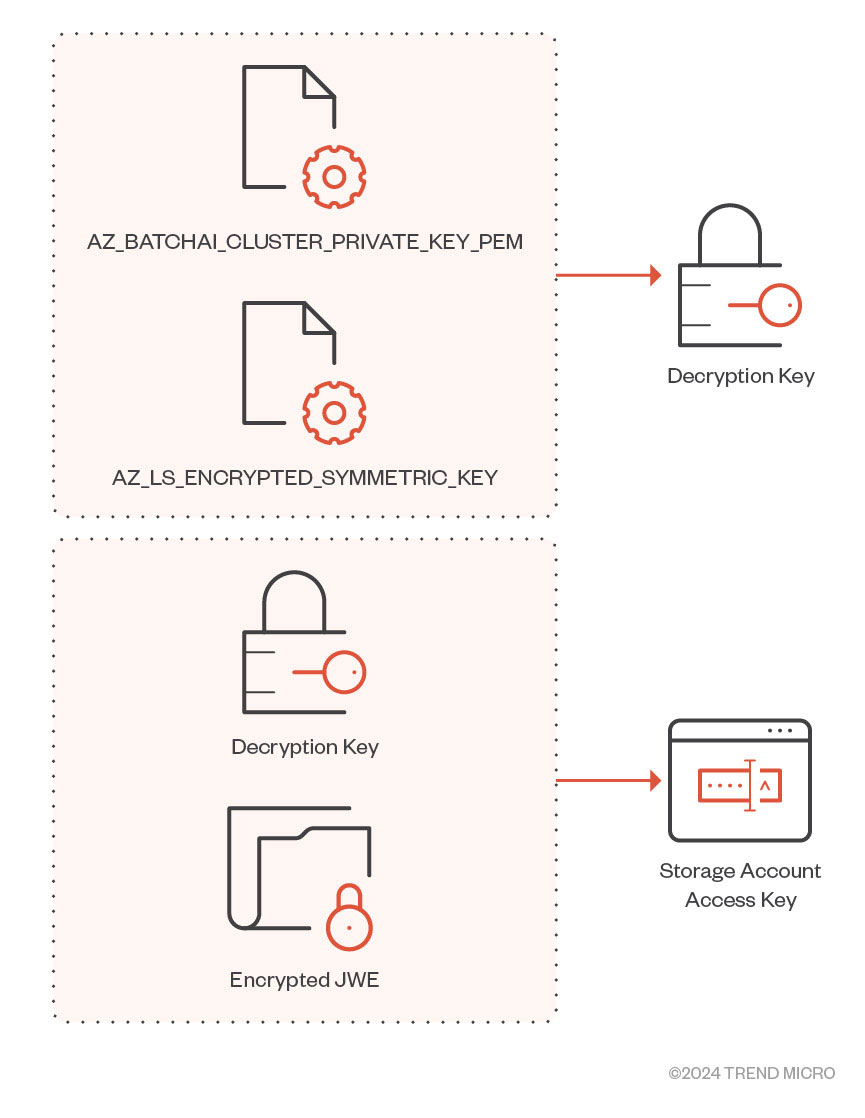

With the same request URI and endpoint, we came across another request where the “RequestType” key in the POST request’s body was set to “getworkspacesecrets.” The response contained the Storage Account name and an encrypted JWE of the Storage Account’s access key. The encrypted JWE is decrypted using two other environment variables named “AZ_LS_ENCRYPTED_SYMMETRIC_KEY” and “AZ_BATCHAI_CLUSTER_PRIVATE_KEY_PEM,” defined in the file responsible for populating environment variables for the daemon (Figure 13).

Figure 13. Decryption routine for Storage Account JWE

Since this request was also being performed from outside the CI using the compromised certificate and private key, an attacker post-compromise could fetch the Storage Account’s access key from outside the CI’s boundaries.

In case a Storage Account’s access key is leaked or compromised, users can invalidate the compromised key by rotating the key. However, we found that the certificate and key pair could be used to fetch the Storage Account access key, even after the access keys were rotated, as we see in this video:

For the two aforementioned cases, the body of the POST request is particularly interesting. Could there possibly be more candidates for the “RequestType” key? Since we had the “dsimountagent” binary (and a few more of such agents like “identityresponderd”), we investigated what else could be fetched using the certificate and private key combination.

Finding more “RequestType” candidates

The “dsimountagent” binary comes with debug symbols, as we noted in the second installment of this series. The functions corresponding to the “RequestType” values set to “getworkspace” and “getworkspacesecrets” were defined in a package called “hosttools.” These functions would, in turn, call another function named “generateXDSApiRequestSchema.” This function is responsible for creating the POST request’s body.

Additionally, since we had multiple agents other than “dsimountagent,” such as “identityresponderd,” we found some candidates while enumerating the cross references of functions calling “generateXDSApiRequestSchema,” which we list here:

- GetAADToken

- GetACRToken

- GetACRDetails

- GetAppInsightsInstrumentationKey

- GetDsiUpdateSettings

- GenerateSAS

As we can see, there were quite a few interesting candidates to go for. In the following section, we examine “GetAADToken.”

Fetching Entra ID token of an assigned managed identity

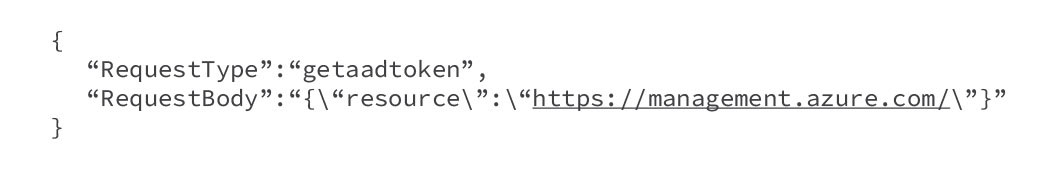

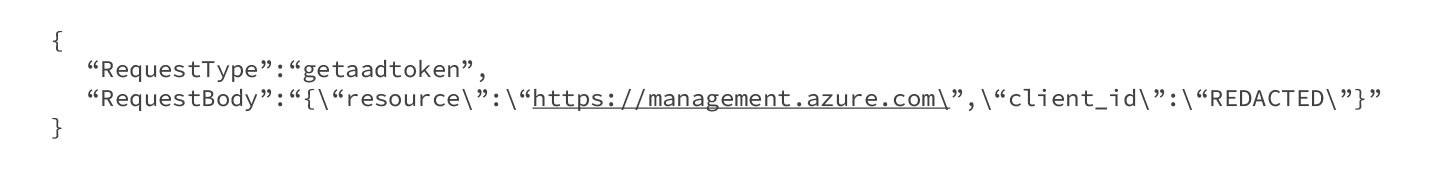

While examining the “GetAADToken” request type, we crafted the POST request body (Figure 14) to fetch a system-assigned managed identity.

Figure 14. POST request body to fetch a system-assigned managed identity Entra ID token

If there was a user-assigned managed identity, we would need to include the client ID, as shown in Figure 15.

Figure 15. POST request body to fetch a user-assigned managed identity Entra ID token

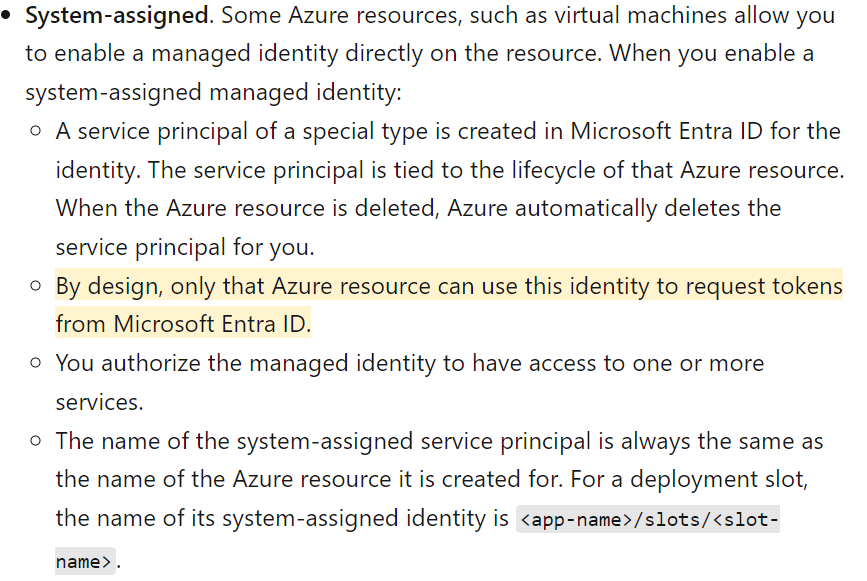

The response would contain the Entra ID JWT of the managed identity assigned to the CI. Since we could perform the request outside the CI and fetch JWTs of managed identities, this finding doesn’t quite go along with how managed identities have been designed, as seen in the documentation for managed identities (Figure 16).

Figure 16. Microsoft documentation of system-assigned managed identities

Managed identities are a way of keeping credentials off-code. It works in such a way that an application needing access to a certain resource will request a token from a managed identity service. The managed identity service is only reachable from the resource to which it has been assigned. For instance, if you assign a managed identity to an Azure virtual machine (VM), the managed identity service can only be reached from the Azure VM on a link-local unrouteable IP address like 169.254.169.254.

Previously, we found a similar issue in a serverless offering in Azure known as Azure Functions, wherein an attacker could leak the certificate from environment variables of the application container and use it to fetch the Entra ID JWT of the assigned managed identity outside the application container.

How do the generated logs look?

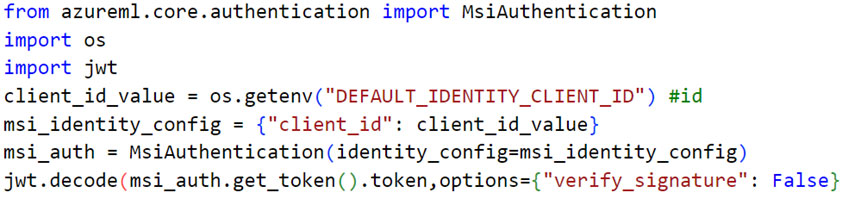

It goes without saying that cloud-native logs are paramount in performing detection and response for security incidents, compliance, auditing, and various other purposes. To examine the logs generated from a legitimate request (that is, from the CI) and a malicious request (that is, outside the CI using the certificate key pair as we detailed previously), we performed a procedure to fetch the Entra ID JWT of a system-assigned managed identity assigned to a CI:

Figure 17. Legitimate procedure to fetch Entra ID token of assigned managed identity

The malicious request would be from anywhere outside the CI. We used the certificate and private key to fetch the JWT, as we detailed previously. We found that the generated sign-in logs from a legitimate attempt and a malicious attempt were almost identical, with differences only in ID, correlation ID, and unique token identifier fields.

Additionally, one can’t know exactly where the request to fetch the token originated from since the generated logs don’t contain location specific indicators, like an IP address. Sign-in logs for managed identities don’t contain IP addresses — a known area of improvement in the logging aspect of the managed identity service — and this is quite likely because managed identity sign-ins are performed by resources that have their secrets managed by Azure. However, in our case we could use the credentials (certificate and key pair) outside the resource to which the managed identity was assigned.

To invalidate a certificate and key pair, one would have to delete the CI from the AML workspace. We didn’t find any documentation on how one could rotate the certificate and key pair. This might be because AML service is a managed service, and these credentials are managed by Microsoft. Additionally, the certificate would be valid for two years from the date of creation of the CI.

Disclosure timeline

We reported this vulnerability arising from differences in the APIs offered by the agents in AML CIs to Microsoft via Trend Micro’s Zero Day Initiative (ZDI). Here's the disclosure timeline for ZDI-23-1056:

- April 7, 2023 – ZDI reported the vulnerability to the vendor.

- April 11, 2023 – The vendor acknowledged the report.

- July 13, 2023 – ZDI asked for an update.

- July 19, 2023 – The vendor asked us to join a call to discuss the report.

- July 19, 2023 – ZDI joined the call and provided the vendor with additional details.

- July 20, 2023 – The vendor stated that it is considering this bug low severity and that it would release a fix in 30 to 45 days.

- July 20, 2023 – ZDI informed the vendor that the case is due on Aug. 5, 2023 and that we are publishing this case as a zero-day advisory on Aug. 9, 2023.

For Black Hat USA 2023, we had planned to share this research with the community. However, before the talk was recorded, we found that this vulnerability was reproducible and not fixed. We reached out to Microsoft, and they deemed the bug to be of a low severity. Later, upon Microsoft's request, we redacted the details of this bug although it was over the 90-day ZDI disclosure policy and was reproducible too.

Microsoft has recently confirmed that the vulnerability has been patched as of January 2024. Currently, the fix doesn’t allow the certificate key pair to be used outside the CI.

Conclusion

Credential management and its life cycle play a crucial role in cloud security. Safeguarding Storage Accounts in AML workspaces is of critical importance since the ML models, datasets, scripts, and logs, among other entities, are stored in file shares and blob containers. When an AML workspace is created, by default, the Storage Account is publicly accessible using the access key.

A recent example of how critical it is to safeguard Storage Accounts, particularly in AML, lies in the recent mitigation from the Microsoft Security Response Center (MSRC): An overly permissive Shared Access Signature (SAS) token was found to expose 38 terabytes of sensitive information from Microsoft AI research. Additionally, the SAS token allowed for write access to files in the Storage Account (meaning anyone with the URL could modify files on the Storage Account containing ML models). This could have resulted in a classic supply-chain attack on systems dependent on trusted repositories.

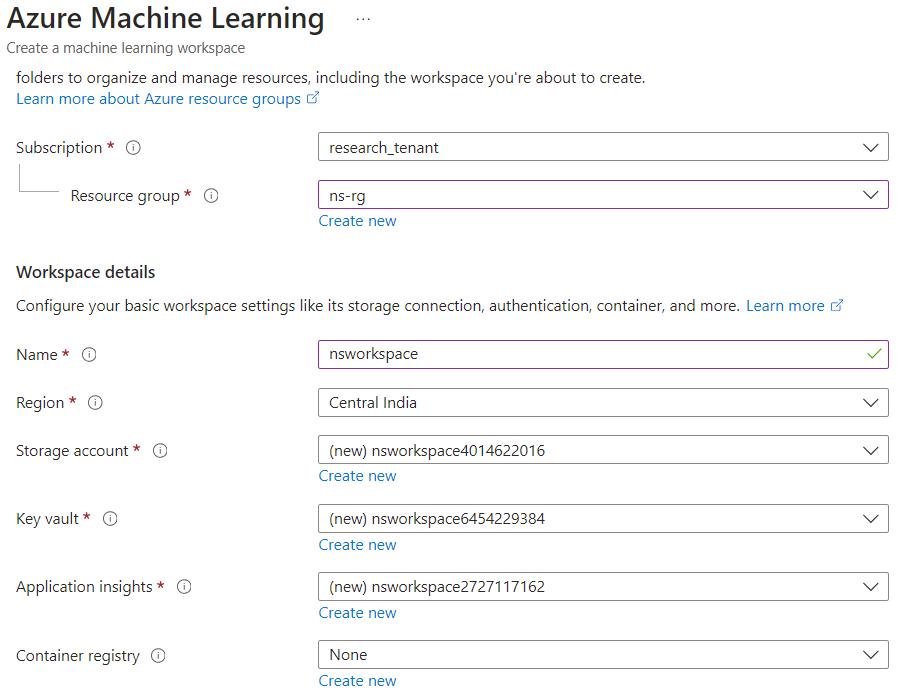

Based on our analysis of Storage Account naming convention and container names, the Storage Account of Microsoft AI research was likely to be from an AML workspace. When an AML workspace is created, the Storage Account has its name as the workspace name, followed by 10 random digits (Figure 18).

Figure 18. Storage Account naming convention observed while creating an AML workspace

Additionally, the container names beginning with “azureml” in the Storage Account “robustnessws4285631339” are specific to the AML service (Figure 19). When creating an AML workspace, similarly named containers are created by default in a Storage Account.

Figure 19. The Azure ML containers mentioned in the Storage Account “robustnessws4285631339”

Defenders can leverage various frameworks like the Threat Matrix for Storage Services and Azure Threat Research Matrix, which can help in assessing the security posture of their environments. Specifically for ML environments, one can use the MITRE ATLAS framework, a knowledge base of adversary tactics and techniques based on real-world attack observations and realistic demonstrations from AI red teams and security groups. There are specific case studies that shed light on real-world attacks targeting ML environments such as the PyTorch dependency confusion, among others.

Cloud practitioners should also follow an “assume breach” mindset to stay vigilant of unnoticed edge cases, as we detailed when discussing how credentials are sourced in on cloud resources. Following best practices helps reduce the risk of exposure, even when certain lines of defenses are breached. These practices include:

- Securing deployments by using VNets.

- Using minimal custom roles for identities.

- Following defense-in-depth practices.

Like it? Add this infographic to your site:

1. Click on the box below. 2. Press Ctrl+A to select all. 3. Press Ctrl+C to copy. 4. Paste the code into your page (Ctrl+V).

Image will appear the same size as you see above.

Ultime notizie

- They Don’t Build the Gun, They Sell the Bullets: An Update on the State of Criminal AI

- How Unmanaged AI Adoption Puts Your Enterprise at Risk

- Estimating Future Risk Outbreaks at Scale in Real-World Deployments

- The Next Phase of Cybercrime: Agentic AI and the Shift to Autonomous Criminal Operations

- Reimagining Fraud Operations: The Rise of AI-Powered Scam Assembly Lines

Complexity and Visibility Gaps in Power Automate

Complexity and Visibility Gaps in Power Automate AI Security Starts Here: The Essentials for Every Organization

AI Security Starts Here: The Essentials for Every Organization The AI-fication of Cyberthreats: Trend Micro Security Predictions for 2026

The AI-fication of Cyberthreats: Trend Micro Security Predictions for 2026 Stay Ahead of AI Threats: Secure LLM Applications With Trend Vision One

Stay Ahead of AI Threats: Secure LLM Applications With Trend Vision One