By Numaan Huq and Roel Reyes (Trend Micro Research)

Key takeaways:

- This article explores the concept of agentic edge AI, its architecture, and the different classes of devices. It also delves into the associated cybersecurity risks and corresponding mitigations.

- Different types of attacks, breaches, hijacking, exploitation, and threats can affect each layer of the architecture, leading to unwanted outcomes such as intellectual property (IP) theft, operational disruptions, loss of control, etc.

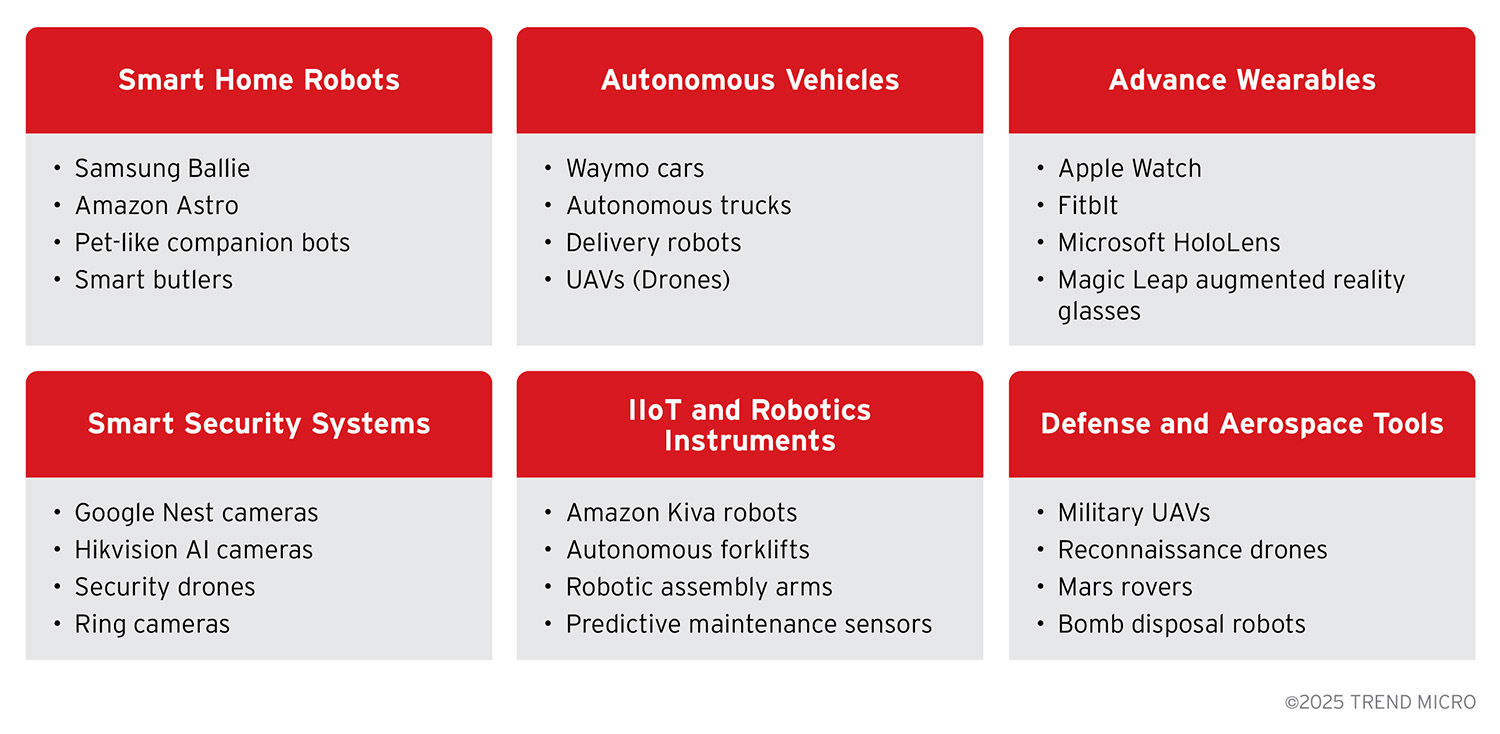

- Agentic edge AI devices include smart home robots, autonomous vehicles, advanced wearables, smart security systems, Industrial Internet of Things (IIoT) and robotics instruments, and defense and aerospace tools.

- Securing an agentic edge AI device requires a multi-layered approach. It is important to safeguard not only the code, but also the parts that give the system its autonomy, such as its sensors, learning pipeline, AI models, networks, and actuators.

As agentic AI continues to gain prominence, one of its facets calls for further examination: agentic edge AI. What exactly is it, and how can its architecture be described? What are the cybersecurity threats associated with this technology, and what are the mitigation strategies that can manage the risks?

Let’s start by exploring what agentic AI is. Large Language Model (LLM) research has been ongoing for over a decade, with notable releases such as BERT in 2018 and GPT-3 in 2020. OpenAI’s public release of ChatGPT (built on GPT-3.5) in 2022 brought conversational AI chatbots into mainstream awareness. AI was no longer a lab curiosity but a consumer-ready tool, and this milestone triggered a rapid innovation cycle.

In 2023, OpenAI introduced GPT-4V(ision), which could analyze images as well as text. Within a few months, all the leading AI chatbots started supporting some level of multimodality through text, image, audio, and video processing. AI could now “see”, “hear”, and “speak”, as well as perform more advanced actions such as calling application programming interfaces (APIs) and invoking tools.

As multimodality became the norm, attention shifted to autonomous agency. By late 2024, we saw the rise of agentic AI: systems that not only reason over multimodal inputs, but can also plan, decide, and execute multi-step tasks. Today, the term agentic has become popular in AI discussions, but not all systems labelled as agentic are truly agentic. To clarify what qualifies as agentic, Trend Micro published an article exploring the concept and concluded that a truly agentic system should be goal-oriented, context-aware, capable of multi-step reasoning, action-driven, and self-improving.

What is Agentic Edge AI?

Advances in AI hardware and edge computing, such as low-power central processing units (CPUs), graphics processing units (GPUs), neural processing units (NPUs), and embedded sensor stacks, have enabled a nascent but rapidly growing class of intelligent devices that can operate with goal-directed autonomy even when cloud connectivity is poor or absent. These AI-powered devices run their core perception–reasoning–actuation loop locally (“on the edge”) and can act as independent agents in their operating environment (homes, factories, vehicles, wearables, etc.).

Unlike traditional Internet of Things (IoT) devices that typically offload data processing to the cloud, these devices keep latency-critical decision-making local, allowing them to respond in real time and continue functioning offline. Thus, agentic edge AI is the fusion of an agentic software architecture with edge-class hardware, where the cloud augments rather than replaces on-device autonomy.

- Agentic aspect – Inside every agentic edge AI device is an orchestrator, a reasoning engine (likely running a compact, lightweight, or small language model with a smaller memory footprint and lower energy use) that receives high-level goals from the user, deconstructs those goals into multiple tasks, and delegates each task or subtask to one or more specialized agents.

Each agent is a self-contained program, possibly AI-powered, that can invoke tools such as a vision model, web client, actuators, etc., to sense, decide, and act.

Under the orchestrator’s watch, these agents together form an adaptive workflow that can solve complex tasks with minimal human intervention, choosing both what to do next and how to do it. Continual self-improvement (on-device learning) is advantageous but not mandatory for a system to qualify as agentic.

- Edge aspect – All time-critical tasks such as perception, decision-making, and actuation run on the device’s on-board CPUs, GPUs, or NPUs, enabling the system to react in real time and continue functioning even when cloud connectivity is poor or absent.

Cloud services are optionally used for heavyweight analytics and inference, large-scale model training, and fleet-level coordination – opportunistic accelerants, not single points of failure.
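The orchestrator-and-agents pattern described above can be sketched in a few lines of Python. This is a hypothetical illustration only: the `plan()` lookup table stands in for the on-device language model, and the agent names and subtasks are invented for the example rather than taken from any real product.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical agents: each is a self-contained callable that handles one kind of subtask.
def vision_agent(task: str) -> str:
    return f"vision: located target for '{task}'"

def navigation_agent(task: str) -> str:
    return f"navigation: path executed for '{task}'"

def projector_agent(task: str) -> str:
    return f"projector: displayed content for '{task}'"

AGENTS: Dict[str, Callable[[str], str]] = {
    "vision": vision_agent,
    "navigation": navigation_agent,
    "projector": projector_agent,
}

def plan(goal: str) -> List[Tuple[str, str]]:
    """Stand-in for the on-device language model: maps a goal to (agent, subtask) pairs.
    A fixed lookup table is used purely for illustration."""
    plans = {
        "show the recipe in the kitchen": [
            ("navigation", "move to kitchen"),
            ("vision", "find a clear wall"),
            ("projector", "project recipe on wall"),
        ],
    }
    return plans.get(goal, [])

def orchestrate(goal: str) -> List[str]:
    """Decompose a high-level goal and delegate each subtask to its specialized agent."""
    results = []
    for agent_name, subtask in plan(goal):
        agent = AGENTS.get(agent_name)
        if agent is None:
            results.append(f"skipped: no agent for '{subtask}'")
            continue
        results.append(agent(subtask))
    return results
```

In a real device, the plan would come from the compact language model and each agent would wrap a real tool (a vision model, a motion planner, a projector driver). Keeping the agent registry explicit also makes it easy to restrict which agents the orchestrator may invoke.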

Agentic edge AI devices are only now appearing commercially, and their range of offerings is expected to grow as edge-optimised AI chips and compact models mature. A recent example of an agentic edge AI device is Samsung’s Ballie, which is expected to be released in the US market this year.

Figure 1. Samsung Ballie home robot (Photo by Chris Welch / The Verge – source: https://www.theverge.com/2025/1/6/24337478/samsung-ballie-robot-release-date-features-2025)

Ballie is a small rolling robot designed as a smart home companion. It is equipped with cameras, light detection and ranging (LiDAR), microphones, and a built-in projector.

According to Samsung, “Ballie will use Gemini multimodal capabilities along with proprietary Samsung language models to process and understand a variety of inputs, including audio and voice, visual data from its cameras, and sensor data from its environment”.

This agentic edge AI setup enables Ballie to perceive its surroundings, process information locally, and perform autonomous actions inside the home. For example, Ballie can patrol the home, adjust lighting or control appliances, follow the owner, and project messages and videos on different surfaces.

Ballie embodies the “agentic” aspect by interacting with its environment proactively, and the “edge” aspect by using on-board computing for real-time responses.

Another interesting product in the smart home robot category is the Roborock Saros Z70, a robotic vacuum with a foldable five-axis arm that uses dual cameras and sensors for mapping, object recognition, and gentle gripping. It can learn up to 50 objects and autonomously move obstacles away, so it can clean blocked areas.

Other notable products include Hengbot’s Sirius Robotic Dog and the Temi 3 Personal Assistant Robot.

Even though there are differences in applications and design, these devices share common features: they process data locally, thus reducing latency and cloud reliance, act autonomously in dynamic and unstructured environments, and can integrate with smart home/IoT ecosystems to perform various functions.

Multi-Layered Architecture for Agentic Edge AI Systems

All agentic edge AI devices will implement some variant of a multi-layered AI architecture that distributes intelligence across the device and the cloud. This layered design allows the system to separate real-time on-device functions from heavier cloud-assisted tasks, achieving a balance between autonomy and connectivity.

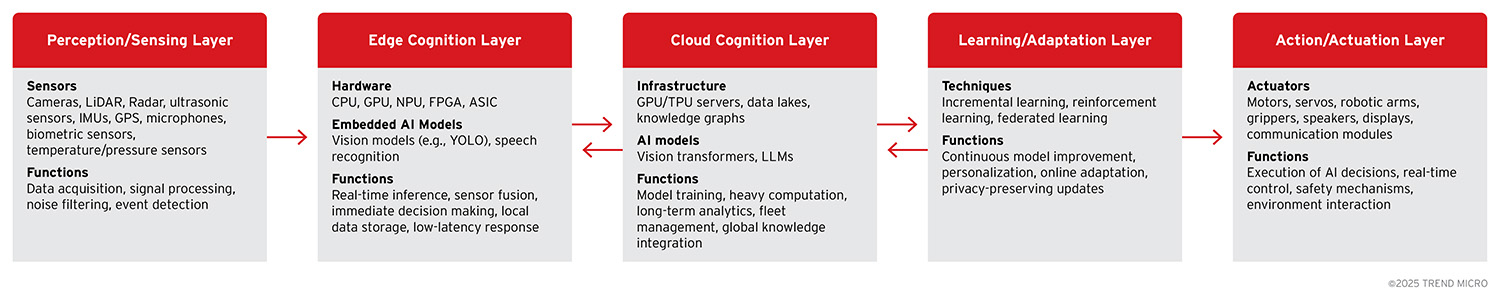

Figure 2. Five-layer architecture for Agentic Edge AI systems

In this section, we explore the core layers that make up a multi-layered agentic edge AI architecture:

- Perception/Sensing Layer – interfaces with the physical world to collect raw data

- Edge Cognition Layer – the on-device brain that interprets sensor data and makes immediate decisions

- Cloud Cognition Layer – cloud-based analytics or coordination that augments the device’s intelligence

- Learning/Adaptation Layer – mechanisms for improving the AI model over time, either on-device or via over-the-air (OTA) updates

- Action/Actuation Layer – the physical or digital outputs through which the device interacts with its environment

Perception/Sensing Layer

The perception layer is the “sensory system” of our agentic edge AI device, responsible for observing the environment and converting it into data for processing.

It interfaces with the physical world through an array of sensors: red, green, and blue (RGB) and infrared (IR) cameras; depth sensors such as LiDAR; ultrasonic range finders; radar; inertial measurement units (IMUs); global positioning system (GPS) receivers; microphones; thermometers; and so on. These onboard sensors continuously capture raw signals from the device’s surroundings.

The perception layer also includes low-level firmware and drivers that read sensor hardware and perform initial signal processing. This can include analog-to-digital conversion, noise filtering, normalization or calibration of sensor readings, and simple event detection. For example, in a home robot like Ballie, the camera and LiDAR serve as electronic eyes for detecting people and obstacles in real time.

By performing these low-level operations close to the sensor, the system reduces redundant data and filters out noise before passing information upstream.
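The low-level steps described above can be sketched as a small processing chain for a stream of range readings: a linear calibration from raw analog-to-digital converter (ADC) counts to meters, a moving-average noise filter, and a threshold-based event detector. The gain, offset, window size, and threshold are hypothetical values chosen for illustration; production devices would usually run such logic in embedded firmware, but Python keeps it easy to read.

```python
from collections import deque
from typing import Deque, Iterable, List

def preprocess(raw: Iterable[float], gain: float = 0.01, offset: float = -0.05,
               window: int = 3, near_threshold_m: float = 0.5) -> List[bool]:
    """Calibrate raw ADC counts to meters, smooth them with a moving average,
    and flag an 'obstacle near' event when the smoothed range drops below a threshold.
    gain, offset, window, and near_threshold_m are hypothetical calibration values."""
    buf: Deque[float] = deque(maxlen=window)
    events: List[bool] = []
    for count in raw:
        meters = count * gain + offset               # calibration: linear gain and offset
        buf.append(meters)
        smoothed = sum(buf) / len(buf)               # noise filtering: moving average
        events.append(smoothed < near_threshold_m)   # simple event detection
    return events
```

Because the event is raised on the smoothed value, a single noisy spike does not trigger it, while a sustained drop in range does. Only the boolean events, not every raw sample, then need to be passed upstream.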

In summary, this layer provides the “eyes and ears” of the agentic edge AI device; it delivers a stream of observations (images, audio, depth perception, etc.) to the next layer for interpretation.

Edge Cognition Layer

The edge cognition layer is the on-device intelligence that immediately processes sensor data and decides how the agent should respond. This layer is essentially the “local brain” of the device, executing AI algorithms such as computer vision, sensor fusion, path planning, etc., in real-time at the edge.

By handling interpretation and decision-making on the device itself, the edge cognition layer enables low-latency responses and continued autonomy even if connectivity to the cloud is lost. For example, if Ballie detects an obstacle in its path, the edge cognition layer will immediately decide to turn or stop without needing cloud assistance.

This local processing is mandatory for safety-critical decisions or interactive behaviours where even a few hundred milliseconds of cloud latency would be unacceptable. The edge cognition layer runs on the device’s compute hardware – typically a CPU combined with accelerators like GPUs, NPUs, or Field Programmable Gate Arrays (FPGAs) for AI workloads.

It runs a variety of AI models and logic: these include object detection networks, speech recognition models, sensor fusion algorithms (Kalman filters, etc.), and control policies. Because it operates within tight computational and energy constraints, the AI models are optimized (quantized, pruned) for efficiency.
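As an illustration of the sensor-fusion algorithms mentioned above, here is a minimal one-dimensional Kalman filter that fuses a sequence of noisy range measurements into a smoothed estimate with an explicit uncertainty. It assumes a constant-position model with hypothetical process noise `q` and measurement noise `r`; real perception stacks typically use multi-dimensional variants (for example, extended Kalman filters over position, velocity, and orientation).

```python
from typing import List, Tuple

def kalman_1d(measurements: List[float], q: float = 1e-3, r: float = 0.25,
              x0: float = 0.0, p0: float = 1.0) -> List[Tuple[float, float]]:
    """Scalar Kalman filter with a constant-state model.
    q: process noise variance, r: measurement noise variance (both assumed values).
    x0, p0: initial estimate and its variance.
    Returns (estimate, variance) after each measurement update."""
    x, p = x0, p0
    history = []
    for z in measurements:
        # Predict: the state is assumed unchanged; uncertainty grows by the process noise.
        p = p + q
        # Update: blend prediction and measurement using the Kalman gain k.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1 - k) * p
        history.append((x, p))
    return history
```

Each step costs a handful of floating-point operations, which is why filters of this kind fit comfortably within the tight compute and energy budget of the edge cognition layer.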

The edge cognition layer is responsible for the device’s real-time autonomy: it must react within milliseconds or seconds. As a result, only tasks that meet strict latency requirements are kept here, while more compute-intensive or non-urgent analysis can be deferred to the cloud layer.

A well-designed device will never rely on the cloud for immediate, safety-critical decisions (for example, a self-driving car braking for an obstacle), because network delays or outages could be potentially disastrous if the edge cannot handle the situation alone.
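The edge-first rule above can be sketched as a simple routing policy: safety-critical tasks, and any task whose deadline is shorter than the expected cloud round trip, always stay on the device, while the rest are offloaded only when the cloud is reachable. The `Task` fields, task names, and the 150 ms round-trip figure are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    deadline_ms: float             # latest acceptable completion time
    safety_critical: bool = False

def route(task: Task, cloud_rtt_ms: float = 150.0, cloud_available: bool = True) -> str:
    """Return 'edge' or 'cloud' for a task (hypothetical policy).
    Safety-critical or latency-bound work never depends on the network."""
    if task.safety_critical:
        return "edge"
    if not cloud_available or task.deadline_ms <= cloud_rtt_ms:
        # Degrade gracefully: handle (or queue) locally when offline or when the deadline is tight.
        return "edge"
    return "cloud"
```

Note that the safety check comes first, so a safety-critical task stays local even if it has a generous deadline and the cloud is available.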

In summary, the edge cognition layer gives the agent its independence and quick reflexes, executing core AI behaviors on the spot.

Cloud Cognition Layer

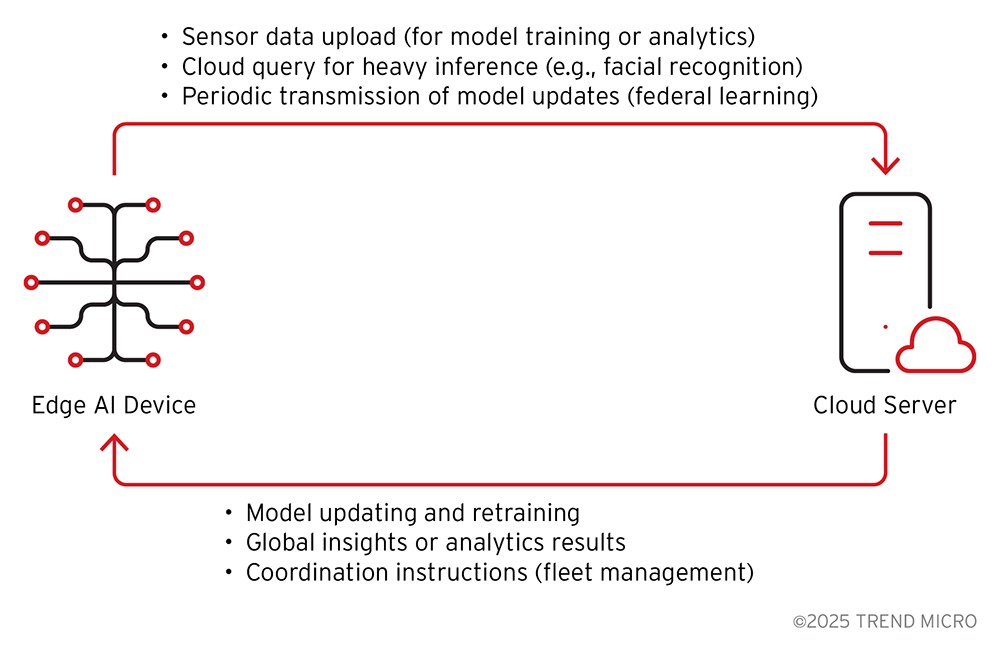

Figure 3. Interaction flow between Edge and Cloud

The cloud cognition layer provides off-device computation and deep intelligence that augments the edge’s capabilities. It is the “big brain in the cloud” that edge devices tap into when they need heavy lifting. Computationally intensive tasks, large-scale data analysis, or tasks requiring a global view beyond the device’s local perspective are offloaded to the cloud.

The cloud also aggregates data and insights from many edge devices and uses this aggregated data to retrain deep learning models. Cloud AI services might include large-scale storage for aggregated device data, large pretrained models (such as giant transformer networks for vision or natural language processing (NLP)) that are impractical to run on the edge device, and coordination services for fleets of devices.

Cloud cognition functions include model training and updates (periodically retraining AI models on fresh data from the field), heavy analytics (e.g., searching a month of security camera footage for anomalies), and providing global context to the agentic edge AI device.

For example, an industrial agentic edge AI system will periodically upload sensor logs to a cloud service that analyzes machine performance trends across an entire factory. Cloud cognition enhances the agent’s intelligence beyond on-device limitations; it is used for tasks that can tolerate the extra latency of network communication. Time-critical control loops remain on the edge, while the cloud handles background processing, knowledge aggregation, and big-picture planning.

In essence, the cloud layer acts as an external knowledge base or higher-level planning center for the agent. It serves in a supportive role instead of controlling every move – the agentic edge AI device remains operational even without constant cloud input.
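The supportive, non-blocking role of the cloud can be illustrated with a store-and-forward telemetry buffer: the device always logs locally and uploads opportunistically when a connection is available. This is a minimal sketch; the `send` callable is a hypothetical transport (for example, a wrapper around an HTTPS or MQTT client) and the buffer size is arbitrary.

```python
import itertools
from collections import deque
from typing import Callable, Deque, List

class TelemetryUploader:
    """Store-and-forward buffer: the device keeps working offline and uploads
    logs when the cloud is reachable. `send` is a hypothetical transport that
    returns True on a successful upload."""

    def __init__(self, send: Callable[[List[dict]], bool], max_records: int = 1000) -> None:
        self.send = send
        # Bounded buffer: when full, the oldest records are discarded first.
        self.buffer: Deque[dict] = deque(maxlen=max_records)

    def log(self, record: dict) -> None:
        """Record locally; never blocks on the network."""
        self.buffer.append(record)

    def flush(self, batch_size: int = 100) -> int:
        """Upload buffered records in batches; keep them if an upload fails."""
        sent = 0
        while self.buffer:
            batch = list(itertools.islice(self.buffer, batch_size))
            if not self.send(batch):
                break  # offline or error: retry on the next flush
            for _ in batch:
                self.buffer.popleft()
            sent += len(batch)
        return sent
```

The design choice here is that a failed upload leaves the device's behavior unchanged; only the analytics backlog grows, and the bounded buffer caps how much memory that backlog can consume.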

Learning/Adaptation Layer

This layer allows the agentic edge AI device to improve itself over time and adapt to new scenarios. The edge device uses the learning/adaptation layer to update its AI models, personalize them to its user or environment, and incorporate new learnings.

The learning may happen on-device (if computationally feasible) and/or in the cloud, and may be done continuously or periodically. This learning/adaptation layer turns the edge device from a fixed-function system into an evolving one that becomes smarter with use.

For example, an AI-powered home robot can learn a home’s layout and the family’s daily routines, improving navigation and predictive assistance over time. The learning layer consists of algorithms and data pipelines for model training or fine-tuning. If the device has sufficient computational resources, it can perform on-device training.

More commonly, learning involves a cloud component: devices send aggregated model updates or data summaries to the cloud, where the heavy retraining is done, and then updated models are distributed back to all devices.

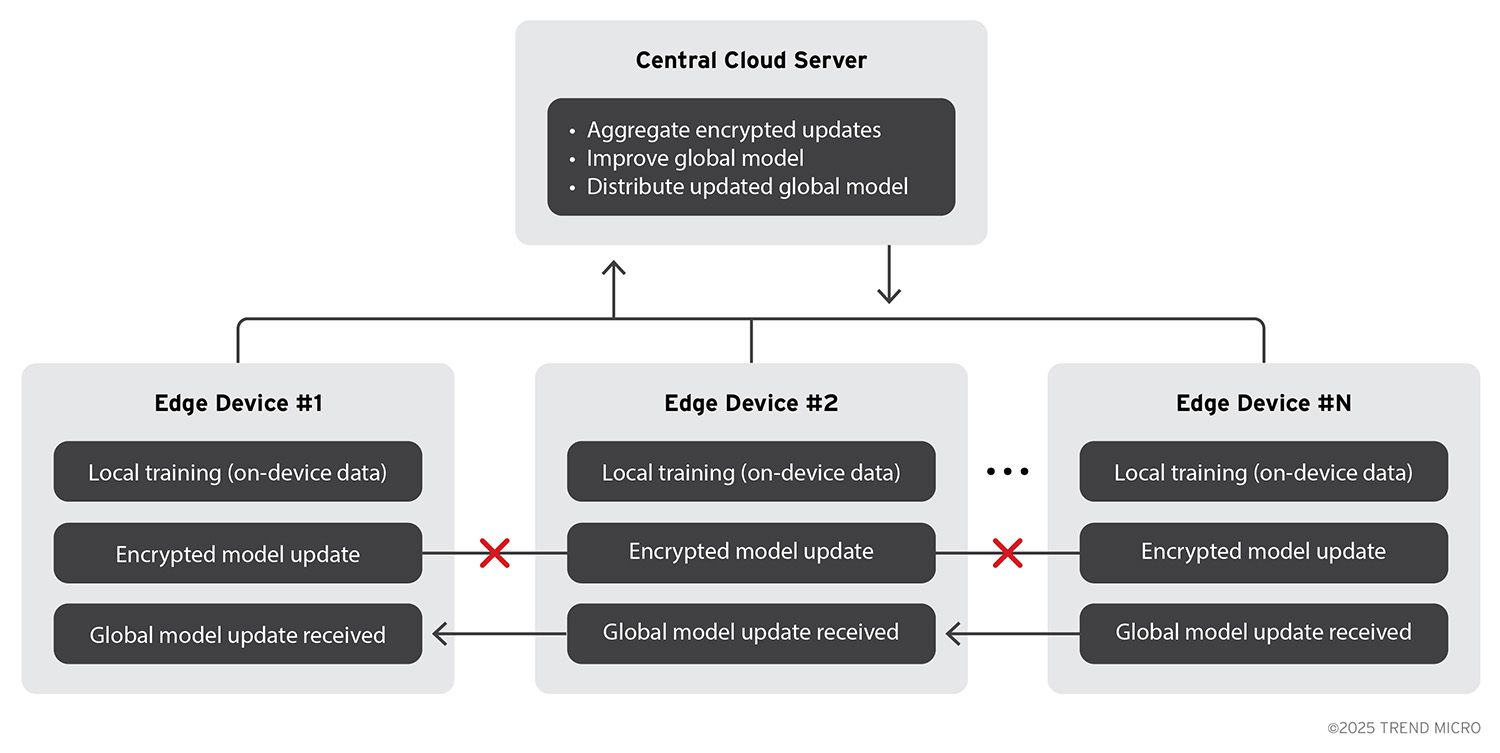

Figure 4. Federated learning process for Agentic Edge AI devices using the Cloud

A popular method for this is federated learning, where each device trains locally on its own data and only uploads model parameter updates (not raw data) to a central server that merges them into a global model. This preserves user privacy while still improving the model collectively.
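The server-side merge step can be sketched with the federated averaging (FedAvg) rule: the global model is a weighted average of the client models, with each client weighted by how many local training samples it used. Parameters are plain Python lists here, and the example values are hypothetical; because all clients start from the same global model, averaging client parameters is equivalent to averaging their updates.

```python
from typing import List, Sequence

def fed_avg(client_weights: Sequence[Sequence[float]],
            client_sizes: Sequence[int]) -> List[float]:
    """Federated averaging: merge client model parameters into a global model,
    weighting each client by its number of local training samples.
    Only parameters are shared; raw data never leaves the devices."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    merged = [0.0] * dim
    for weights, n in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            merged[i] += w * n / total
    return merged
```

For example, `fed_avg([[1.0, 2.0], [3.0, 4.0]], [1, 3])` gives approximately `[2.5, 3.5]`, since the second client contributed three times as much data.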

Other methods in this layer include reinforcement learning (an agent refining its policy by trial-and-error feedback) and continuous cloud-based learning (like updating anomaly detectors or classifiers as new data comes in). This learning/adaptation layer ensures long-term autonomy and flexibility for agentic edge AI devices.
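Trial-and-error policy refinement can be illustrated with the simplest reinforcement-learning setting, an epsilon-greedy multi-armed bandit. In this hypothetical example, a home robot chooses among three actions (say, three candidate cleaning times) and learns which one yields the highest simulated reward. Real devices would use richer state and would constrain exploration for safety.

```python
import random
from typing import Callable, List

def epsilon_greedy(reward_fn: Callable[[int], float], n_actions: int,
                   steps: int = 2000, epsilon: float = 0.1, seed: int = 0) -> List[float]:
    """Learn estimated action values by trial and error.
    With probability epsilon, explore a random action; otherwise exploit the best estimate."""
    rng = random.Random(seed)
    values = [0.0] * n_actions   # running average reward per action
    counts = [0] * n_actions
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.randrange(n_actions)
        else:
            a = max(range(n_actions), key=lambda i: values[i])
        r = reward_fn(a)
        counts[a] += 1
        values[a] += (r - values[a]) / counts[a]   # incremental mean update
    return values
```

The `epsilon` parameter controls the trade-off between trying new behavior and exploiting what has already been learned.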

Action/Actuation Layer

This layer executes the agent’s decisions in the physical or digital world. It’s the output end of the perception-action loop: where sensors bring data in, actuators send commands out. In a physical agent such as a robot or an autonomous vehicle, the actuation layer controls motors, servos, wheels, robotic arms, or other mechanisms to move and interact with the environment.

In a purely software agent, such as an edge AI system managing network traffic, actuation occurs via API calls or control signals to other systems. For most agentic edge AI devices, this layer has real-time control – it runs hardware that must respond promptly and safely to the AI’s decisions. These could be motor drivers and controllers, servo control loops, power electronics, or communication interfaces to external actuators.

In Samsung’s Ballie, the actuation layer would include the motors that make Ballie roll around, the mechanism controlling its built-in projector (for projecting images or videos), its speaker, and any IR blaster used to send signals to appliances.

This layer is also designed with redundancy and safety in mind: for example, multiple braking circuits in a self-driving car, or limiters to prevent a robotic arm from moving too fast. Motion-control subsystems are expected to comply with safety-of-motion standards such as ISO 13849-1 (safety-related parts of control systems for machinery), ISO 10218-1/-2 (industrial robot safety), and for road vehicles, ISO 26262 (functional safety of automotive E/E systems).
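The limiters mentioned above can be sketched as a command filter between the planner and the motor driver: it clamps the requested velocity to a hard maximum and bounds how much the command may change per control cycle (an acceleration limit). The limits and cycle time are hypothetical, and this sketch is not a substitute for the safety-rated implementations that the cited standards call for.

```python
def limit_command(requested: float, previous: float, v_max: float = 0.5,
                  a_max: float = 1.0, dt: float = 0.01) -> float:
    """Clamp a velocity command (m/s) to +/- v_max and limit its change to
    a_max * dt per control cycle. v_max, a_max, and dt are hypothetical limits."""
    # Hard speed limit, applied regardless of what higher layers request.
    v = max(-v_max, min(v_max, requested))
    # Rate limit: bound the step from the previous command (acceleration limit).
    max_step = a_max * dt
    return max(previous - max_step, min(previous + max_step, v))
```

Even if a compromised planner requests 5 m/s, the output ramps up by at most 0.01 m/s per cycle and never exceeds 0.5 m/s.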

For certain high-stakes applications in defense and law enforcement, this layer will incorporate human decision points. There are two common ways to implement them:

- Human-in-the-loop architecture is needed where critical actions, such as weapon discharge or use of force, require explicit human authorization, even if targeting is handled autonomously.

- In a human-on-the-loop architecture, the system can act autonomously, but human operators retain veto power and situational awareness.

Both of these human-centric architectural choices are designed to insert legal, ethical, and operational checkpoints that prioritize human judgement in cases that involve irreversible or potentially harmful actions.
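The key difference between the two oversight patterns is the default outcome, which a gate in front of the actuation layer can make explicit. In this hypothetical sketch, a human-in-the-loop action runs only after explicit approval, while a human-on-the-loop action runs unless an operator vetoes it. The `approve` and `veto` callbacks and the action names are assumptions for illustration.

```python
from enum import Enum
from typing import Callable

class Oversight(Enum):
    IN_THE_LOOP = "in"   # explicit authorization required before acting
    ON_THE_LOOP = "on"   # autonomous, but a human may veto

def gate(action: str, mode: Oversight,
         approve: Callable[[str], bool], veto: Callable[[str], bool]) -> bool:
    """Return True if the action may be executed.
    approve(action): operator explicitly authorizes (human in the loop).
    veto(action): operator objects during the review window (human on the loop)."""
    if mode is Oversight.IN_THE_LOOP:
        return approve(action)   # default-deny: no action without a human "yes"
    return not veto(action)      # default-allow: act unless a human says "no"
```

Choosing default-deny for irreversible actions means that a lost operator link results in inaction rather than an unsupervised action.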

The performance of the actuation layer (speed, precision, and reliability) directly impacts how effectively the agent can carry out its goals in the real world. A delay or fault in actuation can nullify the decisions made by the cognition layers.

To mitigate this, agentic devices will implement low-level control loops, such as PID controllers for motor motion, to ensure smooth and stable execution of high-level commands.
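A minimal discrete PID controller of the kind mentioned above is shown below, with output saturation and a simple anti-windup rule (the integral is only accumulated while the output is not saturated). The gains, time step, and the first-order motor model used in the usage example are hypothetical.

```python
from typing import Optional

class PID:
    """Discrete PID controller: u = Kp*e + Ki*integral(e) + Kd*de/dt, clamped to [u_min, u_max]."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float,
                 u_min: float = -1.0, u_max: float = 1.0) -> None:
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.u_min, self.u_max = u_min, u_max
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        # No derivative on the first call, when there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate_integral = self.integral + error * self.dt
        u = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        if self.u_min <= u <= self.u_max:
            # Anti-windup: only keep integrating while the output is not saturated.
            self.integral = candidate_integral
        return max(self.u_min, min(self.u_max, u))
```

Usage against a toy motor model (hypothetical: speed changes by `dt * (-speed + 2*u) / 0.5` per step) drives the speed to the 1.0 setpoint:

```python
pid = PID(kp=1.0, ki=2.0, kd=0.0, dt=0.01)
speed = 0.0
for _ in range(1000):
    u = pid.update(1.0, speed)
    speed += 0.01 * (-speed + 2.0 * u) / 0.5
```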

In summary, the actuation layer allows the AI to do something tangible: moving through an environment, manipulating objects, or influencing digital systems based on the insights from all previous layers.

Multi-Layered Architecture as a Markov Chain

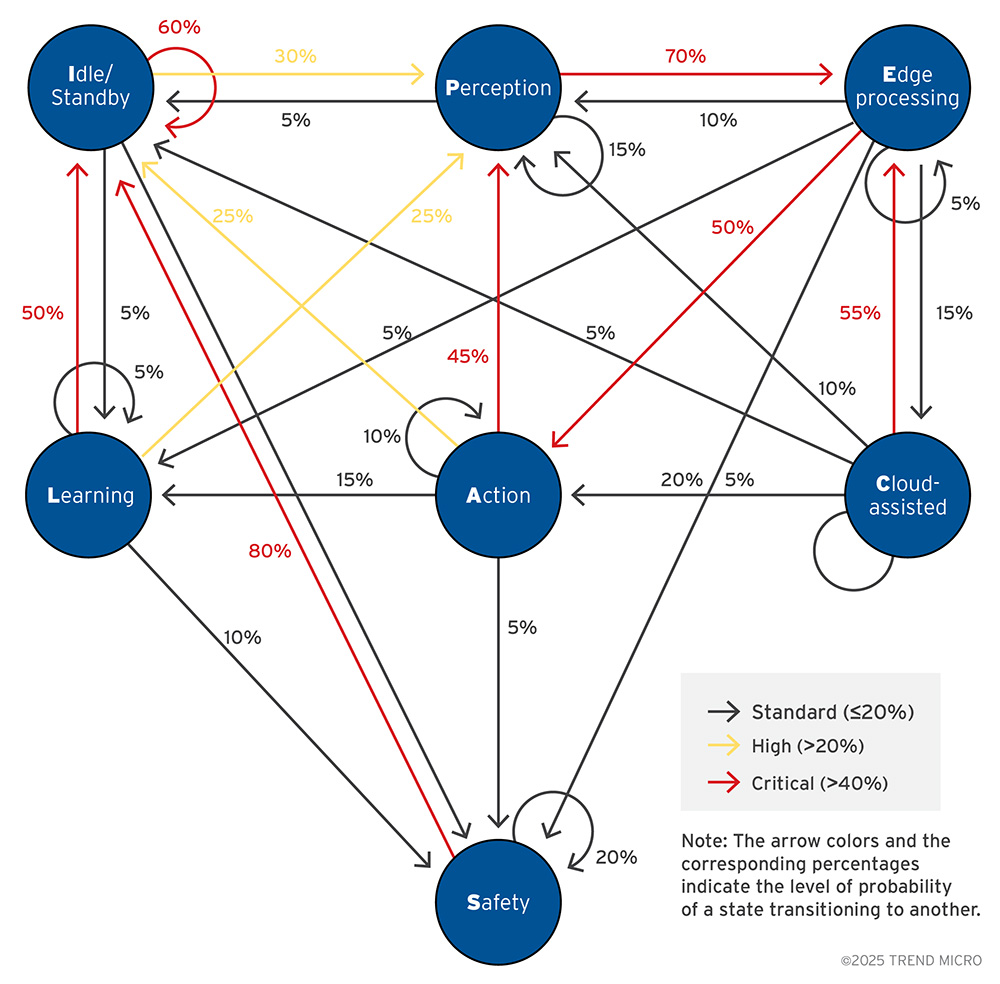

We modeled the multi-layered agentic edge AI architecture introduced above as a Markov chain:

Figure 5. Agentic edge AI multi-layered architecture presented as a Markov chain

This chain treats the device as always being in exactly one operational state:

- Idle/Standby

- Perception

- Edge Processing

- Cloud-assisted

- Action

- Learning, or

- Safety

From Idle/Standby, there’s a strong chance the device stays put, but it frequently wakes into Perception to sample the environment.

Perception most often hands off to Edge processing for fast, on-device inference; from there, the common outcomes are either moving straight to Action (local decision and actuation) or briefly offloading to Cloud assist for heavier analytics before returning to Action.

After Action, the system typically either returns to Perception to re-observe and re-process, or goes back to Idle/Standby.

Learning represents background adaptation (e.g., fine-tuning, personalization) that periodically returns to Idle/Standby, and Safety is a non-absorbing detour (e.g., fault handling, failsafe posture) that usually resolves back to Idle/Standby after checks complete.

This diagram can be read in two ways: (1) the dominant path, a high-probability local loop (Perception → Edge processing → Action → Perception/Idle) that matches an edge-first, cloud-assist design; and (2) rare but important branches: occasional Edge processing → Cloud-assisted calls, periodic Learning, and a Safety detour that reduces risk without trapping the system.

Because each state’s next step depends only on where it is at the moment, we can estimate things like time spent in the tight loop versus cloud calls, expected frequency of safety interventions, or the share of cycles consumed by background learning, without needing to model every sensor reading.
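To make these estimates concrete, the following sketch computes the chain's stationary distribution, the long-run share of time spent in each state, by power iteration. The transition probabilities are hypothetical placeholders, not the values in Figure 5; each row sums to 1.

```python
from typing import Dict, List

STATES: List[str] = ["Idle", "Perception", "Edge", "Cloud", "Action", "Learning", "Safety"]

# Hypothetical transition probabilities P[from][to]; each row must sum to 1.
P: Dict[str, Dict[str, float]] = {
    "Idle":       {"Idle": 0.60, "Perception": 0.30, "Learning": 0.05, "Safety": 0.05},
    "Perception": {"Edge": 0.90, "Idle": 0.05, "Safety": 0.05},
    "Edge":       {"Action": 0.75, "Cloud": 0.15, "Perception": 0.05, "Safety": 0.05},
    "Cloud":      {"Action": 0.90, "Edge": 0.05, "Safety": 0.05},
    "Action":     {"Perception": 0.60, "Idle": 0.35, "Safety": 0.05},
    "Learning":   {"Idle": 0.80, "Learning": 0.20},
    "Safety":     {"Idle": 0.90, "Safety": 0.10},
}

def stationary(P: Dict[str, Dict[str, float]], states: List[str],
               iters: int = 10_000, tol: float = 1e-12) -> Dict[str, float]:
    """Power iteration: repeatedly apply pi <- pi * P until the distribution stops changing."""
    for s in states:
        if abs(sum(P[s].values()) - 1.0) > 1e-9:
            raise ValueError(f"row {s} must sum to 1")
    pi = {s: 1.0 / len(states) for s in states}   # start from a uniform distribution
    for _ in range(iters):
        nxt = {s: 0.0 for s in states}
        for s, p_s in pi.items():
            for t, p in P[s].items():
                nxt[t] += p_s * p
        if max(abs(nxt[s] - pi[s]) for s in states) < tol:
            return nxt
        pi = nxt
    return pi
```

With these placeholder numbers, the Cloud state's long-run share is necessarily smaller than the Edge state's, because Cloud can only be entered from Edge processing. Substituting transition frequencies measured from a real device's telemetry would yield the actual estimates described above.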

Classes of Agentic Edge AI Devices

Agentic edge AI encompasses a broad range of device types and application domains. Here we identify six key classes of agentic edge AI devices, and explore their features, use cases, and design. Each class leverages the multi-layered architecture we discussed, though with different ratios between edge and cloud, and varying primary objectives.

Figure 6. Agentic Edge AI device examples by domain

| CLASS |
|---|
| Smart Home Robots |
| Autonomous Vehicles |
| Advanced Wearables |
| Smart Security Systems |
| IIoT and Robotics Instruments |
| Defense and Aerospace Tools |

These six classes of devices demonstrate the versatility of agentic edge AI, from home companions to mission-critical industrial and aerospace platforms. While each category has a different edge vs. cloud balance tailored to its latency, safety, and privacy requirements, they all share the same core: on-device perception, local reasoning, goal-driven autonomy, and the capacity to learn over time.

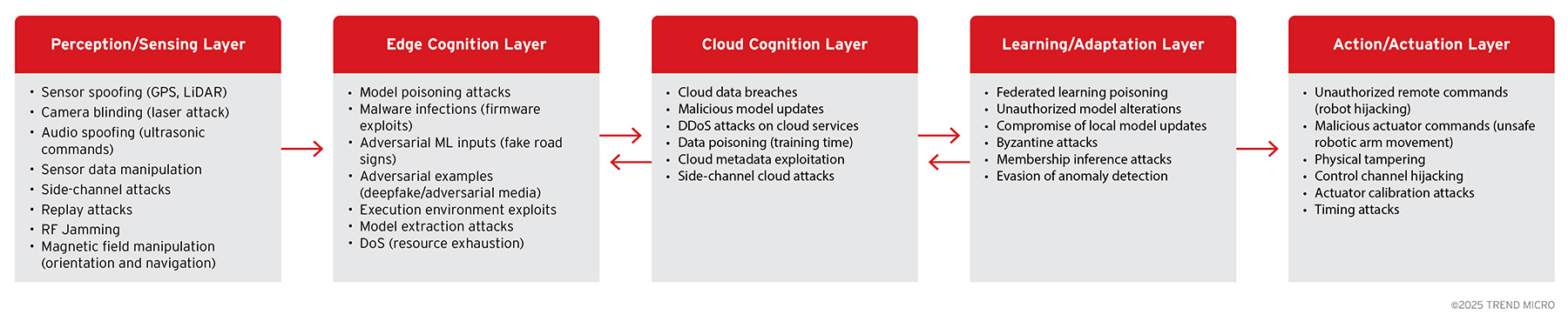

Cybersecurity Threats to the Multi-Layered Architecture

With greater autonomy come significant security challenges. Agentic edge AI devices expand the attack surface compared to traditional IoT devices because they combine complex software, hardware sensors and actuators, and network connectivity. A malicious actor might attempt to confuse the device’s sensors by feeding them false data, compromise its on-device AI, intercept or alter its cloud communications, or poison the learning process.

Ensuring security for these agentic edge AI devices requires a holistic approach covering each layer of the architecture.

In the following diagram and table, we explore a collection of potential attacks against each layer to get a sense of the expanded attack surface that agentic edge AI devices may encounter.

Figure 7. Cybersecurity attack vectors by Architectural layer

| Layer | Threat | Description |
|---|---|---|
| Perception / Sensing | Sensor spoofing (GPS, LiDAR) | Attacker transmits false GPS or LiDAR signals so the device believes it is elsewhere or “sees” phantom objects, leading to wrong navigation or collision-avoidance decisions. |
| | Camera blinding (laser attack) | High-intensity laser light saturates image sensors, creating white-out or dead pixels that leave the vision stack effectively blind. |
| | Audio spoofing (ultrasonic commands) | Malicious ultrasonic tones outside human hearing can trigger hidden voice commands, silently instructing the agent to perform unintended actions. |
| | Sensor-data manipulation | Compromised firmware alters raw sensor readings (e.g., scaling depth values) so higher layers reason over bogus data. |
| | Side-channel attacks | Eavesdropping on electromagnetic or power emissions could reveal private sensor data (e.g., reconstructing what a camera sees from its power signature). |
| | Replay attacks | Previously recorded “safe” sensor streams are injected so the device thinks conditions are unchanged while the real environment evolves. |
| | Radio Frequency (RF) jamming | Broad-spectrum electronic noise blocks wireless sensors and links such as radar or Wi-Fi, forcing the system into fallback or degraded modes. |
| | Magnetic-field manipulation | External magnets or coils distort magnetometer/IMU readings, corrupting orientation estimates used by SLAM and other navigational systems. |
| Edge Cognition | Model poisoning attacks | An attacker with OTA update access swaps the on-device model parameters, altering behavior (e.g., ignoring a specific object class). |
| | Firmware exploits | Exploiting kernel or real-time operating system (RTOS) bugs allows an adversary to run code with root privileges, granting full control over AI pipelines and stored data. |
| | Adversarial machine learning (ML) inputs | Carefully crafted physical objects (like stickers on a stop sign) cause vision models to misclassify, leading to unsafe driving decisions. |
| | Adversarial examples | Malicious audio or video crafted for deception fools face recognition or speech systems into accepting bogus identities. |
| | Execution-environment exploits | Buffer overflows or privilege-escalation flaws in AI runtimes (such as TensorRT or ONNX Runtime) allow attackers to escape sandboxes and tamper with AI inference. |
| | Model-extraction attacks | Repeated API calls or side-channel timing let an attacker approximate proprietary on-device AI models, stealing IP or enabling tailored attacks. |
| | Denial-of-service | Flooding the CPU or GPU with junk inference requests starves critical control loops, causing latency spikes or safety shutdowns. |
| Cloud Cognition | Cloud data breaches | Cloud servers that aggregate telemetry or video are compromised, exposing sensitive user environments and model IP. |
| | Malicious model updates | A hijacked update channel (through a supply chain attack) pushes tampered weights to thousands of devices, instantly inserting a backdoor fleet-wide. |
| | Distributed Denial-of-Service (DDoS) attacks on cloud services | DDoS knocks the orchestration backend offline; devices that over-rely on cloud APIs lose key functionality. |
| | Training-time data poisoning | Attackers inject maliciously crafted data into cloud training pipelines (for instance, federated learning), biasing future models toward unsafe outputs. |
| | Cloud metadata exploitation | Misconfigured buckets, logs, or identity and access management (IAM) roles reveal device credentials or network topologies, which can be exploited for lateral movement. |
| | Side-channel cloud attacks | Co-resident virtual machines (VMs) monitor cache or power patterns to infer proprietary model parameters or user data processed on multi-tenant GPUs. |
| Learning / Adaptation | Federated-learning poisoning | A set of rogue devices uploads manipulated model updates, so the aggregated global model adopts attacker-chosen behaviors. |
| | Unauthorized model alterations | Fine-tuned model weights are edited, silently degrading performance or embedding covert triggers. |
| | Compromise of local model updates | A man-in-the-middle (MitM) attack swaps a valid incremental update with a malicious one before it is verified by the device. |
| | Byzantine attacks | Multiple colluding rogue nodes supply inconsistent updates that destabilize or slow collective learning while evading simple anomaly checks. |
| | Membership-inference attacks | The attacker queries an adaptive model to determine whether specific private data points were used in training, leaking personal information. |
| | Model-inversion attacks | The attacker analyzes the adaptive model’s outputs, gradients, or logits to reconstruct sensitive training data (such as private images or sensor traces) that was never meant to leave the device or cloud, leaking personal or proprietary information. |
| | Evasion of anomaly detection | Attack traffic is tuned to stay below learned thresholds, gradually corrupting models without triggering security monitors. |
| Action / Actuation | Unauthorized remote commands (robot hijacking) | Stolen credentials or protocol flaws let attackers remotely drive, steer, or reposition the robot. |
| | Malicious actuator commands | Injected trajectories make robotic arms exceed safe speed or torque, risking human injury or equipment damage. |
| | Physical tampering | Cutting wires, inserting shim boards, or flipping dual in-line package (DIP) switches bypasses electronic safeguards and alters actuator response. |
| | Control-channel hijacking | Controller area network (CAN), universal asynchronous receiver-transmitter (UART), or pulse-width modulation (PWM) lines are probed and overwritten, so genuine control packets are ignored and attacker commands take precedence. |
| | Actuator-calibration attacks | Small tweaks to calibration constants cause gradual positional drift, degrading task accuracy or creating hidden safety hazards. |
| | Timing attacks | Precisely timed signal injections exploit race conditions in control loops, making the device jerk or stall at critical moments. |

Mitigation Strategies

Securing an agentic edge AI device means defending not only its code, but also its sensors, learning pipeline, AI models, networks, and actuators – every component that gives the system autonomy. Compromise at any layer can cascade up or down, so we need to adopt a layered, defense-in-depth mindset.

Here we present a set of mitigation strategies and best practices to keep agentic edge AI devices safe and their operations reliable. Keep in mind that this is an ever-changing threat landscape, where the threat vectors are evolving, and the defenses also need to evolve to keep pace with the threats.

- Secure boot and signed firmware – enforce cryptographic verification of every software image, preventing malware injection, firmware rollbacks, or unauthorised model swaps.

- Hardware root of trust (RoT) and trusted platform module (TPM) – store keys and verify runtime integrity so attackers cannot tamper with boot chain, calibration data, or model weights.

- End-to-end encryption (TLS1.3/QUIC) with mutual authentication – protects all device↔cloud, device↔app, and intra-robot control links against eavesdropping, replay, and command injection.

- Zero-trust network posture – default-deny firewalls, rate-limiting, and strong API keys for every remote command or update channel, minimising hijack surface.

- Redundant, cross-checked sensors – fuse camera, LiDAR, IMU, radar, etc., and flag outliers to defeat spoofing, jamming, replay, RF or magnetic-field manipulation.

- Optical and acoustic hardening – laser filters, auto-exposure clamping, microphone frequency-gating, and ultrasonic noise detectors block camera-blinding and hidden-command attacks.

- Adversarial-robust ML practices – train models with adversarial examples and perform input sanitization (e.g., confidence thresholds, sanity checks) to resist fake road signs, deepfakes, or crafted anomalies.

- Runtime sandboxing and least-privilege execution – containerise perception and planning modules; if one is exploited, the attacker cannot pivot to kill-chain actuators or sensitive data.

- Resource quotas and watchdog timers – throttle CPU/GPU/NPU, memory, and input/output (I/O) per process; restart or degrade gracefully when usage anomalies signal DoS or runaway tasks.

- Encrypted model and data storage – lock down on-device weights, logs, and telemetry with advanced encryption standard (AES) and hardware-accelerated sealing to defeat model extraction or side-channel snooping.

- Code-signing and staged model rollout – require signatures plus batch deployment to catch malicious or corrupted model updates before fleet-wide release.

- Secure, audited update pipeline – use software bill of materials (SBOMs), reproducible builds, and Continuous Integration (CI) attestations so only vetted binaries and learning artefacts reach devices.

- Federated-learning defenses – secure aggregation, update-clipping, anomaly scoring, and Byzantine-resilient aggregation to thwart poisoning, membership inference, or gradient hijack.

- Anomaly detection and behavioural monitoring – continuously watch sensor statistics, model outputs, and actuator commands; freeze or alert on deviations from learned baselines.

- Manual and automated failsafe – physical stop buttons, hardware interlocks, software kill-switches, and safe-state fall-back when integrity checks fail or comms drop.

- Human oversight protocols for critical actions – implement mandatory human authorization/override checkpoints for high-stakes decisions, especially in defense/security applications, ensuring that autonomous capabilities augment instead of replace human judgement in ethically or legally sensitive operations.

- Tiered access controls and multi-factor authentication (MFA) – separate credentials for local maintenance, cloud admin, and user apps; enforce multi-factor and rotate keys to stop credential theft.

- Tamper-evident, sealed enclosures – disable unused debug ports, use intrusion sensors, conformal coatings, and secure screws to deter physical probing or board swaps.

- Safety-constrained actuator firmware – hard-coded motion limits, calibration checksum checks, and dual-channel drive commands prevent unsafe speeds, directions, or torque even if higher layers are compromised.

- Regular penetration testing and red-team exercises – simulate sensor spoofing, DoS, and model attacks; feed findings into a continuous hardening cycle.

- Privacy-first data governance – store only necessary telemetry, apply differential privacy where viable, and honour user-controlled privacy modes to reduce data breach impact.
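As an illustration of the federated-learning defenses listed above, the following sketch combines two standard robust-aggregation techniques: each client update is clipped to a maximum L2 norm, and the server takes the coordinate-wise median instead of the mean. As long as fewer than half of the clients are malicious, each coordinate of the result stays within the range of the honest clients' values. The clipping bound and example updates are hypothetical; production systems would pair this with secure aggregation and anomaly scoring.

```python
import math
import statistics
from typing import List, Sequence

def clip_update(update: Sequence[float], max_norm: float) -> List[float]:
    """Scale an update down so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = 1.0 if norm <= max_norm else max_norm / norm
    return [x * scale for x in update]

def robust_aggregate(updates: Sequence[Sequence[float]], max_norm: float = 1.0) -> List[float]:
    """Clip every client update, then take the coordinate-wise median,
    which ignores a minority of extreme values instead of averaging them in."""
    clipped = [clip_update(u, max_norm) for u in updates]
    return [statistics.median(coords) for coords in zip(*clipped)]
```

With three honest updates near `[0.1, 0.2]` and one attacker sending `[50.0, -50.0]`, a plain mean would move the first coordinate above 12, while `robust_aggregate` keeps it at about 0.11.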

Implementing these layered defense mitigation strategies turns each potential vulnerability into a controlled, monitored, and recoverable condition – preserving the autonomy, safety, and trustworthiness of Agentic Edge AI systems and devices.
