Real threats, real TTPs, real resilience

We replicate the same tactics, techniques, and procedures (TTPs) that threat actors use in real incident response cases. Instead of generic scenarios, you face authentic adversary behaviors that target organizations, including their AI and LLM applications. It's the most relevant and effective way to assess your resilience.

Tailored attacks, proven defenses

We don’t just simulate generic threats; we replicate tailored attacks. Demonstrating how attacks move through your defenses exposes hidden detection gaps. The result: precise testing that enables faster rule tuning, sharper response, and measurable improvements in your security posture.

Exceed compliance with risk-aligned testing

Regulatory frameworks like DORA, NIS2, and NIST demand realistic, threat-led testing. Our red and purple teaming services align with these standards, delivering risk-based assessments that go beyond compliance. With deep knowledge of your environment and tooling, we help you meet regulatory requirements while building a stronger, more proactive security posture ready for evolving threats.

Red Teaming

Red Teaming is a full-scope, threat-led engagement that mimics real attackers. From social engineering to exploiting exposed attack surfaces, we test your defenses with the same techniques potential adversaries would use, aiming to reach crown-jewel assets without detection. Red Team testing could cut security incidents by as much as 25% and reduce associated costs by as much as 35% while improving your security posture.*

Outside-in realism

We work just like real attackers: from the outside. That means replicating real-world entry vectors, whether phishing, exposed infrastructure, or stolen credentials, to test your perimeter defenses without shortcuts or pre-arranged access.

Full kill chain

Our process follows the full attack lifecycle, from initial compromise to lateral movement, privilege escalation, domain takeover, and data exfiltration, exactly as demonstrated in real-world IR cases.

Expose gaps through covert testing

The engagement is covert by design: only the White Team is informed. This ensures authenticity when testing your detection, escalation, and response capabilities under real-world pressure.

How it works

We start with tailored scoping to define objectives, threat models, and rules of engagement. We then run multi-phase adversarial simulations. Throughout the operation, we keep the White Team up to date via an out-of-band communication channel. When the engagement ends, you’ll receive a detailed report outlining our methods, the TTPs we used, and prioritized, actionable recommendations.

Planning

Attack simulation

Daily collaboration

Reporting and recommendations

Purple Teaming

Ideal for organizations ready to collaborate, Purple Teaming blends offensive and defensive actions to improve detection and response. An analyst works alongside your Blue Team, guiding real-time detection in Trend Vision One and providing continuous feedback between teams to create a hands-on collaborative experience in an assumed breach scenario.

Hands-on learning

Your SOC team partners with our offensive experts in a hands-on, collaborative environment. As we emulate attacker behavior, your defenders gain real-time insight into unfolding threats and how to stop them.

Fast-track your security posture

In just five days, you’ll see improvements to your team’s detection and response methods using assumed breach scenarios modeled on threats you’re likely to face.

Trend Vision One integration

We integrate with the Trend Vision One platform and your existing security stack. This allows us to co-develop detections, fine-tune alert logic, and visualize attacker movement for maximum detection coverage.

Key service steps:

Planning

An exploratory call to discuss launch dates, team structure, scope, and engagement instructions, typically held 14 days before the engagement.

Collaboration

Daily calls between our Purple Team and your Blue Team to share knowledge and collaborate.

Learning

Your team will become skilled in Trend Vision One capabilities, incident response, and threat hunting.

Insight

We’ll uncover issues, errors, misconfigurations, and “open doors,” and share them with your security team once testing is underway.

Red Teaming for Gen AI and LLM

Trend’s dedicated red teaming for GenAI and LLMs challenges your AI applications’ security and aligns assessments with OWASP Top 10 risks to help you mitigate real-world threats.

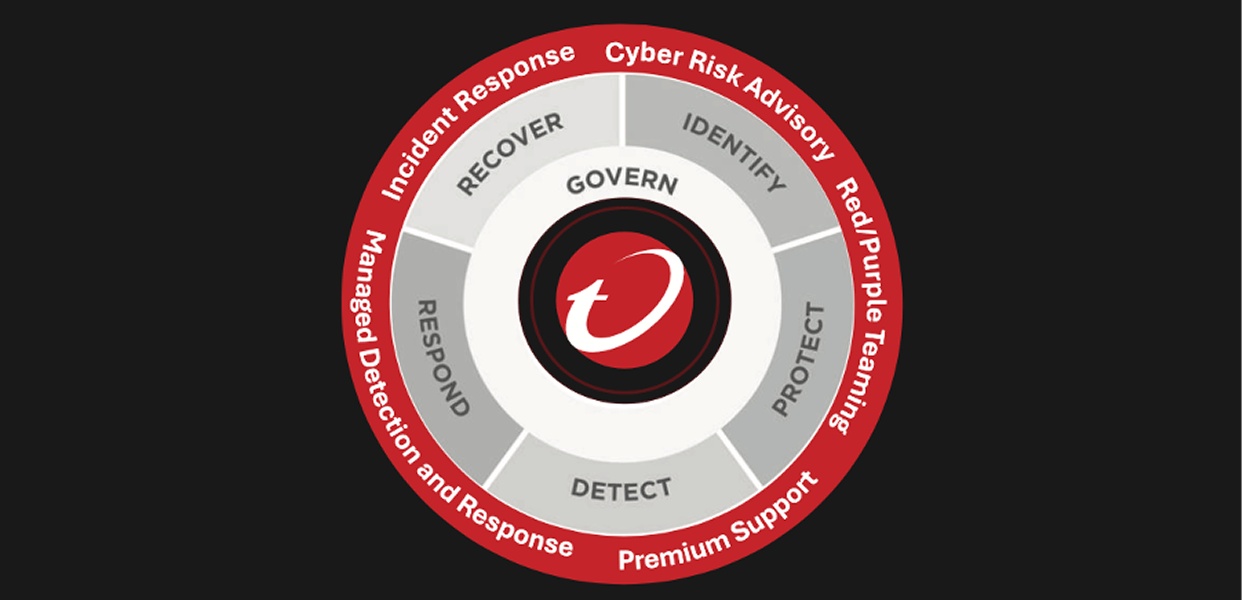

The Trend difference

Most security services stop at penetration testing. Trend goes further, combining decades of threat expertise, frontline intelligence, and real attacker techniques. From red teaming and adversarial AI and LLM testing to full purple team engagements, Trend uncovers gaps across people, processes, and technology to deliver actionable insights that strengthen, validate, and operationalize your security posture.

Voice of the customer

“The technical expertise and professionalism demonstrated by the Trend Red Team have been invaluable to our organization. Their meticulous approach to simulating real-world cyberattacks has provided us with actionable insights to improve our security resilience.”

Mikkel Madsen, IT Security Operations Manager, Copenhagen Airport


*Source: Forrester Research, “How to Get the Most Out of Red Teaming,” May 3, 2024.

Ready to transform your cybersecurity strategy?

Let’s connect.
