By David Fiser and Alfredo Oliveira

At Security Fest 2019 and the 2020 CARO Workshop, we discussed at length the problem of using out-of-the-box solutions, especially containers deployed as-is within an environment. While this speeds up development, it is not an inherently secure approach: in many cases, security features are not enabled by default, or the container was not built with security in mind. This holds true even in cloud environments, as we have seen with some Azure App Services (AAS) containers running their default configuration.

However, many cloud service providers (CSPs) offer the option of designing a custom container image. In the following sections, we revisit the basic principles for designing a safe container image, as well as threat scenarios involving actors we have already seen in the wild.

Designing safe container images

We have previously written about why running a privileged container is a bad idea. Cloud services such as Azure App Services do not run the whole container as privileged, but some processes inside it still run with elevated local privileges. The important lesson, then, is that a running web application should not have more privileges than the service actually needs. For instance, it is worth asking whether the container running the web application needs root permissions with the CAP_NET_RAW or CAP_SYS_PTRACE capabilities, which would allow an attacker who compromises it to capture network traffic and debug processes. In most instances, applications don’t need those permissions to run as intended.
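One way to check whether a process inside a container actually holds these capabilities is to decode the effective capability mask (`CapEff`) that Linux exposes in `/proc/self/status`. The following is a minimal Python sketch, assuming a Linux container; the function names are illustrative, and the bit numbers (13 for CAP_NET_RAW, 19 for CAP_SYS_PTRACE) come from the kernel's capability definitions.

```python
from __future__ import annotations

from pathlib import Path

# Capability bit numbers from the Linux kernel's <linux/capability.h>.
RISKY_CAPABILITIES = {
    13: "CAP_NET_RAW",     # raw sockets: sniffing and crafting packets
    19: "CAP_SYS_PTRACE",  # ptrace(): attaching to and debugging processes
}


def risky_caps_from_mask(cap_eff_hex: str) -> set[str]:
    """Return the names of risky capabilities present in a CapEff hex mask."""
    mask = int(cap_eff_hex, 16)
    return {name for bit, name in RISKY_CAPABILITIES.items() if (mask >> bit) & 1}


def risky_caps_of_current_process(status_path: str = "/proc/self/status") -> set[str]:
    """Decode the effective capability set of the current process (Linux only)."""
    for line in Path(status_path).read_text().splitlines():
        if line.startswith("CapEff:"):
            return risky_caps_from_mask(line.split()[1])
    raise RuntimeError(f"no CapEff line in {status_path}")
```

Run inside the container, `risky_caps_of_current_process()` returns the flagged names; an empty set means neither capability is in the effective set of that process.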

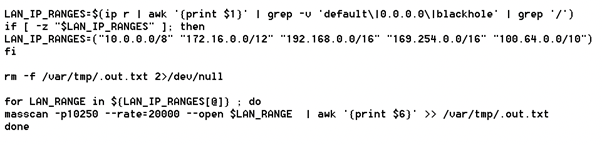

In the event of a compromise, these capabilities give threat actors additional attack surface. We have seen, for example, threat actors such as TeamTNT probing environments, including local networks, at the moment of infection to maximize the number of devices they infect.

Figure 1. An example of a local area network (LAN) being probed upon infection.

Another security risk we have repeatedly discussed is improper secrets management, which includes simple errors such as hard-coding plaintext credentials in configuration files or storing them in plaintext environment variables. We have shown that these mistakes can lead to full-service compromise.

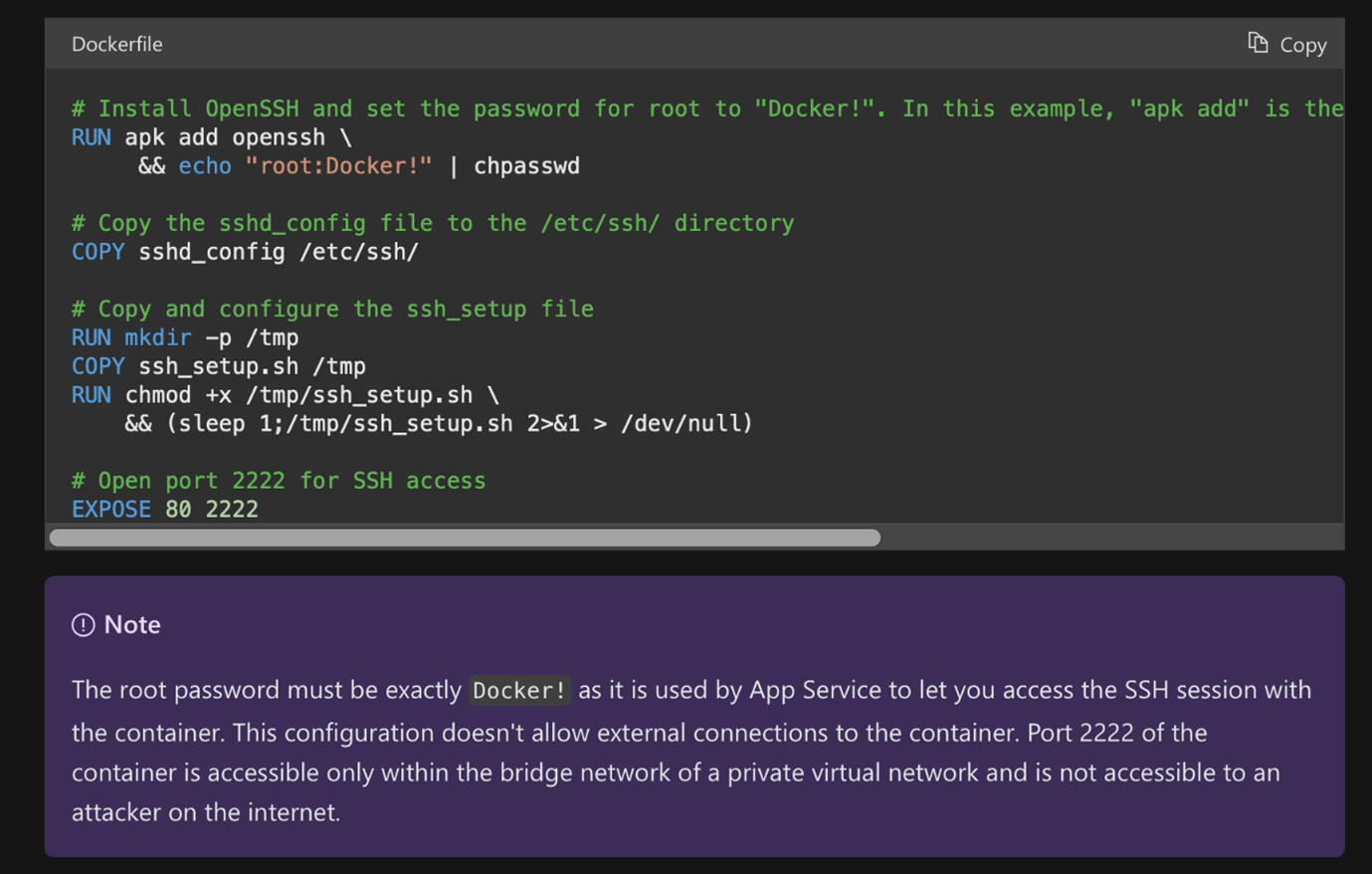

In container image design, the equivalent error is hard-coding root credentials, and even making them the master password for all service containers, just to be able to connect using Secure Shell (SSH).

Figure 2. An example of a service design gone wrong (Image source: Microsoft)

This approach is a security misstep because it makes privilege escalation easy, and it leads us to question whether the developers understood the concept of containers to begin with, since much safer options exist. For example, generating a private/public keypair when the image is built and logging in with public-key authentication instead of a master password would provide a more secure environment when remote SSH access to the container is needed.
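Key-only access also depends on the SSH daemon refusing passwords; the keypair itself would typically be generated with a tool such as `ssh-keygen`. Below is a minimal Python sketch, assuming OpenSSH's `sshd_config` format, that flags settings which would still let a master password be used. The function name and messages are illustrative, and `Match` blocks are deliberately not evaluated.

```python
from __future__ import annotations

import re

# sshd_config accepts "Keyword value" as well as "Keyword=value".
_SPLIT = re.compile(r"\s*=\s*|\s+")


def audit_sshd_config(text: str) -> list[str]:
    """Flag settings in an sshd_config that still allow password logins.

    sshd keeps the first value it reads for each keyword, and keywords are
    case-insensitive. Match blocks are not evaluated: parsing stops at the
    first Match line, so only global settings are checked.
    """
    settings: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = _SPLIT.split(line, maxsplit=1)
        keyword = parts[0].lower()
        if keyword == "match":
            break
        value = parts[1].strip().strip('"').lower() if len(parts) > 1 else ""
        settings.setdefault(keyword, value)  # first occurrence wins

    issues = []
    # When PasswordAuthentication is absent, OpenSSH defaults it to "yes".
    if settings.get("passwordauthentication", "yes") != "no":
        issues.append("PasswordAuthentication is not disabled")
    if settings.get("permitrootlogin") == "yes":
        issues.append("PermitRootLogin yes allows root to log in with a password")
    if settings.get("pubkeyauthentication", "yes") != "yes":
        issues.append("PubkeyAuthentication is disabled")
    return issues
```

An empty list means the global settings only allow key-based logins; any finding points to a configuration where a shared password could still work.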

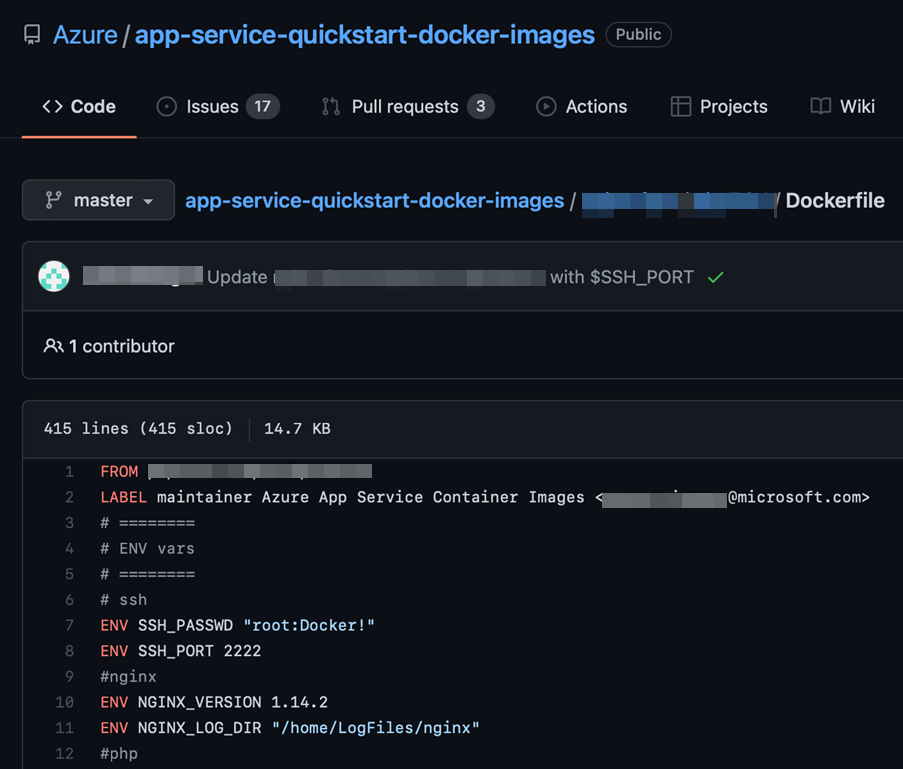

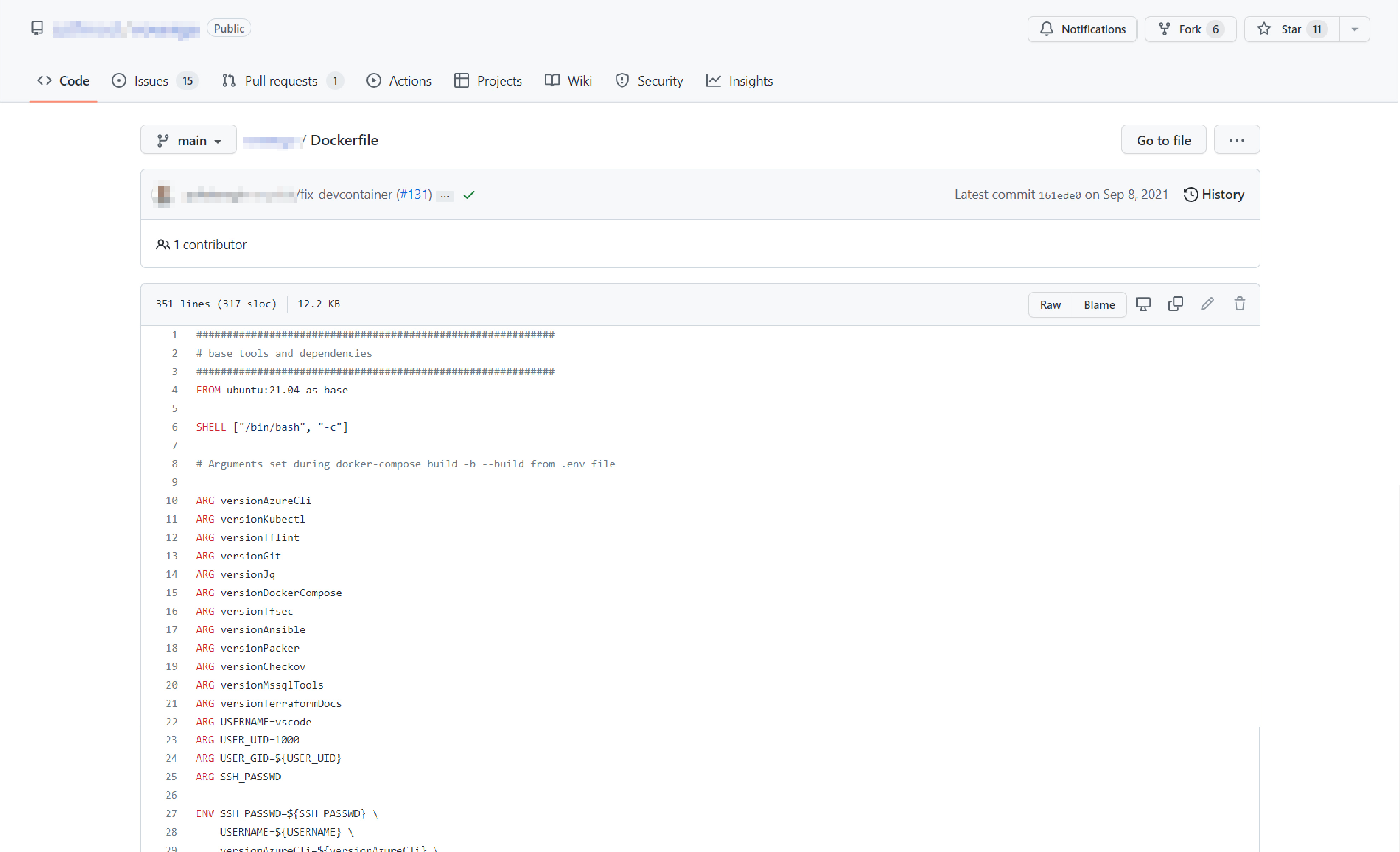

Figure 3. Wrong principle followed in many App Services-based projects

In Figure 3, the password is hard-coded in plaintext as an environment variable to be used later. Most services allow customers to manage variables through the console in a separate context, so credentials do not need to be hard-coded. Variables with sensitive content can then be accessed only through the administrator’s web console and are imported into the running container, where they can be read and used.
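In application code, this means reading a secret from the environment at runtime and failing loudly when it is missing, instead of falling back to a hard-coded default. A minimal Python sketch, assuming the platform exposes console-managed variables to the container as environment variables, as described above; `require_secret`, `MissingSecretError`, and the `DB_PASSWORD` name are hypothetical.

```python
from __future__ import annotations

import os
from collections.abc import Mapping


class MissingSecretError(RuntimeError):
    """Raised when a required secret was not injected into the container."""


def require_secret(name: str, environ: Mapping[str, str] | None = None) -> str:
    """Return a secret injected at runtime, failing loudly if it is absent.

    No default value is ever provided in code, so nothing sensitive ends up
    in the image or the repository. The environ parameter exists for testing.
    """
    env = os.environ if environ is None else environ
    value = env.get(name)
    if not value:
        # Report only the variable name, never a value.
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value
```

At start-up, the application would call something like `db_password = require_secret("DB_PASSWORD")`. Because there is no fallback, a missing setting stops the app instead of silently using a known password.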

In public repositories, it is not rare to find this technique used to keep secrets out of the code.

Figure 4. Project hiding sensitive content with console variable declaration

However, given the way Azure App Services is designed, the main problem is the single, hard-coded master password "Docker!". The root password must be set to this value or the app will not work as desired (hence the note in Figure 2), which makes this not only a problem of leaked secrets but also a genuine weakness in the app's design.

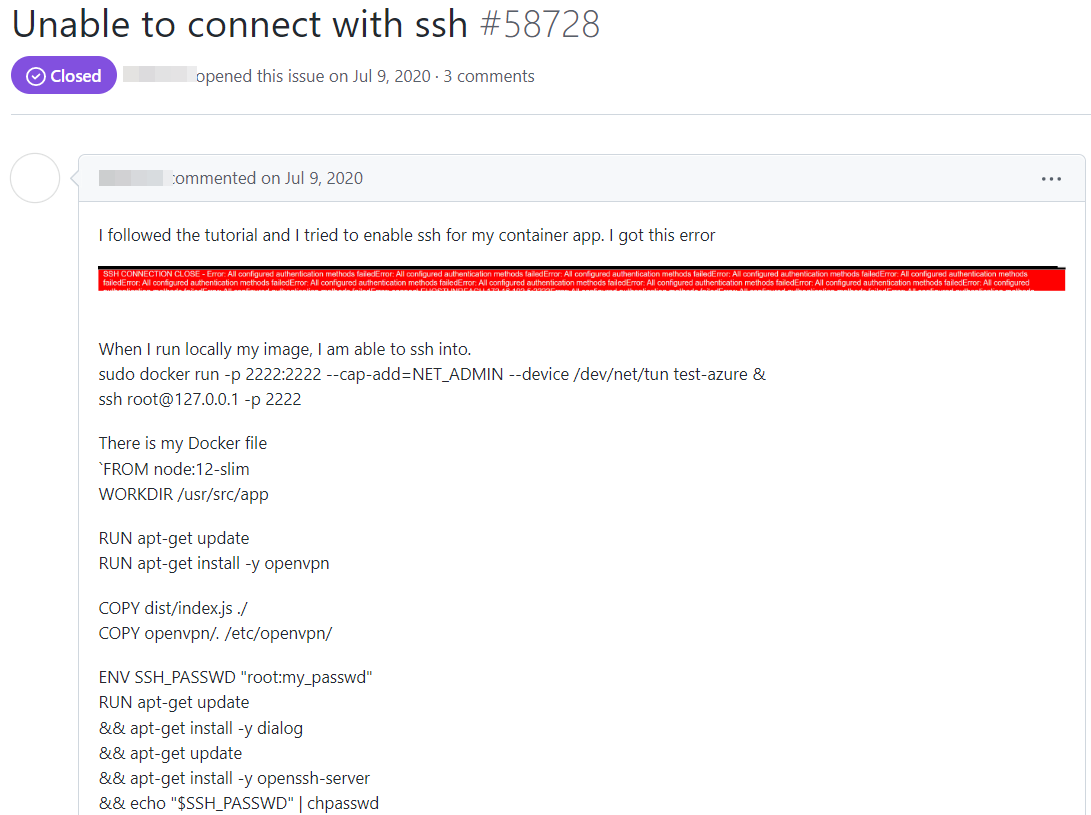

Figure 5. Issue opened by an AAS user describing the malfunction of the app when the password is not default
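Catching credentials baked into an image can be partly automated before the image ships. Below is a minimal Python sketch of a heuristic Dockerfile check that flags `chpasswd` calls and `ENV`/`ARG` instructions assigning literal values to secret-looking names. The name pattern and function names are assumptions, lines are checked one at a time (backslash continuations are not joined), and a real scanner would need more rules.

```python
from __future__ import annotations

import re
import shlex

# Heuristic (an assumption, not a complete rule set): names that suggest secrets.
SECRET_NAME = re.compile(r"PASSW(?:OR)?D|SECRET|TOKEN|API_?KEY", re.IGNORECASE)


def _assignments(args: str) -> list[tuple[str, str]]:
    """Parse the arguments of ENV/ARG into (key, value) pairs."""
    try:
        tokens = shlex.split(args)
    except ValueError:  # unbalanced quotes: fall back to plain splitting
        tokens = args.split()
    if not tokens:
        return []
    if "=" not in tokens[0]:
        # Legacy "ENV KEY value" form, or a bare "ARG NAME" with no default.
        return [(tokens[0], " ".join(tokens[1:]))]
    pairs = []
    for tok in tokens:
        key, _, value = tok.partition("=")
        pairs.append((key, value))
    return pairs


def find_hardcoded_credentials(dockerfile: str) -> list[tuple[int, str]]:
    """Return (line number, reason) for lines that bake credentials into the image."""
    findings = []
    for lineno, raw in enumerate(dockerfile.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        instruction = parts[0].upper()
        args = parts[1] if len(parts) > 1 else ""
        if "chpasswd" in line:
            findings.append((lineno, "password set at build time via chpasswd"))
        elif instruction in ("ENV", "ARG"):
            for key, value in _assignments(args):
                if value and SECRET_NAME.search(key):
                    findings.append((lineno, f"{instruction} {key} has a literal value"))
    return findings
```

A bare `ARG API_KEY` without a default is not flagged, since no value is written into the Dockerfile itself.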

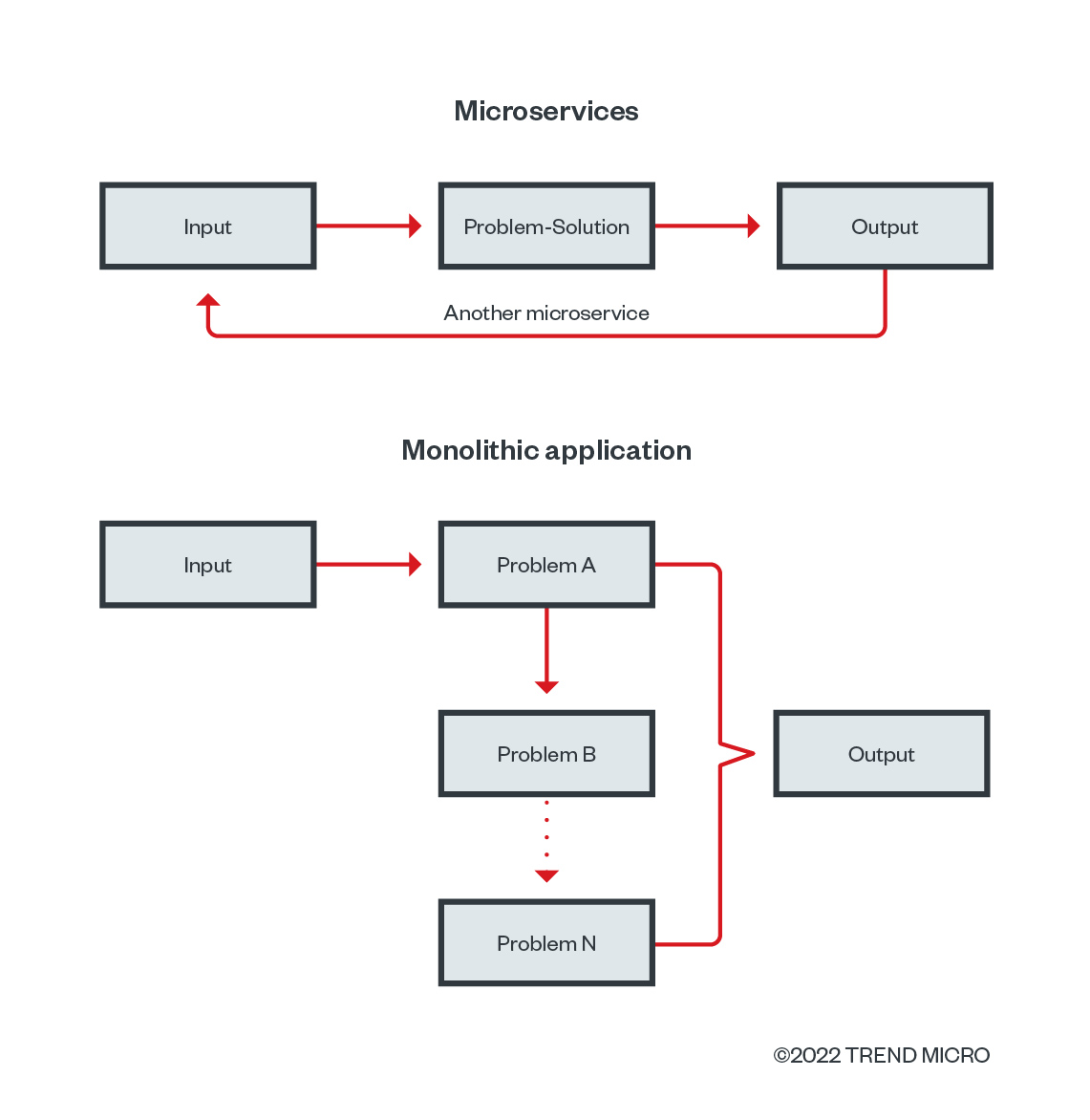

One principle of microservice and container image design is that each container or microservice should solve one problem at a time. This means the container does not need a full Linux distribution or extra software installed. For example, a running web application most likely needs neither the GNU Compiler Collection (GCC) nor network tools.

Figure 6. A diagram following one of the microservice and container image design principles — solving one problem at a time
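To check an image against this principle, one could list which build or network tools are still reachable on the `PATH` inside the running container. A minimal Python sketch; the tool list is an illustrative assumption to adapt per application, and `which` is injectable so the check can be tested without a container.

```python
from __future__ import annotations

import shutil
from typing import Callable, Iterable, Optional

# Illustrative list: compilers and network tools a web app rarely needs at runtime.
UNNEEDED_TOOLS = ("gcc", "cc", "nc", "nmap", "tcpdump", "curl", "wget")


def find_unneeded_tools(
    tools: Iterable[str] = UNNEEDED_TOOLS,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> dict[str, str]:
    """Map each tool that is present on PATH to the location where it was found."""
    found = {}
    for tool in tools:
        path = which(tool)
        if path is not None:
            found[tool] = path
    return found
```

Every entry in the result is a candidate for removal from the image, or for moving into a separate build stage.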

At this point, it is also worth asking whether SSH is really necessary within a web application's container. And if SSH access is necessary, why not generate a unique keypair for accessing those containers instead of using a single master password?

In the rest of this article, we discuss how to design a secure custom container within Azure App Services for running a Python-based application.

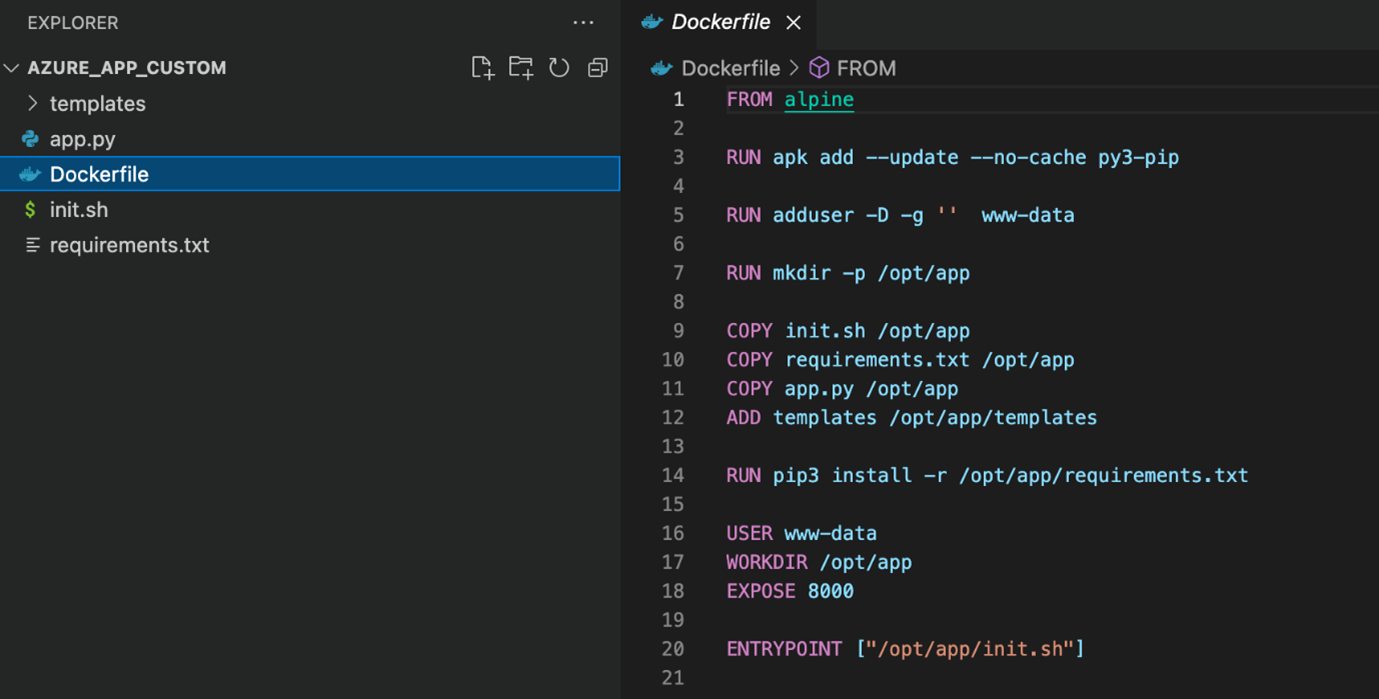

Since Azure App Services runs Docker images, we have to write a Dockerfile specifying our image. To follow the principle of least privilege and the microservices approach, we base our image on the Alpine Linux image, which is known for being minimal.

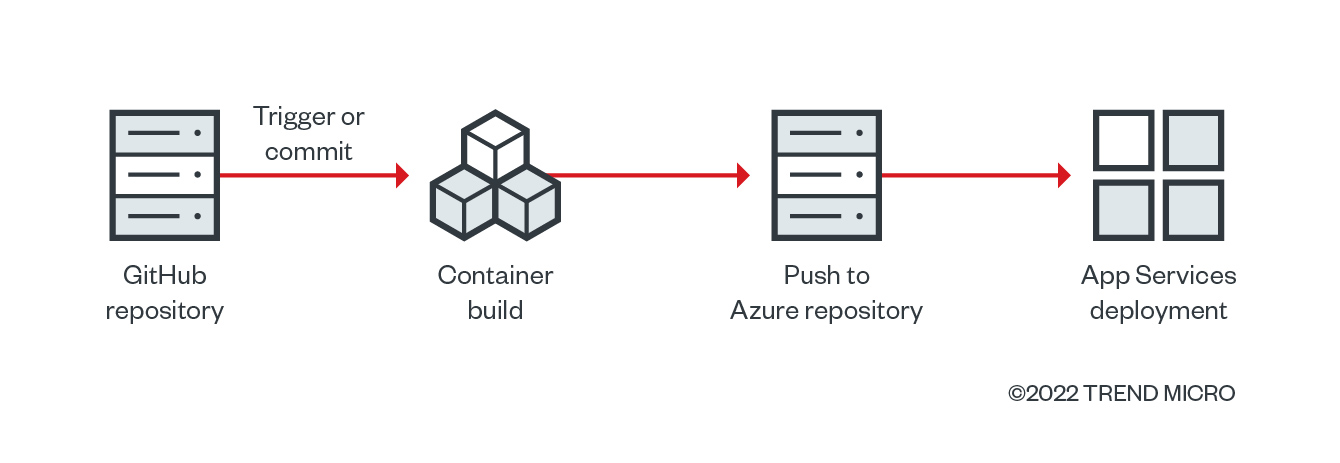

To achieve continuous deployment (CD), we use the following scheme:

Figure 7. Achieving continuous deployment with a secure custom container in Azure App Services

Starting from the structure of Python Flask: Hello World, a preloaded sample project, we created a non-privileged user and made it the default user of the image. We focused specifically on properly setting up the user that runs the application within the container image specification, following the best practices identified in Figure 7 and running neither the container nor the application user as root.

Figure 8. An example of image specifications with limited permissions
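Because the image specification in Figure 8 is easy to regress later, a CI step could check which user the final build stage of a Dockerfile runs as. Below is a minimal Python sketch. It assumes the base image of the last stage does not set its own `USER`, so each stage starts as root, and it does not expand build arguments; the function names are illustrative.

```python
from __future__ import annotations


def effective_user(dockerfile: str) -> str:
    """Return the USER in effect at the end of the last build stage.

    Assumes the stage's base image does not set its own USER, so a stage
    starts as root; build arguments such as $APP_USER are not expanded.
    """
    user = "root"
    for raw in dockerfile.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        instruction = parts[0].upper()
        if instruction == "FROM":
            user = "root"  # a new stage starts over from its base image
        elif instruction == "USER" and len(parts) > 1:
            user = parts[1].strip()
    return user


def runs_as_root(dockerfile: str) -> bool:
    """True if the final image would run as root, by name or by UID 0."""
    name = effective_user(dockerfile).split(":", 1)[0]
    return name in ("root", "0")
```

A pipeline could fail the build whenever `runs_as_root(...)` returns `True` for the project's Dockerfile.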

Afterward, we defined a GitHub Actions (GHA) workflow that builds the Docker image and deploys it to the cloud environment. Of course, the image can also be built on a developer machine. Either way, the principle is to build a more secure custom image, deploy it to the repository linked with App Services, and then either restart the service to apply the changes or configure continuous integration and continuous deployment (CI/CD).

Figure 9. Running the application under the low-privileged user “www-data”
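The Dockerfile `USER` instruction is the primary control, but as defense in depth an application can also refuse to start if it finds itself running as root, which surfaces a misconfigured deployment early. A minimal Python sketch; the function name and message are illustrative, and this check is not part of the setup shown in Figure 9.

```python
from __future__ import annotations

import os


def ensure_not_root(euid: int | None = None) -> None:
    """Abort start-up if the effective user is root (UID 0).

    euid can be passed explicitly for testing; by default it is read with
    os.geteuid(), which exists only on POSIX systems such as Linux.
    """
    if euid is None:
        euid = os.geteuid()
    if euid == 0:
        raise SystemExit("refusing to start: the application is running as root")
```

In a Flask entry point, `ensure_not_root()` would be called before `app.run(...)`, where `app` stands for the application object from the Hello World sample.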

Conclusion and best practices

The default configurations of cloud services are not necessarily the best options from a security perspective, as these services usually prioritize usability and speed. Even though the attack surface is smaller than with traditional architectures, there are still security implications that need to be addressed according to each organization's risk appetite. The concepts of hardening an operating system (OS) can and should also be brought to the container and serverless world.

Handling and managing secrets and other sensitive data should be a concern for both service providers and their users. Neglecting this can easily lead to a breach or compromise, as documented in well-known, massive security breaches caused by containers with default passwords. Using other out-of-the-box solutions as additional layers of protection can also help secure the environment. Here are some additional best practices for keeping your custom containers secure:

- Adopt the “assume breach” paradigm, which accepts that vulnerabilities exist. Given how prevalent web vulnerabilities are in today's attacks, designing for compromise minimizes the impact when an exploit is abused.

- Follow the principle of least privilege by using a non-privileged user for your container and applications. Also, consider using safer mechanisms for generating and managing secrets such as passwords and API keys.

- Audit and secure every out-of-the-box solution you employ by performing third-party reviews and following the vendor's security best practices.
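For the second practice, Python's standard `secrets` module provides a cryptographically secure random generator suited to passwords and API keys. A minimal sketch; the function names, default lengths, and the 12-character minimum are illustrative choices rather than requirements.

```python
import secrets
import string

# Letters and digits only, to avoid quoting problems in shells and config files.
ALPHABET = string.ascii_letters + string.digits


def generate_password(length: int = 24) -> str:
    """Generate a random password using a CSPRNG (the secrets module)."""
    if length < 12:
        raise ValueError("length should be at least 12")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def generate_api_key(nbytes: int = 32) -> str:
    """Generate a URL-safe API key from nbytes of random data."""
    return secrets.token_urlsafe(nbytes)
```

Values generated this way would then be stored through the platform's console or secret store and injected at runtime, never written into the image or the repository.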

Although these services are designed to be practical and disruptive in terms of technology and infrastructure, all the traditional elements of computing resources are still present: libraries, operating systems, tools, applications, and a kernel. Even if access to these components is different and, most of the time, unexpected, “old” security measures should not be discarded when using these resources. With a bit of adaptation, most traditional security best practices still work, and thus should be checked and implemented.
