By David Fiser

According to the description, the Azure App Service is used to “quickly and easily create enterprise-ready web and mobile apps for any platform or device, and deploy them on a scalable and reliable cloud infrastructure.” In other words, it provides a ready-to-use infrastructure for applications.

From a technical perspective, the application runs inside a Docker container. The container image contains a language interpreter for the chosen runtime stack. Developers can bind the App Service to their code repository (e.g., GitHub) and build a continuous delivery and continuous integration (CD/CI) pipeline to deploy the code into App Service.

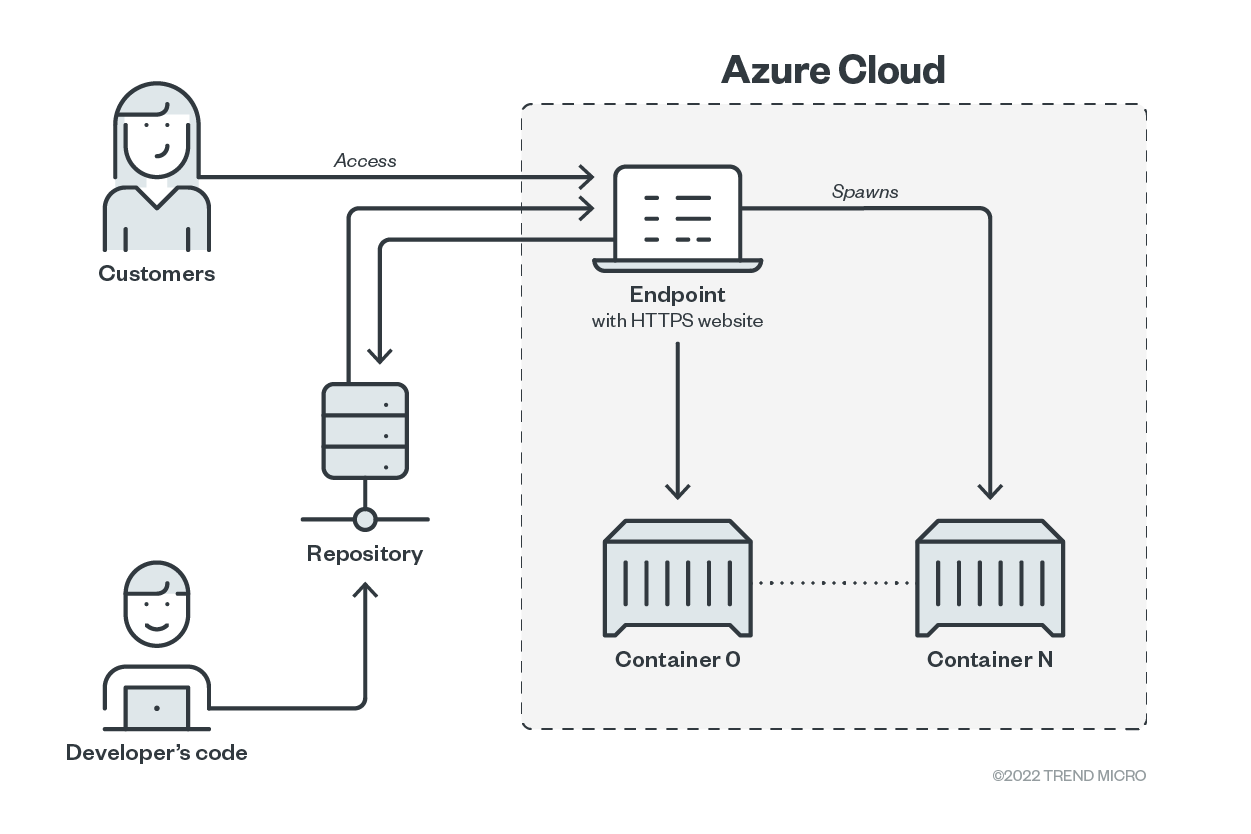

Figure 1. Azure App Services with CD/CI integration

Once a commit is pushed to the GitHub repository, a GitHub Actions (GHA) task is executed, effectively building a Docker image for the linked Azure App Services account. When customers access the service's HTTP endpoint, a container is spawned to serve the request. From a security perspective, no access token is saved within the build container. Instead, the access token for Azure App Services is stored within GHA and is thus limited to its security boundary.

Security and environmental analysis

Upon request, the developer-provided code is executed within the container. Assuming the code may contain a vulnerability, we analyzed the options available to an attacker who exploits the vulnerable user-provided code. This means examining the boundaries of the environment (in this case, a container), its permissions, its configuration, and their consequences.

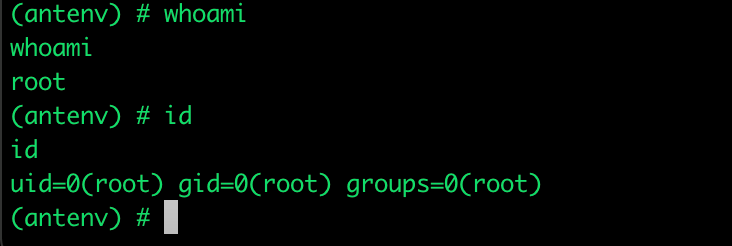

This includes the user's permissions when the application is executed and the capabilities available within the container. For example, in the Python and Node.js container images, the executed code runs with root permissions. If attackers find and exploit a vulnerability, for instance to spawn a shell, they obtain the same permissions as the current user. This contradicts the security principle of least privilege and effectively widens an attacker's options in case of compromise.

Figure 2. User permissions within the App Services environment (a container running Python)
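To check which user the application code actually runs as, the process can report its own effective UID. The following is a minimal Python sketch, assuming a Linux container (`os.geteuid()` and the `pwd` module are Unix-only); the function name `current_identity` is a hypothetical choice of ours.

```python
import os
import pwd  # Unix-only module


def current_identity() -> dict:
    """Report the effective user of the current process (Unix only)."""
    uid = os.geteuid()
    try:
        user = pwd.getpwuid(uid).pw_name
    except KeyError:
        # Containers may run under a UID that has no /etc/passwd entry.
        user = None
    return {"uid": uid, "user": user, "is_root": uid == 0}


if __name__ == "__main__":
    identity = current_identity()
    if identity["is_root"]:
        print("warning: application code is running as root")
    print(identity)
```

In the Python and Node.js images described above, this would be expected to report `is_root` as true.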

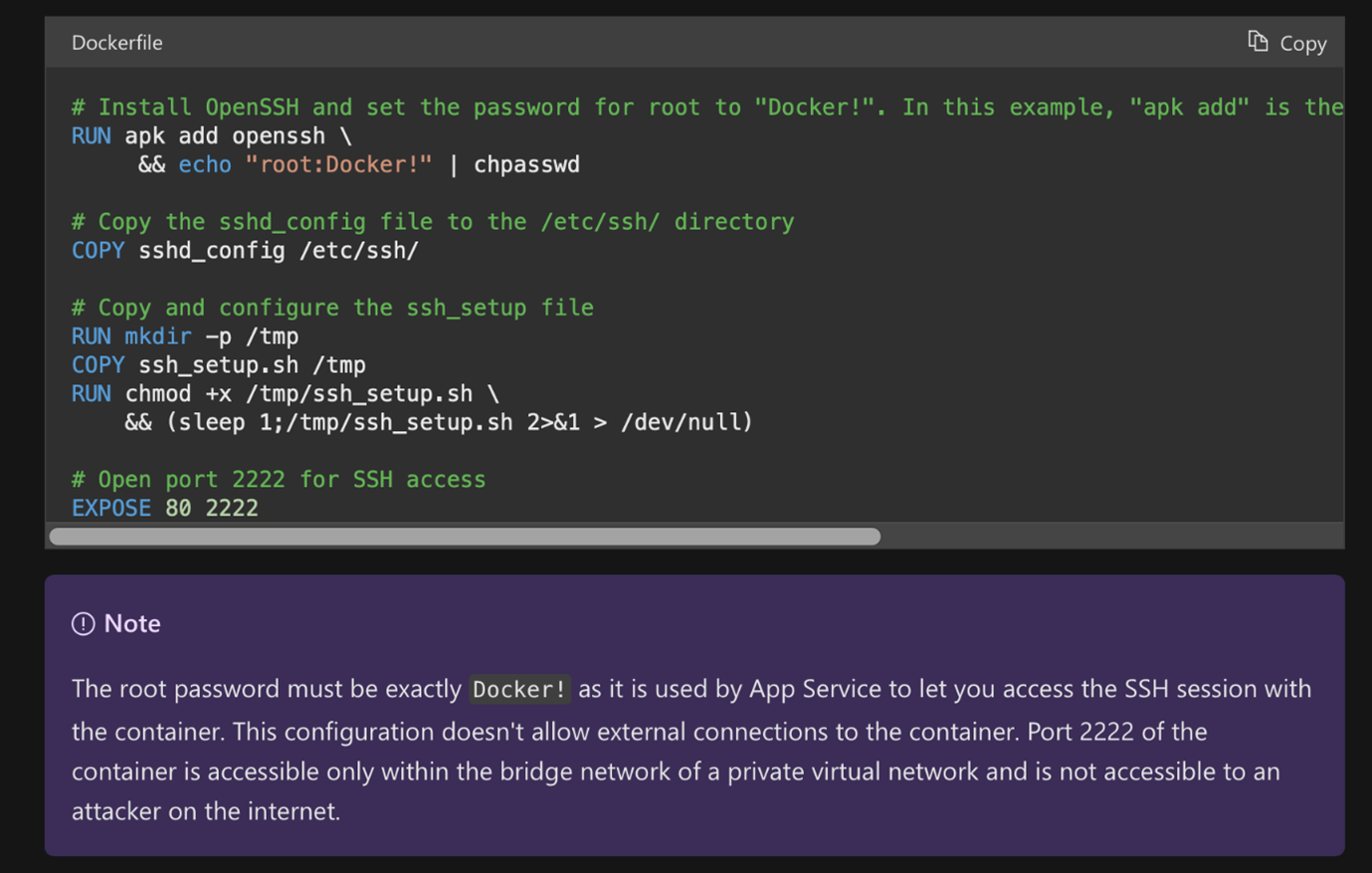

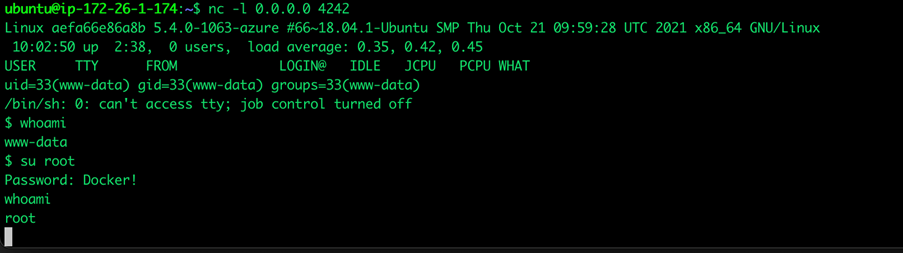

Unfortunately, even when an application runs under a low-privileged user, such as www-data in the case of the PHP container image, one can easily escalate privileges to the root user within the running container. This is due to the non-security-oriented container design used for App Services. By following the documentation, one can escalate privileges because the root password is publicly exposed. The same password is used for every instance the customer deploys from the default repository, and the documentation also proposes using the same password for custom-made containers.

Figure 3. A screenshot of a container customization tutorial from Azure

Figure 4. An example of privilege escalation of a running container within App Services

Our analysis showed that the root user still does not have all capabilities on the host. It is confined by container isolation, meaning the container is not running in privileged mode. The capabilities available within the container are:

- CAP_CHOWN

- CAP_DAC_OVERRIDE

- CAP_FOWNER

- CAP_FSETID

- CAP_KILL

- CAP_SETGID

- CAP_SETUID

- CAP_SETPCAP

- CAP_NET_BIND_SERVICE

- CAP_NET_RAW

- CAP_SYS_CHROOT

- CAP_SYS_PTRACE

- CAP_MKNOD

- CAP_AUDIT_WRITE

- CAP_SETFCAP
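A list like the one above corresponds to the effective capability set, which the Linux kernel reports as a hexadecimal bitmask in the `CapEff` field of `/proc/self/status`. The following minimal Python sketch decodes that mask into names; the bit numbers follow the Linux `<linux/capability.h>` header, and the helper names are hypothetical.

```python
from typing import List

# Capability names indexed by bit number, as defined in <linux/capability.h>.
CAP_NAMES = [
    "CAP_CHOWN", "CAP_DAC_OVERRIDE", "CAP_DAC_READ_SEARCH", "CAP_FOWNER",
    "CAP_FSETID", "CAP_KILL", "CAP_SETGID", "CAP_SETUID", "CAP_SETPCAP",
    "CAP_LINUX_IMMUTABLE", "CAP_NET_BIND_SERVICE", "CAP_NET_BROADCAST",
    "CAP_NET_ADMIN", "CAP_NET_RAW", "CAP_IPC_LOCK", "CAP_IPC_OWNER",
    "CAP_SYS_MODULE", "CAP_SYS_RAWIO", "CAP_SYS_CHROOT", "CAP_SYS_PTRACE",
    "CAP_SYS_PACCT", "CAP_SYS_ADMIN", "CAP_SYS_BOOT", "CAP_SYS_NICE",
    "CAP_SYS_RESOURCE", "CAP_SYS_TIME", "CAP_SYS_TTY_CONFIG", "CAP_MKNOD",
    "CAP_LEASE", "CAP_AUDIT_WRITE", "CAP_AUDIT_CONTROL", "CAP_SETFCAP",
    "CAP_MAC_OVERRIDE", "CAP_MAC_ADMIN", "CAP_SYSLOG", "CAP_WAKE_ALARM",
    "CAP_BLOCK_SUSPEND", "CAP_AUDIT_READ", "CAP_PERFMON", "CAP_BPF",
    "CAP_CHECKPOINT_RESTORE",
]


def parse_capeff(status_text: str) -> int:
    """Extract the CapEff bitmask from the text of /proc/<pid>/status."""
    for line in status_text.splitlines():
        if line.startswith("CapEff:"):
            return int(line.split()[1], 16)
    raise ValueError("no CapEff line found")


def decode_caps(mask: int) -> List[str]:
    """Return the names of all capabilities whose bits are set in mask."""
    return [name for bit, name in enumerate(CAP_NAMES) if mask & (1 << bit)]


if __name__ == "__main__":
    with open("/proc/self/status") as f:
        print(decode_caps(parse_capeff(f.read())))
```

By our arithmetic, the 15 capabilities listed above correspond to the mask `0xa80c25fb`, which matches Docker's default capability set with CAP_SYS_PTRACE added.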

Reviewing the list, we found one capability that is both dangerous and unnecessary: CAP_NET_RAW. It allows an attacker to create raw sockets and craft low-level packets, which can put additional stress on the infrastructure. The level of compromise depends on the services available in the cloud network's design, but possible infiltration scenarios include service discovery, DNS attacks, attacks on the kernel's network stack that exploit previously documented vulnerabilities with assigned CVEs (e.g., CVE-2020-14386), or flooding devices accessible within the network with packets.
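Whether CAP_NET_RAW is actually usable can be checked from inside the container without sending any traffic, because on Linux creating a raw socket requires this capability. The following is a minimal Python sketch, assuming a Linux container; the helper name is hypothetical.

```python
import socket


def can_open_raw_socket() -> bool:
    """Return True if this process may create a raw ICMP socket.

    On Linux, creating a SOCK_RAW socket requires CAP_NET_RAW, so a False
    result suggests the capability has been dropped or is otherwise denied.
    """
    try:
        sock = socket.socket(socket.AF_INET, socket.SOCK_RAW, socket.IPPROTO_ICMP)
    except OSError:
        # PermissionError (EPERM) is the usual result without CAP_NET_RAW.
        return False
    sock.close()
    return True


if __name__ == "__main__":
    print("CAP_NET_RAW usable:", can_open_raw_socket())
```

In a container you control, a False result is the desired outcome after dropping the capability, for example with `docker run --cap-drop=NET_RAW` on a self-managed Docker host.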

The SMB3 volume mounted at the /home folder is also interesting. It gives the container stateful storage, so anything stored in it persists across container spawns. The SMB service is also known to be prone to vulnerabilities; if new exploits similar to EternalBlue appear, they could be abused to compromise the hosted storage service.
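Such a mount can be spotted from inside the container by reading `/proc/mounts`, where Linux lists SMB shares under the `cifs` filesystem type (newer kernels may also report `smb3`). The following is a minimal Python sketch; the function name and the share name in any sample data are hypothetical.

```python
from typing import List, Tuple


def find_smb_mounts(mounts_text: str) -> List[Tuple[str, str]]:
    """Return (source, mount_point) pairs for SMB/CIFS entries in /proc/mounts text."""
    found = []
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts columns: source, mount point, fs type, options, dump, pass.
        if len(fields) >= 3 and fields[2] in ("cifs", "smb3"):
            found.append((fields[0], fields[1]))
    return found


if __name__ == "__main__":
    try:
        with open("/proc/mounts") as f:
            print(find_smb_mounts(f.read()))
    except FileNotFoundError:
        print("/proc/mounts not available on this system")
```

Note that `/proc/mounts` octal-escapes spaces in paths (as `\040`); this sketch does not unescape them.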

App Service is intended to host web applications or services. An ordinary web application handles a request within milliseconds (up to about 0.5 seconds), serving it as quickly as possible to provide a good user experience. Our tests found that the HTTP request timeout is around 240 seconds and that additional processes spawned within the container can live for up to 10 minutes. While there are no “official” timeout settings available, developers can influence this within the code itself.

From the user's perspective, this can be beneficial when running complex tasks. However, it also makes the service more prone to attacks such as denial of service (DoS). While requests hang waiting for the timeout to trigger, the service must scale out to handle new ones, and if this is triggered repeatedly, the user will eventually hit the scaling limit. Users who need longer timeouts should ask whether this is the right service in the first place.
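Since the platform lets requests run for minutes, one way to reduce the DoS exposure described above is to enforce a much tighter deadline in the application code itself. The following is a minimal asyncio sketch; the 5-second budget, the function name, and the 504 response are our own hypothetical choices, not App Service settings.

```python
import asyncio
from typing import Awaitable, Callable, Tuple

# Hypothetical per-request budget, chosen well below the ~240 s platform timeout.
REQUEST_BUDGET_SECONDS = 5.0


async def run_with_deadline(
    work: Callable[[], Awaitable[str]], budget: float = REQUEST_BUDGET_SECONDS
) -> Tuple[int, str]:
    """Run one request's work; return (504, message) if it exceeds the budget."""
    try:
        return 200, await asyncio.wait_for(work(), timeout=budget)
    except asyncio.TimeoutError:
        # asyncio.wait_for cancels the pending work when the timeout expires.
        return 504, "request exceeded its time budget"


if __name__ == "__main__":
    print(asyncio.run(run_with_deadline(lambda: asyncio.sleep(0.1, result="done"))))
```

Because `asyncio.wait_for` cancels the awaited work on timeout, a slow request stops occupying the worker instead of running until the platform limit. Synchronous frameworks would need a different mechanism.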

We previously emphasized the need for proper secrets management within DevOps environments, as secrets play an important role in securing the environment. But how does Azure App Services fare in terms of secrets management?

In our analysis, we distinguished between user-defined secrets and platform-defined secrets. In the case of user-defined secrets, the responsibility lies fully with the user. Azure provides the Key Vault service for managing secrets.

However, a common practice in the DevOps community is to store secrets in environment variables within container environments. We strongly disagree with this approach: the secrets do not need to be present in the environment the entire time, and it creates an additional attack vector, as environment variables are copied into every child process by design. For example, a simple environment leak would expose the secrets.
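The inheritance behavior is easy to demonstrate. The following minimal Python sketch sets a placeholder variable (`DEMO_SECRET`, hypothetical) and shows that a child process receives it unless the parent explicitly passes a scrubbed environment; the helper names are also hypothetical.

```python
import os
import subprocess
import sys

# Child program: print the value of the variable named in argv[1], or "" if unset.
CHILD = "import os, sys; print(os.environ.get(sys.argv[1], ''))"


def child_sees(name: str, env=None) -> str:
    """Spawn a Python child and return what it sees for environment variable `name`."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD, name],
        env=env,  # None means the child inherits the parent's full environment
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def scrubbed_env(*names: str) -> dict:
    """Return a copy of the current environment without the given variables."""
    return {k: v for k, v in os.environ.items() if k not in names}


if __name__ == "__main__":
    os.environ["DEMO_SECRET"] = "not-a-real-secret"  # hypothetical placeholder
    print("inherited:", child_sees("DEMO_SECRET"))
    print("scrubbed:", child_sees("DEMO_SECRET", scrubbed_env("DEMO_SECRET")))
```

Scrubbing only protects the children you launch explicitly; the value is still readable by the process itself and by anything that can dump its environment, which is why fetching secrets on demand from a dedicated store is preferable.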

With that in mind, we don't recommend using application settings to store secrets within App Services, as they are exposed as environment variables at runtime. The same recommendation applies to connection strings, since the only difference is the variable prefix.

Figure 5. Application settings within the App Services

In addition, the application settings include environment variables that are part of the platform's system design. The user can't affect these, because they are provided by default for the service to run. From a security perspective, the user should focus on the following items:

- WEBSITE_AUTH_ENCRYPTION_KEY: The generated key is used as the encryption key by default. To override the automatically generated key, set this variable to the desired key. This is recommended if the user shares tokens or sessions across multiple apps.

- WEBSITE_AUTH_SIGNING_KEY: The generated key is used as the signing key by default. To override it, set this variable to the desired key. This is recommended if the user shares tokens or sessions across multiple apps.

According to the Azure documentation, these keys are used for encryption and signing. We are convinced they are not good candidates for environment variables.
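To see which secret-bearing values are actually present in a running instance, the environment can be audited by variable name. The following is a minimal Python sketch. The connection-string prefixes listed are our assumption of the ones App Service uses for its connection-string types and should be checked against current Azure documentation. The sketch reports only names, so the audit itself does not leak values.

```python
import os
from typing import Dict, List, Mapping

# Assumed prefixes App Service applies when exposing connection strings
# as environment variables (verify against current Azure documentation).
CONNSTR_PREFIXES = (
    "SQLCONNSTR_", "SQLAZURECONNSTR_", "MYSQLCONNSTR_",
    "POSTGRESQLCONNSTR_", "CUSTOMCONNSTR_",
)
# Platform-provided key variables discussed above.
SENSITIVE_NAMES = ("WEBSITE_AUTH_ENCRYPTION_KEY", "WEBSITE_AUTH_SIGNING_KEY")


def audit_environment(env: Mapping[str, str]) -> Dict[str, List[str]]:
    """Return the names (never the values) of likely secret-bearing variables."""
    return {
        "connection_strings": sorted(k for k in env if k.startswith(CONNSTR_PREFIXES)),
        "platform_keys": sorted(k for k in env if k in SENSITIVE_NAMES),
    }


if __name__ == "__main__":
    print(audit_environment(os.environ))
```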

Network analysis

Available network boundaries can provide another angle that an attacker could use for infiltration. We identified the following types:

- Incoming connections

- Outgoing connections

- Accessible devices within the local area network (LAN)

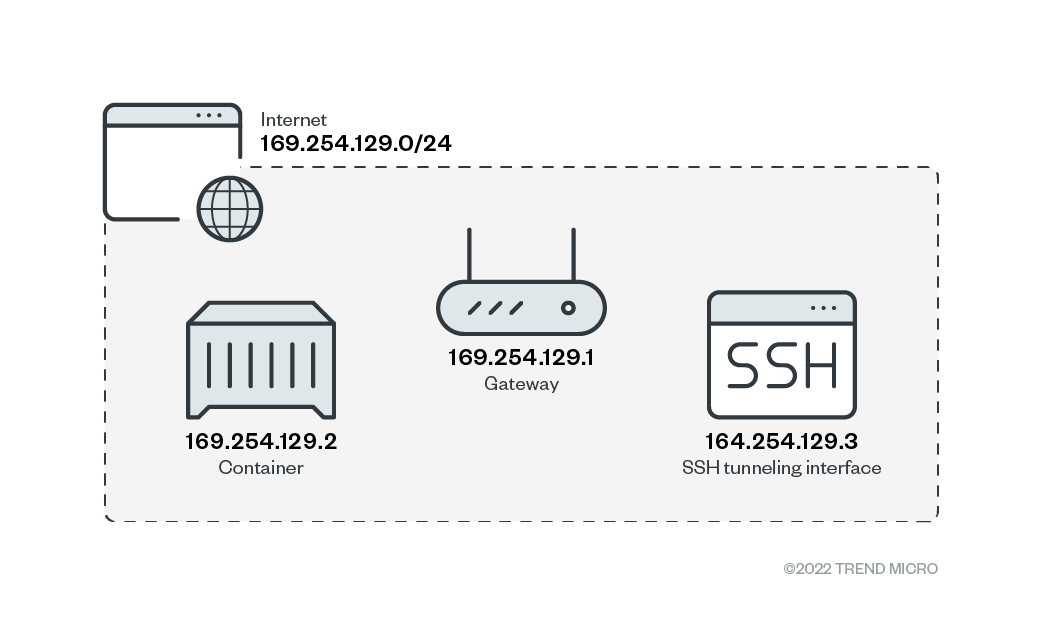

The actual boundaries depend on the user's cloud architecture, use cases, and scenarios. App Service maps the HTTP endpoint to the container port where the application listens for connections, and the container also exposes port 2222 for SSH connections. SSH connections to the container are initiated through the Web SSH gateway, which requires Azure authentication, so an unauthenticated user can't connect to the container.

By default, outbound connections are allowed without restriction, which lets an attacker spawn a reverse shell after successfully exploiting the user code.
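When the default egress policy is tightened, it is worth verifying the result from inside the container. The following is a minimal Python sketch, assuming a plain TCP connect is a sufficient probe; the function name and the example target are hypothetical.

```python
import socket


def can_reach(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if an outbound TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


if __name__ == "__main__":
    print("outbound 443 open:", can_reach("example.com", 443))
```

From an instance whose outbound traffic is meant to be restricted to an allowlist, `can_reach("example.com", 443)` should return `False`.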

By default, the network accessible from the container contains at least three IP addresses: the container's own network interface, the default gateway used for internet access, and the address from which Web SSH initiates incoming SSH connections.

Figure 6. Default network scheme
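The addresses in this default scheme can be confirmed from inside the container; for instance, Linux exposes the routing table, including the default gateway, in `/proc/net/route`. The following is a minimal Python sketch covering IPv4 only; the function name is hypothetical.

```python
import socket
import struct
from typing import Optional


def default_gateway(route_text: str) -> Optional[str]:
    """Return the IPv4 default gateway from the text of /proc/net/route."""
    for line in route_text.splitlines()[1:]:  # skip the header row
        fields = line.split()
        # Columns: Iface, Destination, Gateway, Flags, ...; destination 0 is the default route.
        if len(fields) >= 3 and fields[1] == "00000000":
            # The kernel prints the network-order address as a host-order integer,
            # so packing it in native byte order recovers the original bytes.
            return socket.inet_ntoa(struct.pack("=L", int(fields[2], 16)))
    return None


if __name__ == "__main__":
    try:
        with open("/proc/net/route") as f:
            print(default_gateway(f.read()))
    except FileNotFoundError:
        print("/proc/net/route not available on this system")
```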

Conclusions and recommendations

The default settings can be altered by modifying the network settings, such as Azure Virtual Networks (VNets) and hybrid connections, among others. As organizations have many variations and use cases depending on their needs, companies and developer teams should apply the principle of least privilege. From a network perspective, this means denying all traffic except what is necessary for the application to work, especially if your network contains multiple endpoints within one VNet.

Overall, what does this mean for App Services consumers? The answer is simple: the user is the biggest security risk, either by misconfiguring the cloud service and creating a wider attack surface or by writing vulnerable code. To mitigate these risks, we suggest the following best practices and recommendations:

- Exercise peer reviews of the code

- Execute continuous testing of the code

- Secure secrets and don't trust environment variables

- Follow the principle of least privilege

- Configure the services assuming a breach or attack scenario (to minimize the impact of a breach)

Is there anything Azure can do to address these issues? First, it could ensure that the default container images don't run web applications with root permissions. Second, it would be great if we could change the mindset within the DevOps community, which uses environment variables to store secrets at runtime. Even if Azure (or any cloud service provider, for that matter) does everything else securely, such as providing TLS and encryption, these small details can degrade security. Ultimately, a chain is only as strong as its weakest link.
